Original URL: https://www.theregister.com/2013/08/21/unsung_heroes_dr_chris_shelton/

UK micro pioneer Chris Shelton: The mind behind the Nascom 1

...and Clive Sinclair's PgC Intel-beating wonder chip

Posted in Personal Tech, 21st August 2013 09:03 GMT

Chris Shelton is not well known today, yet the British microcomputer industry would have been a very much poorer place without him.

He was never as famous as Sir Clive Sinclair, with whom he worked in the past; Acorn’s Chris Curry, Hermann Hauser, Steve Furber and Sophie Wilson; or even Tangerine and Oric’s Paul Johnson. Nonetheless, Shelton played a major role in the evolution of UK microcomputers.

He not only designed what is arguably Britain’s first home-grown home computer, the Nascom 1, but he also devised and built what may be the first truly modular, multi-user personal computer system, the Sig/Net. He was also the mind behind one of the most innovative microprocessors ever developed.

Dr Chris Shelton today

Shelton graduated from London’s Imperial College in the late 1960s with a degree in engineering. He stayed on at university, shifting his emphasis to medical engineering, then overseen by the college’s Medical Physics department, for which he was subsequently awarded his master's degree and, later, his PhD. With these qualifications under his belt, he was offered a full-time position in the department.

But Shelton had an entrepreneurial streak, and the idea of staying in academia no longer appealed to him as much as it once had. Designing new techniques and new technologies was all very well, but unless they were taken to the next stage and put into production, they were not going to help people. He realised he not only wanted to design things but make and sell them too.

So, in the early 1970s, he founded Shelton Instruments to do just that. Operating in the main as a design consultancy, SI’s early work was provided by Shelton’s academic connections. He created various devices with medical and science applications, many of them based on the new microprocessor technology. Back then, chip maker Mostek had its Europe headquarters just round the corner from Shelton’s office in London’s borough of Islington.

SI quickly became known for its work with microprocessors and microcontrollers, especially the Fairchild F8, which Mostek had licensed and integrated from a two-part chipset into a single CPU, the 3870. SI did work for word-processor hardware company Data Recall and many others.

And then, in July 1977, Nasco boss John Marshall came knocking on Chris Shelton’s door.

Booting the British micro industry

Marshall had built up Nasco - short for the North American Semiconductor Company, which, despite its name, was British - as a distributor of US-sourced semiconductors and other electronic components in the UK. He frequently travelled to the States to meet his American suppliers and, in the mid-1970s, saw at first hand the explosion of enthusiast interest in the microprocessor and the personal computing devices the new chip technology was making possible. He was struck by the huge number of user groups and clubs being founded across the nation. In particular, he saw how component suppliers were actively engaging with this new community in order to encourage its members to buy more kit.

He wondered how this business model might be established back home in the UK. Over here, however, there was almost nothing in the way of a computing enthusiast scene. There was no shortage of electronics and wireless buffs, and some were beginning to tinker with microprocessors, but as yet no group of hobbyists specifically keen on computer technology had really begun to form.

As one of Marshall’s colleagues, Kerr Borland (MD of Nasco-owned Nascom Microcomputers), would write in the first issue of Personal Computer World in 1978: “The electronic hobbyist in the UK has been left out in the cold. His spending power is far less than that of his American counterpart. The fall-out of products or peripherals from our highly specialised electronics industry is minimal.

“The American hobbyist scene has developed a long way... The clubs are not only well organised and well attended but have become forums where the small and medium, and even the large American electronics manufacturers feel the need to be represented... What do we have in the UK? We would suggest, virtually nothing.”

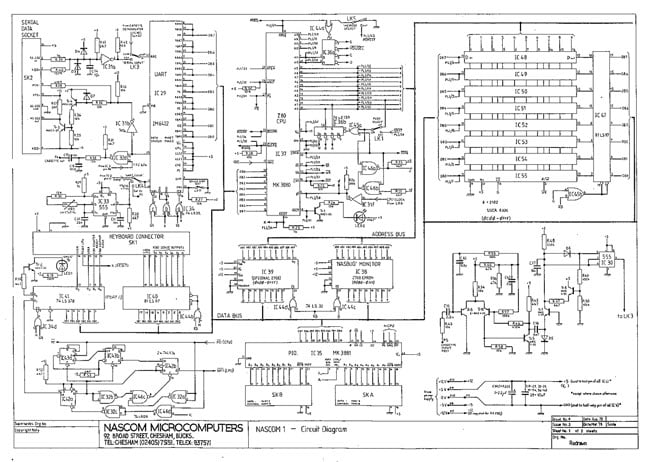

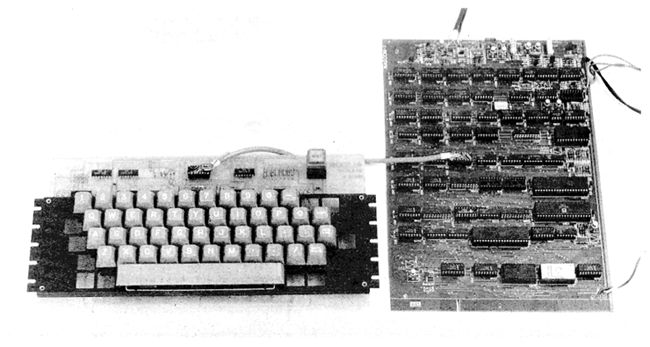

Home computing circa 1977: the Nascom 1

If there was no home computing scene in Britain, Marshall would have to create one. To that end, he decided to make and sell a small personal computer, priced at under £200 to make it accessible to as broad a range of buyers as possible. He knew Chris Shelton through his microprocessor distribution business, which worked with Mostek, as did Shelton. So Marshall approached Shelton to design the computer, which would debut in the autumn of 1977 as the Nascom 1.

The only restrictions imposed by Marshall upon Shelton: to make use of devices the user might already own, or could buy cheaply, for storage and display, specifically a cassette recorder and a TV. He was also told to make the computer capable of running the BASIC language and to meet that crucial £200 price point.

During the summer of 1977, Shelton set to work. He chose the Mostek MK3880, a licensed version of the Zilog Z80, as the Nascom’s processor, the result of a chance encounter with Bradford University post-grad student Paul Johnson, who went on to found Tangerine and, later, Oric. Johnson tipped Shelton off to a trick that allowed the Z80’s Non-Maskable Interrupt (NMI) facility to be used to synchronise the display signal and video memory refresh, saving him the cost of a separate chip. Why Mostek’s version rather than Zilog’s own? Mostek was just around the corner.

It has been claimed that the Z80 was selected because Nascom’s John Marshall had a commercially advantageous supply deal with Mostek. More than 35 years later, Shelton doesn’t recall whether that was the case, but he certainly admits that many of the Nascom’s other chips, including the Mostek MK3881 PIO, were chosen because they were the ones Nascom had in stock.

Designing the Nascom 1

The Nascom 1 design process was straightforward, Shelton says. The only trouble arose from Marshall’s choice of PCB layout contractor. The task of converting Shelton’s schematics into a circuit board layout was handled by an Irish firm selected because it could do the job using an early Computer Aided Design (CAD) system, which Marshall insisted upon. Unfortunately, the process was time consuming and costly. When the design came through and was found to be unnecessarily large, it was too expensive to change.

The result, says Shelton, is that the Nascom’s board was much larger than it needed to be. “It could have been a lot smaller,” he recalls.

Shelton’s design work was outlined in a series of articles published in 1977 and 1978 by Wireless World magazine, though today he doesn’t remember writing the articles that went out under his byline. The series had presumably been pitched by Marshall or Borland, having seen how successful a similar article in the January 1975 issue of Popular Electronics had been in pushing the MITS Altair 8800 kit computer to the forefront of the US micro enthusiast scene.

Marshall and Borland also devised a series of events called the Home Microcomputer Seminar, the first of which was held in the Wembley Conference Centre on 26 November, 1977. The prototype Nascom 1 now complete, Chris Shelton was on hand to give the new British micro its public demo.

The unveiling was an unequivocal success. The seminar attracted about 500 paying visitors - tickets cost £3.50; more if you wanted lunch included - and many of them immediately placed orders for the new machine. Kerr Borland claimed early in 1978 that some 400 had been ordered in the two weeks after the seminar and some 2,000 enquiries for more information had been received. Individuals placed orders, and “20 or so colleges have ordered one or more computers”, Borland wrote.

Shelton says John Marshall’s sales forecast predicted that roughly a thousand units would ship in the first year, “but sales were an order of magnitude greater than that”. Demand for the Nascom 1 was massive, and both Nasco and the subsidiary Marshall set up to make and sell the computer, Nascom, were entirely unprepared for it. With a clear success on his hands, Marshall raced to hire technical, sales and support staff - more, Shelton reckons, than was entirely necessary. The expansion certainly increased Nascom’s costs, making cash flow precarious and hindering the company’s ability to ramp up production to meet demand.

Britain’s most popular micro

The Nascom 1 was offered as both a self-assemble kit and as a fully built system that was slightly more expensive. The cost of bundling a high-quality Qwerty keyboard had already nudged the base price past £200 to £239.76 including eight per cent VAT; it only came in under £200 if you bought it without a power supply. The kit version - “a board and a load of chips in a bag” is how Shelton describes it - caused no end of tech support calls. Shelton says he got a look at the units that had been returned and almost none could be salvaged and re-used, such were their users’ poor soldering and assembly skills.

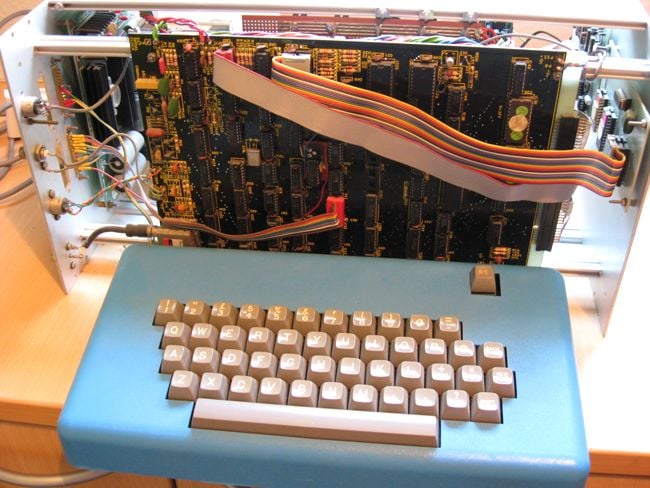

By the summer of 1978, Nascom staff were claiming the Nascom 1 had become the UK’s fastest selling microcomputer, with orders by then approaching £2m in total. That’s fewer than 10,000 units at just over £200 a pop, but still much more than the 300 to 400 Marshall had anticipated would be sold. Three quarters of the Nascom orders were coming in from the rest of Europe. As Your Computer noted a few years later, the Nascom 1 and Nascom 2 were “at the end of 1979, the most popular range of single-board computers” - more popular than Science of Cambridge’s MK-14, more popular than Acorn’s System 1 microcomputer. And £200 was considerably less than the prices commanded by the first few Apple IIs, Commodore Pets and Tandy TRS-80s, which were only now starting to appear in the UK.
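The “fewer than 10,000 units” figure is simple division. As a rough check, the Python sketch below assumes the whole £2m was spent on base Nascom 1 systems at a flat price, either a round £200 or the £239.76 VAT-inclusive price. Both are simplifications, since real orders would have mixed kits, built machines and extras; the function and variable names are illustrative only.

```python
# Rough sanity check of the unit estimate; the flat price points are assumptions.
ORDERS_TOTAL_GBP = 2_000_000  # "approaching £2m", per Nascom staff in 1978


def implied_units(total_gbp: float, unit_price_gbp: float) -> int:
    """Whole units that a given order value buys at a flat unit price."""
    return int(total_gbp // unit_price_gbp)


units_at_200 = implied_units(ORDERS_TOTAL_GBP, 200.00)   # ceiling, at a round £200
units_at_list = implied_units(ORDERS_TOTAL_GBP, 239.76)  # at the VAT-inclusive price
print(units_at_200, units_at_list)
```

On those assumptions the order book works out at somewhere between roughly 8,300 and 10,000 machines.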

Shelton’s association with the Nascom 1 now largely came to an end. He briefly travelled to Munich to help a former colleague of John Marshall launch the Nascom 1 in Germany, but that was it. Shelton Instruments had other projects on the go, and he returned to London to oversee them as he had before. Among them: selling a series of graphics cards for a then little-known Canadian hardware company called Matrox.

Nascom itself created the Nasbus peripheral connectivity systems later in 1978 and, the following year, introduced the Nascom 2, a Nascom 1 with a higher clock speed, more RAM and ROM capacity, and a higher price at a time when other micros were getting cheaper. “The Nascom 2 makes extensive use of ROMs for on-board control decoding. This reduces the chip count and allows easy changes for specialised industrial use of the board,” said the firm’s ads. “We think no other board has quite so much in it for £295 plus eight per cent VAT. If you find us a board that has more, buy one for us too!”

The most popular board micro - still working

Source: Howard Smith

While Nascom was struggling to cope with high demand and, from 1980 onwards, declining sales - it would eventually be acquired by car part maker Lucas’ technology division - Chris Shelton found himself working on a variety of projects designing, and in some cases producing, microprocessor-based industrial controllers. Among them, controllers for Gestetner copiers and printers, and for the broaching tools used in the construction of jet engines. This led him to work increasingly with Programmable Logic Controller (PLC) units, devices for which he holds a number of patents.

During the late 1970s, Shelton was joined by Neil Harrison, who had briefly worked for Nascom but by now was a co-director of Shelton Instruments. SI’s business had expanded, with half of the company’s turnover now coming from consultancy and the rest from manufacturing, much of it off the back of the consultancy work. Harrison now suggested that some PLCs could be replaced with modules based on a general-purpose CPU, such as the Z80.

PLCs emerged in the late 1960s as a replacement for the elaborate relay systems, timers and sequencers used in complex manufacturing machinery, particularly in the car industry, explains Shelton today. Early PLCs were programmed using “ladder logic”, so named because it replicated the sequential structure of relay logic diagrams. A bus replaced the connecting wires of the old relay linkages.

Enter the Sig/Net

Harrison’s notion was to build a system in which Z80-based boards could be used in place of PLCs, but the outcome for Shelton Instruments was a new multi-user microcomputer. Taking the PLC bus, putting it into a chassis and equipping it with processor, memory and IO modules, Shelton created what became known as the Shelton Sig/Net, a business-oriented machine running an OS compatible with Digital Research’s CP/M 2.2, back then the de facto standard for business micros. It was released in 1981.

The Sig/Net comprised a 4MHz Z80A with 64KB of RAM, one or more RS232 ports and two floppy drives. The processor and memory, disk controller, and IO were each implemented on separate boards slotted into the compact chassis. The clever part was the ribbon cable-connected bus which linked the on-board modules and also allowed this single-user unit to be hooked up to a ‘hub’ box containing a floppy drive, a hard disk with a formatted capacity of 5.25MB to 21MB, and another 4MHz Z80A, again with 64KB of RAM. Connected to the hub, the standalone machines now became “satellite” units able to host screens and keyboards for up to three users, all of whom could access the shared hard drive. Had more users than that? Then just hook up as many satellite units as you needed.

“It was not that one processor ran a number of dumb terminals - because multi-user systems were all terminal-based back then,” says Shelton, “it was that each user had their own computer in a box connected by our own proprietary, parallel bus to the other boxes. What started out as the solution to an industrial problem, we thought we might offer as a commercial box.”

The Shelton Sig/Net

The bus cable had a maximum reach of less than a metre, so satellites had to stay in the same room as the hub. But, as Personal Computer World reviewer Terry Lang, writing in the magazine’s April 1983 issue, noted: screens and keyboards connected to the satellite by RS232 serial links, “and can thus be located wherever required (using line drivers or modems for the longer distances)”.

Users got a directory on the hard drive and up to 16 sub-directories, each with its own drive letter in a kind of virtual floppy array, though each had no specific allocation size - space was taken up on the hard drive as required.

Making all this happen was McNOS (Micro Network Operating System), a CP/M-like multi-user OS bought in by Shelton from Avocet, a software developer in the States - “it was just a lad on the East Coast” - because CP/M itself had yet to demonstrate it was up to the task. Shelton Instruments tweaked the code to help programs that made use of internal CP/M routines to run on McNOS. The OS also came with a file-buffering system utilising RAM in the hub unit, and a basic file-lock system to prevent users from simultaneously writing data to the same file. It provided programs with a pool of locks. A command language interpreter, KCL (Kevin’s Command Language), formed the main user interface.
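A pool of locks of that kind might work along the following lines. This is a minimal Python sketch and not McNOS code: the class, its methods, the pool size, the user names and the file names are all hypothetical, chosen only to show how a fixed pool can stop two users writing to the same file at once.

```python
from typing import Dict


class LockPool:
    """Toy fixed-size pool of write locks keyed by file name (hypothetical design)."""

    def __init__(self, size: int) -> None:
        self.size = size                   # total locks available to all programs
        self.holders: Dict[str, str] = {}  # file name -> user currently holding its lock

    def acquire(self, filename: str, user: str) -> bool:
        """Try to lock a file for writing; return False if it cannot be granted."""
        holder = self.holders.get(filename)
        if holder == user:
            return True   # this user already holds the lock
        if holder is not None or len(self.holders) >= self.size:
            return False  # another user holds it, or the pool is exhausted
        self.holders[filename] = user
        return True

    def release(self, filename: str, user: str) -> None:
        """Give the lock back to the pool, but only if this user holds it."""
        if self.holders.get(filename) == user:
            del self.holders[filename]


pool = LockPool(size=2)
first = pool.acquire("LEDGER.DAT", "user_a")   # granted
second = pool.acquire("LEDGER.DAT", "user_b")  # refused while user_a is writing
pool.release("LEDGER.DAT", "user_a")
third = pool.acquire("LEDGER.DAT", "user_b")   # granted once the lock is free
print(first, second, third)
```

A fixed pool keeps the bookkeeping small, which would matter on a 64KB machine, at the price of refusing new locks once the pool is used up.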

“The Shelton Sig/Net system is based on good hardware and provides good value for money,” opined Lang. “The system provides a convenient cost-effective growth path for the user who wants to start small but expects to expand to a multi-user system later... Much of the system is of British manufacture or assembly, which should help price stability. It should be emphasised that in addition to the prices quoted you would require an additional terminal for each user.”

The Osborne Effect

Shelton later upgraded the system with a 6MHz Z80B processor and better 512 x 512 graphics, releasing the new machine as the Sig/Net 10025. A Sig/Net 3B machine came next. Extra modules provided new graphics resolutions, analog-to-digital converters, industrial IO ports and such. In an advert in the same April issue of PCW, SI claimed to have more than a thousand Sig/Net users in the UK. According to Shelton, the system found a home running small office databases, co-ordinating sales teams’ efforts to make sure the same widgets weren’t sold twice, and hosting multiple accountancy ledgers - essentially anywhere where a small group of office workers needed to access and share the same data.

It was, he says, very successful. Shelton Instruments recast itself as a computer maker, leaving its instrumentation heritage and its industrial consultancy behind, and pulled in venture capital funding to build the business.

And then he made a classic blunder, Shelton admits with a chuckle, and invoked the Osborne Effect. Like Adam Osborne before him, Shelton announced the arrival of a superior model some months before it was due and while the company still had stacks of stock yet to sell, all of it punched out in SI’s Islington HQ. The announcement killed demand for the current version, leaving Shelton Instruments and its dealer partners with kit they could not shift. Without the sales of the old machines, SI couldn’t afford to build the new one. It certainly couldn’t afford to junk all the old machines. The impact was devastating, and it led to the collapse of Shelton Instruments.

The Sig/Net itself was acquired by then recently revamped peripherals manufacturer Data Dynamics of Hayes, Middlesex. References to it offering the Sig/Net 3B extend into the late 1980s, but there the trail ends. It’s hard to see the CP/M machine, innovative though it was, surviving long into the 16-bit IBM PC era. Even Shelton admits the machine should have used a 16-bit processor from the start, but CP/M was the standard OS, and it took too long to move to 16 bits. Indeed, the shift to 16-bit prompted by the IBM PC is, says Shelton, one of the things that helped do for the Sig/Net just as it did all the other manufacturers of business-centric 8-bit CP/M machines. Shelton had a 16-bit machine in the pipeline, though he can’t now recall what OS it might have run: CP/M, an updated version of McNOS or even MS-DOS.

Not that he found himself at a loose end when Shelton Instruments crashed. Sir Clive Sinclair, an old friend of Shelton’s, had a notion in the mid-1980s to create a cheaper, more competitive and British IBM clone - presumably after the QL had proved itself a failure. Sir Clive was convinced there must be a cheaper way to make one than simply buying in the components from Intel. He’d known Shelton for years, ever since Chris, as a student, had badgered him about errors in his technical writing. So he had Shelton consider what that ‘cheaper than Intel’ approach might be. Shelton did some research and soon had a proposal: to use UK memory and processor company Inmos’ new Transputer CPU to emulate a 4.77MHz IBM PC XT, then the business PC standard, entirely in software.

Sir Clive and Shelton began to plan out a desktop computer with high-resolution monochrome graphics and integrated fax, scanner and copier hardware - a leaf, in some ways, taken from Amstrad’s book. Tying it all together was a Mac-like GUI. It could, the two men thought, become a new standard in office automation, delivering greater capabilities than the IBM PC yet still able to run all the PC software businesses had acquired.

Sir Clive Sinclair and the PgC7000

Basing the machine on the Transputer would require a host of other chips to go with it, all adding to the cost. Shelton recalls: “We got to the point where we found ourselves asking, why are we putting together a quick video chip to go with this? Why don’t we just have our own processor in the video chip and then we don’t actually need the Transputer? And maybe, if it’s fast enough, we don’t even need the video chip, which is how it turned out.”

Other issues with the Transputer approach were emerging. By 1987, Thorn EMI had acquired Inmos, taken a beating for its trouble, and now wanted rid of the ailing chip maker. And the chip was no longer able to emulate the latest PC technology: by now a 12MHz IBM PC AT. If Sir Clive was to realise his vision of a universal office workstation, he needed a new chip. And, because Sinclair wanted the computer to be as inexpensive to make as possible, the processor would have to be able to double-up as the graphics controller and the laser printer engine too.

In 1988, Shelton and a small team of engineers set out to create just such a chip. He was convinced that a RISC architecture was the way to go in order to create a small chip with as few logic gates as possible: “If you can go fast enough, you can run standard code at standard speed. But by making it an ultra-RISC device, not only will it go faster but it will also be smaller and therefore cheap.”

But he also saw that while a RISC core might well become sufficiently fast to be capable of handling the emulation, it would be hindered by its simplicity and memory access speed. For instance, the CPU would be idle for too long for Shelton's liking, waiting for the next instruction or piece of data to come in from memory. This was before the emergence of out-of-order processors able to re-order their instruction flows according to the availability of data - though at the cost of the highly complex circuitry required to assess which, if any, instructions can be pulled out of the queue early. Of course, implementing out-of-order execution would have required many, many more logic gates and made the chip too large and thus too costly for Sinclair.

Shelton wanted to clock his chip to deliver 200 MIPS of performance, a speed existing chips couldn’t then achieve because of their memory IO limitations. The Transputer could manage 20 MIPS. The upcoming Intel 486, clocked at 50MHz, could only run at between 40 and 50 MIPS. RISC chips generally had higher MIPS ratings but not of the order Shelton wanted.

Free-running core

His solution to the memory bandwidth issue: allow the processor, an “ultra-RISC” core with just a few hard-wired single-byte instructions, to operate at literally whatever clock speed a given chip’s thermal characteristics would allow - essentially automatic over-clocking - and leave the job of keeping it fed with instructions to separate interface controllers built into the chip.

The core had no external clock, or indeed any direct links with the outside world. It took instructions and data from an on-die 32-instruction 2.5ns static RAM cache - called the Q Cache - arranged as a simple, circular buffer and kept stocked by the on-chip memory controller using fast RAM-to-RAM data transfers. Other on-die controllers sat between the core and the host system, to manage an I²C bus and to handle interrupts, for instance. The chip had its own video RAM cache.
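The division of labour between core and memory controller can be pictured with a toy circular buffer. The Python sketch below is an illustration under stated assumptions, not a model of the real silicon: only the 32-slot size comes from the description above, while the class, its methods and the instruction stream are invented. It also refills the buffer only when the core runs dry, where a real controller would presumably top it up continuously.

```python
from typing import List, Optional, Tuple

Q_CACHE_SLOTS = 32  # the article's figure: a 32-instruction on-die cache


class QCacheSketch:
    """Toy circular instruction buffer: one producer (controller), one consumer (core)."""

    def __init__(self, slots: int = Q_CACHE_SLOTS) -> None:
        self.slots: List[Optional[str]] = [None] * slots
        self.head = 0   # index of the next instruction the core will read
        self.count = 0  # number of slots currently holding unread instructions

    def refill(self, program: List[str], next_index: int) -> int:
        """Controller side: fill free slots from memory; return the next fetch index."""
        while self.count < len(self.slots) and next_index < len(program):
            tail = (self.head + self.count) % len(self.slots)  # wrap around the ring
            self.slots[tail] = program[next_index]
            self.count += 1
            next_index += 1
        return next_index

    def fetch(self) -> Optional[str]:
        """Core side: take the oldest instruction, or None when the cache is empty."""
        if self.count == 0:
            return None
        instruction = self.slots[self.head]
        self.head = (self.head + 1) % len(self.slots)
        self.count -= 1
        return instruction


def run(program: List[str]) -> Tuple[List[str], int]:
    """Feed 'program' through the cache; return what ran and how often the core waited."""
    cache = QCacheSketch()
    next_index = 0
    executed: List[str] = []
    waits = 0
    while len(executed) < len(program):
        instruction = cache.fetch()
        if instruction is None:
            waits += 1  # core idles; in this toy model the refill happens only now
            next_index = cache.refill(program, next_index)
        else:
            executed.append(instruction)
    return executed, waits


program = [f"op{i}" for i in range(100)]  # hypothetical instruction stream
executed, waits = run(program)
print(len(executed), waits)
```

The point of the arrangement is that the core only ever touches the ring, never external memory, so its speed is decoupled from the clocked interfaces around it.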

“It was asynchronous. All the peripheral units were clocked because you have to meet external specs to attach anything to [the chip] but the core was asynchronous and ran at the speed of the day,” he says. Between any two external memory cycles, the core might be able to execute hundreds or even thousands of instructions if they were tightly looped and operating with register-stored data.

The chip had an expandable selection of 40 32-bit registers arranged in five banks named 0, 1, 2, 3 and TOP. When the chip received an interrupt, processor state information was saved to TOP, allowing the register bank to be expanded in future versions of the chip without breaking software compatibility.

On-die RAM, on-die ROM

Shelton’s processor also had its own software on board: a 768-byte ROM packed with hard-coded sub-routines used to implement CISC-style features, all directly accessible by the core by way of an Instruction Fetch Unit able to pull instructions from the cache or the ROM, which also contained the processor boot code. A third of the chip’s area was taken up by the RAM and ROM banks.

Shelton and his team worked in some tricks. The memory controller could support paging and memory access could be accelerated by, where possible, changing only a RAM chip’s column address rather than the row address during fetches; back then it took three times longer to select a row than to access a column. The upshot: near serial communication with memory and thus fast enough to keep the core’s buffer stocked. If the core ever found itself without instructions, or the cache invalid, it would doze while waiting for an interrupt to signal a freshly filled cache. It could start executing instructions before the Q Cache was full again. Any loss in performance from the core taking a nap would be more than compensated for by the speed with which it tackled the newly cached instructions.
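Why staying within a row pays off can be shown with a crude cost model. The Python sketch below is illustrative only: it takes the three-to-one row-to-column ratio from the paragraph above, but the time units, the 256-column row size and the two access patterns are assumptions made for the example.

```python
from typing import List, Optional

COLUMN_COST = 1             # arbitrary time unit for one column access
ROW_COST = 3 * COLUMN_COST  # article's ratio: selecting a row takes three times longer
ROW_SIZE = 256              # hypothetical number of columns per DRAM row


def access_cost(addresses: List[int]) -> int:
    """Total cost of a run of accesses, re-selecting the row only when it changes."""
    cost = 0
    open_row: Optional[int] = None
    for address in addresses:
        row = address // ROW_SIZE
        if row != open_row:
            cost += ROW_COST  # pay for a new row select
            open_row = row
        cost += COLUMN_COST   # every access pays for its column
    return cost


# The same 512 addresses, visited in two different orders.
sequential = list(range(512))                                   # walks each row in turn
alternating = [(i % 2) * ROW_SIZE + i // 2 for i in range(512)]  # hops rows every access

print(access_cost(sequential), access_cost(alternating))
```

In this model, fetching the same 512 locations in order costs about a quarter of what it does when every access lands in a different row, which is the effect the controller was designed to exploit.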

Shelton also chose to implement the chip using the old bipolar rather than the modern CMOS fabrication process because it yielded faster transistors and required fewer lithography masks. The team ran the chip at just 0.25V to deal with bipolar’s otherwise higher-than-CMOS power consumption.

By July 1989, the processor was running in simulation. The following March, the first lithography masks were completed. During 1990, Shelton and his engineers struggled with a flaw in the lithography process and, later, with a bug that emerged in the memory interface, all exposed during the testing of silicon samples fabricated by Ferranti aka GEC Plessey, which had never used its bipolar Collector Diffusion Isolation process for silicon as complex even as Shelton’s before. The team abandoned the original plan to incorporate a dedicated graphics controller and instead built in a dedicated interrupt to allow the chip, now known as the PgC7000 in its prototype form, to operate as a simple CRT controller. Running a 1024 x 768, 8-bit video buffer consumed just one per cent of the CPU time.

Massively parallel processing

The whole thing was built out of some 90,000 transistors, a fraction of the number found in rival CPUs: the PowerPC 601 had a million, the Pentium three million. The die was a mere centimetre-squared in area. Today, Shelton reckons, even fabbed using CMOS rather than bipolar, it would be barely a millimetre in size yet capable of clock speeds of many gigahertz. With so few transistors - in chip terms - yields would be in the order of 95 per cent, he claims, and so the cost of each chip “next to nothing”. It would be so economical and consume barely any power that it would be cheap to implement large arrays of chips operating in parallel. “It would find a huge home in internet nodes managing internet traffic at blazing speeds.”

Arrays, because Sinclair had always been keen on the potential of parallel processing - “Clive was always keen on arrays; parallelism as a way to bigger things was always part of his thinking” - so Shelton had designed the PgC7000 with processor-to-processor communications in mind. The PgC would ultimately form the basis for the massively parallel computers-on-wafers that Sinclair’s ill-fated wafer-scale integration endeavour, Anamartic, would produce. In part, that’s where the notion of the core reading a buffer independently filled by the memory controller had come from: “You could squirt masses of data from one processor to another at the RAM-RAM level. You could construct a matrix of channels all running at blazing speeds Ram to Ram with no processor downtime,” says Shelton.

Parallel systems would require a smart OS. Fortunately, Shelton had found one: TAOS from Tao Systems, “a wacky bunch of people” he had discovered when searching for emulation software. TAOS was a microkernel-based hardware-independent, object-oriented OS with multi-threading, asynchronous process-process messaging and load-balancing. It could have been made for the PgC7000. Indeed, Shelton tweaked the 7000’s instruction set to better support TAOS.

The prospects for the new processor looked rosy, and Sinclair formed a new company in 1991, PgC Ltd., to sell it. Now upped to 250 MIPS, the chip would go on sale as the PgC7600. It would cost $100 in quantity. PgC also announced a cheaper version to help get the chip’s foot in computer makers’ doors: a $40 part fabbed using CMOS technology and thus only capable of 80 MIPS - still impressive compared to the competition.

Ahead of its time

PgC promised it would have a 1000 MIPS CMOS part, the PgC7700, out by the middle of 1993 and sell it for $400. A bipolar version with less on-board memory but rated at a staggering 2000 MIPS yet costing a mere $200 would follow soon after, it pledged. Handheld computers, desktops, terminals and many an embedded application would all be homes for PgC parts, the company boasted.

In Sinclair Research’s 1991 annual report, Sir Clive wrote: “PgC Ltd continues to develop its very high speed computer chip and has received an injection of capital from outside investors. Progress is very promising.” Among those putting in money was Chris Shelton himself. Most of the rest came from Sinclair.

PgC took its processor to market. Shelton recalls talking to Toshiba. “We also talked to IBM. It used the same fab for processors and Dram, so [the PgC] would have been a great product for them. They could have put down one piece of silicon with the processor and the video ram on and made a Transputer that would have blazed away and been tiny, but they were too tied to producing the PowerPC for Apple.

“We did get working silicon, that was the exciting thing. We were able to go out and sell it on the basis that we could show it working. At the time, it was one of the fastest things ever.

“I’m still excited about it... it was way ahead of its time.”

The chip was fast but unorthodox, and PgC failed to persuade any of the big-name chip companies to buy into its processor technology.

“[Sinclair had] given it a certain budget and a certain time to prove itself. But he was quite firm: when the budget was up, the budget was up, and that’s quite right,” Shelton remembers. “I put my own money in, and so did another friend. Eventually we sold it to a company in Seattle. We got some of our money back, but not all of it.”

Too revolutionary?

In April 1994, Sinclair announced he had “licensed” the PgC design to Microprocessor Technology, Inc (MTI) in Seattle, which was seeking funding to put the CPU into production. In Sinclair Research’s 1994 annual report, Sir Clive noted: “This looks highly promising, we have received a first payment of £100,000, but it is too early to make predictions.”

It was, and MTI proved unable to get the PgC to market. The powerful laptops Sinclair hoped to make with the new processor wouldn’t arrive either, and neither would his office automation systems.

Chris Shelton largely turned his back on computers. He’s long held a love of mucking about in boats, and during the late 1990s he focused his engineering skills on marine technology. He designed the “submarine telepresence vehicle” Spyfish leisure RoV for Nigel Jagger’s H2Eye to “allow you to enjoy the water without getting wet”, as he puts it. Just over ten years ago, he acquired Autonnic Research, an Essex-based firm that designs and manufactures fluxgate magnetometers used in many a ship-board instrument.

Now in his late 60s, he hasn’t stopped working. Indeed, he’s currently investigating a system to improve the performance of the power systems that need to feed modern boats’ many different devices from motors to sensors to communications kit. “I live in the future,” he says, “not the past.” ®