Original URL: https://www.theregister.com/2012/05/03/unsung_heroes_of_tech_arm_creators_sophie_wilson_and_steve_furber/

ARM creators Sophie Wilson and Steve Furber

Part Two: the accidental chip

Posted in Personal Tech, 3rd May 2012 11:00 GMT

Unsung Heroes of Tech: The Story so Far. At Acorn, Sophie Wilson and Steve Furber have designed the BBC Micro, basing the machine on the ageing MOS 6502 processor. Their next challenge: to choose the CPU for the popular micro's successor. Now read on...

While Sinclair attempted to move upmarket with the launch of the QL in early 1984, Acorn was trying to invade Sinclair's lucrative low-end territory with a cut-down version of the BBC Micro, to be called the Electron. UK resellers assumed the Electron would be as big a success as the BBC Micro. They ordered in hundreds of thousands, but as the units stacked up in the warehouse the market collapsed.

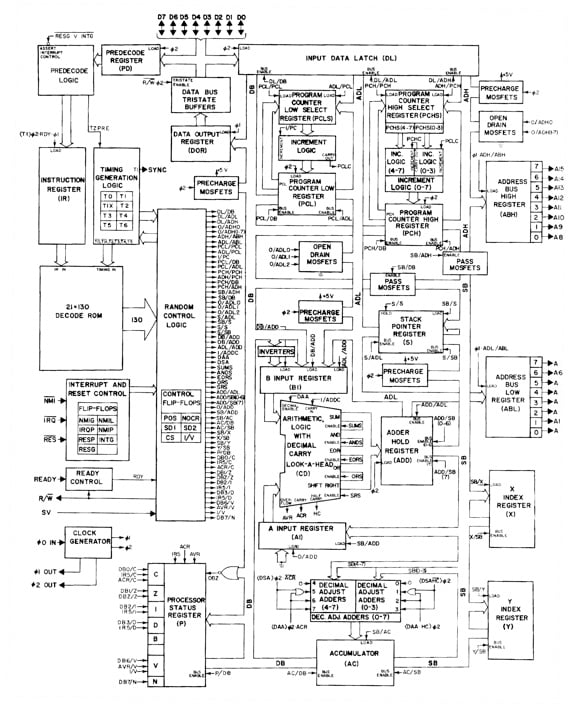

MOS' 6502: good enough for the Electron, not the BBC Micro's successor

While Acorn was heading into cash-flow problems, Wilson and Furber had already become engaged in what would prove to be the biggest adventure of their lives. This wasn't just the next Acorn computer, although that's how it had started, two years earlier.

By 1983, the 8-bit 6502 processor had outlived its shelf life, and Wilson and Furber had been experimenting with available 16-bit processors to power their next machine. The Motorola 68000, the NatSemi 32016 and the many others they'd evaluated all had one flaw in common: not being able to make anything like full use of the memory bandwidth that was becoming available.

“If we were going to use 16- or 32-bit wide memory, we could build a system that would run up to 16, 20 or even 30Mb per second,” says Wilson. But the processor was the bottleneck.

With the success of the BBC Micro, Hauser and Curry had been persuaded that an investment in chip design might be a good next move — not necessarily with the idea of Acorn getting into the chip business itself, but to help the company become more informed about this fundamental component of its business.

Hauser had even gone to the lengths of hiring three integrated-circuit designers and buying in chip design tools and workstations. And he’d dumped a bunch of papers on the desks of Wilson and Furber, relating to a novel chip design idea that originated from IBM. Reduced Instruction Set Computing (Risc) meant creating processors that used a limited set of simple instructions rather than the increasingly complex instructions that tended to slow down processors like the 32016.

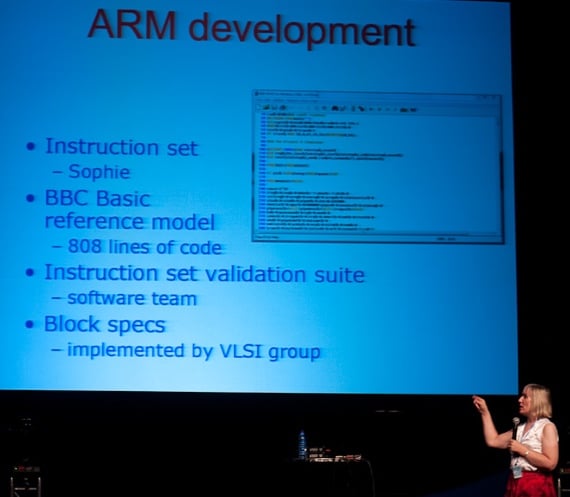

Sophie Wilson talks ARM

Source: Wikimedia

Wilson and Furber started visiting processor manufacturers. Typically they’d find, as Wilson says of a trip to NatSemi’s plant in Israel, “a huge building full of thousands of engineers”. Wilson’s affection for the 6502 also took them, in October 1983, to the Western Design Center in Phoenix, Arizona, where Bill Mensch was working on a version of the chip that would support 24-bit addressing.

Breakfast in America

The place was a revelation. As Furber recalls: “We went there expecting big shiny American office buildings with lots of glass windows, fancy copy machines… And what we found was… a bungalow in the suburbs… Yeah, they’d got some big equipment, but they were basically doing this on Apple IIs.”

Mensch assumed that Wilson and Furber turned down the new version of the 6502 because they were looking for a full 32-bit processor to compete with Apple’s plans for the 16-bit Apple IIgs. Mensch didn’t want to be rushed into 32-bit until he’d completed the current 24-bit project.

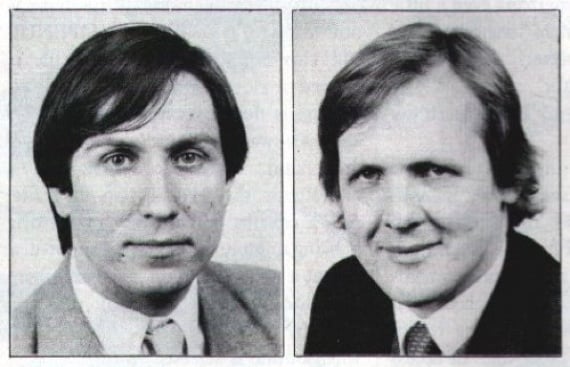

Wilson and Furber in the mid-1980s

He says: “They didn't want to wait for me to start the 32-bit version so they left upset with my choice and decided that they could do the design themselves in Cambridge.”

What had decided them wasn’t being “upset” at Mensch’s reluctance. It was the encouragingly tiny scale of his globally successful operation. As Wilson tells it: “A couple of senior engineers, and a bunch of college kids… were designing this thing… We left that building utterly convinced that designing processors was simple.”

Simple? IBM’s own commercially unsuccessful first attempt at a Risc processor had taken months of instruction set simulation on heavy mainframes. Wilson, however, just plunged right in. Hermann Hauser remembers: “Sophie did it all in her brain.”

It was Furber’s job to turn Wilson’s brainchild into something that could be taped out and sent off to the factory for fabrication. Once she’d thought out the instruction sets, “Steve then knocked things out”, she recalls.

“It turned out there is no magic,” says Furber. “A microprocessor’s just a lump of logic, like everything else we'd designed. There are no formidable hurdles. The Risc idea was a good one, it made things much simpler. And 18 months later, after about 10 or 12 man years of work, we had a working ARM in our hands. Which probably surprised us as much as it surprised everybody else.”

Call to ARMs

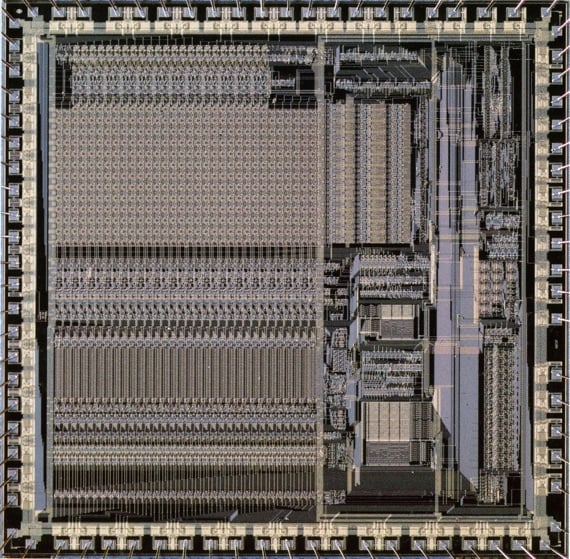

The first ARM used 25,000 transistors. "It was a really small, simple piece of design," says Furber.

Today one of the most significant features of the ARM family is its low power consumption. But that hadn't been an initial goal, according to Furber. “We designed the ARM for an Acorn desktop product, where power isn't of primary importance. But it had to be cheap. Cheap meant it had to go in a plastic package, plastic packages have a fairly high thermal resistance, so we had to bring it in under 1W.”

The original 3µm ARM chip

The power test tools they were using were unreliable and approximate, but good enough to ensure the design met this rule-of-thumb power requirement. When the first test chips came back from the lab on 26 April 1985, Furber plugged one into a development board, and was happy to see it working perfectly first time.

Deeply puzzling, though, was the reading on the multimeter connected in series with the power supply. The needle was at zero: the processor seemed to be consuming no power whatsoever.

As Wilson tells it: “The development board he plugged the chip into had a fault: there was no current being sent down the power supply lines at all. The processor was actually running on leakage from the logic circuits. So the low-power big thing that the ARM is most valued for today, the reason that it's on all your mobile phones, was a complete accident.”

Wilson had, it turned out, designed a powerful 32-bit processor that consumed no more than a tenth of a watt.

From the horse's mouth...

“Running on leakage” also happened to be an appropriate description of Acorn outside the lab. To staunch the haemorrhaging finances, Italian computer manufacturer Olivetti stepped in with an initial cash injection in February 1985. By the end of the year, Olivetti had taken a controlling interest, effectively buying the company.

“Olivetti wasn't told about the ARM when they bought Acorn,” Steve Furber remembers. When they found out, he says, “they didn't know what to do with it”. Wilson and Furber did, and were able to push through the ARM’s initial commercial appearance in the ARM Development System, a specialist add-on to the BBC Micro costing £4500.

Archimedes principle

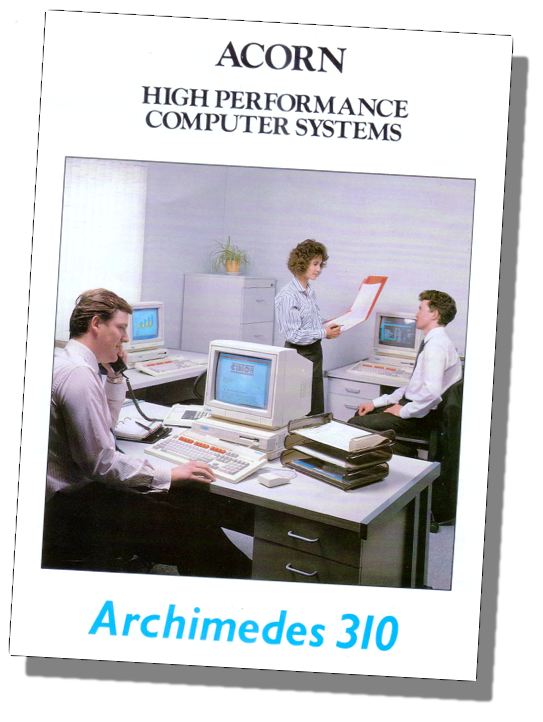

The ARM DS was followed, in 1987, by the first complete ARM-based computer, the Acorn Archimedes, costing a hair under £900. The various Archimedes machines that followed were among the most powerful home computers available, and by the time the last one arrived, in 1992, the processor had evolved to include a crucially important new feature. It was this that would prepare the chip for its triumphant role as the dominant mobile powerhouse.

The ARM had become a system-on-a-chip (SoC).

The key was the relatively small real estate on the die required by the processor proper, leaving plenty of room on the surrounding silicon for whatever else might take the designer’s fancy.

Says Furber: “You could just think about the four chips you needed to build the Archimedes system, and putting them onto one chip.”

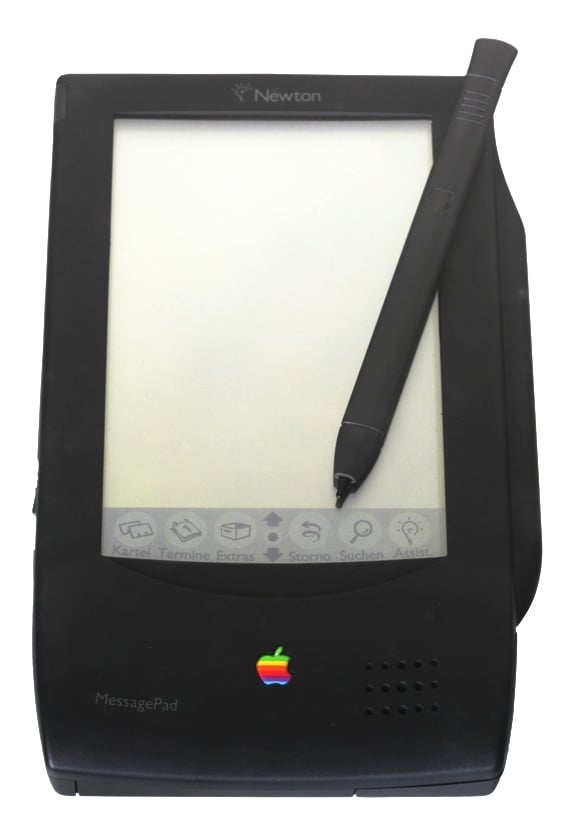

A large, ailing computer company across the Atlantic had seen the potential for this early on in the game. In 1986, Apple began using ARM processors for backroom prototypes of what was to become the first ever tablet computer, the Newton. Five years later, Apple ploughed a reported $1.5m into the newly-founded ARM company, taking a 43 per cent share of the joint ownership along with Acorn and the processor fabricator VLSI.

The Newton, launched in 1993, was a flop. But by the turn of the millennium, Steve Jobs was back in the saddle and Apple’s investment in ARM went on to pay huge dividends as the Cupertino company branched out into portable devices like the iPod, the iPhone and the iPad.

Acorn Computers, meanwhile, had unravelled. An escape vessel from the wreck was a company focusing on Digital Signal Processor (DSP) design called Element 14. Sophie Wilson was a key crew member, and when Element 14 was bought by Broadcom in 2000, Wilson went with it, to become, as she is today, chief architect of Broadcom’s DSL business.

ARM-powered but not Apple's saviour: the MessagePad 100

Source: Wikimedia

Hermann Hauser was once asked why a great British success story like Acorn finally failed. He queried the last word: “There are over 100 companies in the Cambridge area that can trace their beginnings back to Acorn, and have been founded by Acorn alumni. ARM has now sold over ten billion processors, ten times more than Intel.”

Since 2008, when Hauser made that statement, ARM-based processors have come not only to monopolise our smartphones but also to appear in 90 per cent of our hard drives and 80 per cent of our digital cameras, and they have found their way into printers and digital TVs. They are currently selling at a rate of over five billion per year. ®