
Google reveals how its Borg clusters have evolved yet still only use about 60 percent of resources (Alibaba might do better)

New dump of tracing data and pair of papers reveal plenty about ad giant’s internal operations

Google has published a huge trove of new data describing the performance of its "Borg" clusters, which deliver its services and are the antecedent of Kubernetes.

The ads-and-personal-data giant last revealed this sort of data in 2011, when it published 29 days’ worth of “every job submission, scheduling decision, and resource usage data for all the jobs in a Google Borg compute cluster”. This time it has released data generated by eight clusters across all of May 2019, adding CPU usage information at five-minute intervals, shared resource reservation information and job-parent information for master/worker relationships. As a result, the dump contains 350 gigabytes of data from each cluster, up from 40 gigabytes from the single cluster sampled in 2011.

Google has also published a paper [PDF] analysing it all, penned by folks from Google, Harvard, Carnegie Mellon University and the University of St Andrews. And in case you need to know more, there’s another all-Google paper [PDF] that reveals the workings of “Autopilot”, a new scaling tool Google uses.

The Autopilot paper tells us this about Google’s innards:

The document also suggests Google is a bit jealous of rival clouds: it notes that clusters managed by Autopilot seldom use more than 50 percent of their memory, while Alibaba reportedly achieves 80 percent utilisation.

The trace analysis paper reveals that Borg “now supports multiple schedulers, including a batch scheduler that manages the aggregate batch job workload for throughput by queueing jobs until the cell can handle them, after which the job is given to the regular Borg scheduler.”
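To picture what that batch scheduler does, here is a toy model in Python. It is a minimal sketch, not Borg’s code: the `Job`, `Cell` and `BatchQueue` classes and the single CPU dimension are hypothetical simplifications. Jobs wait in a first-in, first-out queue until the cell has room, then are handed to a stand-in for the regular scheduler.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cpu: float  # hypothetical: a single resource dimension, in cell units


@dataclass
class Cell:
    capacity: float
    used: float = 0.0
    running: list = field(default_factory=list)

    def can_fit(self, job: Job) -> bool:
        return self.used + job.cpu <= self.capacity


def regular_schedule(cell: Cell, job: Job) -> None:
    """Stand-in for the regular scheduler: place the job in the cell."""
    cell.used += job.cpu
    cell.running.append(job.name)


class BatchQueue:
    """Hypothetical batch scheduler that holds jobs until the cell can take them."""

    def __init__(self, cell: Cell):
        self.cell = cell
        self.pending = deque()

    def submit(self, job: Job) -> None:
        self.pending.append(job)
        self.drain()

    def drain(self) -> None:
        # Admit jobs from the head of the queue for as long as they fit.
        while self.pending and self.cell.can_fit(self.pending[0]):
            regular_schedule(self.cell, self.pending.popleft())

    def finish(self, job: Job) -> None:
        # A finished job frees capacity, which may let queued jobs in.
        self.cell.used -= job.cpu
        self.cell.running.remove(job.name)
        self.drain()


cell = Cell(capacity=10.0)
queue = BatchQueue(cell)
a, b, c = Job("a", 6.0), Job("b", 3.0), Job("c", 4.0)
for job in (a, b, c):
    queue.submit(job)
# "c" waits: 6 + 3 + 4 would exceed the cell's capacity of 10.
queue.finish(a)  # frees 6 units, so "c" is admitted
print(cell.running)  # ['b', 'c']
```

Strict FIFO keeps the sketch simple, but it means one large job at the head of the queue can hold up smaller ones behind it.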

It also offers more about Autopilot’s role, explaining that it “aims to remove the burden of specifying a job’s resource requirements. This can be challenging because asking for too few resources can be catastrophic: a job might fail to meet user service deadlines or even crash.

“This translates directly into efficiency improvements and costs savings, by allowing more work into the system. Autopilot makes use of historical data from previous runs of the same or similar jobs in order to configure the initial resource request, and then continually adjusts the resource limits as the job executes so as to minimize slack.”
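The two steps the paper describes, seeding a request from past runs and then tightening the limit as the job runs, can be sketched roughly as below. This is one plausible approach under stated assumptions, not Autopilot’s actual algorithm: the 95th-percentile seed, the 10 percent safety margin and the exponentially decaying average are all hypothetical choices.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile of a non-empty list, with q in 0..100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def initial_limit(history, q=95, margin=1.1):
    """Seed a job's request from usage seen in previous runs of similar jobs."""
    return percentile(history, q) * margin


def adjust_limit(recent_usage, half_life=4, margin=1.1):
    """Re-derive the limit from recent usage to keep slack (limit - usage) small.

    Newer samples get exponentially more weight; taking the max with the
    latest sample stops a sudden spike from being averaged away.
    """
    decay = 0.5 ** (1 / half_life)
    # The oldest sample has the largest age, so the smallest weight.
    weights = [decay ** age for age in range(len(recent_usage) - 1, -1, -1)]
    avg = sum(w * u for w, u in zip(weights, recent_usage)) / sum(weights)
    return max(avg, recent_usage[-1]) * margin


history = [2.1, 2.4, 2.2, 3.0, 2.8]  # hypothetical peak CPU of earlier runs
limit = initial_limit(history)
limit = adjust_limit([2.0, 2.1, 1.9, 2.0])  # revised as the job executes
```

The margin trades efficiency for safety: a smaller one leaves less slack but, as the paper warns, risks starving the job.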

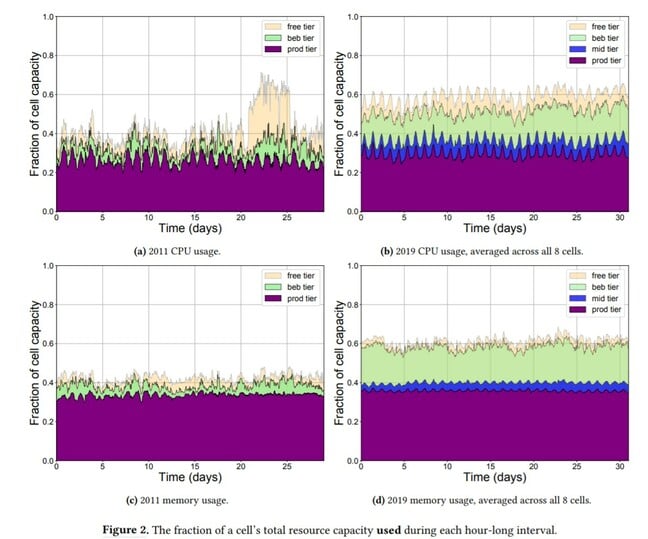

But even with Autopilot steering workloads, utilization of Borg clusters remains quite low: the graphs below shows that memory and CPU usage both seldom reach more than 60 percent.

Google's analysis of its own memory and CPU consumption. Click to enlarge.

The authors call for more research into why that’s the case.

Another observation is that the most resource-hungry one percent of applications consume 99 percent of the resources used. The paper suggests that’s a problem because smaller jobs end up at the back of the queue, fighting for resources, or both.
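Measuring that kind of concentration is simple once you have per-job usage totals. The sketch below assumes a plain list of hypothetical usage figures; it doesn't use the trace’s actual schema, and the example numbers are made up to mirror the paper’s finding.

```python
import math


def top_share(usage, fraction=0.01):
    """Share of total usage consumed by the heaviest `fraction` of jobs."""
    if not usage:
        raise ValueError("usage must be non-empty")
    ordered = sorted(usage, reverse=True)
    k = max(1, math.ceil(len(ordered) * fraction))  # at least one job
    return sum(ordered[:k]) / sum(ordered)


# Hypothetical cell: one heavy job and 99 light ones (100 jobs in total).
jobs = [9900] + [1] * 99
print(top_share(jobs))  # 9900 / 9999, just over 0.99
```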

There’s also some discussion of changing use patterns within Google.

“Much of the workload has moved from the free tier (low priority) into the best-effort batch tier (jobs managed by a queued batch scheduler), while the overall usage for production tier (high priority) jobs has remained approximately constant,” the trace analysis paper explains.

There’s plenty more in the papers, and 2.8TB of tracing data (documented on GitHub and served as BigQuery tables) if you’d like to play.

The papers say they were presented at the Fifteenth European Conference on Computer Systems (aka EuroSys ’20), an event due to run in Heraklion, Greece, this week, but which has of course gone virtual. ®