
NetApp puts the pedal to the metal with Plexistor

It's on a smashing orange gig with an Optane silver bullet

Analysis NetApp hopes to have a MAX Data server persistent memory product announced before the end of the year, with single-digit microsecond latency using Optane DIMMs, and tiering data to NVMe over Fabrics-attached ONTAP all-flash arrays.

MAX Data, it is daring to hope, might actually be a silver bullet competition killer. This is revealed in a NetApp MAX Data podcast.

The product will be called NetApp MAX Data, standing for NetApp Memory Accelerated Data, and is built on ZUFS (Zero-copy User-mode File System), a persistent memory-based file system in Linux user space that came with NetApp’s acquisition of Plexistor.

MAX Data presents a POSIX file system interface to applications - they don’t need to change - and tiers data from an underlying block device, an ONTAP all-flash array, into persistent memory. The persistent memory is Intel’s 3D XPoint in DIMM form, and data would travel between it and the underlying ONTAP flash box across an NVMe over Fibre Channel (FC-NVMe) link, NetApp having announced ONTAP support for FC-NVMe in May.

The podcast people say that a server equipped with the Plexistor driver and Optane DIMMs could deliver single-digit microsecond data access latency to applications; that’s literally something between 1 and 9μs. They say an Optane DIMM has a 10μs latency.

Plexistor’s driver cuts out a huge amount of the Linux IO stack, doing straight memory semantics, and while the podcasters don’t provide an actual number they do enthuse about how freaking fast MAX Data is.
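To make "memory semantics" a little more concrete from the application side, here is a minimal sketch using Python's standard `mmap` module. It is not Plexistor or NetApp code and it involves no persistent memory: an ordinary temporary file stands in for a file on a persistent memory file system, and the record size is a made-up value. It only shows the general idea of reaching file data through loads and stores rather than read and write calls.

```python
import mmap
import os
import tempfile

PAGE = 4096  # hypothetical record size, chosen only for illustration

# An ordinary temp file stands in for a file on a persistent memory file system.
fd, path = tempfile.mkstemp()
try:
    os.ftruncate(fd, 4 * PAGE)
    with mmap.mmap(fd, 4 * PAGE) as mem:
        # Store: a slice assignment into the mapping, not a write() call.
        mem[PAGE:PAGE + 5] = b"hello"
        # Load: a slice read from the mapping, not a read() call.
        loaded = bytes(mem[PAGE:PAGE + 5])
        mem.flush()  # ask the OS to push dirty pages to the backing file
    # The same bytes are visible through the regular POSIX file interface.
    os.lseek(fd, PAGE, os.SEEK_SET)
    via_read = os.read(fd, 5)
finally:
    os.close(fd)
    os.remove(path)

print(loaded, via_read)
```

On a conventional file system the mapping is still backed by the page cache and the block layer; the pitch for persistent memory is that loads and stores can reach the media far more directly.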

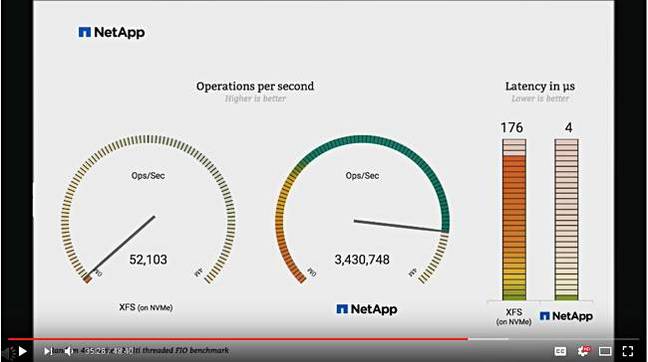

Jeff Baxter, a NetApp ONTAP evangelist, presented at a Tech Field Day event in March and showed a slide with a server doing 3,430,748 random 4K write IOPS with 4μs latency using Plexistor SW and some Optane DIMMs.

In comparison, XFS and a single NVMe SSD did 83,963 IOPS with 176μs latency. So let’s settle on 4μs latency.
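For a sense of scale, the slide's figures can be compared with a few lines of arithmetic. The sketch below uses only the four numbers quoted above. The in-flight estimate applies Little's law (average I/Os in flight = throughput × latency), which assumes the quoted latencies are steady-state averages; the slide as described here does not confirm that.

```python
# Figures quoted from the Tech Field Day slide.
plexistor_iops = 3_430_748
plexistor_latency_us = 4
xfs_nvme_iops = 83_963
xfs_nvme_latency_us = 176

iops_ratio = plexistor_iops / xfs_nvme_iops
latency_ratio = xfs_nvme_latency_us / plexistor_latency_us


def in_flight(iops, latency_us):
    """Little's law estimate of average outstanding I/Os (assumes average latency)."""
    return iops * latency_us / 1_000_000


print(f"IOPS advantage:    {iops_ratio:.1f}x")
print(f"Latency advantage: {latency_ratio:.1f}x")
print(f"In flight (Plexistor): {in_flight(plexistor_iops, plexistor_latency_us):.1f}")
print(f"In flight (XFS+NVMe):  {in_flight(xfs_nvme_iops, xfs_nvme_latency_us):.1f}")
```

That works out to roughly 41 times the IOPS at one forty-fourth of the latency. Under the stated assumption both runs had about 14 to 15 I/Os outstanding, which would mean the gain comes from the shorter latency, not from a deeper queue.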

We’re told writes will always be at local speed, whereas reads are tiered on a hot and cold basis, and there is a formula for how much PM you would need to cache a back-end database.

With ZUFS tiering data to and from the FC-NVMe-attached ONTAP array, data written to PM gets out to the ONTAP box and benefits from its data management and protection capabilities: snapshotting, replication, and tiering out to the public cloud using NetApp’s data fabric.

NetApp people have tested whether it works with skinny ONTAP arrays too, meaning the ONTAP Select product and ONTAP in the public cloud, and it does.

They have tested the Plexistor SW with containers and it accelerates their data access. They are having thoughts longer term about seeing if it could accelerate a NAS back end, or actually run in the public cloud.

The NetApp podcasters are keen on the idea that NetApp is smashing orange (hint: the colour of Pure Storage) and confident they will move upwards and rightwards in next year’s Gartner SSA MQ. They don’t say they could overtake Pure and become the literal leader of the Leaders quadrant, but you can bet they’re thinking it.

They are confident this technology is unique; no one else has it and NetApp will rule the roost.

It’s not for all server applications; only the ones that need screamingly low latency, such as the usual financial trading ones, and any analytics on largish datasets (say up to 3TB) needing to be in memory, meaning DRAM plus persistent memory, and run in real time. Think AI and machine learning and high-frequency transaction processing.

MongoDB shard rebuilds, we’re told, would be massively accelerated and the database platform wouldn’t need multiple separate modes.

There’s talk of being able to selectively provision persistent memory to server VMs in the future, with NetApp working with VMware on the idea. The v6.7 release of vSphere supports persistent memory now.

NetApp is dependent on Intel delivering Xeon CPUs with Optane DIMM support, and that should be the Cascade Lake SP processors slated for late 2018 availability.

Expect more MAX Data marketing and evangelism at NetApp’s forthcoming Insight events. The NetApp podcasters are hugely and deeply excited by the prospects of this technology and think they can take over the block array low-latency access world with it. They think their Plexistor technology is a silver bullet that can kill a lot of the demons that have dissed NetApp in the past.

Oh how they wish, how they do wish, that they are right. ®