AI, caramba: NetApp pits scaly A800 ONTAP beast against Pure's AIRI fairy

Flashes next-gen unit to compete with FlashBlade box

The clash of the million-dollar AI titans resumes. NetApp has designed an ONTAP AI architecture based on its topline A800 flash array and Nvidia DGX-1 to try to win fat-pocketed customers away from Pure and Nvidia's AIRI AI system-in-a-box.

Nvidia and NetApp collaborated on a reference architecture in June – and provided AI performance results using NetApp's A700 flash array.

Pure Storage provided similar results for its AIRI system, which combines Nvidia GPUs with Pure's FlashBlade array, and beat the A700/Nvidia system.

The ONTAP AI documentation (PDF) spells out the system components and provides more performance data showing the A800 feeding data to Nvidia's GPUs, which is, for now, faster than the FlashBlade array in Pure's AIRI system.

ONTAP AI has pre-validated designs with product available through NetApp channel partners. The main components are:

- NetApp A800 with 48 x 1.92TB NVMe SSDs

- Nvidia DGX-1 with 8 x Tesla V100 graphics processing units (GPUs)

- Four Mellanox ConnectX-4 single-port network interface cards per DGX-1

- Cisco Nexus 3232C 100Gb Ethernet switch

It uses a high-availability design with redundant storage and network and server connections.

The entry point is a 1:1 A800:DGX-1 configuration and it can scale out to a 1:5 configuration and beyond. A 1:5 config has five DGX-1 servers fed by one A800 high-availability (HA) pair via two switches.

Each DGX-1 server connects to each of the two switches via two 100GbitE links. The A800 connects via four 100GbitE links to each switch. The switches can have two to four 100Gbit inter-switch links, designed for failover scenarios. The HA design is active-active.
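As a rough sanity check on that cabling, the raw link budget can be worked out in a few lines of Python. This is only a back-of-the-envelope sketch: it uses the link counts quoted above, treats every link as a full 100Gbit/sec, and ignores protocol overhead. The variable and function names are ours, not NetApp's.

```python
# Raw link budget for the topology described above.
# Line rates only; protocol overhead and real-world efficiency are ignored.

LINK_GBIT = 100            # every link is 100GbitE
SWITCHES = 2               # two switches in the design

DGX_LINKS_PER_SWITCH = 2   # each DGX-1: two links to each switch
A800_LINKS_PER_SWITCH = 4  # A800: four links to each switch


def aggregate_gbyte_per_sec(links_per_switch: int) -> float:
    """Return raw aggregate bandwidth in GB/sec across both switches."""
    total_gbit = links_per_switch * SWITCHES * LINK_GBIT
    return total_gbit / 8  # 8 bits per byte


dgx_bw = aggregate_gbyte_per_sec(DGX_LINKS_PER_SWITCH)
a800_bw = aggregate_gbyte_per_sec(A800_LINKS_PER_SWITCH)

print(f"Per DGX-1: {dgx_bw:.0f} GB/sec raw")
print(f"A800:      {a800_bw:.0f} GB/sec raw")
```

On these assumptions each DGX-1 has 50GB/sec of raw network bandwidth and the A800 has 100GB/sec, so the wires are not the obvious bottleneck in a small configuration.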

NetApp A800 and A700 systems can scale from two nodes (364.8TB) to a 24-node (12 HA pairs) cluster (74.8PB with A800; 39.7PB with A700s).

A single A800 system supports throughput of 25GB/sec for sequential reads and 1 million IOPS for small random reads at sub-500μs latencies. A full A800 cluster can feed data to the DGX-1 at 300GB/sec. NetApp said an A800 HA pair has been proven to support up to 25GB/sec under 1ms latency for NAS workloads – the ones used here.
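The cluster figure follows from the per-system one, as a quick calculation suggests. The sketch below assumes that "a single A800 system" means one HA pair (two nodes) and that throughput scales linearly across the 12 HA pairs of a 24-node cluster. The per-server and per-GPU shares for a 1:5 configuration are our own illustrative division, not numbers from NetApp.

```python
# Throughput arithmetic under the stated assumptions.

HA_PAIR_GBYTE_PER_SEC = 25  # sequential read throughput quoted per A800 system
MAX_NODES = 24              # largest cluster quoted
NODES_PER_HA_PAIR = 2

# Assumption: linear scaling across HA pairs.
ha_pairs = MAX_NODES // NODES_PER_HA_PAIR
cluster_gbyte_per_sec = ha_pairs * HA_PAIR_GBYTE_PER_SEC

# Illustrative even split for a 1:5 configuration (one HA pair, five DGX-1s).
DGX_PER_A800 = 5
GPUS_PER_DGX = 8
per_dgx = HA_PAIR_GBYTE_PER_SEC / DGX_PER_A800
per_gpu = per_dgx / GPUS_PER_DGX

print(f"{ha_pairs} HA pairs -> {cluster_gbyte_per_sec} GB/sec")
print(f"1:5 config: {per_dgx} GB/sec per DGX-1, {per_gpu} GB/sec per GPU")
```

Twelve HA pairs at 25GB/sec each gives the 300GB/sec quoted for a full cluster.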

In comparison, NetApp's A700s system supports multiple 40GbitE links to deliver a maximum throughput of 18GB/sec. The A800 system also supports 40GbitE.

NFS and RoCE

The DGX-1 supports 100GbitE RDMA over Converged Ethernet (RoCE) for its cluster interconnect.

However, the A800 uses NFS to send data to the DGX-1s across the Nexus switch, and not RDMA. The Nexus switch's ability to prioritise RoCE over all other traffic allows the 100GbitE links to be used for both RoCE and traditional IP traffic, such as NFSv3 storage access traffic.

Multiple virtual LANs (VLANs) are provisioned to support both RoCE and NFS storage traffic. Four VLANs are dedicated to RoCE traffic, and two VLANs are dedicated to NFS storage traffic.

To increase data access performance, multiple NFSv3 mounts are made from the DGX-1 server to the storage system. Each DGX-1 server is configured with two NFS VLANs, with an IP interface on each VLAN. The FlexGroup volume on the AFF A800 system is mounted on each of these VLANs on each DGX-1, providing completely independent connections from the server to the storage system.
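To make the dual-mount idea concrete, here is a minimal Python sketch that builds one NFSv3 mount command per NFS VLAN. Everything specific in it is hypothetical: the VLAN labels, the storage IP addresses (taken from documentation-only ranges), the export path and the mount points are placeholders, not values from NetApp's paper. The script only prints the commands and does not run them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NfsPath:
    """One independent server-to-storage path (all values hypothetical)."""
    vlan_name: str
    storage_ip: str
    mount_point: str


EXPORT = "/flexgroup_vol"  # hypothetical FlexGroup export path

# Two NFS VLANs per DGX-1, each with its own storage-side IP interface.
PATHS = [
    NfsPath("nfs-vlan-a", "192.0.2.10", "/mnt/data_a"),
    NfsPath("nfs-vlan-b", "198.51.100.10", "/mnt/data_b"),
]


def mount_command(path: NfsPath, export: str = EXPORT) -> str:
    """Build (but do not execute) an NFSv3 mount command for one path."""
    return f"mount -t nfs -o vers=3 {path.storage_ip}:{export} {path.mount_point}"


commands = [mount_command(p) for p in PATHS]
for command in commands:
    print(command)
```

The same volume ends up mounted twice on each server, once per VLAN, which is what gives the independent connections described above.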

Containers containers containers

The DGX-1 server leverages GPU-optimised software containers from Nvidia GPU Cloud (NGC), including containers for all of the most popular DL frameworks. The NGC deep learning containers are pre-optimised at every layer, including drivers, libraries and communications primitives.

Trident is a NetApp dynamic storage orchestrator for container images that is fully integrated with Docker and Kubernetes. Combined with Nvidia GPU Cloud (NGC) and popular orchestrators like Kubernetes or Docker Swarm, it enables customers to deploy their AI/DL NGC Container Images onto NetApp storage.

Performance

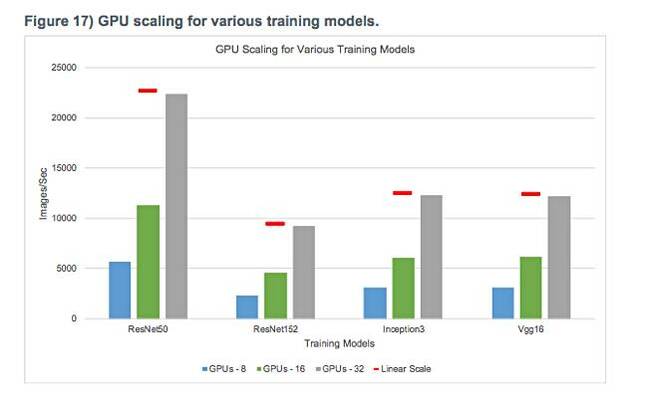

NetApp's technical paper contains performance information, with a summary chart showing results as the number of GPUs increase:

Everything appears to scale well. No numbers are provided but we've carefully inferred them for the Resnet-50 and Resnet-152 categories from the chart, and tabulated them with the known Pure AIRI and A700 numbers.

For now, the A800 numbers overlap the A700 and Pure AIRI numbers at the 8-GPU level and then scale out through 16 to 32 GPUs. Upcoming work by Pure could well provide company in the latter cells:

Resnet-50:

| | 1 GPU | 2 GPUs | 4 GPUs | 8 GPUs | 16 GPUs | 32 GPUs |
| --- | --- | --- | --- | --- | --- | --- |
| Pure AIRI | 346 | 667 | 1335 | 2540 | | |
| NetApp A700 | 321 | 613 | 1131 | 2048 | | |
| NetApp A800 | | | | 6000 | 11200 | 22500 |

Resnet-152:

| | 1 GPU | 2 GPUs | 4 GPUs | 8 GPUs | 16 GPUs | 32 GPUs |
| --- | --- | --- | --- | --- | --- | --- |
| Pure AIRI | 146 | 287 | 568 | 1122 | | |
| NetApp A700 | 136 | 266 | 511 | 962 | | |
| NetApp A800 | | | | 2400 | 4100 | 9000 |
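One way to put a number on "scales well" is scaling efficiency: the measured result divided by what perfect linear scaling from the smallest tested configuration would give. The Python sketch below applies that to the tabulated figures. It assumes the three A800 values belong to the 8, 16 and 32-GPU columns, as the text above indicates, and bear in mind that the A800 numbers are themselves read off a chart. The function name is ours.

```python
# Scaling efficiency relative to each system's smallest tested GPU count.

RESULTS = {
    "Resnet-50": {
        "Pure AIRI": {1: 346, 2: 667, 4: 1335, 8: 2540},
        "NetApp A700": {1: 321, 2: 613, 4: 1131, 8: 2048},
        "NetApp A800": {8: 6000, 16: 11200, 32: 22500},
    },
    "Resnet-152": {
        "Pure AIRI": {1: 146, 2: 287, 4: 568, 8: 1122},
        "NetApp A700": {1: 136, 2: 266, 4: 511, 8: 962},
        "NetApp A800": {8: 2400, 16: 4100, 32: 9000},
    },
}


def scaling_efficiency(points: dict[int, float]) -> dict[int, float]:
    """Measured result divided by ideal linear scaling from the smallest run."""
    base_gpus = min(points)
    base_value = points[base_gpus]
    return {
        gpus: value / (base_value * gpus / base_gpus)
        for gpus, value in sorted(points.items())
    }


efficiency = {
    model: {system: scaling_efficiency(points) for system, points in systems.items()}
    for model, systems in RESULTS.items()
}

for model, systems in efficiency.items():
    print(model)
    for system, eff in systems.items():
        row = ", ".join(f"{gpus}-GPU: {e:.0%}" for gpus, e in eff.items())
        print(f"  {system}: {row}")
```

Because the A800 baseline is the 8-GPU run while the other two start from a single GPU, the percentages describe how each system scales from its own starting point and are not directly comparable across rows.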

Here are the previous Pure and NetApp charts.

There is no price/performance data for ONTAP AI but we imagine millions of dollars are involved. AI at this level does not come cheap. ®