
Total recog: British AI makes universal speech breakthrough

Speechmatics bests world+dog at adding new languages. How did it do it?

Interview Speechmatics, the company founded by British neural network pioneer Tony Robinson, has made major advances in speech recognition.

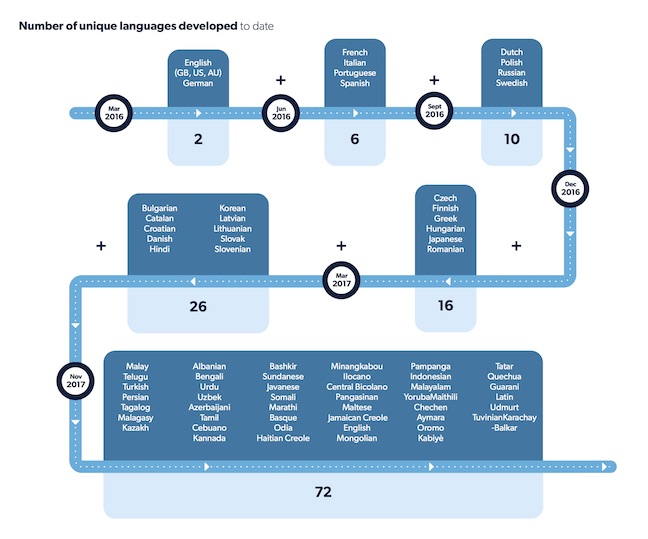

Speechmatics’ Automatic Linguist can now add a new language to its system automatically – without human intervention or tuning – in about a day, crunching through 46 new languages in just six weeks.

Consider that there are around 7,000 languages in the world, and that the top 10 languages cover less than half the world’s population. The top 100 most popular languages still only get you to about 85 per cent. So speeding things up is significant.

“It’s a technology of such remarkable ingenuity - I was astonished when I heard it was possible,” said Matthew Karas in our profile of Robinson earlier this year.

We invited Robinson, who pioneered the use of recurrent neural networks (the “first deep neural networks”) in speech recognition, to explain how the team built Project Omniglot. We also asked for his comments on the general field, including whether the AI business can get around the predicted “pause”.

Life, the universe, and everything

The secret, it turns out, is to start where nobody else does: by building a universal AI.

Each language is packaged as what Speechmatics calls a language pack, which contains both the acoustic model (what that language sounds like) and the set of probabilistic data that matches those sounds with words. The key was finding commonalities between languages, Robinson told us.


“You can either say ‘all languages look so different’. Or you come from the opposite way, and say: look we are writing software that is going to work in every language in the world. You start with that, and then say how best can I fit all the languages into the one framework. What’s a word - our own definition of a word? We want it to work within our framework.

“That’s completely what AL is about.”

Building a new language used to take months, because the process involved compiling pronunciation dictionaries and other canonical data sources, which were then applied to training data from real speakers.

Karas, a speech entrepreneur and a former student of Robinson’s at Cambridge University, told us: “Then, the training data used to be marked up phonetically, which was only partially automated - requiring laborious manual correction.

“The waveform is in the same format whatever language you have. But the sounds can often differ. There’s a lot of commonality in the phonemes every language uses. That gets us to sounds. The other bit is what words follow other words. We found defining what a word was gets quite interesting having such a wide variety of languages. It’s not trivial.”
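In textbook terms, “what words follow other words” is the job of a statistical language model, and the simplest kind counts adjacent word pairs (bigrams). The Python sketch below shows only that generic idea, not Speechmatics' own code. The function name `train_bigrams` and the toy corpus are made up for illustration, and splitting text on spaces is an assumption that breaks down for languages written without them, such as Chinese, which hints at why defining a word is “not trivial”.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Estimate P(next word | previous word) by counting adjacent word pairs.

    Hypothetical toy helper: maximum-likelihood estimates with no smoothing.
    Words are split on whitespace (an assumption), and "<s>" marks the sentence start.
    """
    pair_counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split()
        for prev, nxt in zip(words, words[1:]):
            pair_counts[prev][nxt] += 1

    # Normalise each row of counts into a probability distribution.
    model = {}
    for prev, counts in pair_counts.items():
        total = sum(counts.values())
        model[prev] = {word: count / total for word, count in counts.items()}
    return model


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "a dog sat on the mat",
]
model = train_bigrams(corpus)
print(model["the"])  # {'cat': 0.4, 'mat': 0.4, 'fish': 0.2}
```

Here “the” is followed by “cat” twice, “mat” twice and “fish” once, so those words get probabilities of 2/5, 2/5 and 1/5. Production systems smooth these estimates so that unseen word pairs don't get zero probability.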

“Because it’s machine learning, we have a different definition of what a ‘word’ is internally, but it doesn’t matter at the end.”

Different languages present different challenges. In tonal languages such as Chinese, where pitch distinguishes otherwise identical syllables, the system must often infer meaning from context using the probability tables.

“Yes, you can have tiny differences in sound - but then you can be over a phone or be in a restaurant such that you can’t tell those differences in sound anyway - so you have to go on context. It’s all probabilities.”
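“It’s all probabilities” has a standard textbook form: choose the word that maximises the acoustic likelihood of the sound multiplied by the language model's probability of that word in context. The Python sketch below illustrates that generic scoring rule only. The `best_word` helper and every probability in it are invented, and Speechmatics' neural engine is not claimed to work this way. English homophones stand in for sounds that a phone line or a noisy restaurant would blur.

```python
import math


def best_word(prev_word, acoustic_scores, bigram_probs, floor=1e-6):
    """Pick the candidate maximising log P(sound | word) + log P(word | prev_word).

    acoustic_scores: candidate word -> P(sound | word), from a hypothetical acoustic model.
    bigram_probs: (prev_word, word) -> P(word | prev_word), from a hypothetical language model.
    floor: crude stand-in probability for unseen word pairs (real systems smooth properly).
    """
    def score(word):
        lm_prob = bigram_probs.get((prev_word, word), floor)
        return math.log(acoustic_scores[word]) + math.log(lm_prob)

    return max(acoustic_scores, key=score)


# "write" and "right" sound identical, so the acoustic model alone cannot separate them.
acoustic = {"write": 0.5, "right": 0.5}
lm = {
    ("please", "write"): 0.02,
    ("please", "right"): 0.001,
    ("turn", "right"): 0.05,
    ("turn", "write"): 0.0001,
}
print(best_word("please", acoustic, lm))  # write
print(best_word("turn", acoustic, lm))    # right
```

Adding log probabilities rather than multiplying raw ones keeps long utterances from underflowing to zero; for a single word the two choices pick the same winner.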

Speechmatics can draw on the linguistic patterns identified in other languages to make the process significantly faster than the industry standard, the company explained.

Besides adding new languages faster, Speechmatics is also boasting about its accuracy. In two weeks, “using minimal data sets”, Speechmatics built a Hindi model with around 90 per cent accuracy, and it made 23 per cent fewer errors than Google’s Hindi transcription service.
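The company's figures don't say how accuracy was measured. The usual yardstick in speech recognition is word error rate (WER), and “90 per cent accuracy” is often read as roughly 10 per cent WER, though that reading is an assumption here. “23 per cent fewer errors” is also a relative figure, not 23 percentage points. A minimal Python sketch of both calculations follows. The helper names are hypothetical and the sentences and error counts are illustrative, not Speechmatics or Google data.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed with a word-level edit-distance (Levenshtein) table."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j]: fewest edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match when cost == 0)
            )
    return d[len(ref)][len(hyp)] / len(ref)


def relative_reduction(baseline_errors: float, new_errors: float) -> float:
    """Fraction by which new_errors undercuts baseline_errors (0.23 means 23 per cent fewer)."""
    return (baseline_errors - new_errors) / baseline_errors


# One substitution ("the" -> "a") in a six-word reference gives a WER of 1/6.
print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
# 77 errors against a rival's 100 on the same test set is a 23 per cent relative reduction.
print(relative_reduction(100, 77))
```

A relative comparison like this is only meaningful when both systems are scored on the same test audio and reference transcripts.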

Talk to Robinson for any length of time and you realise optimisation is an obsession.

“I like taking things apart, yes. That’s the bit I like - making it run faster and more accurately,” he said.

He ordered a tear-down and redesign last year, prompted, as he explained to us recently, by rapid developments in platforms and new techniques. A blog post by Remi Francis from the company also explains how the British upstart takes a different approach.

The universal approach also informs the way Speechmatics approaches the market. It currently offers its services as a utility, either in the cloud, or as an on-premises or on-device install. Robinson didn’t sound keen on the “bespoke” business model.

“One way is to say we’ll take one market segment, and do a lot of tuning for a few big customers, and it will work a bit better that way. We don’t like that. We have just one engine: the machine learning approach means it will get better with time.”