
What did OVH learn from 24-hour outage? Water and servers do not mix

Coolant leak crashed VNX array at web host's Paris data centre

An external water-cooling leak crashed a Dell EMC VNX array at an OVH data centre in Paris and put more than 50,000 websites out of action for 24 hours.

OVH is the world's third-largest internet hosting company, with 260,000 servers in 20 data centres across 17 countries hosting some 18 million web applications.

The failure took place at around 7pm on June 29 in OVH's P19 data centre. P19 was OVH's first data centre, opened in 2003. It has since been surpassed in size by the Gravelines data centre, the largest in Europe, which has capacity for 400,000 servers.

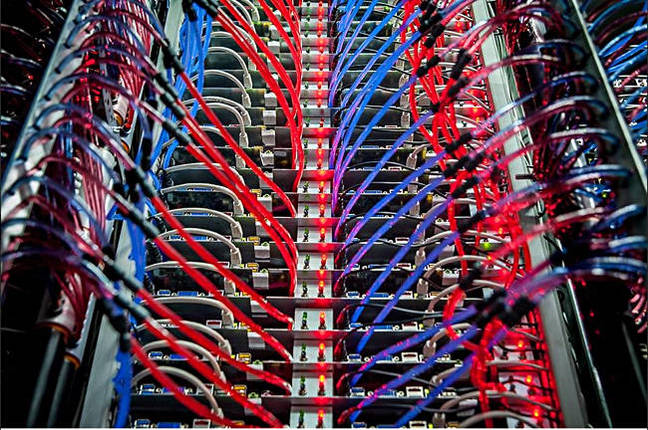

OVH has developed its own liquid-cooling concept, which is used in the P19 facility. Coolant circulates through the centre of the server racks and other components, cooling them via component-level heat exchangers connected to a top-of-rack water-block heat exchanger. The heated water is then cooled by thermal exchange with groundwater. The scheme saves electricity because it avoids the need for air conditioning.

OVH rack liquid cooling

According to the incident log, some of P19's gear sits in the basement, which makes cooling with outside air problematic. Hence the water-cooling development.

OVH later bought several VNX 5400 arrays from EMC. The one in question had 96 SSDs in three chassis, 15 shelves of local disk, and the standard active-active pair of controllers. The host says: "This architecture is designed to ensure local availability of data and tolerate failures of both data controllers and disks."

Since then it has adopted non-proprietary commodity storage built on Ceph and ZFS at Gravelines and is migrating off the proprietary gear. The affected array in Paris, one of two there, was not long for this world. The two arrays served as database storage, holding the data behind the hosted websites' dynamic pages, information about users and, in the case of blogs, article text and comments.

The incident account says: "At 6:48pm, Thursday, June 29, in Room 3 of the P19 datacenter, due to a crack on a soft plastic pipe in our water-cooling system, a coolant leak causes fluid to enter the system.

"One of the two proprietary storage bays (racks) was not cooled by this method but was in close proximity. This had the direct consequence of the detection of an electrical fault leading to the complete shutdown of the bay."

OVH admits installing them in the same room as the water-cooled servers was a mistake. "We made a mistake in judgment, because we should have given these storage facilities a maximum level of protection, as is the case at all of our sites."

Fault on fault

The crisis was then compounded by a fault in the audio warning system. Probes that detect liquid in a rack are supposed to trigger an audio message broadcast across the data centre. However, an update adding multi-language support had failed, so the technicians were alerted to the leak late and arrived 11 minutes after it began.

At 6:59pm they tried to restart the array. By 9:25pm they still had not succeeded, so they decided to run two plans in parallel: keep trying to restart the failed array (Plan A) while restoring its data from backups onto a second system (Plan B).

Plan A

Dell EMC support was called at 8pm and eventually restarted the array, but it stopped 20 minutes later when a safety mechanism was triggered. The OVH techs therefore decided to fetch a third VNX 5400 from a Roubaix site and move the failed machine's disk drives into this new chassis, which would supply its own power modules and controllers.

The system from Roubaix arrived at 4:30am, and all the failed system's disks had been moved over by 6am. The system was fired up at 7am but, disaster, the data on the disks was still inaccessible. Dell EMC support was contacted again at 8am and an on-site visit was arranged.

Plan B

Plan B relied on a daily backup, OVH noting: "This is a global infrastructure backup, carried out as part of our Business Recovery Plan, and not the snapshots of the databases that our customers have access to in their customer space.

"Restoring data does not only mean migrating backup data from cold storage to a free space of the shared hosting technical platform. It is about recreating the entire production environment."

To restore the data, OVH therefore had to:

- Find available space on existing servers at P19

- Migrate a complete environment of support services (VMs running the databases, with their operating systems, their specific packages and configuration)

- Migrate data to the new host infrastructure

This process had been tested in principle, but never at the scale of 50,000 websites. A procedure was scripted and, at 3am the next day, VM cloning from a source template began.

By 9am, 20 per cent of the instances had been recovered. The work went on for hours more until, in OVH's words, "at 23:40, the restoration of the [last] instance ends, and all users find a functional site, with the exception of a few users whose database was hosted on MySQL 5.1 instances and was restored under MySQL 5.5."

Hindsight

The disaster recovery procedures for the affected array were clearly inadequate. Under the circumstances, OVH's technical support staff did a heroic job, but they should not have had to.

The VNX array was in the wrong room, and beyond that it had no failover arrangements at all. Active DR planning and testing were not up to the job.

Communication with affected users was criticised, and OVH took this on the chin. "About the confusion that surrounded the origin of the incident – namely a coolant leak from our water-cooling system – we do our mea culpa."

And what have we learned?

- Do not mix storage arrays and water

- Do full DR planning and testing for all critical system components

- Repeat at regular intervals as system components change

- Do not update critical system components unless the update procedure has been bullet-proof tested
