
DeepMind takes a shot at teaching AI to reason with relational networks

Reasoning is one piece of the puzzle of general intelligence

Analysis The ability to think logically and to reason is key to intelligence. Replicating it in machines would no doubt make AI smarter.

But it’s a difficult problem, and current deep learning methods aren’t yet up to it. Deep learning is good at processing information, but it can struggle with reasoning.

Enter a new player: relational networks, or RNs.

The latest paper by DeepMind, Alphabet’s British AI outfit, attempts to enable machines to reason by tacking RNs onto convolutional neural networks and recurrent neural networks, which are traditionally used for computer vision and natural language processing respectively.

“It is not that deep learning is unsuited to reasoning tasks – more that the correct deep learning architectures, or modules, did not exist to enable general relational reasoning. For example, convolutional neural networks are unparalleled in their ability to reason about local spatial structure – which is why they are commonly used in image recognition models – but may struggle in other reasoning tasks,” a DeepMind spokesperson told The Register.

RNs are described as “plug-and-play” modules. Their architecture constrains the network to focus on the relationships between pairs of objects. An RN can be thought of as similar to a graph network, where the nodes are objects and the edges connecting them are the relationships between them.
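For readers who want the maths, Santoro et al’s paper writes the RN as a composite function over a set of objects $O = \{o_1, \ldots, o_n\}$, where $g_\theta$ and $f_\phi$ are multilayer perceptrons with learned parameters $\theta$ and $\phi$:

$$
\mathrm{RN}(O) = f_{\phi}\left( \sum_{i,j} g_{\theta}(o_i, o_j) \right)
$$

Here $g_\theta$ computes a "relation" for one pair of objects, and $f_\phi$ turns the summed relations into an output. Because the same $g_\theta$ is applied to every pair and the results are added up, the output does not depend on the order in which the objects are listed. For question answering, the paper also feeds a question embedding $q$ into every pair, giving $g_\theta(o_i, o_j, q)$.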

In the CLEVR dataset, the network is shown scenes containing several objects of different shapes, sizes, colours and materials, and is asked a series of questions that test its visual reasoning capabilities:

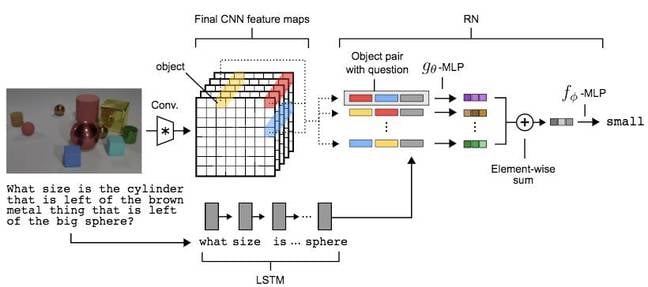

Different neural network components are used to solve a single task (Image credit: Santoro et al)

To answer the relational question above, the RN has to compare the sizes of the objects with that of the “brown metal thing” and consider only the cylinder. First, a convolutional neural network picks out the objects in the scene, while a long short-term memory (LSTM) network processes the question and passes it to the RN.
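The RN never receives neatly labelled objects. As we read Santoro et al’s paper, each cell of the CNN’s final feature map is treated as one "object", and its feature vector is tagged with coordinates saying where in the image it sits. The plain-Python sketch below illustrates that idea only; the helper name `feature_map_to_objects` is hypothetical, and scaling the coordinates to the range [-1, 1] is our assumption rather than DeepMind’s code.

```python
def feature_map_to_objects(feature_map):
    """Turn a CNN feature map into a list of RN 'objects' (hypothetical helper).

    feature_map[r][c] is the list of k channel values at row r, column c.
    Each cell becomes one object: its k features plus (x, y) coordinates.
    Scaling the coordinates to [-1, 1] is an assumption for illustration.
    """
    rows = len(feature_map)
    objects = []
    for r, row in enumerate(feature_map):
        cols = len(row)
        for c, cell in enumerate(row):
            # Map the row/column index onto [-1, 1] so position becomes part of the object
            y = -1.0 + 2.0 * r / (rows - 1) if rows > 1 else 0.0
            x = -1.0 + 2.0 * c / (cols - 1) if cols > 1 else 0.0
            objects.append(list(cell) + [x, y])
    return objects


# A toy 2x3 feature map with k = 2 channels per cell
toy_map = [
    [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],
    [[0.7, 0.8], [0.9, 1.0], [1.1, 1.2]],
]
objs = feature_map_to_objects(toy_map)
print(len(objs))  # 6
print(objs[0])    # [0.1, 0.2, -1.0, -1.0]
```

A real CNN would produce many more channels per cell, but the principle is the same: the RN sees a flat set of position-tagged vectors and is left to work out which of them matter.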

The question embedding produced by the LSTM lets the RN focus on the relevant pairs of objects and calculate their relation to spit out an answer. It’s a clever trick that allows a single learned function to work out every relation: researchers do not have to code one function that looks at shape and another for size. That means RNs can be more data-efficient.
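To make the "single function" point concrete, here is a minimal plain-Python sketch of a question-conditioned RN. It is not DeepMind’s implementation: `g` and `f` are single random ReLU layers rather than trained multilayer perceptrons, and the names `dense_relu`, `question_conditioned_rn` and `random_matrix` are hypothetical. What it does show is that one set of `g` weights scores every pair of objects, with the question vector appended to each pair, so the same parameters work whether the scene holds three objects or six.

```python
import itertools
import random


def dense_relu(weights, x):
    """One fully connected layer with ReLU, relu(W x); weights is a list of rows."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]


def question_conditioned_rn(objects, question, g_weights, f_weights):
    """Sum g(o_i, o_j, q) over all ordered pairs (i, j), then apply f to the sum.

    The same g_weights score every pair, whatever the relation (size, shape, ...).
    """
    summed = [0.0] * len(g_weights)
    for o_i, o_j in itertools.product(objects, repeat=2):
        # Concatenate both objects and the question, then apply the shared g
        relation = dense_relu(g_weights, o_i + o_j + question)
        summed = [s + r for s, r in zip(summed, relation)]
    return dense_relu(f_weights, summed)


def random_matrix(n_rows, n_cols, rng):
    """Random weights in [-1, 1]; stands in for parameters a real RN would learn."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_cols)] for _ in range(n_rows)]


rng = random.Random(0)
obj_dim, q_dim, hidden, n_answers = 4, 3, 8, 2
g_w = random_matrix(hidden, 2 * obj_dim + q_dim, rng)
f_w = random_matrix(n_answers, hidden, rng)
question = [rng.uniform(-1.0, 1.0) for _ in range(q_dim)]

# The same parameters handle a scene with 3 objects or one with 6
for n in (3, 6):
    scene = random_matrix(n, obj_dim, rng)
    print(n, question_conditioned_rn(scene, question, g_w, f_w))
```

Because the pairwise results are summed, shuffling the objects leaves the answer unchanged (up to floating-point rounding), which suits a scene that has no natural ordering.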

“State-of-the-art results on CLEVR using standard visual question answering architectures are 68.5 per cent, compared to 92.5 per cent for humans. But using our RN-augmented network, we were able to show super-human performance of 95.5 per cent,” DeepMind said in a blog post.

“We speculate that the RN provided a more powerful mechanism for flexible relational reasoning, and freed up the CNN to focus more exclusively on processing local spatial structure. This distinction between processing and reasoning is important,” DeepMind’s paper said.

Talk to me

The RN has also shown promising signs that it can reason with language. The bAbI dataset, popularized by Facebook’s AI research team, is composed of 20 question-answering tasks that evaluate deduction, induction and counting skills.

First a few facts are given, such as “Sandra picked up the football” and “Sandra went to the office,” before a question like “Where is the football?” is asked. The RN passed 18 out of 20 tasks, beating previous attempts that used Facebook’s memory networks and DeepMind’s differentiable neural computer.
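For text, the "objects" are sentences rather than image regions. In the paper, each support sentence is encoded by an LSTM and tagged with its position in the story. The sketch below mimics only the data preparation, under loud assumptions: the helper `facts_to_objects` is hypothetical, a simple bag-of-words count stands in for the LSTM, and the cap of 20 sentences with a one-hot position tag is our illustrative choice.

```python
def facts_to_objects(facts, vocab, max_facts=20):
    """Encode each support sentence as an RN object (hypothetical helper).

    A bag-of-words vector stands in for the per-sentence LSTM, and a one-hot
    tag records the sentence's position among the most recent max_facts facts.
    """
    recent = facts[-max_facts:]  # keep only the most recent sentences
    objects = []
    for position, sentence in enumerate(recent):
        words = sentence.lower().rstrip(".").split()
        bag = [float(words.count(w)) for w in vocab]
        tag = [1.0 if k == position else 0.0 for k in range(max_facts)]
        objects.append(bag + tag)
    return objects


vocab = ["sandra", "picked", "up", "the", "football", "went", "to", "office"]
story = ["Sandra picked up the football.", "Sandra went to the office."]
objs = facts_to_objects(story, vocab)
print(len(objs), len(objs[0]))  # 2 28
```

The position tag matters because the order of events decides the answer: the RN has to know that Sandra picked up the football before she went to the office.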

The two bAbI tasks it failed, “two supporting facts” and “three supporting facts,” are more complex, because the answer depends on combining two or three separate facts. The failures show just how primitive this research still is when it comes to giving machines the ability to reason.

It’s still early days, and DeepMind hopes to apply RNs to different problems such as modelling social networks and more abstract problem solving.

To make RNs more powerful, the team also wants to improve their computational efficiency, since the number of object pairs an RN must consider grows with the square of the number of objects. A hundred objects were tested, but more could be handled with hardware that allows more parallel computation.
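Some back-of-the-envelope arithmetic shows why hardware matters. Assuming the RN sums over every ordered pair of objects, including each object paired with itself (as the sum over $i$ and $j$ in the paper’s formulation suggests), the work grows quadratically. Each pair is scored independently, so the pairs can be split into batches and run in parallel. The helpers below are hypothetical, and the batch size of 4,096 is an arbitrary illustrative figure.

```python
def pair_evaluations(n_objects: int) -> int:
    """Number of g evaluations in one RN pass: every ordered pair (i, j), including i == j."""
    return n_objects * n_objects


def batches_needed(n_objects: int, pairs_per_batch: int) -> int:
    """How many parallel batches of pairs_per_batch pairs cover all pairs (ceiling division)."""
    pairs = pair_evaluations(n_objects)
    return (pairs + pairs_per_batch - 1) // pairs_per_batch


for n in (10, 100, 200, 1000):
    print(n, pair_evaluations(n), batches_needed(n, 4096))
# 10 100 1
# 100 10000 3
# 200 40000 10
# 1000 1000000 245
```

Doubling the number of objects quadruples the number of pairs, which is why scaling RNs to larger scenes leans so heavily on parallel hardware.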

The research was heavily inspired by ideas from symbolic AI, an approach holding that knowledge should be explicitly represented as facts and rules, which dominated the field until the late 1980s.

Murray Shanahan, a senior research scientist at DeepMind and professor of cognitive robotics at Imperial College London in the UK, believes that symbolic AI could make a comeback.

“I think there is an undercurrent of feeling in the machine learning community that some of the ideas of symbolic AI need to be incorporated in neural network architectures, and a fringe of researchers have been chasing this idea for years. Recently, though, the idea has been getting a bit more attention. It’s still only a beginning, but it’s a very promising one,” Shanahan told The Register.

Reasoning is one of many components that machines will have to master in order to solve the puzzle of general intelligence. And there are still several related unsolved problems, such as memory, attention and autonomy. ®