Gotta speed up HPC arrays. Flash or disk, flash or disk. Let's do both – Seagate

Hybrid set-up plus Nytro caching software sends small I/Os to SSD

Seagate’s ClusterStor 300N storage array for high-performance computing is a hybrid flash/disk array, lifting sequential bandwidth by 33 per cent and small, random I/O by up to 1,200 per cent.

The idea is that the 300N systems run mixed workloads much better than previous ClusterStor arrays by using flash to accelerate the I/O that disk handles relatively badly. Seagate has added SSDs to the media mix, plus software to handle them in conjunction with the disks. It claims applications will see a speed benefit of up to 12x from SSDs for small I/O, at a cost slightly above that of an HDD-only approach.

This should help ClusterStor compete more effectively against arrays such as those from DataDirect Networks, with that company’s burst buffer technology. A canned quote from Ken Claffey, Seagate VP and general manager of its HPC systems business, says: “Seagate’s ClusterStor 300N expands on our proven, engineered systems approach that delivers performance efficiency and value for HPC environments of any size, using a hybrid technology architecture to handle tough workloads at a fraction of the cost of all-flash approaches.”

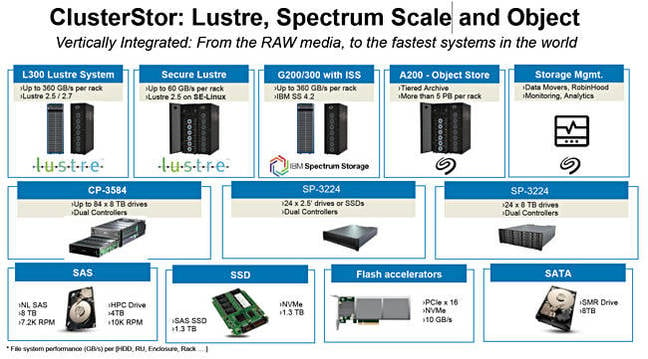

The ClusterStor array line came to Seagate with its 2013, $374m Xyratex acquisition, alongside its much-wanted disk drive testing equipment. The product line includes the 9000 Big Data rack system, and the 1500 Lustre parallel file system array (including the L300 variant). There is a near-equivalent G200 Spectrum Scale parallel file system array, and an A200 active archive object store which can be a backup target for the 1500 and G200.

Within the 300N line we have the L300N and G300N products, derivatives of the L300 and G200, and intended for use in Lustre and Spectrum Scale parallel file processing environments, meaning they potentially replace or sit alongside the existing L300 Lustre and G200 Spectrum Scale systems.

Seagate tells us the 300N improves sequential performance by 33 per cent, with up to 112GB/s per rack. It is also, the company says, the highest-density Lustre storage array at 720TB in 2U, making it possible to build the world's first 15PB 42U rack. Seagate calls these systems (in a somewhat over-the-top pronouncement) engineered solutions.
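The 15PB rack figure follows from the per-enclosure density quoted above. A quick back-of-the-envelope check, assuming (our assumption, not Seagate's statement) that every rack unit is given over to storage enclosures and that capacities use decimal units:

```python
# Figures quoted in the article.
RACK_UNITS = 42        # height of the rack in U
ENCLOSURE_UNITS = 2    # height of one storage enclosure in U
ENCLOSURE_TB = 720     # capacity of one enclosure in TB

# Assumption: the whole rack is filled with storage enclosures,
# leaving no space for switches or management units.
enclosures = RACK_UNITS // ENCLOSURE_UNITS
rack_pb = enclosures * ENCLOSURE_TB / 1000  # decimal TB -> PB

print(enclosures, rack_pb)  # 21 15.12
```

A real rack would give up some space to networking and management hardware, so the result is an upper bound rather than a shipping configuration.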

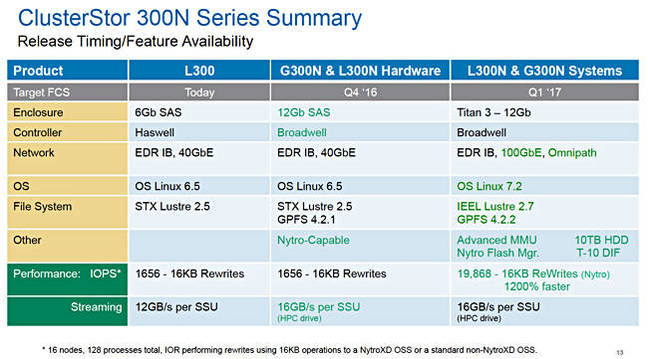

There is a 300N embedded application controller which uses a 14nm Broadwell CPU. It supports Intel’s 100Gbit/s Omni-Path, Mellanox InfiniBand or Ethernet links to the outside world and a 12Gbit/s internal SAS fabric, enabling up to 16GB/sec of sequential I/O per storage enclosure.

ClusterStor 300N application controller

A ClusterStor Nytro Intelligent I/O Manager provides transparent performance acceleration, inspecting incoming I/O requests and directing them to disk or flash as appropriate. Flash storage gets small-block, unaligned and read-modify-write workloads. The flash provides 100 per cent write acceleration and can be configured to accelerate reads as well.
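To make the routing idea concrete, here is a minimal sketch of such a policy. It is not Seagate's implementation: the `IORequest` type, the `route` function and both thresholds are hypothetical, and sending reads to disk unless read acceleration is switched on is our reading of the description.

```python
from dataclasses import dataclass

# Hypothetical thresholds: Seagate has not published the real cut-offs.
SMALL_IO_BYTES = 64 * 1024
ALIGNMENT_BYTES = 4096


@dataclass
class IORequest:
    offset: int                      # byte offset of the request
    length: int                      # request size in bytes
    is_write: bool
    read_modify_write: bool = False  # partial update of existing data


def route(req: IORequest, accelerate_reads: bool = False) -> str:
    """Return "flash" or "disk" for a single request."""
    # Assumption: reads only touch flash when read acceleration is enabled.
    if not req.is_write and not accelerate_reads:
        return "disk"
    small = req.length < SMALL_IO_BYTES
    unaligned = (req.offset % ALIGNMENT_BYTES != 0
                 or req.length % ALIGNMENT_BYTES != 0)
    if small or unaligned or req.read_modify_write:
        return "flash"
    # Large, aligned, streaming I/O is what disk handles well.
    return "disk"


print(route(IORequest(offset=0, length=4096, is_write=True)))
print(route(IORequest(offset=0, length=1 << 20, is_write=True)))
```

The first request is a small write and lands on flash; the second is a 1MiB aligned write and goes to disk.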

Flash-held data can be automatically flushed to disk (10TB SAS drives): as the SSDs fill, a background task de-stages the oldest data to HDD. Alternatively, as a configuration choice, data can be pinned in flash until an admin says otherwise.
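The de-staging behaviour can be sketched as an oldest-first eviction that skips pinned data. Everything here is illustrative: the `FlashTier` class, its high-watermark trigger and the block bookkeeping are our assumptions, not details Seagate has disclosed.

```python
from collections import OrderedDict


class FlashTier:
    """Toy flash tier that de-stages its oldest unpinned blocks to disk."""

    def __init__(self, capacity_bytes, high_watermark=0.8):
        self.capacity = capacity_bytes
        self.high = high_watermark   # hypothetical fill level that triggers de-staging
        self.used = 0
        self.blocks = OrderedDict()  # block_id -> (size, pinned), oldest first
        self.disk = []               # block ids already de-staged to HDD

    def write(self, block_id, size, pinned=False):
        # Rewriting a block makes it the newest entry again.
        if block_id in self.blocks:
            old_size, _ = self.blocks.pop(block_id)
            self.used -= old_size
        self.blocks[block_id] = (size, pinned)
        self.used += size
        self.destage()

    def destage(self):
        limit = self.capacity * self.high
        for block_id in list(self.blocks):  # iterate oldest first
            if self.used <= limit:
                break
            size, pinned = self.blocks[block_id]
            if pinned:
                continue                    # pinned data stays in flash
            del self.blocks[block_id]
            self.used -= size
            self.disk.append(block_id)


tier = FlashTier(capacity_bytes=100)
tier.write("a", 40, pinned=True)
tier.write("b", 30)
tier.write("c", 30)
print(tier.disk, list(tier.blocks))  # ['b'] ['a', 'c']
```

In the real array the flush runs as a background task; the sketch triggers it synchronously on each write to keep the example short.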

Large data blocks are sent to the array’s so-called GridRAID disk pool.

The system comes with a 2U, 24-drive System Management Unit (SMU) with 7 x 2.5-inch 10,000rpm drives and 5 x 2.5-inch 15,000rpm drives. There can be up to seven Scalable Storage Units (SSUs) in both the base and expansion racks. Base rack SSUs hold 2 or 4 (1.3TB SAS or NVMe) SSDs*, and up to 82 x 3.5-inch drives spinning at either 7,200rpm or 10,000rpm, the faster speed being required for GridRAID. Expansion rack SSUs hold up to 84 disk drives**.

PCIe x16 NVMe flash accelerators also appear on a Seagate configuration overview slide.

ClusterStor configuration overview slide

The L300N and G300N support Mellanox QDR/FDR/EDR InfiniBand, 40/100GbitE*** and Intel Omni-Path network connectivity.

The L300N has a Metadata Management Unit in addition to the System Management Unit. This holds 22 x 2.5-inch 10,000 rpm disk drives.

Mike Vildibill, VP for HPC Storage at HPE, issued a supportive canned quote: “The 300N offers the density, extreme bandwidth, low latency and simplified manageability that our customers demand in their HPC storage environments today.”

HPE, which is a ClusterStor reseller, is acquiring SGI, which is also a ClusterStor reseller.

Seagate says burst buffers are not POSIX-compatible and may require application software changes and proprietary compute client software.

Other news

The A200 Active Archive array, usable as a G200/G200N and L200/L200N backup device, gets 10TB drive support, meaning up to 6.5PB per rack. It also has improved orchestration facilities, with ClusterStor HSM collecting data from front-end ClusterStor arrays and pumping it into the archive tier. An active archive object storage tier for the G200 Spectrum Scale (GPFS) platform is coming in the next release.

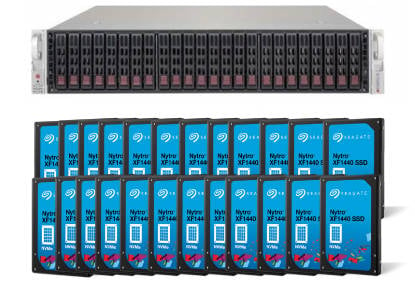

At its Salt Lake City SC16 booth, number 1209, Seagate is showing an NVMe over a Fabric array using Nytro flash drives, delivering 4.8 million IOPS with single-digit microsecond latency. We understand this is a 2U enclosure with 24 Seagate Nytro XF1440 NVMe SSDs. These are front-loaded and mounted vertically in three groups of eight.

Seagate NVMe over fabric array with 24 x Nytro SSDs.

Mellanox ConnectX-3 or ConnectX-4 network adapters are used, and they run at speeds of 25Gb/sec or faster. Seagate also has demo HAMR (Heat Assisted Magnetic Recording) disk drives on its stand to show its progress towards building 50TB+, 3.5-inch nearline disk drives.

Initial shipments of the ClusterStor 300N begin this quarter with general availability next quarter. The table above has more detailed system and component schedule information. ®

* Only 2 SSDs with L300N

** Only 82 with L300N expansion racks.

*** Only 40GbitE for the L300N