
Super-slow RAID rebuilds: Gone in a flash?

Rebuilding SSDs should be faster than spinning disk drives

Analysis RAID rebuilds are too slow with 4, 6, 8 and 10TB drives, so erasure coding is coming in to shorten failed-disk rebuild times and to reduce capacity overhead. What happens when SSDs are used instead of disk drives? Will RAID be more or less acceptable than with disk drives?

Intuitively we expect a flash array to recover faster from a component SSD failure than a disk array would recover from a disk failure. But why and how much faster?

RAID background

The background is that, when a disk fails in an array, the array controller hardware or software rebuilds the contents of the failed disk on another disk while continuing its normal work of reading and writing data on the other disks for applications on connected servers. The rebuild time is affected by the amount of controller resource dedicated to the rebuild, the processing time needed, the time needed to read the surviving data and parity from the other disks, and the time needed to write the recovered data to the new disk.

RAID 1 and 5 can recover from a single disk failure, with RAID 6 offering protection against two drive failures. Two disks are the minimum needed for RAID 1, three for RAID 5 and four for RAID 6. In RAID 5 and RAID 6 the parity blocks are distributed across all the drives to provide more uniform IO times.

RAID incurs a capacity overhead. RAID 1, mirroring, requires a 100 per cent overhead, as a second disk mirrors the first. Other schemes, such as RAID 5 and RAID 6, split data into stripes across the drives and add one (RAID 5) or two (RAID 6) calculated parity blocks to each stripe, so that if a drive fails, its contents can be reconstructed from the surviving data blocks and parity.

When the failed drive is replaced, the original contents are recovered and written to it.
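To make the parity idea concrete, here is a minimal Python sketch of single-parity (RAID 5-style) reconstruction. It assumes the parity block is the bytewise XOR of the data blocks in a stripe, the usual RAID 5 approach; the function names and tiny four-byte blocks are illustrative only, and real controllers rotate parity across drives and work on far larger blocks.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    # zip(*blocks) yields one tuple of byte values per position
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks: list[bytes]) -> list[bytes]:
    """Return the data blocks plus one XOR parity block (one stripe)."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild_block(stripe: list[bytes], failed_index: int) -> bytes:
    """Recover the block on the failed drive by XORing every surviving block."""
    survivors = [blk for i, blk in enumerate(stripe) if i != failed_index]
    return xor_blocks(survivors)


# Three data blocks plus one parity block: one stripe of a four-drive RAID 5 array.
stripe = make_stripe([b"ABCD", b"EFGH", b"IJKL"])
recovered = rebuild_block(stripe, failed_index=1)
print(recovered == stripe[1])  # True
```

Note that every surviving block in the stripe must be read to rebuild one lost block, which is why rebuild time depends on reading from the other drives as well as writing to the replacement.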

RAID 5 example

In a 6 x 400GB array with RAID 5 there is 2.4TB of raw capacity and 2TB of usable capacity, meaning an overhead of about 16.7 per cent of raw capacity. You need a minimum of three drives, and one drive's worth of capacity holds parity rather than data (the parity itself is spread across all the drives), so usable capacity is that of N-1 drives, where N is the number of drives in the array.

With a 10 x 400GB array there is 4TB of raw capacity and 3.6TB of usable capacity, a 10 per cent overhead.

RAID 6 example

In a 4 x 300GB array with RAID 6 there is 1.2TB raw capacity and 600GB usable – a 50 per cent overhead. With 8 drives there is 2.4TB raw capacity and 1.8TB usable – a 25 per cent overhead.

With 10 drives there is 3TB raw and 2.4TB usable – a 20 per cent overhead.

These RAID 5 and 6 capacity overhead numbers are the same for disk drives and solid state drives.
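The capacity arithmetic above can be reproduced with a short Python sketch. It assumes RAID 5 gives up one drive's worth of capacity and RAID 6 two drives' worth, and it expresses overhead as a share of raw capacity, the basis used for the RAID 6 figures and the 10-drive RAID 5 figure. The function name is illustrative.

```python
def raid_capacity(drives: int, drive_gb: float, parity_drives: int) -> tuple[float, float, float]:
    """Return (raw_gb, usable_gb, overhead_pct) for RAID 5 (parity_drives=1)
    or RAID 6 (parity_drives=2). Overhead is a share of raw capacity."""
    # RAID 5 needs at least three drives, RAID 6 at least four
    if drives < parity_drives + 2:
        raise ValueError("too few drives for this RAID level")
    raw = drives * drive_gb
    usable = (drives - parity_drives) * drive_gb
    overhead_pct = 100 * (raw - usable) / raw
    return raw, usable, overhead_pct


examples = [
    ("RAID 5", 6, 400, 1),
    ("RAID 5", 10, 400, 1),
    ("RAID 6", 4, 300, 2),
    ("RAID 6", 8, 300, 2),
    ("RAID 6", 10, 300, 2),
]
for label, n, size, p in examples:
    raw, usable, pct = raid_capacity(n, size, p)
    print(f"{label} {n} x {size}GB: raw {raw}GB, usable {usable}GB, overhead {pct:.1f}%")
```

On this raw-capacity basis the six-drive RAID 5 example works out to about 16.7 per cent; measured against usable capacity instead, its 400GB of parity is 20 per cent.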

RAID 5 can recover from a single failed drive in an array, with RAID 6 offering protection against two disk failures. As rebuild times lengthen in step with rising disk capacity, so does the chance that a second disk will fail while a rebuild is under way, and the chance that an unrecoverable read error will occur during the rebuild. In a RAID 5 array, either event means data is lost.
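A rough way to see why bigger drives raise the unrecoverable read error (URE) risk is to treat every bit read during a rebuild as an independent trial. This Python sketch assumes a URE rate of one per 10^14 bits, a figure commonly quoted on consumer SATA disk datasheets (enterprise drives often quote 10^15). Real errors are not independent and vary by drive, so the output illustrates the trend rather than predicting anything; the function name is hypothetical.

```python
import math


def prob_ure_during_rebuild(tb_read: float, ure_per_bits: float = 1e14) -> float:
    """Probability of at least one URE while reading tb_read terabytes.

    Assumes independent bit errors at 1 per ure_per_bits bits
    (an assumed datasheet figure, not a measured one).
    """
    bits = tb_read * 1e12 * 8  # decimal terabytes to bits
    # 1 - (1 - p)**bits, computed stably for tiny p via log1p/expm1
    return -math.expm1(bits * math.log1p(-1.0 / ure_per_bits))


# E.g. rebuilding one 4TB drive in a four-drive RAID 5 array means reading 12TB.
for tb in (4, 12, 24, 40):
    print(f"{tb:>3}TB read: {prob_ure_during_rebuild(tb):.0%} chance of at least one URE")
```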

With 4TB and larger disk drives, and controllers able to devote only part of their resources to rebuilds, rebuilds can take many days, extending the risk window to unacceptable lengths.

Disk vs SSD failures

When an SSD fails, we intuitively think the RAID rebuild time should be shorter than for a disk. So will erasure codes then be needed as much?

There are two questions here: SSD vs HDD RAID rebuild times and RAID-vs-erasure code rebuild times. We’ll look at HDD vs SSD rebuild times here.

In a RAID disk rebuild, part of the time is disk access latency. What percentage of, say, a near-10-hour RAID rebuild of a failed 4TB disk drive is taken up by disk latency? We can then ask what percentage of a failed 4TB SSD's data rebuild will be taken up by SSD access time. Will SAS, SATA or NVMe SSD access influence the overall SSD rebuild time?

To start this comparison, we were advised that the time to rebuild a disk in RAID 1 (mirroring) must be at least the capacity of the disk divided by its sequential write bandwidth. Okay, then: assuming an 80MB/sec bandwidth and a 72GB disk, we get 72,000MB / 80MB/sec = 900 seconds (a quarter of an hour, 15 minutes).

Of course this ignores the fact that, in real life, a disk array/RAID controller is dealing with normal IO and can’t spend 100 per cent of its resources on the RAID rebuild process. But let’s set that aside for now so that we can get a basic idea of drive rebuild times as capacity rises.
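The rule of thumb above reduces to a one-line formula. This Python sketch applies it in decimal units (1GB = 1,000MB) and adds a hypothetical `rebuild_share` parameter to show how the time stretches when the controller can give the rebuild only part of its throughput, the real-life factor set aside above.

```python
def min_rebuild_seconds(capacity_gb: float, write_mb_per_s: float,
                        rebuild_share: float = 1.0) -> float:
    """Lower bound on rebuild time: capacity / sequential write bandwidth.

    rebuild_share is an assumed fraction of throughput given to the rebuild;
    1.0 reproduces the idealised figure in the text.
    """
    if not 0 < rebuild_share <= 1:
        raise ValueError("rebuild_share must be in (0, 1]")
    capacity_mb = capacity_gb * 1000  # decimal units, as drive vendors use
    return capacity_mb / (write_mb_per_s * rebuild_share)


print(min_rebuild_seconds(72, 80))        # 900.0
print(min_rebuild_seconds(72, 80, 0.25))  # 3600.0
```

Giving the rebuild a quarter of the bandwidth quadruples the minimum time, which is how idealised figures of hours turn into the multi-day rebuilds described earlier.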

Rebuild table

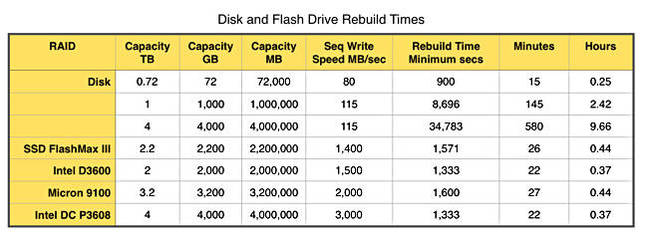

We can construct a table for different disk capacities and write bandwidth, and then take the same approach with SSDs to compare the resulting minimum rebuild times.

We can assume that, other things being equal, like RAID level, hardware or software RAID, and capacity, the processing time for a disk RAID rebuild will be the same as the processing time for an SSD RAID rebuild.

We chose SSDs with the fastest bandwidth for this exercise. Many SSDs have bandwidths in the 800MB/sec to 1GB/sec range and so will rebuild more slowly.

We see from this table that a 4TB disk drive with a 115MB/sec write bandwidth needs almost 10 hours minimum for a rebuild. Calculated the same way, SSD rebuilds are much faster, as the table shows.

A 4TB Intel DC P3608 has a 3GB/sec sequential bandwidth, so its minimum rebuild time is 22 minutes, about 96 per cent less than for our 4TB HDD. The time pressure encouraging a switch from RAID to erasure coding will therefore be much less.
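The headline comparison can be checked with the same capacity-over-bandwidth formula. This Python sketch uses decimal units and the bandwidth figures quoted in the text; it computes idealised minimums only, ignoring controller load and parity processing. The helper name is illustrative.

```python
def rebuild_minutes(capacity_tb: float, write_mb_per_s: float) -> float:
    """Minimum rebuild time in minutes: capacity / sequential write bandwidth."""
    return capacity_tb * 1_000_000 / write_mb_per_s / 60


hdd = rebuild_minutes(4, 115)   # 4TB disk at 115MB/sec
ssd = rebuild_minutes(4, 3000)  # 4TB SSD at 3GB/sec (the P3608 figure in the text)
print(f"HDD: {hdd / 60:.1f} hours, SSD: {ssd:.1f} minutes")
print(f"SSD time is {100 * (1 - ssd / hdd):.1f}% less, {hdd / ssd:.1f}x faster")

# Slower SSDs in the 800MB/sec to 1GB/sec range mentioned above
for bw in (800, 1000):
    print(f"4TB SSD at {bw}MB/sec: {rebuild_minutes(4, bw):.0f} minutes")
```

Computed this way the saving is about 96 per cent and the SSD finishes roughly 26 times sooner; even the slower SSDs rebuild in well under two hours.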

What happens with different RAID levels?

The minimum drive rebuild time, based on sequentially writing data to the drive, will be the same. But the processing time will differ, as different RAID schemes have more or less parity data to compute.

Rebuilding SSDs with RAID should be quicker overall because reading data from the remaining SSDs will be quicker than reading data from other disk drives.

For a given RAID level and controller, the processing time for rebuilding SSDs or disk drives should be the same.

On the basis of the numbers above, RAID schemes are acceptable for protecting against SSD failures: at 4TB, an SSD's minimum rebuild time is about one twenty-sixth of a disk drive's. Any need to use erasure coding on SSDs is going to be much less than with disk. ®