
Storage with the speed of memory? XPoint, XPoint, that's our plan

And if it can't do it, (other) ReRAM can

Analysis: Since the virtual dawn of computing, storage – where data puts its feet up when it’s at home – has been massively slower than memory, where data puts on its trainers and goes for a quick run.

That massive access speed gap has been getting narrower and narrower with each storage technology advance: paper tape, magnetic tape, disk drives, and flash memory. Now it is set to shrink again with even faster non-volatile solid-state memory, led by 3D XPoint.

This will have far-reaching effects on servers and on storage as system hardware and software change to take advantage of this much faster – near-memory speed in fact – storage.

Memory contents are volatile, or ephemeral: they are lost when power is turned off. Storage contents, whether held in NAND, on disk or on tape, are persistent, or non-volatile, because they survive the device being powered off.

Paradoxically, storage media that doesn’t move is faster than storage media that does: solid-state, non-volatile NAND flash memory is much faster to access than rotating disk or streaming tape.

How much faster?

Nanoseconds and microseconds

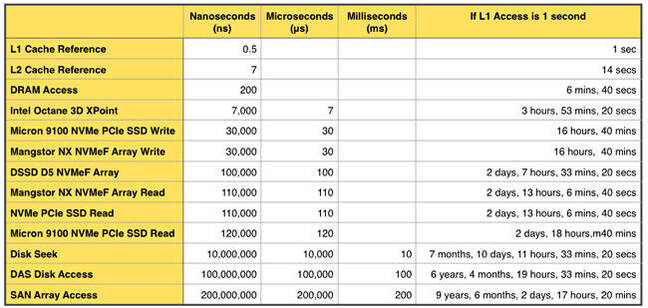

A memory access can take 200 nanoseconds*. This is slow by CPU cache-access speed standards, with a level 1 processor cache access taking half a nanosecond, 400 times faster, and a level 2 cache access needing seven nanoseconds.

A disk-drive seek, the time for the read-write head to move across the platter and reach the right track area, can take 10 million nanoseconds, which is 10 milliseconds or 10,000 microseconds. That makes it 50,000 times slower than a 200-nanosecond memory access, between four and five orders of magnitude.

A solid state drive (SSD) is vastly faster than this. Micron’s latest 9100, implemented as an NVMe protocol PCIe bus card, has a 120 microsecond read-access latency and a 30 microsecond write-access latency. That makes its reads more than 80 times faster than a disk seek, but writes are still 150 times slower than a DRAM access and reads 600 times slower.

Why the asymmetry between read and write access? We understand it is because writes are cached in the SSD’s DRAM buffer, whereas reads generally have to go to the slower NAND.

Although NAND is much faster than disk, it is still a great deal slower than memory, so the essential coding rule of thumb that IO is slower than a memory access still stands. Coders keen to shorten application run times will therefore try to restrict the amount of IO their code carries out, while server and storage system engineers will use NAND caching to get over disk data delays and DRAM caching to get over NAND access delays.

Servers with multi-socket, multi-core CPUs are inherently liable to be in IO wait states, as the storage subsystems, hundreds of times slower than memory at best, struggle to read and write data fast enough, meaning the processors wait, doing nothing, while turgidly slow storage does its thing.

The gaping access time gulf

If we treat an L1 cache reference as taking one second, then, on that measurement scale, a 10-millisecond disk seek takes 231 days, 11 hours, 33 minutes and 20 seconds, more than seven and a half months. Reading from Micron’s 9100 SSD is much faster, taking two days, 18 hours and 40 minutes. That’s good by disk standards, but it’s still torturously slow next to a DRAM access, which takes six minutes and 40 seconds.
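The "L1 reference takes one second" scale is simple arithmetic: divide each latency by the 0.5-nanosecond L1 figure and read the result as seconds. The Python sketch below does this for the indicative latencies quoted earlier (0.5 ns L1, 200 ns DRAM, 120 µs Micron 9100 read, 10 ms disk seek); the function name `human_scale` is ours, for illustration only.

```python
# Indicative latencies in nanoseconds, as quoted in this article.
LATENCY_NS = {
    "L1 cache": 0.5,
    "DRAM": 200,
    "Micron 9100 read": 120_000,
    "Disk seek": 10_000_000,
}
L1_NS = LATENCY_NS["L1 cache"]


def human_scale(latency_ns: float) -> str:
    """Re-express a latency on a scale where one L1 cache hit takes one second."""
    total = round(latency_ns / L1_NS)  # whole "scaled" seconds
    days, rem = divmod(total, 86_400)
    hours, rem = divmod(rem, 3_600)
    minutes, seconds = divmod(rem, 60)
    parts = []
    for value, unit in ((days, "day"), (hours, "hour"),
                        (minutes, "minute"), (seconds, "second")):
        if value:
            parts.append(f"{value} {unit}{'' if value == 1 else 's'}")
    return ", ".join(parts) or "0 seconds"


for name, ns in LATENCY_NS.items():
    print(f"{name}: {human_scale(ns)}")
```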

This great gaping gulf in access speed between volatile memory and persistent storage promises to be narrowed by Intel and Micron’s 3D XPoint memory, a new form of non-volatile, solid-state storage, if it lives up to expectations.

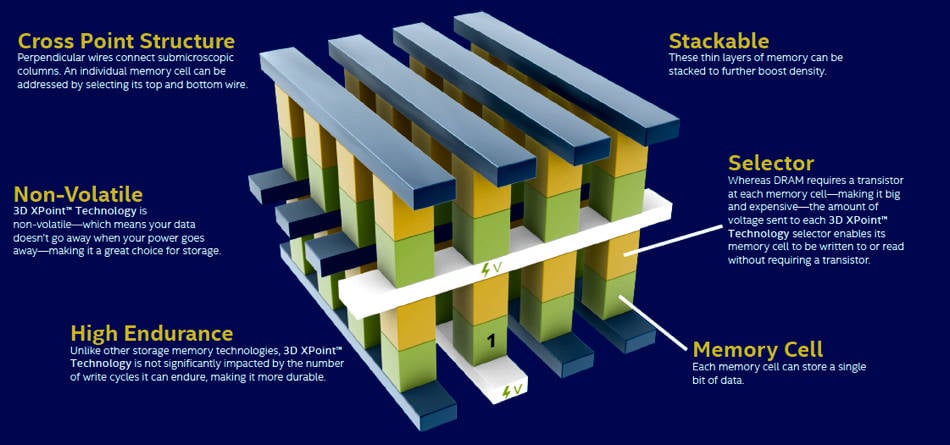

XPoint is made from Phase Change Memory material, a chalcogenide glass. Applying electricity to it causes a bulk change in some aspect of its state, which in turn changes its resistance. The change can be reversed, so the two resistance levels can signal a binary 1 or 0. The XPoint die has two layers and a crossbar design, hence its full 3D CrossPoint name.

The bulk change is said to be different from the amorphous-to-crystalline state change used in Phase Change Memory (PCM) technology, which also modifies the material’s resistance level.
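To make the crossbar idea concrete, here is a deliberately simplified Python toy model. It is not a model of the real XPoint cell physics: the class name `ToyCrossbar`, the resistance values and the sense threshold are all hypothetical, and only a single layer is modelled. It shows just the two ideas described above, that each cell sits where a word line crosses a bit line, and that a reversible resistance change stores a 1 or a 0.

```python
# Toy model of a crosspoint (crossbar) memory array. NOT the real XPoint
# cell: resistance values and threshold are hypothetical, single layer only.
LOW_OHMS = 1_000         # hypothetical low-resistance state, read as 1
HIGH_OHMS = 100_000      # hypothetical high-resistance state, read as 0
THRESHOLD_OHMS = 10_000  # sense threshold between the two states


class ToyCrossbar:
    def __init__(self, word_lines: int, bit_lines: int) -> None:
        # One cell at each word-line/bit-line intersection; all start at 0.
        self.cells = [[HIGH_OHMS] * bit_lines for _ in range(word_lines)]

    def write(self, word_line: int, bit_line: int, bit: int) -> None:
        # Selecting one word line and one bit line addresses exactly one cell;
        # the write switches it between the two states, so it is reversible.
        self.cells[word_line][bit_line] = LOW_OHMS if bit else HIGH_OHMS

    def read(self, word_line: int, bit_line: int) -> int:
        # A read senses the selected cell's resistance against the threshold.
        return 1 if self.cells[word_line][bit_line] < THRESHOLD_OHMS else 0


array = ToyCrossbar(word_lines=4, bit_lines=4)
array.write(2, 3, 1)
print(array.read(2, 3), array.read(0, 0))  # 1 0
array.write(2, 3, 0)
print(array.read(2, 3))  # 0
```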

The early marketing messages from Intel and Micron talked about XPoint being 1,000 times faster than flash, with 1,000 times the endurance, at a cost expected to be more than flash but less than DRAM.

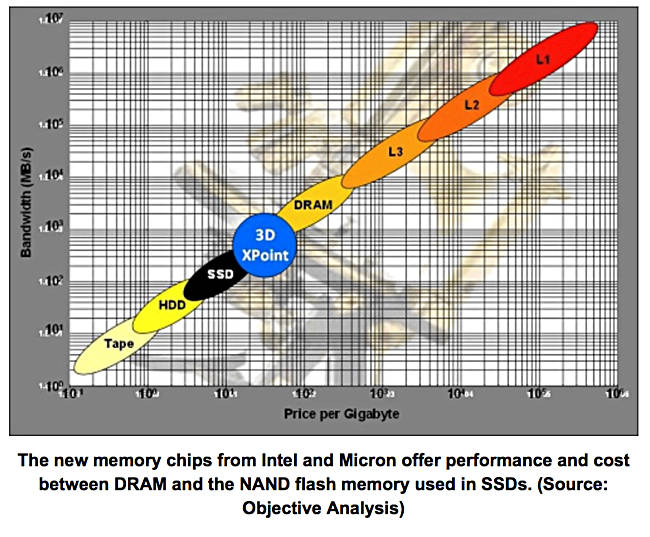

Jim Handy of Objective Analysis charted XPoint’s position on a log-scale graph.

The chart plots media in a 2D space defined by cost/GB and bandwidth, and we can see 3D XPoint sitting between NAND and DRAM.

XPoint details

Unlike NAND, XPoint is byte-addressable rather than block-addressable. Intel says it will introduce Optane-brand XPoint SSDs (meaning NVMe-access drives) and XPoint DIMMs, meaning XPoint chips mounted on DIMMs that access the memory bus directly instead of the relatively slower PCIe bus used by the NVMe XPoint drives.
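The practical difference between byte and block addressing shows up when a program changes a single byte. The Python sketch below uses two hypothetical device classes, `BlockDevice` and `ByteDevice`, and an assumed 4 KiB block size to count the bytes each must move. It idealises the byte-addressable case, since real memory buses typically move 64-byte cache lines, and it ignores NAND’s separate erase-block behaviour.

```python
BLOCK_SIZE = 4096  # assumed block size; real devices vary


class BlockDevice:
    """Hypothetical block-addressable medium: whole blocks only."""

    def __init__(self, n_blocks: int) -> None:
        self.data = bytearray(n_blocks * BLOCK_SIZE)
        self.bytes_moved = 0

    def read_block(self, n: int) -> bytearray:
        self.bytes_moved += BLOCK_SIZE
        return bytearray(self.data[n * BLOCK_SIZE:(n + 1) * BLOCK_SIZE])

    def write_block(self, n: int, block: bytearray) -> None:
        if len(block) != BLOCK_SIZE:
            raise ValueError("writes must cover a whole block")
        self.bytes_moved += BLOCK_SIZE
        self.data[n * BLOCK_SIZE:(n + 1) * BLOCK_SIZE] = block

    def update_byte(self, offset: int, value: int) -> None:
        # Changing one byte means a read-modify-write of its whole block.
        n, i = divmod(offset, BLOCK_SIZE)
        block = self.read_block(n)
        block[i] = value
        self.write_block(n, block)


class ByteDevice:
    """Hypothetical byte-addressable medium: single bytes can be stored."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.bytes_moved = 0

    def update_byte(self, offset: int, value: int) -> None:
        self.data[offset] = value
        self.bytes_moved += 1


blk = BlockDevice(n_blocks=2)
blk.update_byte(5_000, 0xFF)
byt = ByteDevice(size=2 * BLOCK_SIZE)
byt.update_byte(5_000, 0xFF)
print(blk.bytes_moved, byt.bytes_moved)  # 8192 1
```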

An Intel presenter, Frank Hady, Intel Fellow and Chief 3D XPoint Storage Architect, provided some Optane numbers at the Seventh Annual Non-Volatile Memories Workshop 2016 at UC San Diego recently.

We learnt that Optane had this kind of profile:

- 20nm process

- SLC (1 bit/cell)

- 7 microsecond latency (7,000 nanoseconds)

- 78,500 IOPS (70:30 random read/write mix)

- NVMe interface

XPoint’s bandwidth is not clear at this point.

If we construct a latency table looking at memory and storage media, from L1 cache to disk, and including XPoint, this is what we see:

With a seven-microsecond latency, XPoint is only 35 times slower to access than DRAM. This is a lot better than NVMe NAND: Micron’s 9100 is 150 times slower than DRAM for writes and 600 times slower for reads.
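These ratios follow directly from the article’s indicative figures. A short Python sketch that rebuilds the latency table, normalised to the 200-nanosecond DRAM access, makes the arithmetic explicit; the entries are the numbers quoted in this piece, not fresh measurements.

```python
# Indicative latencies in nanoseconds, as quoted in this article.
LATENCY_NS = [
    ("L1 cache", 0.5),
    ("L2 cache", 7),
    ("DRAM", 200),
    ("XPoint (Optane NVMe)", 7_000),
    ("Micron 9100 write", 30_000),
    ("Micron 9100 read", 120_000),
    ("Disk seek", 10_000_000),
]
DRAM_NS = 200


def ratio_to_dram(latency_ns: float) -> float:
    """How many times slower (>1) or faster (<1) than a DRAM access."""
    return latency_ns / DRAM_NS


print(f"{'Medium':<22}{'Latency (ns)':>16}{'x DRAM':>14}")
for name, ns in LATENCY_NS:
    print(f"{name:<22}{ns:>16,.1f}{ratio_to_dram(ns):>14,.4f}")
```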

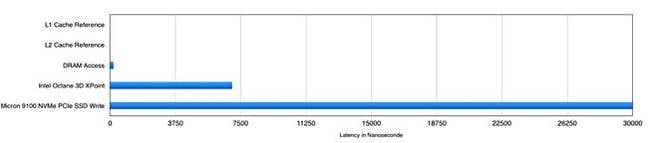

If we chart five items from the table horizontally to show this, here is the result:

It’s obvious that XPoint is much closer to memory latency than NAND. XPoint can be used as a caching technology for NAND (or disk), in which case not much changes – a cache is a cache. Or it can be used as a form of memory, meaning that applications would execute on data fetched directly from XPoint instead of, or as well as, DRAM.
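When XPoint acts as a cache in front of NAND, the benefit depends on how often reads hit the cache. A common back-of-envelope model is a weighted average of hit and miss latencies; the Python sketch below applies it to the quoted 7 µs XPoint and 120 µs Micron 9100 read figures. The hit rates are hypothetical, and the model ignores the cost of a cache lookup on a miss and of filling the cache afterwards.

```python
XPOINT_NS = 7_000        # Optane NVMe latency quoted by Intel
NAND_READ_NS = 120_000   # Micron 9100 read latency quoted in this article


def effective_latency_ns(hit_rate: float,
                         cache_ns: float = XPOINT_NS,
                         backing_ns: float = NAND_READ_NS) -> float:
    """Average read latency for a cache with the given hit rate.

    Simplifying assumption: a miss costs only the backing-store read.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_ns + (1.0 - hit_rate) * backing_ns


for hit_rate in (0.5, 0.9, 0.99):  # hypothetical hit rates
    avg_us = effective_latency_ns(hit_rate) / 1_000
    print(f"hit rate {hit_rate:.0%}: {avg_us:.1f} µs")
```

With a 90 per cent hit rate the average works out at about 18.3 µs, still more than twice XPoint’s own latency, because the slow misses dominate.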

XPoint chips mounted on DIMMs can be expected to have even shorter latencies than seven microseconds, and this is likely to be the XPoint storage-class memory (SCM) form factor.

System and software changes

With SCM, applications won’t need to execute write IOs to get data into persistent storage; a memory-level (zero-copy) operation moving data into XPoint will do that. This is just one of the changes that systems and software will have to take on board when XPoint is treated as storage-class memory, meaning persistent memory.

Others include:

- Development of file systems that are aware of persistent memory

- Operating system support for storage-class memory

- Processors designed to use hybrid DRAM and XPoint memory.
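The first change described above, persisting data with a memory operation rather than a write IO, can be sketched with Python’s standard `mmap` module. The sketch contrasts a conventional `os.write` plus `os.fsync` path with storing bytes into a memory-mapped region and flushing it. It runs on an ordinary file, where the mapping is still backed by the operating system’s page cache and `mmap.flush()` is implemented with `msync()` on POSIX systems; on Linux, a DAX-mounted file system on persistent memory maps the media directly instead. The helper names `write_via_io` and `write_via_mapping` are ours, for illustration.

```python
import mmap
import os
import tempfile


def write_via_io(path: str, offset: int, payload: bytes) -> None:
    """Conventional path: seek, write() and fsync() through the OS IO stack."""
    fd = os.open(path, os.O_WRONLY | getattr(os, "O_BINARY", 0))
    try:
        os.lseek(fd, offset, os.SEEK_SET)
        os.write(fd, payload)
        os.fsync(fd)  # ask the OS to make the write durable
    finally:
        os.close(fd)


def write_via_mapping(path: str, offset: int, payload: bytes) -> None:
    """Memory-mapped path: plain stores into the mapping, then a flush."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as m:
        m[offset:offset + len(payload)] = payload  # a memory copy, no write() call
        m.flush()  # msync() on POSIX for this ordinary, page-cache-backed file


fd, path = tempfile.mkstemp()
os.write(fd, bytes(4096))  # mmap needs a non-empty file to map
os.close(fd)
write_via_io(path, 0, b"via IO")
write_via_mapping(path, 100, b"via mmap")
with open(path, "rb") as f:
    data = f.read()
os.remove(path)
```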

We’re bound to see Linux having its code extended to support XPoint storage-class memory.

Microsoft is working on adding XPoint storage-class memory support to Windows. It will provide zero-copy access and support Direct Access Storage volumes, known as DAX volumes; presumably the DAS acronym is so widely understood to mean disk drives and SSDs connected directly to a server by SATA or SAS links as to be unusable for XPoint Direct Access Storage. DAX volumes hold memory-mapped files, which applications can access without going through the current IO stack.

That stack of code is based on disk-drive-era assumptions, in which IO stack traversal time was such a small proportion of the overall IO time that it did not matter on its own. But with XPoint access times at the single-digit microsecond level, stack efficiency becomes much more important.

There will be specific SCM drivers to help keep that stack overhead down, and there are more details in this video:

Microsoft NVM presentation at the NVM Summit 2016: Storage Class Memory Support in the Windows OS.
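The earlier argument about IO stack efficiency is a matter of proportions: a fixed software cost is negligible next to a 10 ms disk seek but substantial next to a 7 µs XPoint access. The Python sketch below assumes a hypothetical 5 µs per-IO software overhead (`STACK_OVERHEAD_NS`), chosen for illustration rather than taken from any measurement, and computes what share of each IO it represents.

```python
# Hypothetical fixed software cost per IO: an assumption, not a measured figure.
STACK_OVERHEAD_NS = 5_000

# Media latencies in nanoseconds, as quoted in this article.
MEDIA_NS = {
    "Disk seek": 10_000_000,
    "Micron 9100 read": 120_000,
    "XPoint (Optane NVMe)": 7_000,
}


def stack_share(media_ns: float, overhead_ns: float = STACK_OVERHEAD_NS) -> float:
    """Fraction of the total IO time spent traversing the software stack."""
    return overhead_ns / (overhead_ns + media_ns)


for name, ns in MEDIA_NS.items():
    print(f"{name:<22}{stack_share(ns):7.2%} of each IO spent in the stack")
```

Under that assumption the stack accounts for about 0.05 per cent of a disk IO but more than 40 per cent of an XPoint IO.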

XPoint effects on servers and storage

XPoint-equipped servers will suffer much less from being IO-bound, and their caching will be more efficient. Those using SCM will be able to hold larger application working sets in memory and spend less time writing data.

Other things being equal, XPoint-equipped servers should be able to support more VMs or containers when XPoint caching is used, and many more VMs or containers when XPoint NVMe SSDs are used, and more again when XPoint SCM is used.

Storage arrays will be able to respond faster when using XPoint caching, and faster again, overall, when using XPoint SSDs. They can be expected to use NVMe over Fabrics links with remote direct memory access (RDMA), and so function as shared XPoint storage with direct-attached-like access.

Fusion-io tried to introduce storage-class memory with its original PCIe flash cards, but the company was too small to get SCM-level changes into operating systems. The combined Intel and Micron pairing is certainly large enough for that, with both companies well aware of the need for XPoint-supporting ecosystems of hardware and software suppliers.

If, and it is most certainly still an “if”, XPoint delivers on its potential, then we are going to see the virtual elimination of SAS and SATA-type storage IO waits and, with SCM, a substantial increase in overall server efficiency.

Should XPoint under-deliver, then other resistive RAM (ReRAM) technologies may step forward. We know SanDisk and Toshiba are working in that area, but neither company’s technology is as ready as XPoint for prime time. Let’s hope XPoint lives up to its extraordinary promise and delivers the goods. ®

* All the latency numbers used here are as accurate as I can get them, but there can be a lot of variance, so treat them as indicative rather than absolute.