This article is more than 1 year old

Web servers should give browsers a leg-up, say MIT boffins

If servers can pre-fetch all the cruft linked inside a page, browsers will go faster

Latency plus complexity, rather than bandwidth, are what strangles Web performance, and a bunch of MIT boffins reckon browsers haven't kept up.

To get around that, they've proposed a scheme called Polaris – not the same thing as the Mozilla browser privacy project – to focus on the order in which page objects are loaded.

The boffins' paper (PDF here) will get an outing at the Usenix Symposium on Networked Systems Design and Implementation later this month. Its lead author is MIT PhD student Ravi Netravali, with co-authors Ameesh Goyal and Hari Balakrishnan (also MIT) and Harvard's James Mickens (who got involved in 2014 when he was a visiting professor at MIT).

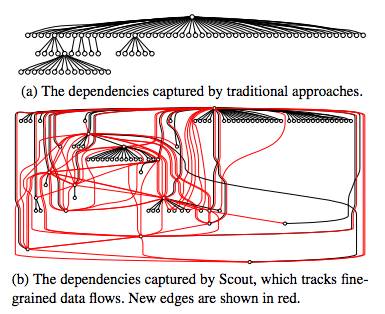

The paper says that with a more fine-grained understanding of the dependencies on a Web page, the network and the client's CPU can be better utilised to get a page displaying faster.

With ad sites, trackers, and third-party services delivering images and fonts, those dependencies have multiplied in the last decade, so that a page like Weather.com has dozens of them. Those dependencies get overlooked in current browsers, Netravali argues, so he and his collaborators have looked at how all the dependencies can get captured.

As Natravali explained to MIT News:

“As pages increase in complexity, they often require multiple trips that create delays that really add up. Our approach minimises the number of round trips so that we can substantially speed up a page’s load-time.”

Those dependencies are why pages load so slow

Image: from Netravali's paper

This far-more-complete understanding of what's in a page comes from a tool called Scout, which “tracks fine-grained data flows across the JavaScript heap and the DOM” (document object model – The Register).

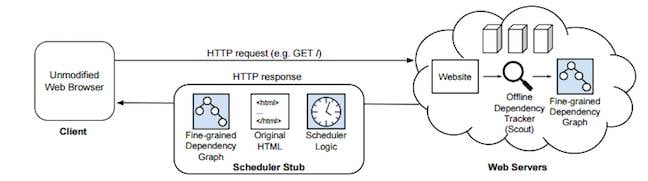

To help the browser make decisions about what to do with dependency information, the paper offers “Polaris, a dynamic client-side scheduler that is written in JavaScript and runs on unmodified browsers; using a fully automatic compiler, servers can translate normal pages into ones that load themselves with Polaris”.

The point of this, the paper explains, is that Polaris “can aggressively fetch objects in a way that minimises network round trips.”

The paper continues: “Experiments in a variety of network conditions show that Polaris decreases page load times by 34% at the median, and 59% at the 95th percentile.”

The heavy lifting is done at the server-side, so users don't have to get new browsers. Scout creates the dependency graph, and a “stub” is sent to the browser along with the unmodified HTML. The stub includes a copy of the dependency graph, and the scheduler logic the browser needs.

Polaris does the heavy lifting server-side

Image: Netravani's paper

Getting the scheduling right is particularly important for users on high-latency links – either because they're on the wrong side of the world from a Webserver, or because they're using a higher-latency mobile connection.

Polaris’ benefits increase as network latency increases. For example, at a link rate of 12 Mbits/s, Polaris provides an average improvement of 10.1 per cent for an RTT of 25 ms. However, as the RTT increases to 100 ms and 200 ms, Polaris’ benefits increase to 27.5 per cent and 35.3 per cent, respectively”, they claim.

Polaris was tested on 200 sites, they say, including Weather.com, the New York Times, and ESPN.com. ®