
Diskicide – the death of disk

Tech Unplugged sees Reg presenter unplugged

How might disk death in the data center come about?

These are the notes used at a Tech Unplugged event in Amsterdam on September 24, and they present a scenario in which data center disks could stop spinning. Is it a sensible scenario? Read on.

I try to connect the dots in the industry, the dots being the myriad individual events that happen and which I write about on The Register. I see pictures of the industry that can be made by connecting these dots together. This session is about one way of connecting these dots, a way that points to the death of disk in the data center.

Let's see what dots are involved and how I connect them, and then you can decide if it seems reasonable.

I'm going to begin back in the 1930s, before most of us were alive...

Remember punched cards, the original way of storing digital files and getting them loaded into a computer's volatile memory? They were followed by paper tape, and then by magnetic tape, which is still in use for some backups and for archiving but is thought too slow for online storage of primary and secondary data.

Tape was replaced by disk, which is much faster at random access and good enough at sequential data reads.

Well, disk speeds have been capped at 15,000 rpm while capacities have risen and risen, so the basic IO rate from disk has barely moved, while servers have become multi-core, multi-socket, and virtualized – meaning they want data delivered through a fire-hose and disk can't keep up.
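A rough back-of-envelope sketch shows why the spin speed cap matters. On average a drive has to wait half a revolution for the data to come round under the head, so rotational latency is fixed by rpm. The seek times and function names below are illustrative assumptions, not figures from this talk, and the model ignores transfer time, queuing, and caching.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rough ceiling on random IOs per second for a single drive.

    avg_seek_ms is an assumed figure; transfer time and caching are ignored.
    """
    service_time_ms = avg_seek_ms + avg_rotational_latency_ms(rpm)
    return 1000.0 / service_time_ms


if __name__ == "__main__":
    # Seek times here are illustrative assumptions only.
    for rpm, seek_ms in [(7_200, 8.5), (10_000, 4.5), (15_000, 3.5)]:
        print(
            f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.2f} ms rotational, "
            f"~{random_iops(rpm, seek_ms):.0f} random IOPS"
        )
```

Because capacity keeps rising while this per-drive ceiling stays roughly flat, the IOs available per stored terabyte fall with every capacity increase.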

Disks' slow delivery of data is opening a door for flash to enter the data center because it delivers data faster.

Disk development difficulties

Disk technology is developing to boost capacity while access performance is barely getting a look in.

We have hybrid notebook disks with a flash cache inside the disk enclosure, but these are not very popular, users preferring to have SSDs instead of slightly accelerated disks. SSDs are so much faster than spinning drives, and so much more power-efficient and lighter, that it's becoming normal for laptop computers to use them instead of disks.

So for disk, the future is a capacity play. Can shingled recording and heat-assisted recording solve the flash problem disks are facing?

Shingling and HAMR

Shingling disks to increase capacity means that adjacent data tracks partially overlap, so whole blocks have to be rewritten if a few bytes change. It gives you maybe 20-30 per cent more capacity at the cost of slowing write performance.
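The write penalty can be sketched with a deliberately simplified model, in which every shingled band touched by an update is rewritten in full. Real drives are cleverer than this (they may rewrite only from the changed track onwards and stage writes in a cache), and the band size used in the example is a hypothetical placeholder, so treat this as an illustration of the principle rather than a description of any shipping drive.

```python
def smr_bytes_rewritten(offset: int, length: int, band_size: int) -> int:
    """Bytes physically rewritten when `length` bytes at `offset` change.

    Simplifying assumption: each band touched by the update is rewritten whole.
    """
    if length <= 0 or band_size <= 0:
        raise ValueError("length and band_size must be positive")
    first_band = offset // band_size
    last_band = (offset + length - 1) // band_size
    return (last_band - first_band + 1) * band_size


def write_amplification(offset: int, length: int, band_size: int) -> float:
    """Ratio of bytes physically rewritten to bytes logically changed."""
    return smr_bytes_rewritten(offset, length, band_size) / length


if __name__ == "__main__":
    band = 256 * 1024 * 1024  # hypothetical 256 MiB band
    factor = write_amplification(offset=0, length=4096, band_size=band)
    print(f"4 KiB update in a 256 MiB band: {factor:.0f}x write amplification")
```

Under these assumptions a small random update costs far more physical writing than the data it changes, which is why shingled drives suit sequential, write-once workloads better than random ones.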

Shingling is a sticking-plaster to boost capacity until HAMR (heat-assisted magnetic recording) comes along and also, for Seagate, until helium-filled drives arrive.

HAMR is dauntingly difficult to implement and we're not likely to see HAMR drives, at least from Seagate, until the second half of 2018. Even then they may not have more capacity than PMR drives.

Seagate is also working on helium-filled drives as a way of increasing capacity, following HGST's lead. It is also making noises about having two read/write heads per platter, but we have no availability dates.

Disk drive technology has no hope of catching up with flash in performance terms, and its capacity improvement rate seems to be slowing as PMR runs out of steam and replacement tech is difficult and costly to implement.

Hitting the flash wall

Flash has had to face up to its own technology development problem. Single-level cells (SLC, or 1 bit/cell) have the most endurance at any particular cell size, with 2 bits/cell MLC having a shorter life, and 3 bits/cell TLC having an even shorter working life. Each cell size shrink also shrinks endurance – dramatically.

You can compensate for this with better controllers using DSP techniques and better error correction, but when there aren't enough electrons in a cell at a particular voltage level and the bit value is hard to detect and drifts over time, then there is only so much you can do.

After 16nm it seems we're unlikely to be able to shrink cell size again, and so flash capacity increases via cell shrinks have hit a wall ... but NAND foundries are now able to stack multiple 2D planar layers of NAND in a 3D structure, and so raise capacity without shrinking the cell size or increasing the footprint of the flash chip.

We have 32 layers currently, 48 coming, and a possibility of having 128, which would mean four times the capacity of today's 32-layer 3D chips.

They have also done this while using larger cell sizes, which has an extra benefit.

Step back the process geometry and build up layers

Samsung is, I understand, producing 3D flash chips using 4X geometry; that is, a cell size between 40 and 49nm along one axis. SanDisk and Toshiba are producing 12nm planar NAND, 1X class. The step back to 4X has the side effect of greatly increasing endurance, and that means that TLC flash, at 3 bits/cell, can have enterprise-class endurance and be used in flash arrays.

SolidFire is already using 3D TLC flash in its arrays. So too are Dell and Kaminario, with Dell hitting a $1.66/GB all-flash array street price point using 3D TLC flash drives from Samsung: "approximately the same price per gigabyte as high-end 15K HDDs and up to 24 times performance improvement, up to six times the density, lower latency, and lower power consumption."

Samsung has demonstrated a 16TB SSD using 3D TLC technology. The highest capacity disk drive is a 10TB HGST helium drive.

For some time, all-flash array vendors have said post-dedupe flash cost/GB is the same as disk.
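The vendors' claim comes down to simple arithmetic: divide the raw cost/GB by the data reduction ratio to get the effective cost of each logical gigabyte stored. A minimal sketch follows, with hypothetical function names and placeholder prices and ratios that are not taken from any vendor; actual reduction ratios depend heavily on the data being stored.

```python
def effective_cost_per_gb(raw_cost_per_gb: float, reduction_ratio: float) -> float:
    """Cost per logical GB after deduplication and compression.

    reduction_ratio is logical data stored / physical capacity used (3.0 means 3:1).
    """
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return raw_cost_per_gb / reduction_ratio


def breakeven_reduction_ratio(flash_raw_per_gb: float, disk_raw_per_gb: float) -> float:
    """Reduction ratio flash needs to match disk that stores data unreduced."""
    if disk_raw_per_gb <= 0:
        raise ValueError("disk_raw_per_gb must be positive")
    return flash_raw_per_gb / disk_raw_per_gb


if __name__ == "__main__":
    # Placeholder prices for illustration only.
    flash_raw, disk_raw = 4.0, 1.0
    ratio = breakeven_reduction_ratio(flash_raw, disk_raw)
    print(f"Flash needs {ratio:.1f}:1 data reduction to match disk")
    print(f"At 5:1, flash costs ${effective_cost_per_gb(flash_raw, 5.0):.2f} per logical GB")
```

The comparison only holds if the disk array is not applying the same data reduction, which is the assumption built into the claim.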

We are hearing that 3D 4 bits/cell, or quad-level cell (QLC), technology is coming, and this will lower the cost/GB of flash even further.

3D TLC flash lowers the cost/GB of flash and increases chip capacity so that SSDs can exceed disk capacity and match and beat high-performance disk cost. 3D QLC flash will push flash prices even lower.

Storage moving to servers

It's becoming apparent that there is a trend for primary data to move from disk to flash, and also to move closer to servers. Diablo Technologies' flash DIMMs are an example of that. So too is Mangstor's NX6320 all-flash array, which uses an NVMe fabric link to have shared flash storage accessed at PCIe bus speeds, effectively forming a second tier of memory inside a server's memory address space.

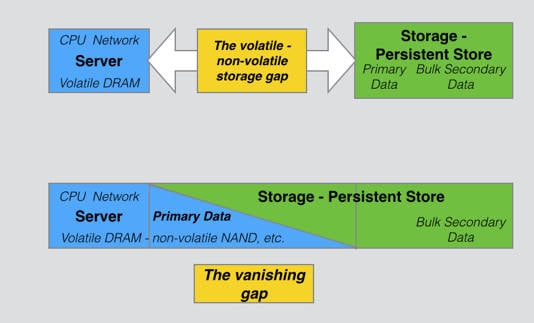

Closing the server-storage gap

EMC's rack-scale all-flash DSSD array will use the same approach.

You cannot do this with disk drive storage because of disk latency, or with traditional networked SAN and filer arrays either, because of network latency.

Possible flash takeover of disk market

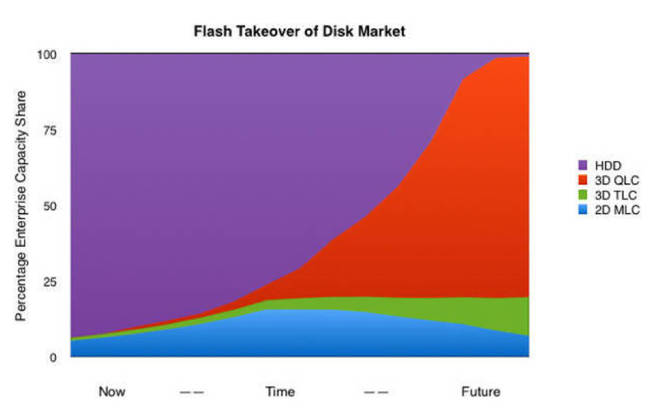

Given what we have heard, then, we can construct a scenario in which 2D planar MLC NAND is replacing 15,000 rpm disk drives today to store primary data. 3D TLC NAND will start to replace 10,000 rpm disk drives, and 3D QLC flash will begin the replacement of 7,200 rpm disk drives.

Flash taking over from disk; a scenario

And so, if our scenario is correct, we will see disk-free, all-flash data centers appearing, much as Violin Memory would like.

The picture on the ground is mixed.

I've been told by HDS that it has some customers with all-flash data centers.

But Nimble Storage says its hybrid arrays have replaced an IBM all-flash RamSan – because they had better sequential data access performance.

Nevertheless, Nimble expects to introduce an all-flash array next year.

Requiescat in pace hard disk drives and spin no more

So disk can never match flash in performance, and flash is increasing drive capacity more than disk and faster than disk, and also its cost is heading towards parity with disk. Cogito ergo sum – and disk is dead in the data center. Requiescat in pace hard disk drives and spin no more.

What I have described in broad brush strokes is how and why disk drives could be replaced by flash drives. None of the disk drive manufacturers are saying that this will happen. Indeed Seagate resists the idea strongly, saying SSDs and PCIe flash are a data access accelerant, not a disk drive replacement.

According to the disk makers, data may flee the enterprise data center and go into hyper-scale clouds, but it will be stored on high-capacity spinning rust, and not in flash chips. No sir, no way, and, by the way, there isn't enough flash foundry capacity to build the flash chips we'd need.

King Canute once tried to hold back the tide – he failed. A boy tried to stop a leak by putting his finger in a hole in a dyke. He failed.

And tape manufacturers once said disks were too bulky, expensive, and error-prone. They would never catch on.

But they did. And now we have flash, and the world turns on its axis again, and tomorrow ... flash could kick spinning rust out of the data center and disk become the new tape ... or ... flash could meet disk and fail to topple it. What do you think?

Isn't this storage business a fascinating one? Anything could happen – and it probably will. ®