
Tintri T850: Storage array demonstrates stiff upper lip under pressure

Watch out, performance hogs. This is NOT a contention-based model

Hybrid performance quirks

The section everyone wants to read is the hardest one in this review to write. Tintri's array is a hybrid: it combines spinning-rust hard drives with flash SSDs. Understanding this is central to understanding how the array performs in the real world.

Tintri positions its arrays as faster and more capable than traditional magnetic hard drive arrays. It also positions its hybrid units as good enough to go up against real-world all-flash arrays. Based on my experience with the unit, for most workloads in most situations, it's probably right.

Tintri takes a "flash first" approach to storage. New writes are first written to flash. Data that has become "cold" through disuse is eventually demoted to magnetic disk. Hot data is kept in flash to ensure workloads have the best possible performance. Data in flash is deduplicated and compressed to make the maximum use of the flash.
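To make the flash-first write path concrete, here is a toy Python model. It is a minimal sketch under loudly stated assumptions: Tintri has not published its implementation, so the class name `FlashFirstTier`, SHA-256 content hashing for deduplication and zlib for compression are all illustrative choices, and demotion to disk is deliberately left out.

```python
import hashlib
import zlib


class FlashFirstTier:
    """Hypothetical flash-first write path (illustrative, not Tintri's code).

    Every new block lands in flash. Identical blocks are stored once
    (deduplication keyed on a SHA-256 digest) and each unique block is
    kept zlib-compressed, so flash usage reflects the reduced footprint.
    """

    def __init__(self) -> None:
        self.flash: dict[str, bytes] = {}   # digest -> compressed unique block
        self.refcount: dict[str, int] = {}  # digest -> logical references to it
        self.logical_bytes = 0              # bytes clients think they wrote

    def write(self, block: bytes) -> str:
        digest = hashlib.sha256(block).hexdigest()
        if digest not in self.flash:
            # First copy of this content: compress it and keep it in flash.
            self.flash[digest] = zlib.compress(block)
            self.refcount[digest] = 0
        # Duplicates only bump a reference count; no extra flash is used.
        self.refcount[digest] += 1
        self.logical_bytes += len(block)
        return digest

    def read(self, digest: str) -> bytes:
        return zlib.decompress(self.flash[digest])

    def physical_bytes(self) -> int:
        return sum(len(c) for c in self.flash.values())

    def reduction_ratio(self) -> float:
        physical = self.physical_bytes()
        return self.logical_bytes / physical if physical else 1.0


tier = FlashFirstTier()
first = tier.write(b"A" * 4096)
second = tier.write(b"A" * 4096)  # duplicate content: deduplicated
third = tier.write(b"B" * 4096)
print(len(tier.flash), tier.logical_bytes)  # 2 12288
```

Highly repetitive test blocks like these compress and dedupe far better than real data, so the reduction ratio here says nothing about what a production workload would see.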

You can "pin" workloads, meaning that they get priority for flash resources over other workloads. You can also assign fixed minimum or maximum IOPS limits to individual VMs, either to guarantee a performance floor or to stop noisy VMs from dragging down the rest of the array.
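One way to picture how per-VM minimums and maximums interact is a simple water-filling allocator: honour each floor first, then share the remaining capacity equally among VMs that still want more, never exceeding a cap. This is an assumption for illustration only; Tintri's actual QoS scheduler is not described here, and the function `allocate_iops`, the VM names and the numbers are hypothetical. Flash pinning is not modelled.

```python
from typing import Dict, Optional, Tuple

# name -> (demanded IOPS, guaranteed minimum, cap or None for unlimited)
VmQos = Dict[str, Tuple[float, float, Optional[float]]]


def allocate_iops(capacity: float, vms: VmQos) -> Dict[str, float]:
    """Hypothetical min/max IOPS allocator (water-filling), not Tintri's scheduler."""
    # A VM never needs more than it asks for, and never gets more than its cap.
    want = {
        name: demand if cap is None else min(demand, cap)
        for name, (demand, _floor, cap) in vms.items()
    }
    # Honour minimums first, but only up to what each VM actually wants.
    alloc = {name: min(want[name], vms[name][1]) for name in vms}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("guaranteed minimums exceed array capacity")

    # Split what is left equally among VMs that still want more. VMs that are
    # satisfied (or hit their cap) drop out, freeing capacity for the rest.
    while remaining > 1e-9:
        hungry = [n for n in vms if want[n] - alloc[n] > 1e-9]
        if not hungry:
            break
        share = remaining / len(hungry)
        for name in hungry:
            give = min(share, want[name] - alloc[name])
            alloc[name] += give
            remaining -= give
    return alloc


alloc = allocate_iops(10_000, {
    "sql": (6_000, 3_000, None),      # guaranteed floor, no cap
    "fileserver": (8_000, 0, 2_000),  # capped at 2,000 IOPS
    "noisy": (9_000, 0, None),        # no floor, no cap
})
print({name: round(iops) for name, iops in alloc.items()})
# {'sql': 5500, 'fileserver': 2000, 'noisy': 2500}
```

The cap on `fileserver` is what keeps it from soaking up an equal share; the capacity it leaves on the table flows to the other VMs.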

It is absolutely possible to run the array out of flash. Contrived scenarios are not required to achieve this. What is worth noting, however, is that Tintri arrays do not "fall off a cliff" when flash is full.

Performance degrades reasonably gracefully once flash is full. If you push the unit hard enough, you can eventually have so much traffic coming off disk that performance becomes asymmetric and bizarre, but I had to resort to some pretty contrived synthetic tests to make anything that odd occur.

In the real world you can and will encounter I/O hitting magnetic disk. Reads are especially vulnerable, because Tintri's algorithm for deciding when data is "cold" is a little aggressive. Consider the case of a large virtual file server running on the Tintri.
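What "aggressive" means in practice can be sketched with a simple idle-time rule: data that hasn't been read for some threshold is treated as cold and becomes a candidate for demotion. The 48-hour threshold, the function names and the dates below are hypothetical; Tintri's real heuristic is not documented here and is almost certainly more involved than a single timer.

```python
from datetime import datetime, timedelta

# Hypothetical idle threshold; the real demotion policy is not published.
DEFAULT_COLD_AFTER = timedelta(hours=48)


def is_cold(last_access: datetime, now: datetime,
            cold_after: timedelta = DEFAULT_COLD_AFTER) -> bool:
    """Data counts as cold once it has sat unread for at least `cold_after`."""
    return now - last_access >= cold_after


def tier_for(last_access: datetime, now: datetime,
             cold_after: timedelta = DEFAULT_COLD_AFTER) -> str:
    """Which tier a block would occupy under this simple idle-time rule."""
    return "disk" if is_cold(last_access, now, cold_after) else "flash"


# Illustrative dates: images loaded late on a Friday, opened Monday morning.
friday_load = datetime(2021, 1, 1, 22, 0)  # Friday
monday_open = datetime(2021, 1, 4, 8, 0)   # Monday, 58 hours later

print(tier_for(friday_load, monday_open))                       # disk
print(tier_for(friday_load, monday_open, timedelta(hours=72)))  # flash
```

A weekend is long enough to cross a short threshold, so data loaded on a Friday and untouched until Monday ends up being served from disk; a longer threshold would keep it in flash at the cost of holding more idle data there.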

The virtual file server in question is a Windows Server 2008 R2 VM with a 100GB operating system vdisk and 6x 2TB data vdisks, all thin provisioned. There is about 5.4TB worth of files (mostly JPEG images) stored in the VM.
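Because the vdisks are thin provisioned, the VM is presented with far more capacity than it actually consumes. The arithmetic below assumes decimal units (1TB = 1,000GB) and ignores how much of the OS vdisk is in use, since that figure isn't given.

```python
# Decimal units assumed: 1 TB = 1,000 GB.
os_vdisk_gb = 100
data_vdisks = 6
data_vdisk_gb = 2_000
stored_gb = 5_400  # "about 5.4TB worth of files"

provisioned_gb = os_vdisk_gb + data_vdisks * data_vdisk_gb  # capacity the VM sees
data_capacity_gb = data_vdisks * data_vdisk_gb              # data vdisks only
fill = stored_gb / data_capacity_gb                         # share actually written

print(provisioned_gb, data_capacity_gb, f"{fill:.0%}")  # 12100 12000 45%
```

Under thin provisioning, only the written blocks (roughly 45 per cent of the data vdisks here) take up space on the array, not the full 12.1TB presented to the guest.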

In the typical workload pattern, tens of gigabytes of new files are loaded onto the file server each night. The next morning, production staff access those images along with images that may be weeks or months old. The workload is mostly read-intensive, because changes are typically saved as metadata in a SQL Server Compact Edition database associated with each job rather than made to the images themselves.

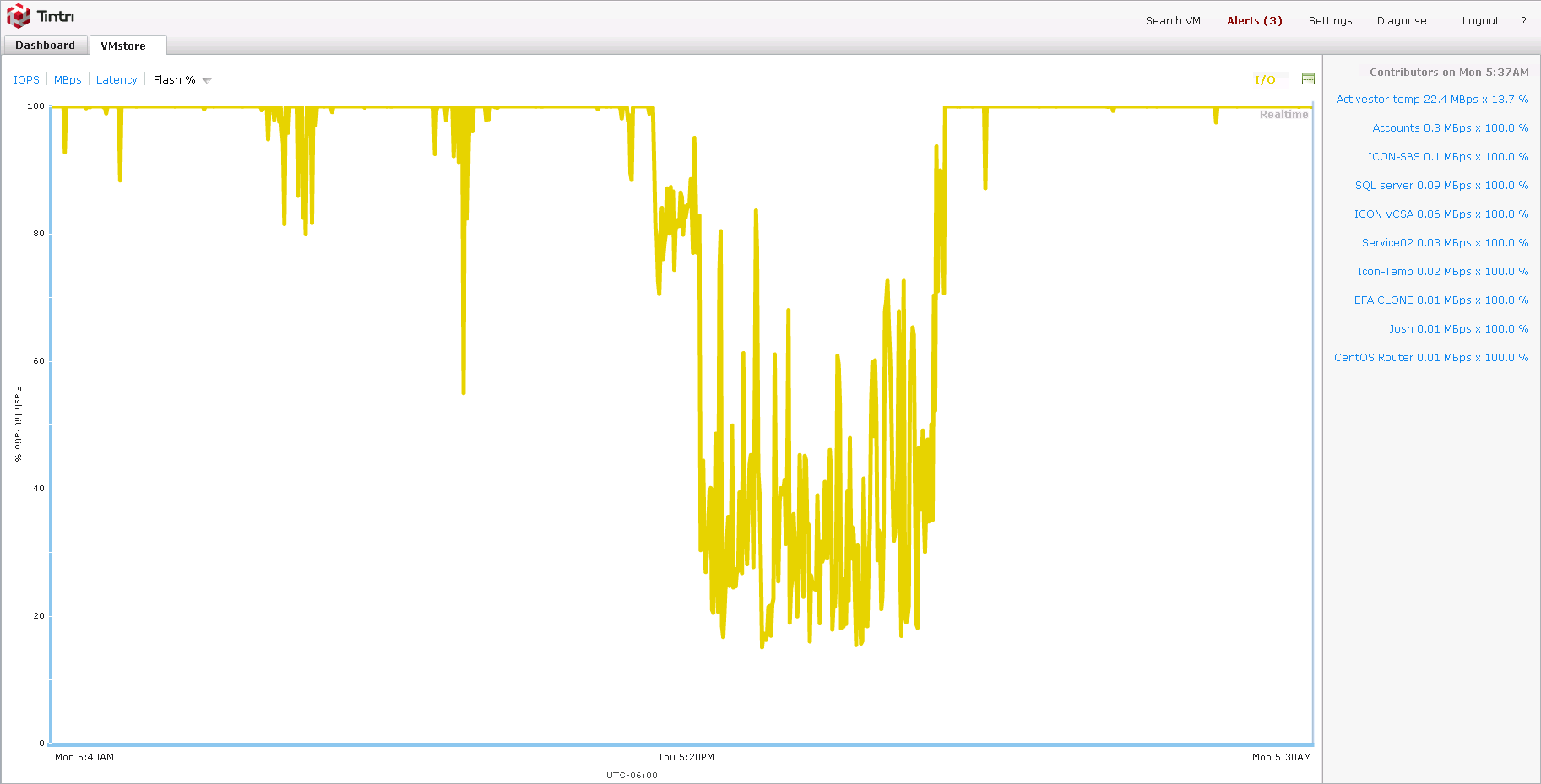

The flash persistence algorithm moves files out of flash fairly quickly. Files dumped into the system on a Friday will be on magnetic disk come Monday. The flash hit ratio for the file server VM dropped to 13.7 per cent, bringing the overall hit ratio for the array below 20 per cent.
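Tintri's exact definition of the array-wide figure isn't covered here. Assuming it is simply total flash hits divided by total reads (an assumption; it could be weighted by bytes or sampled differently), the sketch below shows how a single read-heavy VM can drag the whole array's ratio down. The per-VM counts are invented for illustration, chosen only to reproduce a 13.7 per cent ratio for the file server; they are not measurements from this array.

```python
def hit_ratio(hits: int, reads: int) -> float:
    """Fraction of reads served from flash."""
    return hits / reads if reads else 0.0


def array_hit_ratio(per_vm: dict[str, tuple[int, int]]) -> float:
    """Assumed array-wide ratio: all flash hits over all reads.

    This weights each VM by its read volume, so the busiest VM dominates.
    """
    hits = sum(h for h, _ in per_vm.values())
    reads = sum(r for _, r in per_vm.values())
    return hit_ratio(hits, reads)


# Hypothetical counts: (flash hits, total reads) per VM.
per_vm = {
    "file-server": (137_000, 1_000_000),  # 13.7 per cent
    "everything-else": (60_000, 100_000),  # 60 per cent
}
print(f"{array_hit_ratio(per_vm):.1%}")  # 17.9%
```

Even with the other VMs hitting flash most of the time, the file server's read volume pulls the blended figure under 20 per cent.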

At first glance, these aren't great headline numbers. I'm sure Tintri doesn't want a review saying "flash hit ratio of 13.7 per cent". What's important to discuss here is what happened when the hit ratio dropped this low.

First off, nobody noticed that this had occurred. I didn't notice until I went looking for stats. The client in question is highly performance sensitive so if there had been the slightest abnormality I would have gotten a wave of emails. None occurred.

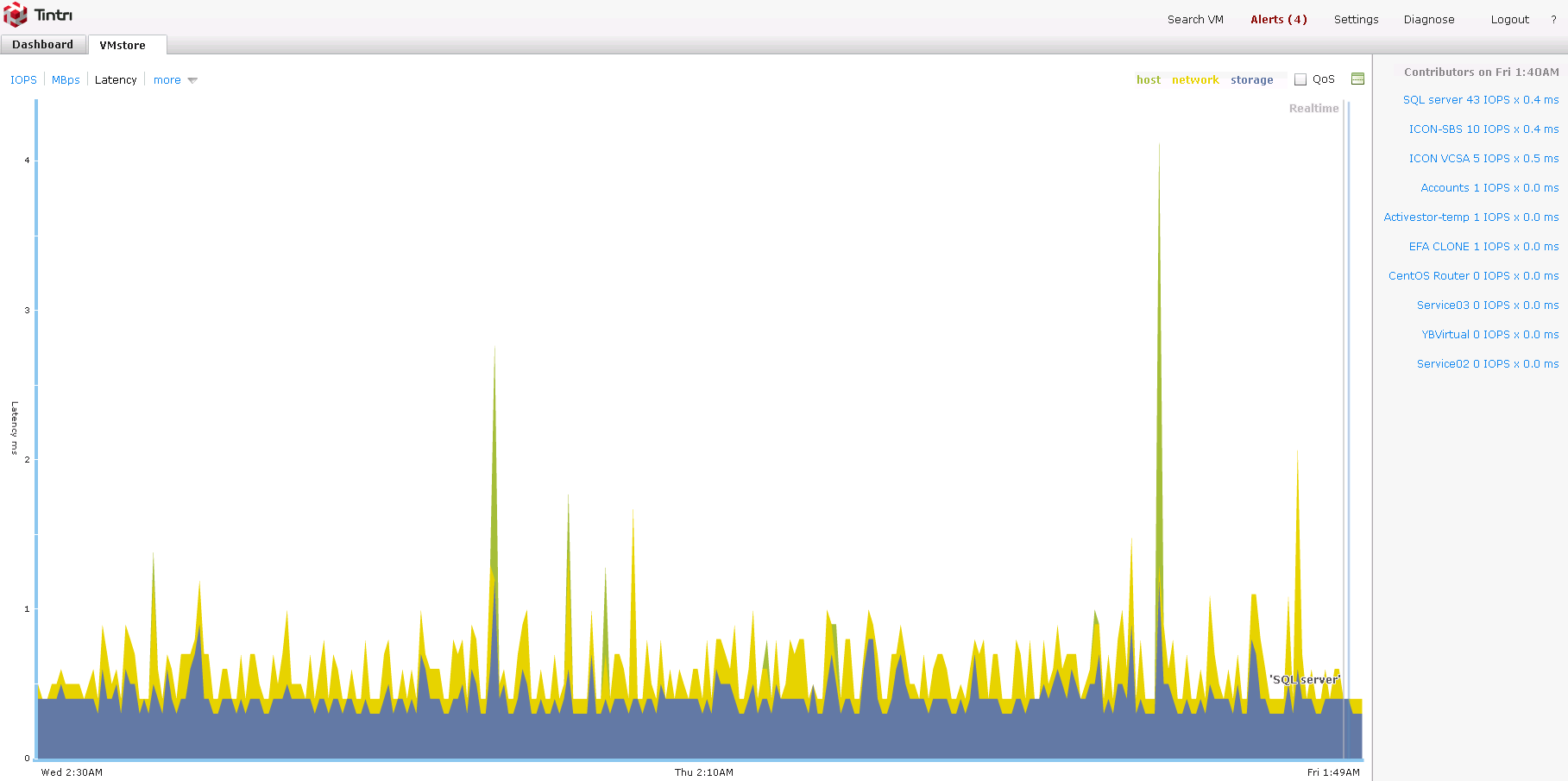

As you can see, overall system latency didn't take much of a hit, even when I zoom in a little to see the affected time frame more closely.

New writes to the Tintri were still going to flash. All the other VMs served from that system did their jobs without complaint. The other VMs using the array are a Windows Small Business Server (including Exchange Server), a test/dev snapshot fork of the file server VM, and a Microsoft SQL Server.

In addition, there are roughly a dozen VDI VMs, a mail filter, an NFV VM, the VMware VCSA, some image rendering VMs and about three dozen test and dev VMs I loaded up to stress the Tintri (and the rest of the network) to help profile things as part of refresh-cycle planning.

Even without the additional test and dev VMs, this client was bringing an entire rack's worth of traditional magnetic storage to its knees just trying to get the daily dozen done. The Tintri T850 soaks up all that work, plus my stress-testing VMs, and just asks for more.

This is one of the reasons benchmarking and profiling this storage array is so difficult. Taken out of context, some of the numbers you can generate look pretty bad. In context, and with some understanding of how things are working, it becomes clear that the unit as a whole performs far better than the numbers for any single subsystem suggest. It must be evaluated as a whole.