This article is more than 1 year old

MIT shows off machine-learning script to make CREEPY HEADS

Fifty shades lines of code

Don't write code when you can re-use code is a well-known principle in writing software, but using it to replace thousands of lines of code with 50 seems positively parsimonious.

That's what MIT is claiming with an upcoming demonstration of what it calls “probabilistic code”, an approach which it says it's applied to the field of vision for the first time.

MIT is coy about the details – for example, its http://newsoffice.mit.edu/2015/better-probabilistic-programming-0413 self-promotion doesn't provide a code sample to let mere mortals see what probabilistic code looks like – but that's because it's going to show its work off at a conference in June.

Lead author Tejas Kulkarni says “The whole hope is to write very flexible models, both generative and discriminative models, as short probabilistic code, and then not do anything else. General-purpose inference schemes solve the problems”.

The idea is that instead of writing inference algorithms specific to the task – such as using 2D pictures to build 3D models – a much smaller program could send problems off to existing general-purpose inference algorithms and choose the best output.

That, MIT claims, would mean instead of writing thousands of lines of code to tackle a new problem, just a handful of lines of code – the scale of a script – could handle the task.

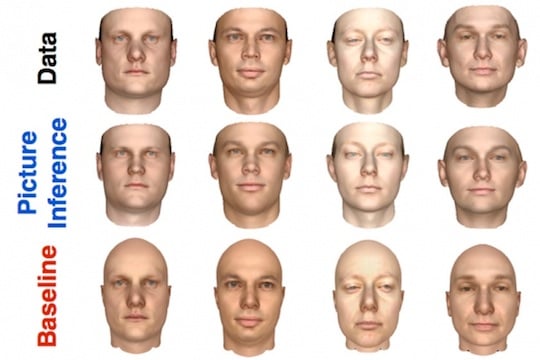

Its demo, shown off in the image below, tries to take 2D images of a head and build them into 3D models. In Picture, the coder describes the features of a face – two symmetrically distributed eyes, with two central features (nose and mouth) below them – in the syntax of the language.

From the 2D images at the top, MIT made the 3D models (middle row)

The bottom row shows the output of an earlier version of Picture. Image: MIT

In case you're thinking it all sounds too easy, you're right: Picture also relies on machine learning, so before it can perform its fifty-shadeslines-of-code dance, it needs to be trained. Quoting from the release: “Feed the program enough examples of 2-D images and their corresponding 3-D models, and it will figure out the rest for itself.”

MIT describes the probabilistic approach like this: if you wanted to determine the colour of each pixel in one frame from Toy Story, you have a large but deterministic problem, because you know how many pixels there are, and you know how many colours you have to sample.

“Inferring shape, on the other hand, is probabilistic: It means canvassing lots of rival possibilities and selecting the one that seems most likely,” MIT says.

In that respect, Picture looks to El Reg to be something of a meta-language: it still uses buckets of code in the form of pre-existing inference algorithms, with Picture running through difference algorithms and picking the “best” result.

This, however, could also alleviate the workload for future coders, according to researcher Tejas Kulkarni.

“Using learning to improve inference will be task-specific, but probabilistic programming may alleviate re-writing code across different problems,” he says. “The code can be generic if the learning machinery is powerful enough to learn different strategies for different tasks.” ®