
Everything you always wanted to know about VDI but were afraid to ask (no, it's not an STD)

All you need to make virtual desktops go

Scenario 4: Mixed workload storage

In researching this article, I talked to several VDI experts and dozens of sysadmins who've been through the minefield with workloads far different from the ones I maintain. The number one piece of advice that these folks will give a newbie is "deploy VDI on its own infrastructure." Mixing and matching with other virtual workloads is frowned upon, and for good reason. Unless you know exactly what you're doing, mixing VDI and general virtualisation will get you into a heap of trouble.

We don't all have the luxury of following this advice. I've been doing VDI for about a decade now, and far too many deployments have been on mixed storage. Pilot projects are often run on existing infrastructure, and smaller shops are generally lucky to have centralised storage at all. In many cases, dedicated infrastructure for VDI just isn't going to happen.

Being able to support mixed workload storage is the Holy Grail: one storage technology for all scenarios. The problem is that while every storage vendor and their mum claims that the kit they're shifting is a "one size fits all" panacea for all ills, nothing out there actually is. If you want to alienate every storage vendor on the planet, this is the elephant in the room to discuss. (Hey there guys, how y'all doing?)

While all-flash arrays absolutely are "one size fits all" from a workload perspective, all flash is simply too expensive for the overwhelming majority of companies out there, especially when every workload would need to live on it.

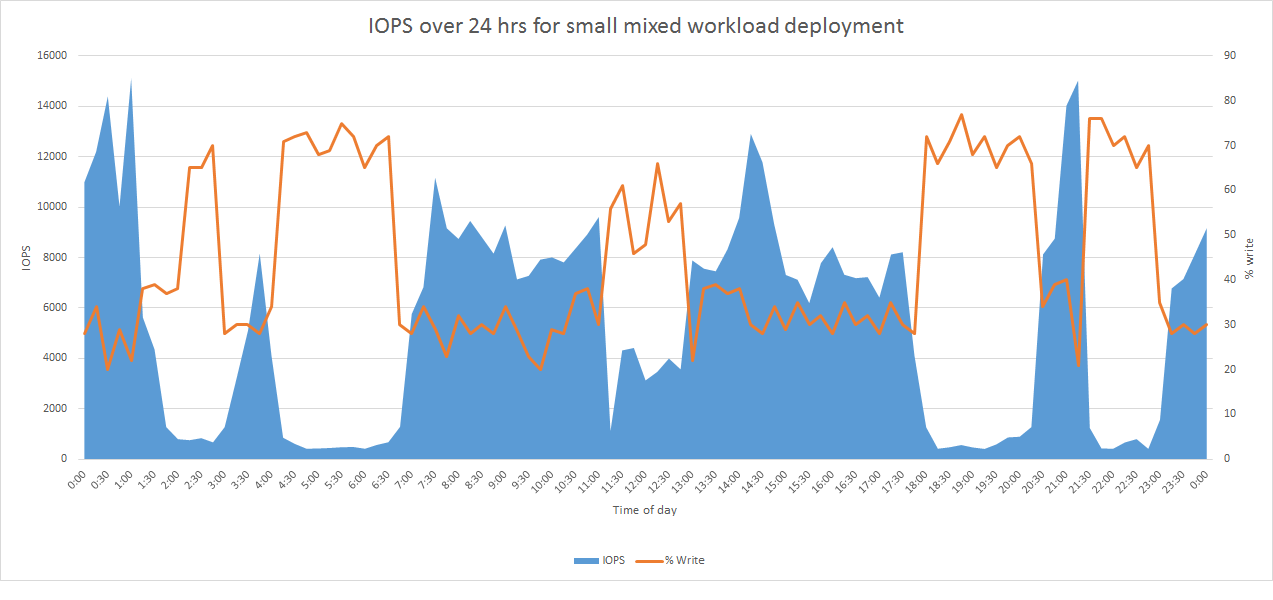

Hybrids, server SANs and host-based write caching all battle it out on features. Replication, active-active clustering, deduplication, compression ... competition is so fierce that trying to pick the right one can be confusing. All these features rely on there being either enough downtime to run their various background processes or enough wiggle room in the IOPS load to meet demand while doing their storage voodoo in real time.

If these solutions become overwhelmed - or their flash fills up - active workloads have to start going to spinning rust. In this situation the entire virtualisation infrastructure will go from "awesome" to "unusably slow" in an instant. This is rare, but it does happen. I've seen the change in IOPS be so sudden and dramatic that over 40 per cent of VMs simply stopped responding and, ultimately, crashed.

Bear in mind as well that mixing and matching different VDI workload classes can have a similar (though usually not as dramatic) "mixing" effect. I've had VDI experts tell me that in large deployments they create separate infrastructures for each class of workload just to avoid this.

In some cases it is merely separate clusters: GPU-accelerated workloads on systems with nVidia GRID cards, standard workloads on CPU-only servers. In other cases, they've mixed storage as well; high-demand clusters got caching software and SSDs installed, low-demand clusters did not.

Practical considerations for mixed workloads

If you must work with mixed workloads, management software can make all the difference. One of the reasons I'm such a fan of Tintri is that its management software is aware of the above issue and keeps an eye on how much performance you have remaining. It will alert you if you start getting close to the red line so that you can do something about it before everything goes pear-shaped. Several other vendors have similar systems.
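To make the "red line" idea concrete, here is a minimal sketch of a headroom check in Python. It is not how Tintri or any other vendor implements it; the class, the function names and the 25 and 10 per cent thresholds are all assumptions picked for illustration, and it presumes you already have some way of sampling observed IOPS and know roughly what your array can sustain.

```python
from dataclasses import dataclass


@dataclass
class HeadroomReading:
    """One sample of array load. Both fields are hypothetical inputs."""
    observed_iops: float  # IOPS currently being served
    rated_iops: float     # IOPS the array can sustain (assumed known)


def headroom_fraction(reading: HeadroomReading) -> float:
    """Return the share of rated performance still unused, clamped to [0, 1]."""
    if reading.rated_iops <= 0:
        raise ValueError("rated_iops must be positive")
    used = reading.observed_iops / reading.rated_iops
    return max(0.0, min(1.0, 1.0 - used))


def classify(reading: HeadroomReading,
             warn_below: float = 0.25,
             critical_below: float = 0.10) -> str:
    """Map remaining headroom to an alert level (thresholds are assumptions)."""
    remaining = headroom_fraction(reading)
    if remaining < critical_below:
        return "critical"
    if remaining < warn_below:
        return "warn"
    return "ok"


if __name__ == "__main__":
    # Made-up samples: a quiet array, a busy one, and one past its rating.
    for observed in (5000, 8000, 12000):
        sample = HeadroomReading(observed_iops=observed, rated_iops=10000)
        print(observed, classify(sample))
```

In practice you would feed this from whatever metrics your storage exposes and alert well before "critical", since the cliff described above arrives without much warning.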

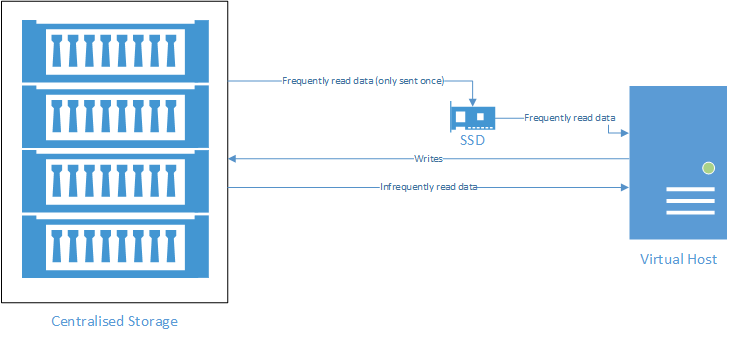

I've spent the past two years swimming in storage, and the biggest bang for the buck I've found for mixed-workload environments is pairing a host-based read cache (AutoCache) with primitive hybrid central storage (CacheCade). Price and simplicity are what ultimately mattered. My customers don't have money to burn and they don't have the knowledge required to fiddle with a bunch of nerd knobs "optimising" their storage every time they make a change.

It's the simplicity that sells it; if the central storage turns to glue, the VMs can still read the vast majority of what they need to read without having to ask the centralised storage for that information. Most VMs won't even notice that central storage has temporarily slowed to a crawl.
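The principle can be sketched in a few lines of Python. This is a generic write-through LRU read cache, not a description of how AutoCache works internally; `CentralStorage`, `HostReadCache` and the block-ID interface are hypothetical names invented for the example.

```python
from collections import OrderedDict


class CentralStorage:
    """Stand-in for the shared array; counts reads so the offload is visible."""

    def __init__(self):
        self.blocks = {}
        self.reads = 0

    def read(self, block_id):
        self.reads += 1
        return self.blocks.get(block_id)

    def write(self, block_id, data):
        self.blocks[block_id] = data


class HostReadCache:
    """Write-through LRU read cache living on the host (illustrative only)."""

    def __init__(self, backend, capacity):
        self.backend = backend
        self.capacity = capacity
        self._cache = OrderedDict()

    def read(self, block_id):
        if block_id in self._cache:
            # Cache hit: served locally, central storage is never asked.
            self._cache.move_to_end(block_id)
            return self._cache[block_id]
        data = self.backend.read(block_id)
        self._store(block_id, data)
        return data

    def write(self, block_id, data):
        # Writes always go to central storage; the cache only keeps a copy.
        self.backend.write(block_id, data)
        self._store(block_id, data)

    def _store(self, block_id, data):
        self._cache[block_id] = data
        self._cache.move_to_end(block_id)
        while len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # evict least recently used


if __name__ == "__main__":
    array = CentralStorage()
    array.write(1, b"boot block")
    cache = HostReadCache(array, capacity=2)
    for _ in range(100):
        cache.read(1)
    print(array.reads)  # only the first read reached the array
```

Note the limitation this design implies: a read cache does nothing for writes, so the central storage still has to absorb every write the VMs generate.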

My anecdotal example is a data center in which the backup software would trigger a run whenever it detected that it hadn't run in the past X hours. A power grid failure had the data center down for two days. When everything came back online the staff immediately logged into their VDI instances and started doing a lot of write-intensive analytics work.

The central storage eventually collapsed under the combination of that write strain, sysadmins taking the opportunity to patch several servers, database integrity checking and the backups for all VMs triggering at the same time. Two months later it happened again, this time with host-based read caching installed. Not only did the network not collapse, it remained usable throughout the recovery process.

The takeaway here is that if you plan to run your VDI mixed in with other workloads, model everything and monitor your storage usage in an automated fashion. Server workloads do all sorts of things that demand huge amounts of IOPS for prolonged periods of time. Unchecked storage demand conflicts can seriously degrade the VDI experience for your users.
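As a starting point for the modelling half of that advice, here is a rough Python sketch that adds up per-workload IOPS demand hour by hour and flags the hours where the total exceeds what the storage can deliver. The function name, the workload names and every number in it are made up for illustration; real modelling would use measured data and would need to account for read/write mix, block size and latency, none of which this toy does.

```python
def find_conflicts(capacity_iops, demand_by_workload):
    """Return (hour, total_iops) for each hour where summed demand exceeds capacity.

    demand_by_workload maps a workload name to a list of hourly IOPS samples.
    """
    lengths = {len(samples) for samples in demand_by_workload.values()}
    if len(lengths) > 1:
        raise ValueError("all workloads need the same number of samples")

    conflicts = []
    for hour, samples in enumerate(zip(*demand_by_workload.values())):
        total = sum(samples)
        if total > capacity_iops:
            conflicts.append((hour, total))
    return conflicts


if __name__ == "__main__":
    # Hypothetical four-hour window on storage assumed good for 5,000 IOPS.
    demand = {
        "vdi":       [200, 3000, 3500, 500],
        "backup":    [4000, 0, 0, 4000],
        "db_checks": [0, 0, 2000, 1500],
    }
    for hour, total in find_conflicts(5000, demand):
        print(f"hour {hour}: {total} IOPS demanded")
```

With these invented figures the third and fourth hours come out over capacity, which is exactly the sort of overlap between server jobs and desktop usage you want to find on paper rather than in production.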