
Enterprise storage: A history of paper, rust and flash silicon

Has anything really changed since the punch card era?

The processes of reading and writing data could also be carried out in a single head unit. This sped up data writing, so computers could output information to storage much faster, making it practical to back up data to tape for long-term storage.

At the same time, the mechanical elements of a tape drive could also be progressively shrunk so that the original floor-standing units gave way to drives mounted on the front of computers.

A huge number of different tape sizes and formats were developed for mainframe computers and minicomputers, wiping out paper tape. However, data access speed was limited by the fact that a tape drive has only a single read/write head and the tape is fed sequentially past it. To get at any individual file, the tape had to be wound along until that file reached the head.

Solving this problem created the first example of networked or shared storage. A group of drives and racks of tape reels were collected together in tape libraries, shared by several computers, leading to the glory days of SAN (Storage Area Networks) and NAS (Network-attached Storage) that we are still enjoying today.

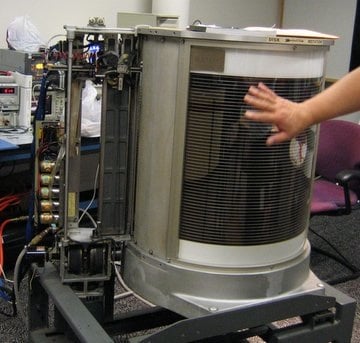

Oracle StreamLine tape library

But it wasn't all good news. With tape, error checking and correction became more important because the binary signals could degrade. Various schemes were devised either to confirm that a bit's value was correct or to flag that an error was present so the affected data could be replaced. Such schemes became progressively more sophisticated as the bit area became smaller and more prone to error.
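To make the idea of flagging an error concrete, here is a minimal sketch of one of the simplest possible checks, a single even-parity bit. It is an illustration only and is not claimed to be the scheme any particular tape format used; the function names are invented for this example.

```python
def add_even_parity(bits):
    """Append one parity bit so the total count of 1s in the frame is even."""
    parity = sum(bits) % 2
    return bits + [parity]


def has_error(frame):
    """Return True if the count of 1s is odd, meaning the frame was corrupted."""
    return sum(frame) % 2 == 1


data = [1, 0, 1, 1, 0, 0, 1, 0]   # one 8-bit value as written
frame = add_even_parity(data)      # nine bits go onto the medium

one_flip = frame.copy()
one_flip[3] ^= 1                   # a single bit degrades

two_flips = one_flip.copy()
two_flips[5] ^= 1                  # a second bit degrades as well

print(has_error(frame))      # False: clean frame passes the check
print(has_error(one_flip))   # True: a single-bit error is flagged
print(has_error(two_flips))  # False: a double-bit error slips through
```

The last line shows why a lone parity bit is not enough once errors become more likely: two flipped bits cancel out, which is the kind of gap that more sophisticated schemes are designed to close.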

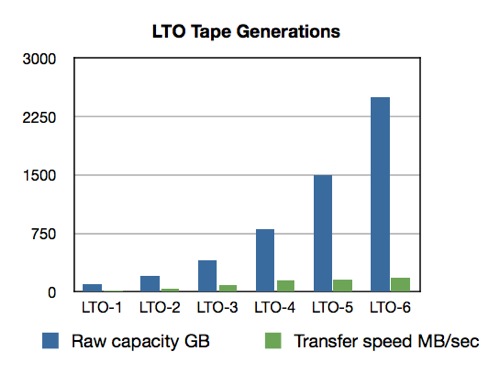

Tape technology advanced at a steady rate, increasing data capacity and data transfer speed. The accompanying chart shows how the LTO format increased capacity and speed through its generations, from LTO-1 in 2000 to LTO-6 at the end of last year.

As computer processors became faster and faster, the time it took to locate or write data on tape became more of a problem. But this mismatch between processor speed and data storage speed has been a constant factor in computer history and has helped spur every major storage technology development.

Each time an advance in computer data storage technology solves the pressing problems of the preceding storage technology, fresh problems come along that lead in turn to its replacement. So it was with tape, when IBM thought to flatten it and wrap it around itself in a set of concentric tracks on the surface of a disk.

Disk sends storage into a spin

IBM announced the 350 RAMAC (Random Access Method of Accounting and Control) disk system in 1956. Having a flat disk surface as the recording medium was revolutionary but it needed another phenomenally important idea for it to be practical. Instead of having fixed read/write heads and moving tape as with tape drives, we would have moving read/write heads and spinning disk surfaces.

The IBM 350 RAMAC disk system

Suddenly, it was much quicker to access data. Instead of waiting for a tape to sequentially stream past its head to the desired data location, a head could be moved to the right track. This enabled fast access to any piece of data; data selected, as it were, at random. And so we arrived at random access.
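The difference can be sketched with a toy model. The geometry below (100 tracks of 100 sectors) and the function names are assumptions made up for illustration, and the model ignores real-world details such as the disk continuing to rotate while the head moves.

```python
SECTORS_PER_TRACK = 100   # assumed geometry, for illustration only
TRACKS = 100              # assumed: 10,000 blocks in total


def tape_blocks_passed(current_block, target_block):
    """Blocks that must stream past a fixed head before the target arrives."""
    return abs(target_block - current_block)


def disk_moves(current_block, target_block):
    """Tracks the head crosses, then sectors it waits for as the disk spins."""
    cur_track, cur_sector = divmod(current_block, SECTORS_PER_TRACK)
    tgt_track, tgt_sector = divmod(target_block, SECTORS_PER_TRACK)
    tracks_crossed = abs(tgt_track - cur_track)
    sectors_waited = (tgt_sector - cur_sector) % SECTORS_PER_TRACK
    return tracks_crossed, sectors_waited


# Fetch a block near the far end of the medium, starting from block 0.
print(tape_blocks_passed(0, 9950))   # 9950 blocks stream past the head
print(disk_moves(0, 9950))           # (99, 50): 99 track steps, 50 sectors
```

On tape the cost grows with the distance along the whole medium, whereas in this model the disk's worst case is bounded by the number of tracks plus one rotation, however much data the disk holds.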

RAMAC had two read/write heads, which were moved up or down the stack to select a disk and then in and out to select a track. Disk technology then evolved to have read/write heads for each recording surface, eliminating the delay in moving between platters. Multiple moving read/write heads enabled random data access and solved the tape I/O wait problem at a stroke. It was an enormous advance in data storage technology. Getting a disk head to move across a disk surface while staying super-close to it has been compared with flying a jumbo jet a few feet above the ground without crashing.

RAMAC provided 5MB of capacity using 6-bit characters and 50 x 24-inch disks, with both sides being recording surfaces. Disk drives rapidly became physically smaller and areal density increased so that, today, we have 4TB 4-platter drives in an enclosure roughly the size of four CD cases stacked one on top of the other.
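A little arithmetic puts those figures in perspective. The sketch below assumes that 5MB here means five million 6-bit characters, that data was spread evenly over all 100 surfaces, and that 4TB is a decimal four trillion bytes; the variable names are simply labels for this example.

```python
CHARACTERS = 5_000_000        # assumed reading of "5MB" as 6-bit characters
BITS_PER_CHARACTER = 6
DISKS = 50
SURFACES_PER_DISK = 2         # both sides of each disk record data

total_bits = CHARACTERS * BITS_PER_CHARACTER    # raw bits stored
equivalent_bytes = total_bits // 8              # expressed in 8-bit bytes
surfaces = DISKS * SURFACES_PER_DISK
chars_per_surface = CHARACTERS // surfaces      # average, assuming even spread

MODERN_DRIVE_BYTES = 4 * 10**12                 # 4TB, decimal terabytes
ratio = MODERN_DRIVE_BYTES / equivalent_bytes

print(equivalent_bytes)     # 3750000
print(chars_per_surface)    # 50000
print(round(ratio))         # 1066667
```

Under those assumptions, RAMAC's 50 disks held the equivalent of 3.75 million modern bytes, and a single 4TB drive holds roughly a million times as much.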

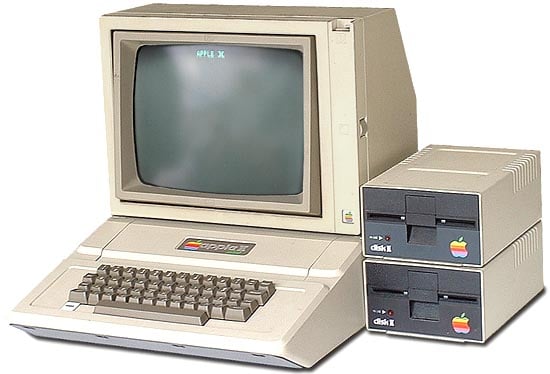

A key development was the floppy disk, a single bendable platter held in an enclosure and inserted into a floppy disk drive. It was a throwback to tape days in that respect, but floppies were cheap, so cheap. Personal computer pioneers seized on them for data storage, but they were only a stopgap: once the 3.5-inch hard disk drive format and bay, and the SCSI (Small Computer System Interface) access protocol, had been crafted, PC and workstation owners wanted hard drives for their superior data access speed, reliability and capacity.

Apple II with two floppy disk drives

The 3.5-inch format became supreme and is found in all computers today: mainframes, servers, workstations and desktop computers.

The incredible rise in PC ownership drove disk manufacturing expansion, supplemented by the rise of network disk storage arrays. Applications in servers were fooled into thinking they were accessing local, directly-attached disks, when in fact they were reading and writing data on a shared disk drive array.

These arrays were accessed either through file protocols (filers, or NAS) or, by applications such as databases, as raw disk blocks (SAN arrays). Typically, SANs were accessed over dedicated Fibre Channel links, whereas filers were accessed over Ethernet, the standard Local Area Network (LAN) technology.

Disk drive manufacturing became steadily more expensive as recording and read/write head technologies grew more complex. Those companies that were best at organising their component supply chains, building cost-effective and reliable products, managing their costs, and selling and marketing their products, were able to make profits and fund their operations.