
Mmmm, TOE jam: Trev shoves Intel's NICs in his bonkers test lab

If you want to impress me, make kit that 'just works'

Convergence

The newer units are Converged Network Adapters (CNAs), and the features they offer make a real difference. I'll be honest: I never really grokked the concept behind CNAs. What exactly does one of these little blighters do, and why should I care?

To figure out if these cards were worthy of the respect earned by their predecessors, I first had to figure out what makes CNAs special.

It isn't the ability to carry storage traffic. iSCSI doesn't need a "special card" to work, and neither does FCoE. So long as you have the right software, you should be able to make any network card speak storage protocols; those ancient Intel Pro/1000s work just fine with iSCSI. For a long time, I looked at this "converged networking" stuff as marketing gimmickry and stuck to caring about practical things, like "will the OS talk to the NIC?"
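That's because iSCSI is simply a byte format carried over an ordinary TCP connection (RFC 7143; targets listen on TCP port 3260 by default), so "the right software" amounts to building and parsing those bytes. As a minimal Python sketch, the code below builds the 48-byte Basic Header Segment of an iSCSI Login Request plus its key=value text data. It is not a working initiator: it starts at the operational-negotiation stage with no authentication, handles no responses and stops at login. The IQNs and ISID are hypothetical placeholders.

```python
import struct

# Login stage codes used in the CSG/NSG fields (RFC 7143).
OPERATIONAL_NEGOTIATION = 1
FULL_FEATURE_PHASE = 3
ISCSI_PORT = 3260  # well-known iSCSI target port


def build_login_request(keys: dict[str, str], isid: bytes, itt: int = 0,
                        cid: int = 0, cmd_sn: int = 0) -> bytes:
    """Build an iSCSI Login Request PDU: 48-byte header plus text data."""
    if len(isid) != 6:
        raise ValueError("ISID must be exactly 6 bytes")
    # Text keys travel as NUL-terminated "key=value" strings.
    data = b"".join(f"{k}={v}".encode("utf-8") + b"\x00" for k, v in keys.items())
    # Transit bit set; current stage = operational; next stage = full feature.
    flags = 0x80 | (OPERATIONAL_NEGOTIATION << 2) | FULL_FEATURE_PHASE
    header = struct.pack(
        ">BBBBB3s6sHIHHII16x",
        0x40 | 0x03,                   # Immediate bit + Login Request opcode
        flags,
        0x00, 0x00,                    # Version-max, Version-min
        0,                             # TotalAHSLength (no additional headers)
        len(data).to_bytes(3, "big"),  # DataSegmentLength, excluding padding
        isid,                          # 6-byte initiator session ID
        0,                             # TSIH: 0 asks for a new session
        itt,                           # Initiator Task Tag
        cid,                           # Connection ID
        0,                             # reserved
        cmd_sn,                        # CmdSN
        0,                             # ExpStatSN
    )                                  # "16x" fills the 16 reserved bytes
    padding = b"\x00" * (-len(data) % 4)  # data segment is padded to 4 bytes
    return header + data + padding


if __name__ == "__main__":
    pdu = build_login_request(
        {
            "InitiatorName": "iqn.2024-01.com.example:initiator01",  # hypothetical
            "TargetName": "iqn.2024-01.com.example:target01",        # hypothetical
            "SessionType": "Normal",
        },
        isid=bytes.fromhex("800012345600"),  # hypothetical ISID
    )
    print(len(pdu), pdu[:2].hex())
    # Any NIC with a TCP stack can carry this, e.g.:
    # socket.create_connection(("target.example", ISCSI_PORT)).sendall(pdu)
```

Nothing in there cares what the NIC is; the card only has to move TCP segments.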

The world looks a lot different when you start pushing the cards into the red all day long. My new test lab affords me the opportunity to test storage at speeds that can saturate a 10GbE link. Start slamming a non-converged NIC with that kind of traffic while adding in traditional traffic (SMB, NFS, SIP, etc.) and you can watch your latency climb. Eventually, your I/O requests may start timing out altogether. Not a good look.
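The textbook way to see why latency takes off as a link approaches saturation is the M/M/1 queue: with mean service time S and utilisation ρ, the mean time a request spends waiting plus being served is S / (1 - ρ). Real NIC queues are not M/M/1 (traffic is bursty and there are several queues per port), so this Python sketch only shows the shape of the curve, not the behaviour of any particular card; the 10 µs service time is an arbitrary assumption.

```python
def mm1_response_time(service_time_us: float, utilisation: float) -> float:
    """Mean time a request spends queued plus in service in an M/M/1 queue."""
    if not 0.0 <= utilisation < 1.0:
        raise ValueError("utilisation must be at least 0 and below 1")
    return service_time_us / (1.0 - utilisation)


if __name__ == "__main__":
    service_us = 10.0  # hypothetical per-request service time
    for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
        latency = mm1_response_time(service_us, rho)
        print(f"link {rho:.0%} busy: mean latency {latency:.0f} us")
```

Going from 90 to 99 per cent busy multiplies mean latency by ten in this model, which is why mixing bulk storage traffic with everything else on one undifferentiated queue hurts.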

What CNAs bring to the table is a limited form of layer-3 awareness and traffic manipulation, coupled with expanded offloads that neither burden the host CPU nor depend on the operating system.

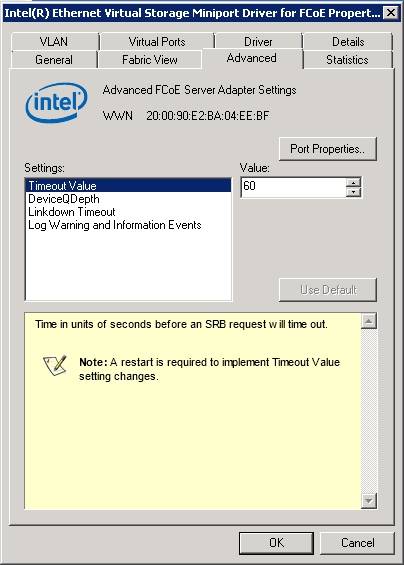

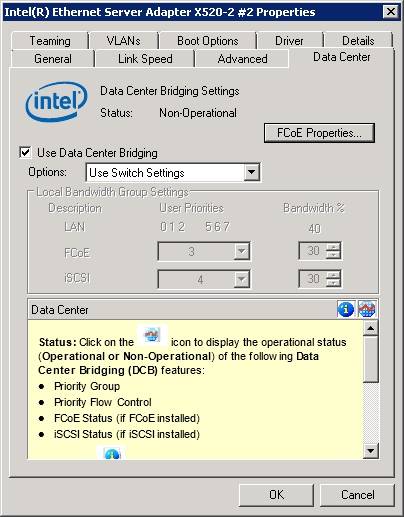

FCoE or iSCSI traffic can be tagged for QoS throughout your network (assuming your switching infrastructure plays ball). Intel's CNAs can also guarantee a minimum amount of bandwidth to a specific traffic class. Do you need iSCSI to always have 40 per cent of the available bandwidth? Just poke the settings and you're good.
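Bandwidth guarantees like that are what IEEE 802.1Qaz Enhanced Transmission Selection (ETS) describes: each traffic class is assigned a percentage of the link, and a class that isn't using its share lends it to the others. The Python sketch below models that behaviour as weighted max-min sharing. It illustrates the idea only; it is not Intel's transmit scheduler (which arbitrates frames in hardware rather than neat per-second demands), and the class names and percentages are hypothetical.

```python
def ets_allocate(capacity_gbps: float, shares: dict[str, int],
                 demand_gbps: dict[str, float]) -> dict[str, float]:
    """Split link capacity between traffic classes, ETS-style.

    Every class with traffic is guaranteed its percentage share of the link;
    bandwidth a class doesn't need is handed to the classes that are still
    busy, in proportion to their shares (weighted max-min fairness).
    """
    if sum(shares.values()) != 100:
        raise ValueError("ETS shares must add up to 100 per cent")
    alloc = {tc: 0.0 for tc in shares}
    busy = {tc for tc in shares if demand_gbps.get(tc, 0.0) > 0}
    remaining = capacity_gbps
    while remaining > 1e-9 and busy:
        weight = sum(shares[tc] for tc in busy)
        if weight == 0:
            break  # only zero-share classes are left wanting bandwidth
        # Offer each busy class a slice of what's left, weighted by its share.
        offers = {tc: remaining * shares[tc] / weight for tc in busy}
        remaining = 0.0
        for tc, offer in offers.items():
            take = min(offer, demand_gbps[tc] - alloc[tc])
            alloc[tc] += take
            remaining += offer - take  # unused slice goes back in the pot
            if demand_gbps[tc] - alloc[tc] <= 1e-9:
                busy.discard(tc)
    return alloc


if __name__ == "__main__":
    shares = {"iscsi": 40, "lan": 50, "mgmt": 10}  # hypothetical policy
    # Everything busy: iSCSI still gets at least 40 per cent of 10 Gbit/s.
    print(ets_allocate(10.0, shares, {"iscsi": 10.0, "lan": 10.0, "mgmt": 0.5}))
    # LAN quiet: iSCSI soaks up the bandwidth the other classes aren't using.
    print(ets_allocate(10.0, shares, {"iscsi": 10.0, "lan": 2.0}))
```

The key property is that the percentage is a floor, not a cap: a guaranteed class never drops below its share when it has traffic, but it isn't stopped from using idle capacity.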

Want to control the NICs from afar, or stuck with an OS that doesn't make configuring these aspects of the driver easy? Not a problem: the Intel NICs default to prioritising packets based upon the settings provided by the switch. This is the whole Data Center Bridging (DCB) concept, and it works far better than I would have believed.
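Within DCB, that hand-off is done by DCBX (the Data Center Bridging Exchange protocol, carried in LLDP): a port that advertises itself as "willing" adopts the configuration of a peer that isn't. A simplified Python sketch of that decision follows. Real DCBX negotiates ETS, PFC and application priority separately, each with its own Willing bit, and defines a rule for when both ends are willing; this sketch collapses the features into one record and does not model that tie-break. The traffic-class values are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class DcbConfig:
    willing: bool                   # DCBX "Willing" bit advertised by this port
    ets_shares: tuple[int, ...]     # bandwidth percentage per traffic class
    pfc_priorities: frozenset[int]  # 802.1p priorities with PFC enabled


def operational_config(local: DcbConfig, peer: Optional[DcbConfig]) -> DcbConfig:
    """Decide which DCB settings the local port actually runs with."""
    if peer is None:
        return local  # no DCBX-capable peer: keep local settings
    if local.willing and not peer.willing:
        # Willing NIC, non-willing switch: adopt the switch's settings.
        return replace(peer, willing=local.willing)
    # Simplification: the standard's tie-break for two willing ends
    # is not modelled here; the port just keeps its own settings.
    return local


if __name__ == "__main__":
    switch = DcbConfig(False, (40, 50, 10), frozenset({3}))  # hypothetical
    nic = DcbConfig(True, (100,), frozenset())               # NIC defaults
    print(operational_config(nic, switch))
```

Configuring the switch as the non-willing side is what lets one set of switch settings drive every willing NIC plugged into it.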

Better than good enough

Back in the bad old days of Windows Server 2003, one of the very first things a systems administrator did when they got a new server was disable any TCP Offload Engine (TOE) settings in the network cards. Early TOE implementations caused problems at the OS level, at the driver level and, with some vendors, at the NIC level.

It would be a lie to say that I never had TOE issues with Intel cards, but they have always given me far less trouble than others. These new NICs seem quite capable of carrying the torch: they have worked in every system I've slotted them into. They provide not just bare-bones functionality, but a wide range of features that seem to "just work."

I can sustain 1,200 MiB/sec out of these cards using iSCSI. That's within the error bars of the fastest storage I can build, and they manage it without any noticeable impact on the host CPU. They tag and guarantee traffic, and they do all of this without tinkering, Googling, getting inventive with language or even deploying blunt objects.
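For comparison, the Python below works out what a single 10GbE port can carry, in the same MiB/s units, both raw and after per-frame Ethernet, IPv4 and TCP overhead. It assumes full-size frames, no VLAN tag and no TCP options; DCB priority tagging adds a 4-byte 802.1Q tag per frame, and iSCSI's own PDU headers take a little more, so real-world ceilings sit slightly lower still.

```python
MIB = 1024 * 1024  # bytes per mebibyte

# Per-frame wire overhead, in bytes, on top of the MTU-sized IP packet.
PREAMBLE_SFD, ETH_HEADER, FCS, INTERFRAME_GAP = 8, 14, 4, 12
IPV4_HEADER, TCP_HEADER = 20, 20  # assumes no IP or TCP options


def line_rate_mib_per_s(gbit_per_s: float = 10.0) -> float:
    """Raw data rate of an Ethernet link, converted to MiB/s."""
    return gbit_per_s * 1e9 / 8 / MIB


def tcp_payload_ceiling_mib_per_s(mtu: int, gbit_per_s: float = 10.0) -> float:
    """Best-case TCP payload rate when every frame is full-size."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    on_wire = mtu + PREAMBLE_SFD + ETH_HEADER + FCS + INTERFRAME_GAP
    return line_rate_mib_per_s(gbit_per_s) * payload / on_wire


if __name__ == "__main__":
    print(f"10GbE raw: {line_rate_mib_per_s():.1f} MiB/s")
    for mtu in (1500, 9000):
        print(f"TCP payload, MTU {mtu}: {tcp_payload_ceiling_mib_per_s(mtu):.1f} MiB/s")
```

Jumbo frames matter here mainly because they amortise the fixed 78 bytes of per-frame header and gap overhead over far more payload.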

Intel's networking team hasn't let me down with its latest cards. Intel's ARK is one of the better product information websites our industry has built, and the networking team seems set to keep working with nerds the world over to ensure that these cards work wherever they need to. Here's hoping they keep it up for another 10 years. ®