
AMD aims at big data crunchers with SeaMicro SM15000

'Petabytes are everywhere'

Earlier this week, ahead of the kickoff of the Intel Developer Forum, cheeky AMD launched its next generation of microservers sporting both Intel and AMD chips, along with a revamped SM15000 chassis that links storage arrays directly into the "Freedom" interconnect fabric at the heart of the systems built by SeaMicro, the company AMD acquired earlier this year.

So how good is this interconnect at supporting storage, and how does it stack up running big-data jobs against traditional x86 clusters and storage?

"For the server community, storage was an afterthought," explained Andrew Feldman, cofounder of SeaMicro and now general manager of AMD's Data Center Solutions Group that has coalesced around the $334m acquisition of SeaMicro from earlier this year. "We just hung as many disks as we could off the server. We built servers that did not have any flexibility in their compute-to-storage ratio and that could not fully utilize disks."

And perhaps more importantly, the servers themselves were not architected for the radical change that has been going on in corporate applications for the past two decades since the internet became commercialized.

In the past, Feldman said, applications were pointed at employees, and systems in the data center supported relatively few users, generally hundreds to thousands. The applications did a complex set of work, but had simple and predictable traffic patterns within the data center and across the north-south axis that linked the data center out to end users over the network. The workloads were predictable, often batch-oriented, and the data sets were relatively small.

Fast forward to now, and your applications are pointed not at users inside the firewall, but outward, at thousands to millions of customers.

The applications themselves are myriad, each often with relatively simple compute needs, but end users can hit a great many of them. And the traffic between interconnected apps running across a cluster of servers is unpredictable and bursty, cutting east-west across networks and racks of servers and storage as much as it flows north-south, pumping data out of a system to the outside world through the firewall.

In addition, the volume, variety, and velocity of the data that applications generate, and that companies want to hoard for future analysis and correlation, are enormous.

"Petabytes of data used to be rare, uncommon," said Feldman. "Now, petabytes are everywhere."

SeaMicro was founded on the premise that servers are underutilized, and that gangs of microservers using relatively wimpy processors, linked by a fancy-schmancy and ultrafast interconnect, are therefore better suited to modern applications.

If server utilization is low, so is disk utilization, which ranges between 20 and 40 per cent on NAS and SAN arrays, according to Feldman. And while that beats average server utilization, disk utilization (in terms of both capacity and I/O operations per second) is still too low, considering the high cost of disk storage.
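To put Feldman's 20 to 40 per cent figure in money terms, here is a back-of-the-envelope sketch. It covers capacity only (not IOPS) and simply assumes that every terabyte of raw disk is paid for whether or not it holds data; the function name is ours, for illustration.

```python
def raw_tb_per_used_tb(utilization: float) -> float:
    """Raw terabytes purchased for every terabyte actually holding data."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return 1 / utilization


# The 20 to 40 per cent range Feldman quotes for NAS and SAN arrays
for pct in (20, 40):
    ratio = raw_tb_per_used_tb(pct / 100)
    print(f"{pct}% utilized: {ratio:.1f} TB bought per TB used")
```

At those rates you are buying somewhere between 2.5 and 5 raw terabytes for every terabyte you actually use.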

Directly attaching storage to server nodes is simple, but it is inflexible. The alternatives are no better: using Ethernet connectivity and switching to build NAS arrays, or Fibre Channel switches to build SANs, creates storage networks that are as expensive and complex as the server networks.

The AMD SeaMicro SM15000 server-storage cluster

By stretching its Freedom 3D mesh/torus interconnect from the server nodes in the SM15000 chassis out to external disk arrays, AMD wants the SeaMicro box to look and smell like DAS to each node in the cluster, which keeps it easy to manage, while still offering the data-sharing attributes of a SAN or NAS.

As El Reg previously reported, the SM15000 chassis has 64 virtualized disk drives that are already shared by the 64 server-node cards inside the chassis.

The ASIC at the heart of the Freedom interconnect, which also does load balancing across the nodes and virtualizes network links between the nodes inside the chassis (so they can share work) and to the outside world (through Gigabit and 10 Gigabit Ethernet links), has been extended to allow for the attachment of up to sixteen disk enclosures. The largest of the enclosures has 84 disk drives, and with the 64 in the chassis, that allows the Freedom interconnect to see up to 1,408 disks.
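The 1,408-disk ceiling is straightforward arithmetic: sixteen of the largest 84-drive enclosures plus the 64 drives in the chassis. A quick check, using only the figures quoted above (the constant and function names are illustrative):

```python
INTERNAL_DISKS = 64               # virtualized drives inside the SM15000 chassis
MAX_ENCLOSURES = 16               # disk enclosures the Freedom ASIC can attach
DISKS_PER_LARGEST_ENCLOSURE = 84  # the biggest enclosure on offer


def max_visible_disks(enclosures: int = MAX_ENCLOSURES,
                      disks_per_enclosure: int = DISKS_PER_LARGEST_ENCLOSURE) -> int:
    """Total drives the fabric can see for a given number of attached enclosures."""
    if not 0 <= enclosures <= MAX_ENCLOSURES:
        raise ValueError(f"between 0 and {MAX_ENCLOSURES} enclosures supported")
    return INTERNAL_DISKS + enclosures * disks_per_enclosure


print(max_visible_disks())  # 64 + 16 * 84
```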

The way it works, Feldman explained to El Reg, is that the same fabric extension ports that allowed the Freedom fabric to virtualize and create the Ethernet links were converted into SAS disk channel extenders that provide multiple links into the 3D mesh/torus interconnect. The disk drawers link to the fabric over this SAS pipe and have no idea they are not talking directly to the server.

The same disk virtualization electronics on the SeaMicro ASIC that simulated DAS drive links between server nodes and individual disks (or RAID arrays of disks shared by multiple nodes) were extended to create links between the nodes and the external disk arrays that AMD created for the SeaMicro box.
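AMD has not published how the ASIC keeps track of which node sees which drive, so what follows is a purely hypothetical toy model of the idea: each node asks for its "local" disk by index, and a lookup table resolves that to a physical drive either in the chassis or in an external drawer, with more than one node able to point at the same drive. The class names, location labels, and table layout are all our own inventions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalDisk:
    location: str  # hypothetical labels: "chassis" or "drawer-<n>"
    slot: int


class FabricDiskMap:
    """Toy model: nodes see numbered 'local' disks that the fabric maps to real drives."""

    def __init__(self) -> None:
        self._table: dict[tuple[int, int], PhysicalDisk] = {}

    def attach(self, node: int, virtual_index: int, disk: PhysicalDisk) -> None:
        # The node only ever sees virtual_index; it never learns where the disk lives.
        self._table[(node, virtual_index)] = disk

    def resolve(self, node: int, virtual_index: int) -> PhysicalDisk:
        return self._table[(node, virtual_index)]

    def sharers(self, disk: PhysicalDisk) -> list[int]:
        """Nodes that have this physical drive mapped in (shared storage)."""
        return sorted({n for (n, _), d in self._table.items() if d == disk})


fabric = FabricDiskMap()
fabric.attach(0, 0, PhysicalDisk("chassis", 0))
fabric.attach(0, 1, PhysicalDisk("drawer-3", 17))
fabric.attach(5, 0, PhysicalDisk("drawer-3", 17))  # same drive, different node
print(fabric.resolve(0, 1), fabric.sharers(PhysicalDisk("drawer-3", 17)))
```

The point of the model is the indirection: because nodes only hold virtual indexes, drives in an external drawer look no different from drives in the chassis.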

The ASIC is in effect simulating a SAS disk controller, with optional RAID 0, 1, 5, and 6 data protection across groups of disks. Each of the eight I/O modules in the SM15000 machine has two 10GbE ports and two SAS fabric extender ports, which is how you get sixteen 10GbE ports and sixteen drawers coming out of the chassis.
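For a sense of what those RAID levels cost in capacity, here is a sketch using the textbook definitions: RAID 0 stripes with no redundancy, RAID 1 mirrors (treated here as an n-way mirror), RAID 5 gives up one disk's worth to parity, and RAID 6 gives up two. AMD has not said how the ASIC groups disks or what minimum group sizes it enforces, so the minimums below are the conventional ones and are an assumption. The port count from the I/O modules is included as well.

```python
IO_MODULES = 8
PORTS_PER_MODULE = {"10GbE": 2, "SAS extender": 2}
ports = {kind: IO_MODULES * n for kind, n in PORTS_PER_MODULE.items()}

# Conventional minimum group sizes (assumption; not from AMD)
MIN_DISKS = {0: 2, 1: 2, 5: 3, 6: 4}


def raid_usable_tb(level: int, disks: int, disk_tb: float) -> float:
    """Usable capacity of one RAID group, ignoring formatting overhead."""
    if level not in MIN_DISKS:
        raise ValueError(f"unsupported RAID level {level}")
    if disks < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks")
    if level == 0:
        return disks * disk_tb        # striping only, no redundancy
    if level == 1:
        return disk_tb                # every disk holds a full mirror copy
    if level == 5:
        return (disks - 1) * disk_tb  # one disk's worth of parity
    return (disks - 2) * disk_tb      # RAID 6: two disks' worth of parity


print(ports)
for level in (0, 1, 5, 6):
    print(f"RAID {level}: {raid_usable_tb(level, 12, 4.0):.0f} TB usable from 12 x 4TB")
```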

With the fattest 5U drawers, which hold 336TB of raw capacity in 84 3.5-inch disk drives of 4TB each, a fully loaded SM15000 gives you 5.37PB of external disk capacity that nonetheless looks like it is inside the box because of the fabric extension. The server nodes will see it as internal disk, just like those 64 other drives. A fully loaded 5U drawer will run you $83,000.

If you want a 2U drawer that supports 48TB of disk, and therefore 768TB across the sixteen virtual SAS ports, these cost $19,000 a pop. AMD is subcontracting the manufacturing of these arrays to unspecified ODMs, and is multisourcing the disk and flash drives that can slide into the 2.5-inch or 3.5-inch drive bays available in the different array form factors.
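Putting the two drawer options side by side, using only the capacities and list prices quoted above (decimal units, so 1PB = 1,000TB), shows both the 5.37PB and 768TB totals and a rough price per raw terabyte. The per-terabyte figure is our own division and excludes the SM15000 chassis itself.

```python
from dataclasses import dataclass

SAS_EXTENDER_PORTS = 16  # one drawer per SAS fabric extender port


@dataclass(frozen=True)
class Drawer:
    name: str
    raw_tb: int
    price_usd: int


def fully_loaded_pb(d: Drawer) -> float:
    """Raw capacity with a drawer on every extender port, in decimal PB."""
    return d.raw_tb * SAS_EXTENDER_PORTS / 1000


def usd_per_raw_tb(d: Drawer) -> float:
    return d.price_usd / d.raw_tb


drawers = [
    Drawer("5U, 84 x 4TB", 84 * 4, 83_000),
    Drawer("2U", 48, 19_000),
]
for d in drawers:
    print(f"{d.name}: {fully_loaded_pb(d):.3f} PB across 16 drawers, "
          f"${usd_per_raw_tb(d):,.0f} per raw TB")
```

The fat 5U drawer works out to roughly $247 per raw terabyte against roughly $396 for the 2U unit, which is the usual reward for buying density.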

While Feldman did not give out any precise feeds and speeds on how many I/O operations per second the Freedom fabric, which has a bisection bandwidth of 1.28Tb/sec, could handle, he told El Reg that with flash drives installed the SM15000 would be able to "push IOPS that will make the system look better than flash arrays," and that there was "plenty of headroom to spare today" with the Freedom interconnect.
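Since AMD gave no IOPS figure, the best we can do is a crude order-of-magnitude ceiling: pretend every bit crossing the fabric's 1.28Tb/sec bisection is I/O payload in 4KiB chunks. That ignores SAS link limits, protocol overhead, drive speed, and the fact that bisection bandwidth measures the narrowest cut of the network rather than total throughput, so treat it as an illustration of "headroom", not a prediction. The 4KiB I/O size is our assumption.

```python
BISECTION_TBPS = 1.28  # Freedom fabric bisection bandwidth, terabits per second


def naive_iops_ceiling(io_size_bytes: int, bisection_tbps: float = BISECTION_TBPS) -> float:
    """Upper bound if the entire bisection carried nothing but I/O payload."""
    bytes_per_second = bisection_tbps * 1e12 / 8  # bits -> bytes
    return bytes_per_second / io_size_bytes


for size in (4096, 65536):
    print(f"{size // 1024} KiB I/Os: at most {naive_iops_ceiling(size):,.0f} IOPS")
```

At 4KiB that comes to about 39 million IOPS, far beyond what a few hundred drives could generate, which is at least consistent with Feldman's headroom claim.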

Feldman also said that AMD recognizes that it needs to continue to support existing SeaMicro customers who have been using Intel Atom and Xeon nodes up until now, even as it gets ready to ship its "Piledriver" Opteron node in November alongside a new "Ivy Bridge" Xeon E3-1200 v2 node that will be available at the same time.

Going forward, AMD is committed not only to making SeaMicro technology available for sale to server OEM customers, much as it sells chipsets and processors today, but also to offering many generations of AMD and Intel processors inside the compute nodes.

When asked at IDF about AMD's forthcoming Ivy Bridge nodes, Diane Bryant, general manager of Intel's Data Center and Connected Systems group, laughed and said that Intel worked hard to get Intel Atom and Xeon chips into the SeaMicro machines before the AMD acquisition and that "we love all customers."

AMD might have been thinking it could mothball the SeaMicro server biz and ramp up the SeaMicro tech biz – that was certainly the impression that AMD's top brass gave us when the deal was done earlier this year – but selling the SeaMicro technology is going to take time, particularly with Intel making so much noise about server interconnects in the past year.

"I think we're going to be in both for a long time," Feldman said, referring to the raw-component and full-system businesses.

Stack it up

So how does the new SM15000 with those future Opteron processor nodes and fabric-extended disk arrays stack up against a traditional rack of servers to run something like a Hadoop big data workload? Here's Feldman's math on it:

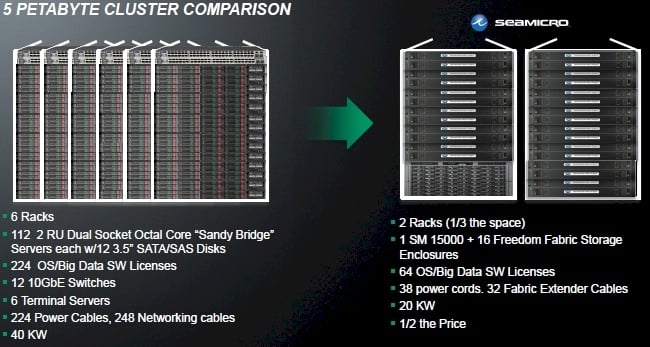

The big data comparison, SeaMicro versus traditional servers

Assume you want 5PB of storage and enough machines to drive it. On the traditional cluster side, start with the current eight-core "Sandy Bridge" Xeon E5 processors. You'll buy 112 2U rack servers, each with a dozen 3.5-inch disks. You'll need 224 operating system and Hadoop licenses, and the same number of power cables and network cables. You'll also need six terminal servers and a dozen 10GbE top-of-rack switches.

This will eat up six racks in the data center, and you will have a lot more compute power than you might otherwise need, at 1,792 cores. (Hadoop is more I/O intensive than it is compute intensive.) This setup will burn about 40 kilowatts.
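The 1,792-core figure only adds up if each 2U box is a two-socket machine (112 × 2 × 8), and the article does not give the drive size for the traditional servers. The sketch below assumes two sockets per server and the same 4TB drives as the SeaMicro drawers; both are assumptions, flagged in the code.

```python
SERVERS = 112
DISKS_PER_SERVER = 12
CORES_PER_SOCKET = 8     # eight-core "Sandy Bridge" Xeon E5
SOCKETS_PER_SERVER = 2   # assumption: two-socket 2U servers
DISK_TB = 4              # assumption: 4TB drives, as in the SeaMicro drawers


def traditional_cluster() -> dict:
    disks = SERVERS * DISKS_PER_SERVER
    return {
        "cores": SERVERS * SOCKETS_PER_SERVER * CORES_PER_SOCKET,
        "disks": disks,
        "raw_pb": disks * DISK_TB / 1000,  # decimal petabytes
    }


print(traditional_cluster())
```

Under those assumptions the 112 servers carry 1,344 drives and about 5.4PB raw, which squares with the 5PB target.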

Now, on the SeaMicro side, you'll have a single SM15000 using the eight-core Opteron nodes for a total of 512 cores across the 64 nodes in the system. You will need only 64 operating system and 64 Hadoop licenses.

You'll add sixteen disk enclosures to get those 1,408 drives across the system and a total of 5.6PB of raw capacity. You'll need 38 power cables and 32 Freedom fabric extender cables. The machine will take up only two racks (one third of the space), draw only 20 kilowatts of juice (half the power), and cost half as much dough.
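Feldman's comparison boils down to a handful of ratios. This sketch plugs in the quoted core counts, racks, and power draw; the raw capacities assume 4TB drives throughout (1,408 drives for SeaMicro, 1,344 for the traditional cluster), which the article states only for the SeaMicro drawers. Cost is left out because AMD gave only "half as much".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterConfig:
    name: str
    cores: int
    racks: int
    kilowatts: float
    raw_tb: int  # assumes 4TB drives in both configurations


traditional = ClusterConfig("112 x 2U Xeon E5", 1792, 6, 40, 1344 * 4)
seamicro = ClusterConfig("SM15000 + 16 drawers", 512, 2, 20, 1408 * 4)


def ratio(attr: str) -> float:
    """SeaMicro value as a fraction of the traditional cluster's."""
    return getattr(seamicro, attr) / getattr(traditional, attr)


def tb_per_kw(c: ClusterConfig) -> float:
    return c.raw_tb / c.kilowatts


for attr in ("cores", "racks", "kilowatts"):
    print(f"{attr}: SeaMicro uses {ratio(attr):.2f}x")
for c in (traditional, seamicro):
    print(f"{c.name}: {tb_per_kw(c):.1f} raw TB per kW")
```

On these numbers the SeaMicro setup stores roughly twice as much raw disk per kilowatt, while running with under 30 per cent of the cores, which is the bet Feldman is making on Hadoop being I/O-bound.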

It will be interesting when AMD runs some benchmarks to prove the performance of such machines is equivalent. TPC-H and Hadoop benchmark tests seem to be in order, at a minimum. ®