3D processor-memory mashups take center stage

'I have seen the future, and it is stacked'

How low can you go?

The University of Michigan's "Centip3De: A 3930DMIPS/W configurable near-threshold 3D stacked system with 64 ARM Cortex-M3 cores": The UoM's oh-so-cutely named Centip3De takes 3D chippery in a different direction – that of near-threshold computing (NTC).

NTC's focus is not to crank up processors with a boatload of juice in order to get their transistors switching at high frequencies, but just the opposite: to use just enough power to carry them over their operating-voltage threshold.

The advantage of NTC is clear: less power consumption – especially important if you're stacking compute and memory layers in the same chip, and don't want to watch the entire assemblage melt before your very eyes.

The disadvantage is equally clear: at low voltages, transistors switch slowly. However, if you have a large number of transistors in a large number of compute cores working on a highly parallelized workload, the voltage-supply math can work in your favor.
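That math can be sketched with the textbook first-order rule that dynamic switching energy scales with the square of the supply voltage. The voltages, clock rates, and the single fast core used for comparison below are illustrative assumptions, not Centip3De measurements, and leakage is ignored entirely.

```python
# First-order model: dynamic energy per operation scales with V^2.
# All numbers are illustrative assumptions, not figures from the Centip3De paper.

def relative_energy_per_op(v, v_ref):
    """Dynamic energy per operation relative to a reference voltage (E ~ C * V^2)."""
    return (v / v_ref) ** 2

def aggregate_throughput(cores, mhz_per_core):
    """Aggregate clock throughput in MHz, assuming a perfectly parallel workload."""
    return cores * mhz_per_core

# Hypothetical design points: one fast core at full voltage versus
# many slow cores running near threshold.
fast = {"cores": 1, "mhz": 400, "volts": 1.2}
slow = {"cores": 64, "mhz": 10, "volts": 0.6}

energy_ratio = relative_energy_per_op(slow["volts"], fast["volts"])
fast_mhz = aggregate_throughput(fast["cores"], fast["mhz"])
slow_mhz = aggregate_throughput(slow["cores"], slow["mhz"])

print(f"energy per op, slow vs fast: {energy_ratio:.2f}x")
print(f"aggregate throughput: fast {fast_mhz} MHz, slow {slow_mhz} MHz")
```

In this toy model the many-core configuration spends a quarter of the energy per operation while offering more aggregate clock cycles, but only if the workload really does spread across all the cores.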

"By running at a lower voltage, we can have a higher energy efficiency and we can regain some of that performance loss by having many layers of silicon," UoM PhD student David Fick told his ISSCC audience.

One problem with NTC is that the ideal – most efficient – operating voltage for a compute core is lower than that required for its associated cache memory. The Centip3De solves this problem by running the cache memory at four times the clock of the compute cores – but cleverly clusters four cores per cache unit, and manages the cache distribution among them.

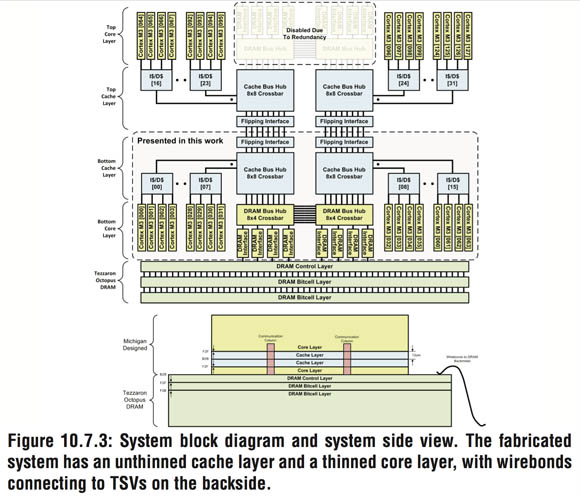

The current Centip3De is a two-layer prototype, but the team plans a seven-layer future

For example, if each core is running at 10MHz, as Fick showed in one example, the cache could run at 40MHz. The cores each see a single L1 cache, and the clustering allows them to share it at their own core operating frequency with single-cycle latency.
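The clock arithmetic in that example can be sketched as a simple time-multiplexing model. The round-robin slot order and the function names here are assumptions made for illustration; the talk as reported does not describe how the real arbitration is implemented.

```python
# Sketch: a cache clocked at 4x the core frequency serving four cores in
# round-robin slots. The slot order is an assumption made for illustration.

CORES_PER_CLUSTER = 4
CORE_MHZ = 10
CACHE_MHZ = CORE_MHZ * CORES_PER_CLUSTER  # 40MHz, as in Fick's example

def cache_slot_owner(cache_cycle):
    """Return which core is served on a given cache cycle (round-robin)."""
    return cache_cycle % CORES_PER_CLUSTER

def slots_per_core(cache_cycles):
    """Count how many cache slots each core gets over a span of cache cycles."""
    counts = [0] * CORES_PER_CLUSTER
    for cycle in range(cache_cycles):
        counts[cache_slot_owner(cycle)] += 1
    return counts

# One core cycle at 10MHz spans four cache cycles at 40MHz,
# so every core gets one cache slot per core cycle.
cache_cycles_per_core_cycle = CACHE_MHZ // CORE_MHZ
print(slots_per_core(cache_cycles_per_core_cycle))
```

Because every core gets one slot in each of its own clock cycles, the shared cache looks to each core like a private, single-cycle L1.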

What's more, the Centip3De's cache design also allows one core to take over more cache space, should it need it, as long as another core's cache space could be reduced. There could conceivably be core data conflicts within the cluster, but Fick says that their team's architectural simulations had shown that "this was not a dominant effect."

In addition, cores could be entirely shut down – dynamically, of course – and their power could be passed to another core, thus increasing its frequency. You could, for example, have four cores in a cluster running at 10MHz each, or one at 40MHz, depending upon the needs of the workload. Entire clusters can be shut down, as well, and their power shunted to adjacent clusters.
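As a rough sketch of that trade, a cluster can be treated as having a fixed clock budget that is split among whichever cores stay awake. The even split and the two-core case are assumptions for illustration; the article gives only the four-core and one-core examples, and real silicon will not trade power for frequency this linearly.

```python
# Sketch: a cluster's clock budget divided evenly among its active cores.
# The even split is a simplifying assumption, not the chip's actual policy.

CLUSTER_BUDGET_MHZ = 40  # four cores at 10MHz each, or one core at 40MHz
MAX_CORES = 4

def per_core_mhz(active_cores, budget_mhz=CLUSTER_BUDGET_MHZ):
    """Frequency available to each active core when the rest are shut down."""
    if not 1 <= active_cores <= MAX_CORES:
        raise ValueError("a cluster has between 1 and 4 active cores")
    return budget_mhz / active_cores

for active in (4, 2, 1):  # the 2-core case is hypothetical
    print(f"{active} active core(s): {per_core_mhz(active):.0f}MHz each")
```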

Today's two-layer Centip3De processor and DRAM layers are of different process sizes

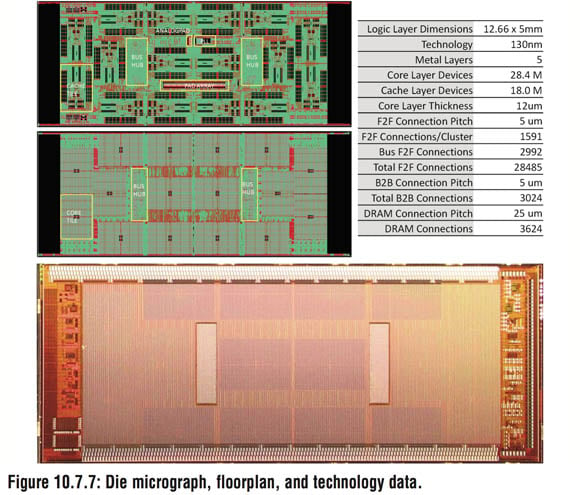

The current Centip3De chip was built using a 130nm process. The paper presented at ISSCC says that if the cores running at 10MHz in the prototype chip were baked using a 45nm SOI CMOS process, that'd translate to 45MHz per core. Fick told his audience that if the process were scaled to 32nm, those 10MHz cores could operate at 110MHz.
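For a sense of scale, the quoted projections work out as follows. The per-core clock figures are the ones reported above; the aggregate column assumes all 64 cores run at the same clock at once, which is a simplification rather than a figure from the paper.

```python
# Sketch: speedups implied by the quoted process-scaling projections.
# The aggregate figure assumes all 64 cores are active at the same clock.

PROTOTYPE_MHZ = 10   # per-core clock in the 130nm prototype
CORES = 64           # Cortex-M3 cores, per the paper's title

projected_mhz = {"45nm SOI": 45, "32nm": 110}

def speedup(mhz, baseline_mhz=PROTOTYPE_MHZ):
    """Per-core clock speedup relative to the 130nm prototype."""
    return mhz / baseline_mhz

def aggregate_mhz(mhz, cores=CORES):
    """Total core clock cycles per microsecond across the chip."""
    return mhz * cores

print(f"130nm: 1.0x per core, {aggregate_mhz(PROTOTYPE_MHZ)}MHz aggregate")
for process, mhz in projected_mhz.items():
    print(f"{process}: {speedup(mhz):.1f}x per core, {aggregate_mhz(mhz)}MHz aggregate")
```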

Those higher clock speeds would, of course, be throttled down if the compute cores were operated at near-threshold voltages.