Original URL: https://www.theregister.com/2014/08/15/feature_hack_proof_computing_and_the_demise_of_security/

Hackers' Paradise: The rise of soft options and the demise of hard choices

How it all went wrong for computer security

Posted in Personal Tech, 15th August 2014 09:04 GMT

Opinion John Watkinson argues that the ubiquity of hacking and malware illustrates a failure of today’s computer architectures to support sufficient security. The mechanisms needed to implement a hack-proof computer have been available for decades but, self-evidently, they are not being properly applied.

The increasing power and low cost of computers means they are being used more and more widely, and put to uses which are becoming increasingly critical. By critical, I mean that the result of a failure could be far more than inconvenience.

Recently we became aware that hackers had found it was possible to open the doors of a Tesla car. But that’s not particularly exceptional: vulnerability is becoming the norm. Self-driving cars are with us too, and who is to blame if one of these is involved in a collision? What if it transpires that it was hacked?

It is not necessary to spell out possible scenarios in which insecure computers can allow catastrophes to occur. It is, in my view, only a matter of time before something really bad happens as a result of hacking or some other cause of IT unreliability. Unfortunately, it seems that it is only after such an event that something gets done. Until then complacency seems to rule.

In the classic Von Neumann computer architecture, there is an address space, most of which is used to address memory with the remainder used to address peripherals. The salient characteristic of the Von Neumann machine is that the memory doesn’t care what is stored in it.

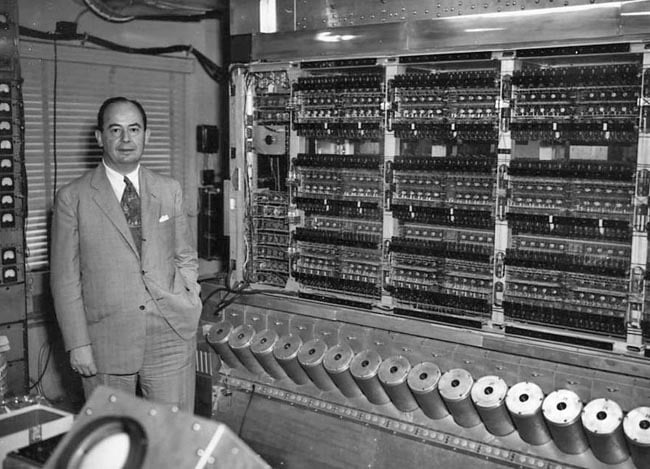

John von Neumann was an inspiration but there was no room for sloppy programming in his designs

Photo: Alan Richards, courtesy Shelby White & Leon Levy Archives Center, Institute for Advanced Study (IAS)

Memory could contain instructions, stacks, data to be processed or results. Whilst this gives maximum flexibility, it also makes the system vulnerable to inappropriate programming. One incorrect address could mean writing data on top of the instructions or the stack or sending random commands to peripherals.

Before the Orwellian term “Information Technology” had been dreamed up by someone who probably called a plumber to deal with an overflow, we had computing. Computers were relatively expensive and had to be seen as a shared resource. In that case it was even less acceptable for a bug in one user’s code to bring the whole system down. Something was included in the system to prevent that.

One of the functions of memory management was effectively to isolate users or processes from one another and from the operating system. It didn’t matter whether the bug was due to an honest mistake, incompetence or malice, it would not compromise the whole system. The proliferation of hacking suggests that we are today forced to assume that malice will take place, rather than being surprised or disappointed after the event.

The cost of a CPU is largely a function of the word length, so there was pressure to keep the word length down to the precision needed for most jobs. On the odd occasion where this was not enough, double precision could be used, with the processor taking two swipes at a longer data word that resided in a pair of memory locations.
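As a rough illustration of those "two swipes", here is a minimal Python sketch of double-precision addition on a machine assumed to have 16-bit words. The helper names `add_double`, `split` and `join` are hypothetical, not taken from any real instruction set: the low words are added first, and any carry out of them is fed into the addition of the high words.

```python
WORD_BITS = 16                     # assumed word length of the CPU
WORD_MASK = (1 << WORD_BITS) - 1   # 0xFFFF

def add_double(a_hi, a_lo, b_hi, b_lo):
    """Add two 32-bit values, each held as a (high, low) pair of 16-bit words."""
    lo_sum = a_lo + b_lo
    carry = lo_sum >> WORD_BITS               # 1 if the first swipe overflowed
    lo = lo_sum & WORD_MASK
    hi = (a_hi + b_hi + carry) & WORD_MASK    # second swipe; overflow past 32 bits is dropped
    return hi, lo

def split(value):
    """Store a 32-bit value in a pair of 16-bit memory locations."""
    return (value >> WORD_BITS) & WORD_MASK, value & WORD_MASK

def join(hi, lo):
    """Recombine a word pair into one integer for inspection."""
    return (hi << WORD_BITS) | lo

result = join(*add_double(*split(0x0001FFFF), *split(0x00000001)))  # 0x20000
```

The carry is the only thing linking the two halves, which is why a narrow CPU can still do wide arithmetic, just more slowly.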

The processor word length also limited the address range the processor could directly generate. As memory costs began to fall, it became possible to afford more memory than the CPU could directly address. This would be an ongoing phenomenon and another function of memory management would be to expand the address range.

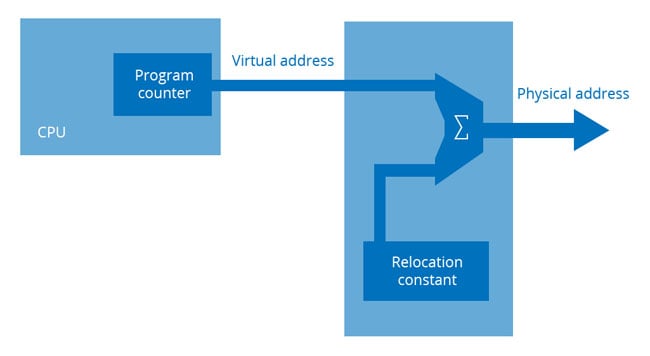

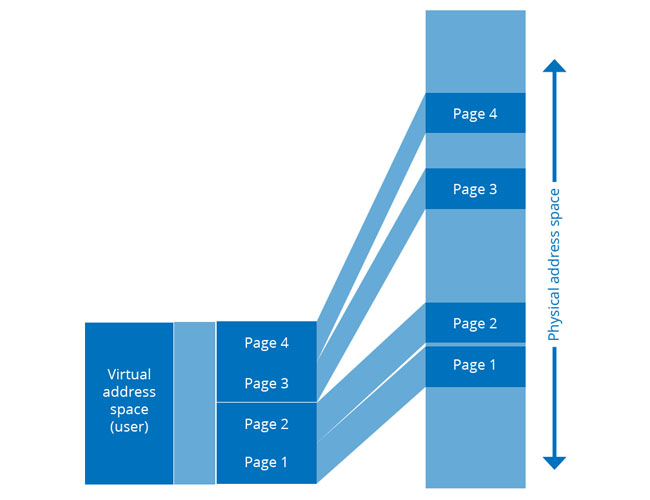

Fig.1, below, shows a minimal memory management system. The memory management unit (MMU) fits between the address bus of the CPU and the main memory bus. The MMU has some registers that are in peripheral address space. The operating system can write these registers with address offsets, also known as relocation constants.

Fig.1: A simplified memory management system – the program counter in the CPU no longer addresses memory directly, but produces a virtual address which enters the MMU. A relocation constant is added to the virtual address to create the physical address in memory

The relocation constant is added to the address coming out of the CPU, known as the virtual address, in order to create the actual RAM address, known as the physical address. The process of producing the physical address is called mapping. The term comes from cartography, where the difficulty of representing a spherical planet on flat paper inevitably caused distortion, so things weren't where you thought they were.
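The mapping in Fig.1 amounts to a single addition. The following Python sketch assumes a 16-bit virtual address from the CPU and treats the relocation constant as a plain integer the operating system has already written into the MMU; `map_address` is a hypothetical name used only for illustration.

```python
VIRTUAL_BITS = 16  # assumed width of the address leaving the CPU

def map_address(virtual, relocation):
    """Return the physical address for a virtual address, as a one-register MMU would."""
    if not 0 <= virtual < (1 << VIRTUAL_BITS):
        raise ValueError("the CPU cannot generate this address")
    # The program never sees this sum; it only ever uses the virtual address.
    return virtual + relocation

physical = map_address(0x0100, 0x20000)   # virtual 0x0100 lands at physical 0x20100
```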

Mainframe thinking

In the 1970s, mainframe computers began to give way to minicomputers. Although minicomputers were addressing a new and different market, they were architecturally descended from mainframes and retained many of the same features. The processors were still microcoded by the computer companies and thus did exactly what the designers wanted.

UNIX developers Ken Thompson and Dennis Ritchie working on a DEC PDP-11 minicomputer

In a typical 16-bit minicomputer, the address range could access 64K bytes, of which 8K of address space might be reserved for peripheral control, leaving 56K for memory. The addition of an MMU would allow 18-bit addresses to be created, giving a 256K physical address space; with the top 8K still reserved for peripherals, 248K bytes of memory would be accessible. These numbers seem small by today's standards, but the principle can be applied to memories of any size.
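The arithmetic behind those figures can be checked in a few lines of Python. The 8K peripheral region is the figure given above; the variable names are illustrative.

```python
K = 1024

cpu_bits = 16               # address width the CPU can generate
mmu_bits = 18               # address width after the MMU
peripheral_region = 8 * K   # address space reserved for peripheral control

cpu_space = 2 ** cpu_bits                          # 64K
cpu_memory = cpu_space - peripheral_region         # 56K of RAM without an MMU
physical_space = 2 ** mmu_bits                     # 256K
usable_memory = physical_space - peripheral_region # 248K of RAM with an MMU

print(cpu_space // K, cpu_memory // K, physical_space // K, usable_memory // K)
# prints: 64 56 256 248
```

Two extra address bits quadruple the physical space, while the fixed peripheral region becomes a much smaller fraction of it.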

When the code and data of multiple users or programs are held simultaneously in different areas of a large RAM, changing user or process only requires changing the relocation constant, so that the same CPU address space is mapped to a different physical space. In this way, each program or user appears to have exclusive use of a virtual memory corresponding to the entire addressing range of the CPU. Alternatively, one user could have access to significantly more memory.
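A toy model makes the point that a context switch need only reload one register. This Python sketch assumes a single relocation register and two users whose areas start at hypothetical physical addresses 0x10000 and 0x20000; `RelocatingMMU` and `switch_to` are illustrative names, not any real machine's interface.

```python
class RelocatingMMU:
    """Toy MMU holding one relocation register (hypothetical model)."""

    def __init__(self):
        self.relocation = 0

    def translate(self, virtual):
        return virtual + self.relocation

# Hypothetical physical base addresses of each user's area of RAM.
user_bases = {"A": 0x10000, "B": 0x20000}
mmu = RelocatingMMU()

def switch_to(user):
    # The operating system reloads the relocation register; nothing is copied.
    mmu.relocation = user_bases[user]

switch_to("A")
addr_a = mmu.translate(0x0100)   # user A's virtual 0x0100
switch_to("B")
addr_b = mmu.translate(0x0100)   # the same virtual address for user B
```

Both users issue the identical virtual address, yet they reach different physical memory, so neither can see the other's data.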

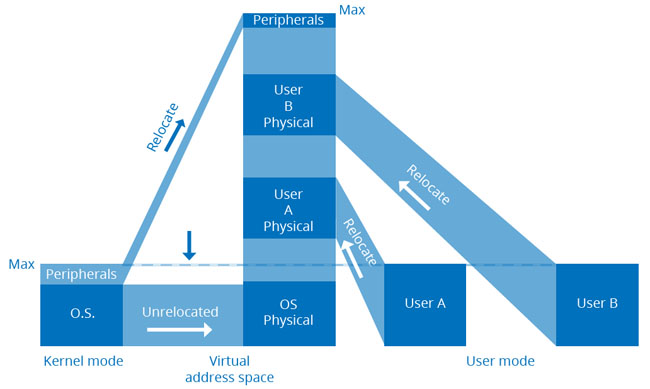

Fig.2, below, shows that peripheral addresses were typically at the top of physical address space, whereas the operating system would be resident at the bottom of physical memory, so that the machine could start up un-relocated and then configure the MMU. There would never be any ambiguity about handling conditions such as interrupts or traps, because the hardware would always force the program counter to an instruction at a constant physical location, which would be guaranteed to be in the operating system.

Fig 2: Typical minicomputer memory management system: the operating system in kernel mode is not relocated, except for the peripheral address page which is at the top of address space. Users A and B in user space both see the same unrestricted virtual address space which is relocated to different physical memory spaces. Users cannot access peripherals directly and have no access to the operating system

Since the full virtual address range of the CPU is mapped to RAM alone, it also follows that the user is unable to address peripherals. This can only be done via the operating system by submitting a request.
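The layout of Fig.2 can be sketched as two mapping rules, one per mode. This Python model assumes a 16-bit virtual space, an 18-bit physical space and an 8K peripheral page at the top of both; the constants and the `translate` and `is_peripheral` functions are hypothetical. It also assumes the operating system only assigns user areas that lie below the peripheral page.

```python
VIRTUAL_TOP = 0x10000         # 64K CPU address space
IO_PAGE_VIRTUAL = 0xE000      # top 8K of the CPU space (assumed)
IO_PAGE_PHYSICAL = 0x3E000    # top 8K of an 18-bit physical space (assumed)

def translate(virtual, mode, user_base=0):
    if not 0 <= virtual < VIRTUAL_TOP:
        raise ValueError("not a 16-bit address")
    if mode == "kernel":
        # Kernel: un-relocated, except that its top page reaches the peripherals.
        if virtual >= IO_PAGE_VIRTUAL:
            return IO_PAGE_PHYSICAL + (virtual - IO_PAGE_VIRTUAL)
        return virtual
    # User: the whole 64K is relocated into RAM, so no address reaches I/O.
    return user_base + virtual

def is_peripheral(physical):
    return physical >= IO_PAGE_PHYSICAL

kernel_io = translate(0xE000, "kernel")            # reaches the peripheral page
user_same = translate(0xE000, "user", 0x20000)     # just more RAM for the user
```

The same virtual address means "peripheral registers" to the kernel and "ordinary memory" to a user, which is why a user process can only reach a device by asking the operating system.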

In the classic time-sharing computer, apart from the operating system code area, memory held three working areas. One was currently in use by a process and being addressed by the CPU; one was being prepared for the next user by being loaded with the appropriate data from magnetic rotating memory; and a third had just dropped out of use at the end of the previous time slice. That third area would need to be written back to the rotating memory, unless it had not been changed since it was loaded.

Another function of memory management was to determine if a given memory area had been modified since it was loaded. If no modification had taken place, the memory contents still reflected what was already on the rotating memory, so no write was needed, which saved time. The system worked rather like a juggler, where the balls in the air were in rotating memory and at the end of a time slice, one would come down into RAM and another one would go up to mass storage.
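The "has it been modified?" test is what is usually called a dirty bit. Here is a minimal Python sketch, assuming a dictionary stands in for rotating memory and that every write goes through a method the simulated MMU can observe; `Frame` and `evict` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    """A page of RAM plus the 'modified' flag a (simulated) MMU maintains."""
    contents: bytearray
    dirty: bool = False

    def write(self, offset: int, value: int) -> None:
        self.contents[offset] = value
        self.dirty = True  # the MMU saw a write cycle to this page

def evict(frame: Frame, backing_store: dict, slot: int) -> bool:
    """Free a frame at the end of a time slice; return True if a write-back was needed."""
    if frame.dirty:
        backing_store[slot] = bytes(frame.contents)
        frame.dirty = False
        return True
    return False  # unchanged since loading, so the rotating-memory copy is still valid

# Usage: two frames loaded from a hypothetical backing store; only one is modified.
store = {0: bytes(4), 1: bytes(4)}
clean = Frame(bytearray(store[0]))
modified = Frame(bytearray(store[1]))
modified.write(2, 0xFF)
```

Only the modified frame costs a disk write; the clean one can simply be overwritten by the next ball coming down.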

Since the MMU sits across the system bus, there isn’t much it doesn’t know, so it can tell if a given physical memory page is being read or written. If a page that is supposed to contain code that is only to be read sees an attempted write, it can prevent it and cause a trap.
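Write protection can be modelled as a check on every write cycle. In this Python sketch a raised exception stands in for the hardware trap; the `Page` class, its `read_only` attribute and `ProtectionTrap` are hypothetical names chosen for illustration.

```python
class ProtectionTrap(Exception):
    """Stands in for the hardware trap into the operating system."""

class Page:
    def __init__(self, size, read_only=False):
        self.data = bytearray(size)
        self.read_only = read_only

    def read(self, offset):
        return self.data[offset]

    def write(self, offset, value):
        # The MMU sees that this bus cycle is a write and checks the page attributes.
        if self.read_only:
            raise ProtectionTrap(f"write to read-only page at offset {offset:#x}")
        self.data[offset] = value

code_page = Page(16, read_only=True)
data_page = Page(16)
data_page.write(0, 42)            # permitted
try:
    code_page.write(0, 0x90)      # an attempt to overwrite code
    trapped = False
except ProtectionTrap:
    trapped = True                # the write never reached memory
```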

In practice, MMUs were more complex than the simple example of Fig.1. The virtual address space from the CPU was broken into pages. These were contiguous in virtual space, but as Fig. 3 shows, there was no need for them to be contiguous in physical memory.

Fig.3: The use of page-based MMUs means that contiguous virtual address space could be non-contiguous in physical memory. Any page that has not been modified need not be written back to rotating storage before being overwritten
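A page-based MMU splits each virtual address into a page number and an offset, then looks up one relocation constant per page. This Python sketch assumes 8K pages and a hypothetical page table in which four contiguous virtual pages live in scattered physical locations; `translate_paged` is an illustrative name.

```python
PAGE_SIZE = 8192  # assumed 8K pages: eight of them cover a 16-bit address space

def translate_paged(virtual, page_table):
    """Map a virtual address through a table holding one physical base per page."""
    page, offset = divmod(virtual, PAGE_SIZE)
    return page_table[page] + offset

# Virtual pages 0..3 are contiguous; their physical homes are not (hypothetical).
page_table = [0x30000, 0x08000, 0x1C000, 0x02000]

first = translate_paged(0x0000, page_table)   # start of virtual page 0
second = translate_paged(0x2004, page_table)  # 4 bytes into virtual page 1
```

Consecutive virtual addresses 0x1FFF and 0x2000 end up tens of kilobytes apart physically, yet the program never notices.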

An MMU could have one relocation constant per page, and pages could be placed in any available physical memory space. The use of more, smaller pages meant that each write dirtied a smaller area of memory, so less data needed to be written back to rotating memory.
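The effect of page size on write-back traffic can be shown with a small calculation. This Python sketch assumes a hypothetical program that writes to four scattered addresses, and counts every byte of every page those writes touch; `bytes_to_write_back` is an illustrative name.

```python
def bytes_to_write_back(write_addresses, page_size):
    """Total bytes written back, given that any page touched by a write is dirty."""
    dirty_pages = {addr // page_size for addr in write_addresses}
    return len(dirty_pages) * page_size

# A hypothetical program that modifies a few scattered variables.
writes = [0x0010, 0x0012, 0x4000, 0x9F00]

for size in (32768, 8192, 512):
    print(size, bytes_to_write_back(writes, size))
# prints:
# 32768 65536
# 8192 24576
# 512 1536
```

The same four writes cost 64K of disk traffic with 32K pages but only 1.5K with 512-byte pages, at the price of a larger page table.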

Lock and load

With a conventional disk controller, DMA (direct memory access) transfers could only take place to or from a contiguous memory area, defined by a starting physical address and a word count. Non-contiguous pages therefore needed one disk command per page.

However, by incorporating a form of MMU into the disk controller, loaded with the same relocation constants as the main MMU, the controller could perform a single DMA transfer to a series of non-contiguous pages. The DEC VAX was able to do such scatter/gather transfers.
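A toy model shows why a controller-side MMU removes the one-command-per-page restriction. This Python sketch assumes word addressing, a tiny 8-word page and a hypothetical copy of the page table inside the controller; `dma_transfer` is an illustrative name, not a real driver interface.

```python
def dma_transfer(memory, data, virtual_start, page_table, page_size):
    """One DMA command (start address plus word count), with each word's address
    translated through the controller's own copy of the relocation constants."""
    for i, word in enumerate(data):
        page, offset = divmod(virtual_start + i, page_size)
        memory[page_table[page] + offset] = word

memory = [0] * 64              # hypothetical 64-word physical memory
page_table = [40, 8, 24]       # physical bases of virtual pages 0, 1 and 2
dma_transfer(memory, list(range(1, 21)), 0, page_table, page_size=8)
```

A single command scatters 20 words across three separate physical pages; without the controller-side mapping it would have taken three commands.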

Memory management old-school style: DEC VAX-11/780 Architecture Handbook 1977-8 extract

Source: Bitsavers

CPUs were equipped with local general purpose registers that could be written and read faster than main memory, speeding up iterative processes. At the end of a time slice, the contents of these registers would have to be copied to RAM so they could be stored on the rotating memory and restored when the user’s next time slice came around.

But which registers could the operating system use whilst it was doing that? In practice the processor had two, sometimes three, sets of general-purpose registers, of which only one set could be multiplexed to the ALU (arithmetic logic unit) at any one time. One of these sets was the kernel set, which was for the exclusive use of the operating system.

At the end of a user time slice it was only necessary to set the multiplexer to kernel mode and the register contents were still there from before. Thus the operating system could dip in and out of using the CPU with minimal overhead. Another advantage of multiple register sets is that in principle, nothing the user or process does can affect the operating system.
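The register-set multiplexer can be pictured as a mode bit selecting which bank the ALU sees. This Python sketch is a hypothetical model (the `CPU` class, `trap` and `return_to_user` are illustrative names) assuming eight registers per bank and just two modes.

```python
class CPU:
    """Toy CPU with separate kernel and user register sets (hypothetical model)."""

    def __init__(self, n_regs=8):
        self.banks = {"user": [0] * n_regs, "kernel": [0] * n_regs}
        self.mode = "user"

    @property
    def regs(self):
        # The multiplexer: only the bank selected by the mode reaches the ALU.
        return self.banks[self.mode]

    def trap(self):
        # Entering the kernel flips the multiplexer; nothing is copied or saved.
        self.mode = "kernel"

    def return_to_user(self):
        self.mode = "user"

cpu = CPU()
cpu.regs[0] = 42        # user computation in progress
cpu.trap()
cpu.regs[0] = 7         # the operating system uses its own R0
cpu.return_to_user()
```

After the round trip the user's R0 still holds 42, even though the kernel used a register of the same name in between.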

VAX-11/780: gone are the days when computer architects could sleep easy in their beds

Whilst the performance of these machines in terms of processing speed and memory size seems modest by modern standards, it would be extremely unwise to mock them, because more than three decades ago they incorporated all of the essential features necessary to build a hack-proof computer. Instead it is we who deserve the mockery for building, programming or tolerating machines that, however powerful, are obviously deficient in the security department and not getting any better.

The combination of kernel and user register sets in the CPU with hardware memory management and a small amount of hard-wired logic that no software of any kind could circumvent meant that, with a competent operating system, these machines were essentially bombproof.

Processes had access only to an area of RAM that was under the control of the MMU, which in turn was controlled by the operating system. Processes had access only to certain parts of the CPU in user mode and since the entire virtual address range of the processor was mapped to memory, no direct access to any peripheral device was possible.

Any violation of MMU rules, such as an attempt to write to a read-only page, would result in a trap. A trap is a hard-wired process that forces the CPU into kernel mode and vectors the program counter to a constant location in physical memory. The operating system residing there wakes up in an error-handling routine which inspects the MMU registers, and these immediately tell it that it is under threat. The offending process will be aborted.
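The trap sequence can be sketched in Python, with an exception playing the part of the hard-wired vector into the operating system. Everything here is a hypothetical model: `MMUFault` stands for the contents of the MMU's status registers and `Kernel.handle_trap` for the routine at the fixed trap address.

```python
class MMUFault(Exception):
    """What the MMU status registers would record about the violation."""

    def __init__(self, pid, address, reason):
        super().__init__(f"pid {pid}: {reason} at {address:#06x}")
        self.pid, self.address, self.reason = pid, address, reason

class Kernel:
    """Hypothetical OS model: every trap lands in handle_trap."""

    def __init__(self):
        self.processes = {}
        self.log = []

    def run(self, step):
        try:
            step()                   # user-mode code runs here
        except MMUFault as fault:    # the hardware forces entry to the kernel
            self.handle_trap(fault)

    def handle_trap(self, fault):
        # Inspect the fault record, note the threat and abort the offender.
        self.log.append(str(fault))
        self.processes.pop(fault.pid, None)

kernel = Kernel()
kernel.processes = {1: "editor", 2: "rogue"}

def rogue_step():
    # Simulates the MMU catching process 2 writing to a read-only page.
    raise MMUFault(2, 0x1000, "write to read-only page")

kernel.run(rogue_step)
```

Only the offending process disappears; the other process and the kernel itself carry on untouched.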

Security? We've heard about it – DEC VAX-11 Architecture Reference Manual 1982 extract

Source: Bitsavers

Since malware relies on having access to the whole computer in order to do harm when the code is executed, malware on such a machine will be defeated, because even if it manages to get into the machine as a bona fide piece of code, as soon as it runs, it will find it has no direct access to anything except an area of RAM. It can’t mess with the operating system because it will run in user mode. It can’t mess with the mass storage because only kernel processes can reach the physical addresses of peripherals.

Today we are awash with malware, viruses and the rest. Their very existence, their success from their perverted standpoint, gives away the fact that security in most of today’s computers is sadly lacking, even if it doesn’t tell us the reason. We have a worldwide anti-virus industry that for a small fee will close your stable door after the horse has bolted.

Essentially, we have dispensed with the door locks, fired the night watchman and security consists of circulating photographs of known burglars so we can recognise them after they have entered our property. Not good enough.

Skunk deeds

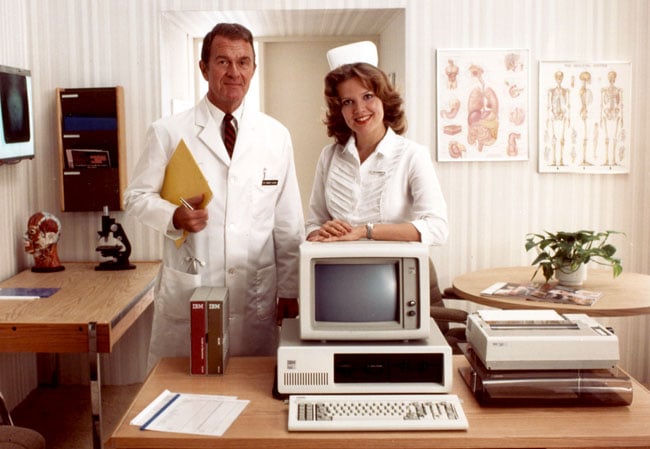

I may have got it wrong, but I think I can see what happened and how this all came to pass. No one expected IBM to respond to the emerging microcomputer market. In fact no one, IBM included, thought it could. IBM was a massive monolith with the time constants of a drowsy brontosaurus. To its immense credit, IBM realised this and was able to re-invent itself and "disrupt" itself before someone else did. Had this not happened, IBM would not have survived.

An entirely different breed of health professional emerged to keep the Personal Computer protected from viruses

Essentially, IBM created a kind of skunkworks in which what would become the PC was put together at breakneck speed. One result was that the architecture of the microcomputer did not descend from mainframes, as that of the minicomputer had, but grew out of microprocessors.

The microprocessors came from chip manufacturers, not computer companies, and many of the hardware and software techniques that had evolved in mainframes and minicomputers for reliability and security were either simply not understood, omitted for economy or not subject to scrutiny. We all know where the operating system came from.

Possibly, also on account of that breakneck speed, some things were not anticipated. The PC was assumed to be a stand-alone device, which, being microprocessor based, would only be able to run one application in a moderate amount of memory that could be directly addressed.

The pace of change should have given us a computing experience along the lines of Concorde...

Source: Creative Commons British Airways Concorde G-BOAC 03 by Eduard Marmet

A computer on a network is no longer standing alone and is prone to attack from an external source: the internet was not anticipated. Before the PC, everyone involved in computing had and needed specialist knowledge and was enthusiastic about the progress of the discipline.

Practically no one would have contemplated doing deliberate harm. Once the computer became a networked consumer product, it would be exposed to the whole gamut of human behaviour from altruistic to malicious. The change in the nature of the user was not anticipated.

So instead of a de-rated Concorde, what we got was an upgraded Wright Flyer.

There are so many processor types available that I couldn’t claim to be familiar with the insides of all of them. Readers may be able to shed some light. But it is axiomatic from the amount of memory that today’s machines have that there must be memory management of some kind.

I cannot see how an OS could handle multiple processes without having a kernel mode. It follows that there must be at least some hardware support for security measures outlined above. Perhaps it’s all there?

...but instead we're taken for a ride on the Wright Flyer

I think a large clue has to be that some operating systems, naming no names, seem to be more vulnerable to malware than others. If the restriction were in the hardware, that wouldn't be possible, so the sad conclusion has to be that the operating systems simply aren't competent.

The only saving grace, I suppose, would be if it turned out that, because of the complexity, it is not possible to perform a meaningful test of whether a system is or is not hack proof. If that is true, then perhaps we have to abandon the Von Neumann architecture for secure applications and instead have computers in which the OS runs in a completely separate processor.

In one sense I don’t care what the reason is. Whatever the reason, what we currently have is lamentable – scandalous even. If there is ever going to be an Internet of Everything, this isn’t how to go about it. No one in life-supporting disciplines such as aviation will touch PCs with several barge poles and for good reason. If we are to put our house in order and move away from the present squalor, that’s one place we could look.

Will computers of the future require a V62 from Swansea?

I think we also need to take on board that creating and sending viruses is every bit as antisocial and criminal as going around assaulting people and smashing up their property. It's a sorry state of affairs made possible by the present anonymity of computing.

We have licence plates on cars so that bad drivers can be identified. Perhaps one day it will become the law that no computer message can be sent whose sender cannot be identified. Perhaps not, but spare me the howls of protest and come up with a better idea. ®

John Watkinson is a member of the British Computer Society and a Chartered Information Systems Practitioner.