Original URL: https://www.theregister.com/2014/05/29/storage_silo_shatters/

Loved-up shared storage silos in SHOCK SPLIT!

Good news or bad news? Depends if you're a channel bod...

Posted in Channel, 29th May 2014 08:31 GMT

Blocks and Files A previously uniform shared storage architecture is splintering apart as server virtualisation, dedupe, flash and the cloud shatter the old order – giving us five steps to heaven, or hell, depending on your viewpoint.

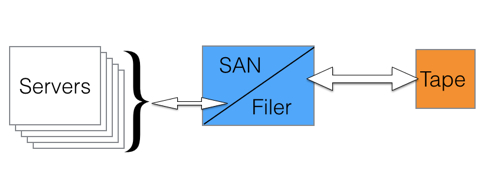

The old shared storage order was a bunch of servers linked across a network to a storage area network (SAN) or network-attached storage (NAS or filer) with old data backed up and archived to tape.

Networked storage - the starting state

Networking was Fibre Channel for blocks and TCP/IP over an Ethernet LAN or a WAN for files. Simple and straightforward. And then a far-reaching change came along.

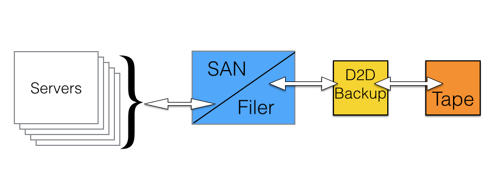

Storage step 2 - deduping backup to disk arrives.

The provision of deduplication made backup to disk practical, with a much lower effective cost/GB than before. This, paired with the attraction of fast disk access compared to slow tape access, has caused a significant decline in the use of tape as a backup medium. There has been turmoil in the tape automation supply market with format consolidation to LTO and DAT, a smaller number of suppliers and dreadfully difficult trading conditions leading to many loss-making quarters, and years, for suppliers like Overland Storage, Quantum and Tandberg.

The flip side of this has been a series of boom years for EMC with its Data Domain deduping backup-to-disk business.
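To see why dedupe cuts the effective cost/GB of backup to disk, here is a minimal sketch, not any vendor's implementation. It assumes fixed-size chunks identified by SHA-256 hash and repeated full backups of unchanged data. The names `dedupe`, `effective_cost_per_gb` and `CHUNK_SIZE` are hypothetical, and real products such as Data Domain use more sophisticated techniques.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size, purely illustrative


def dedupe(backups, chunk_size=CHUNK_SIZE):
    """Store each unique chunk once; return (store, logical_bytes, physical_bytes)."""
    store = {}    # chunk hash -> chunk bytes
    logical = 0   # bytes the backup jobs asked us to keep
    for data in backups:
        for i in range(0, len(data), chunk_size):
            chunk = data[i:i + chunk_size]
            logical += len(chunk)
            # Only the first copy of a given chunk consumes disk space.
            store.setdefault(hashlib.sha256(chunk).hexdigest(), chunk)
    physical = sum(len(c) for c in store.values())
    return store, logical, physical


def effective_cost_per_gb(raw_cost_per_gb, logical, physical):
    """Cost per logical GB protected, given the raw cost per physical GB."""
    return raw_cost_per_gb * physical / logical


# 100 distinct 4 KB chunks stand in for one full backup image.
full_backup = b"".join(i.to_bytes(4, "big") * 1024 for i in range(100))
# Five nightly full backups of unchanged data (a best-case assumption).
nightly_backups = [full_backup] * 5

if __name__ == "__main__":
    _, logical, physical = dedupe(nightly_backups)
    print("dedupe ratio:", logical / physical)
    print("effective cost/GB:", effective_cost_per_gb(1.0, logical, physical))
```

In this best case the five backups occupy the space of one, so the cost per protected GB falls to a fifth of the raw disk cost. Real-world ratios depend on how much the data changes between backups.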

While this was happening, server virtualisation was also on the rise, with EMC once again buying a company and driving the usage of its technology to a dominant position.

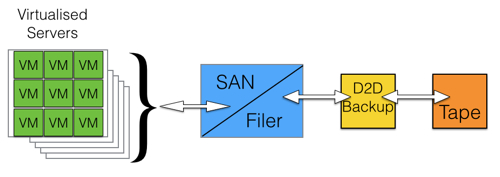

Step 3 - server virtualisation.

The effect of virtualised servers, with each virtual server needing its own access pipe to networked storage, was to point up the scalability limitations of networked storage.

Then iSCSI SANs came along, providing block data access over Ethernet, and they were positioned as cheap SANs.

SAN and NAS became unified and a separate scale-out approach was developed – in contrast to the traditional scale-up and then fork-lift upgrade when the scale-up limits were reached.

Scale-out meant a networked storage resource could support more servers/virtual servers, but their data access was slowed by network latency and disk data access latency. The answer to that problem was flash.

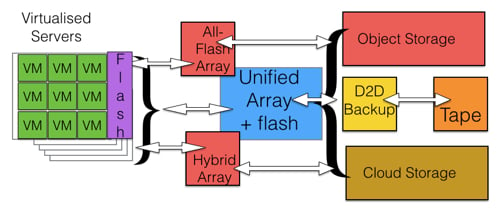

Step 4

Flash appeared inside arrays, with SSDs replacing some hard disk drives and also being used as a cache inside the array, front-ending the disks.

Startup vendors thought freshly designed array software could make better use of flash, extending its endurance by reducing the number of writes for example, and several kinds of flash array arrived:

- all-flash arrays from the likes of Texas Memory Systems and Violin Memory, followed by newer startups such as Pure Storage, Kaminario, Whiptail and Solidfire;

- hybrid flash/disk drive arrays combining flash speed with disk drive capacity from suppliers such as Nimble Storage, Tegile and Tintri; and

- all-flash versions of disk drive arrays, such as EMC's VNX, HDS's VSP, HP's 3PAR and Dell's Compellent.

Flash storage also became de rigueur in servers with direct-attach SSDs and PCIe flash cards, popularised enormously by Fusion-io.

At the same time, both object storage and cloud storage stepped up onto the storage stage. Cloud storage was cheap and became even cheaper, with Amazon and others suggesting its use for test and dev, and then archive and then nearline storage.

EMC's Centera had been the leading object storage product, known as a CAS (Content-Addressable Store), but it took the energy of startups like Amplidata, Caringo, Cleversafe and Scality to bring object storage technology to greater prominence.

For those firms, object storage technology supposedly solved a file system management problem as the number of files in a system climbed to the billion level and beyond. There isn't a single standard object access method and so Amazon's S3 became one default because of its market strength, and NAS protocol access was also provided – with relatively few applications having a pure object access protocol in use.

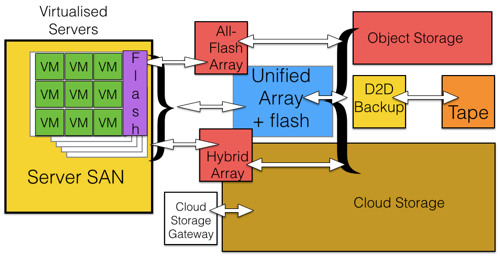

So now we see an abundance of overlapping storage silos, and the situation is about to get worse, or better possibly.

Storage Step 5 - Server SAN

Building on iSCSI block storage, such as that from the HP-acquired LeftHand Networks, which is available as a software-only SAN running in servers and aggregating their storage into a shared SAN resource, the idea of the ServerSAN has taken the storage world by storm.

The whole scene has been validated by – who else – EMC, with subsidiary VMware's VSAN product and its own ScaleIO technology.

Its proponents say the whole great confusing morass of networked arrays can be swept away. Their primary data access functions get taken over by the server SAN and their secondary or nearline data storage role can go to the cloud, which can also take over data protection (backup) and archive. That's the elusive storage simplification which some foresee.

Others pour derision on this idea and say there are many different storage use cases, each requiring dedicated technology. A unifying management and provisioning layer can be used to cover the differences and enable a software-defined storage entity to use underlying differentiated hardware resources – think EMC's ViPR.

This is a much simplified recent history of storage. For proponents of server SAN and cloud storage these two technologies promise Armageddon for networked storage array suppliers, both the mainstreamers - Dell, EMC, HDS, HP, IBM and NetApp - and the newbies, both all-flash and hybrid.

The newbies say the mainstreamers' legacy products will become extinct because they, the newbies, now provide best-of-breed shared storage, while looking for weaknesses in the ServerSAN idea. They'll inevitably focus on inter-server communications and server CPU cycle usage, amongst other things, like data management software maturity.

Suppliers like Nasuni say the cloud can be used for primary storage with local caching appliances. Hyper-converged server suppliers like Nutanix and Simplivity are offering server SANs too. We expect both types of server SAN implementations to sprout cloud storage gateway functionality.

We have many storage technologies now competing for the primary data storage role: server SAN, all-flash arrays, hybrid arrays, all-flash legacy arrays and caching cloud storage gateways. There has to be a shakeout as individual use cases coalesce into more generalised use cases.

We also have cloud and object storage and tape storage competing for the longer term backup and archive role, with some cloud services using object storage and also tape storage. Here Facebook's Open Vault technology – with spun-down, shingled disk media – may have a role to play.

If Armageddon is coming to networked, shared storage array suppliers then it will come at slow speed, encroaching into data centres like glacier melt flowing slowly downhill. If vendors can see it coming they can take steps to step aside and avoid putting their business into the ultimate cold storage.

If not, we will have a jolting series of business events over the next few years. We are certainly living in interesting storage times. ®