Original URL: https://www.theregister.com/2014/02/18/intel_releases_mission_critical_two_four_and_eight_socket_xeon_e7_v2_line/

Better late than never: Monster 15-core Xeon chips let loose by Intel

New mission-critical CPUs are mission-critical to Chipzilla's critical money-making mission

Posted in Systems, 18th February 2014 18:00 GMT

Intel's long-reigning top dog in the x86 server market, the Xeon E7 "Westmere-EX" of April 2011, can finally enjoy a well-deserved retirement: its "Ivy Bridge–EX" replacement, prosaically named the Xeon E7 v2 series, has finally arrived.

Count the compute cores on this Xeon E7 v2 die – notice anything, well, odd?

When the Westmere-EX Xeon E7 chips were released oh so long ago, it seemed reasonable to assume that their follow-on would be a "Sandy Bridge–EX" Xeon series, seeing as how the Sandy Bridge microarchitecture was the next "tock" in Intel's "tick-tock" cadence of following a new process technology tick with a new microarchitecture tock.

Didn't happen. In September 2012, Intel told The Reg that it would skip the 32nm iteration of its Xeon E7 v2 processors based on the "Sandy Bridge" microarchitecture. Instead, Chipzilla would use that same basic microarchitecture, but bake it using the 22nm, Tri-Gate Ivy-Bridge process technology.

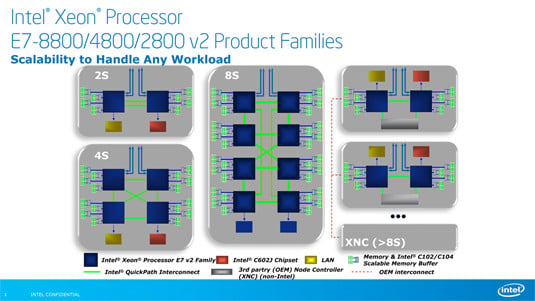

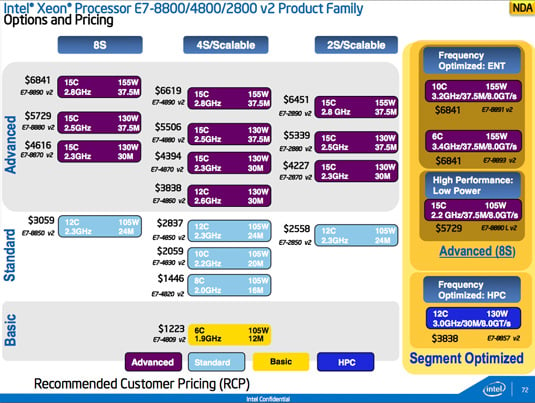

Intel had said that these "Ivy Bridge–EX" Xeons would appear in the fourth quarter of last year. They didn't – but now they're finally here, and available in three series: the two-socket E7-2800, four-socket E7-4800, and eight-socket (you guessed it) E7-8800.

From what Intel performance marketing spokesman Frank Jensen told us, the new versions of the Xeon E7 v2 series should be worth the wait, with an average 2x performance improvement over their predecessors. Note that what Jensen said is not "up to 2x" but an "average of 2x", citing the performance of the new top-of-the-line four-socket Xeon E7-4890 v2 in a series of benchmarks against the former top-dog Xeon E7-4870.

Save your pennies

Before we dip into some of the details of this new chippery, do know that these babies are far from impulse buys. When we were briefed on their impending availability last month, the highest-priced 15-core Xeon E7 v2s were projected to run a cool $6,841. Prices may have changed by Tuesday morning's rollout, but don't bet on it.

Intel's top-drawer E7 v2 Xeon costs as much as 20 3.5GHz quad-core Core i7-4770Ks

Sharp-eyed Reg readers may have thought they caught a typo in that last paragraph. But no, the E7 v2s with the highest core counts do have 15 cores – an odd number of cores, indeed (pun intended) – arranged in three ranks of five cores each with base (pre-Turbo) clock rates of 2.2GHz to 2.8GHz.

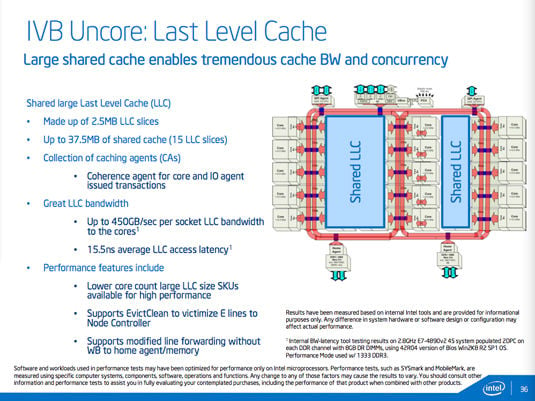

Each of the cores is co-located with a slice of up to 2.5MB of last level cache (LLC), so with a 15-core Xeon E7 v2, simple arithmetic gets you up to a hefty maximum of 37.5MB of total LLC.

However, although those cache slices may look like they're associated with their neighboring cores, they're not that limited – they form a unified, shared LLC that uses three ring busses, each synchronous with the cores' clock, with which to communicate among the cores.

Overall, the LLC system maxes out at around 450GB/sec of LLC bandwidth per socket. Not too shabby.

Intel claims that the average latency in such communication is about 15.5 nanoseconds, and Intel principal engineer Irma Esmer refined that number a bit for us, saying that latencies range from about 11ns to 20ns in the 2.8GHz part.
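For a rough feel of those cache numbers, here is a minimal sketch that totals the LLC slices and converts the quoted latencies into core clock cycles. It assumes the 15.5ns average applies to the same 2.8GHz part as Esmer's 11ns to 20ns range, which the briefing did not spell out; the function name is ours.

```python
# Back-of-envelope figures for the top-bin 15-core part described above.
CORES = 15
LLC_SLICE_MB = 2.5   # maximum per-core LLC slice, from the article
CLOCK_GHZ = 2.8      # base clock of the part Esmer cited

total_llc_mb = CORES * LLC_SLICE_MB


def ns_to_cycles(latency_ns: float, clock_ghz: float = CLOCK_GHZ) -> float:
    """Convert a latency in nanoseconds to core clock cycles.

    One GHz is one cycle per nanosecond, so the conversion is a product.
    """
    return latency_ns * clock_ghz


if __name__ == "__main__":
    print(f"Total LLC: {total_llc_mb} MB")
    # Assumption: all three latencies are measured at the 2.8GHz base clock.
    for label, ns in [("best", 11.0), ("average", 15.5), ("worst", 20.0)]:
        print(f"{label:>7}: {ns} ns is roughly {ns_to_cycles(ns):.1f} cycles")
```

At base clock, that puts the quoted range at roughly 31 to 56 core cycles per LLC access.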

In addition to the 15-core, 37.5MB LLC part maxing out at a base core clock of 2.8GHz with a TDP of 155 watts, Intel is also offering a plethora of other parts, including a 15-core part running at 2.2GHz that consumes only 105W, and an HPC-targeted part that has but 12 cores, but which runs at a base clock of 3.0GHz while eating up 130W.

Socket two me. Or four. Or eight. Or more.

In addition to stuffing the new Xeon E7 v2 into eight-socket, four-socket and two-socket servers, OEMs can take the E7 v2 series beyond eight sockets by adding their own node controllers – Intel's QPI interconnects can take OEMs to eight sockets gluelessly, but beyond that, OEMs are on their own.

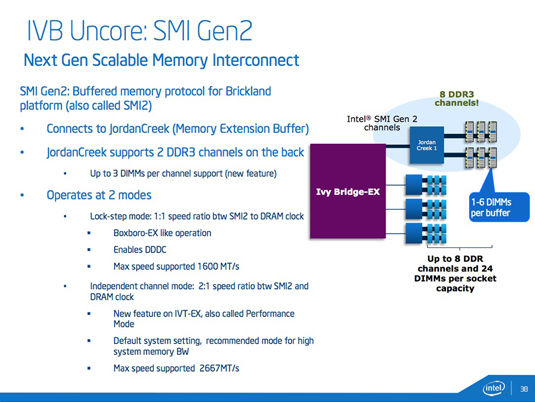

The Xeon E7 v2 series also offers a dual-mode memory scheme enabled by the new JordanCreek memory extension buffer chips. These nifties can support two next-generation scalable memory interconnect channels (SMI Gen2) that handle three DIMMs apiece – up from two in the previous generation – thus supporting up to six DIMMs per JordanCreek.

Four JordanCreeks can be tasked to each socket, so we're talking eight DDR3 channels and a hefty 24 DIMMs per socket. To handle all that memory, those channels are a broad eight bytes wide, Esmer said, resulting in up to 2.667 giga-transfers per second (GT/s).

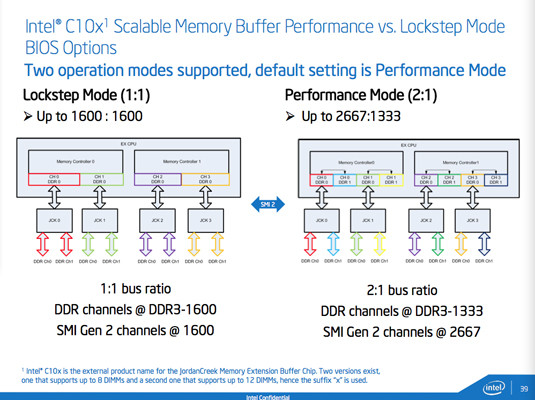

But that's not the nifty part. What's très cool is the dual-mode nature of JordanCreek's SMI Gen2. It can operate in what Intel calls "Lockstep Mode", in which it operates in a 1:1 ratio with the memory bus, which can crank along at up to 1600MHz. In the new "Performance Mode", however – also known as "Independent Channel Mode" or "Two-to-One Mode" – it'll double up 1333MHz DDR3 to the full 2667MHz we mentioned earlier.
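The memory arithmetic can be sketched in a few lines. This is an idealized ceiling, not a measurement: it assumes the eight-byte width Esmer quoted applies to each SMI Gen2 link in both modes, ignores protocol overhead, and uses decimal gigabytes. The constant and function names are ours.

```python
# Idealized DIMM count and per-link peak bandwidth for the two SMI Gen2 modes.
BUFFERS_PER_SOCKET = 4        # JordanCreek chips per socket
DDR3_CHANNELS_PER_BUFFER = 2  # gives the article's eight DDR3 channels
DIMMS_PER_CHANNEL = 3
LINK_WIDTH_BYTES = 8          # per Esmer; assumed identical in both modes


def peak_gb_per_s(mega_transfers_per_s: float,
                  width_bytes: int = LINK_WIDTH_BYTES) -> float:
    """Peak bandwidth in decimal GB/s: transfers per second times bytes each."""
    return mega_transfers_per_s * 1e6 * width_bytes / 1e9


dimms_per_socket = (BUFFERS_PER_SOCKET
                    * DDR3_CHANNELS_PER_BUFFER
                    * DIMMS_PER_CHANNEL)
lockstep_gb_s = peak_gb_per_s(1600)      # 1:1 with 1600MHz DDR3
performance_gb_s = peak_gb_per_s(2667)   # 2:1 with 1333MHz DDR3

if __name__ == "__main__":
    print(f"DIMMs per socket: {dimms_per_socket}")
    print(f"Lockstep Mode:    {lockstep_gb_s:.1f} GB/s per link")
    print(f"Performance Mode: {performance_gb_s:.1f} GB/s per link")
    print(f"Ratio:            {performance_gb_s / lockstep_gb_s:.2f}x")
```

Under those assumptions, Performance Mode offers about two-thirds more raw link bandwidth than Lockstep Mode, even though it uses the slower DIMMs.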

As you might assume, Lockstep Mode is there for when each and every bit, nibble, and byte is precious – think financial transactions – while Performance Mode is there when balls-out performance is the order of the day, and it's the default setting in the BIOS that Intel is providing.

One more advantage of Performance Mode: the 1333MHz DIMMs used in this mode are often cheaper than the 1600MHz DIMMs used in Lockstep Mode – although, as Esmer pointed out, "People who are buying this probably are not shy about populating them" with pricey DIMMs.

So how effective are these SMI Gen2 memory improvements? Esmer – an engineering type not prone to marketing pap – summarized the enhancements thusly: "The bandwidth increase is massive."

When is three more than four?

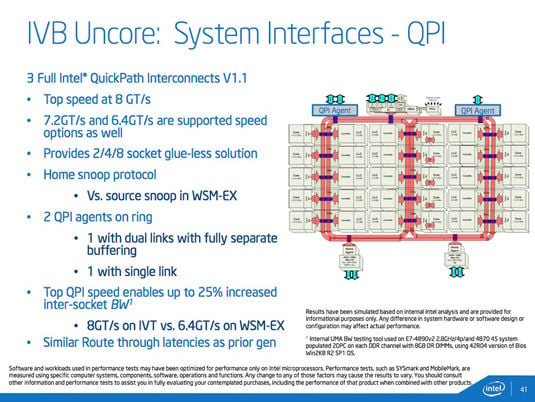

As we mentioned above, the Xeon E7 v2 processors connect to other sockets over QPI – Intel QuickPath Interconnect. Its Westmere predecessor had four such links, but the new Ivy Bridge parts have but three. "But three is sufficient," Esmer said, "because we have integrated IO."

Socket-to-socket transfers over these three QPI links max out at 8GT/s – a 25 per cent bump up from the 6.4GT/s of the Ivy Bridge Xeon's Westmere predecessor – but that speed can be dialed down for compatibility, Esmer said.

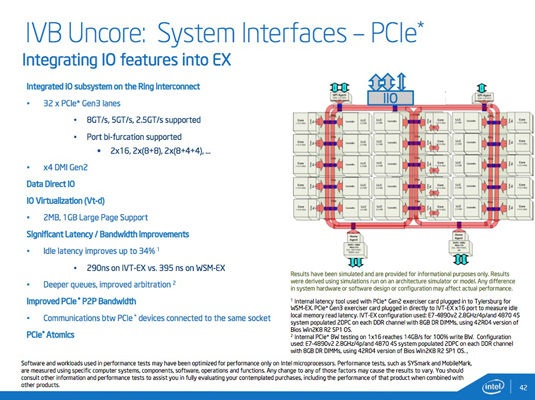

That integrated IO to which Esmer referred comprises 32 PCIe 3.0 (Gen3) lanes and four DMI Gen2 connections per socket. Those 32 PCIe lanes are a step down from the 40 lanes in Intel's E5 v2 line, and she was candid when asked about that drop. "I don't know the reason why we chose 32," she said, noting that some customers, when informed of the drop, had asked for more.

"I don't think that we would have changed if we knew what we know now. There's no technical reason for not having it, basically. We could have – it's just we didn't."

That niggle aside – after all, 32 lanes per socket is plenty for most applications – there's a lot to like about the integrated IO. Latency improvements, for example: in the Westmere part, idle latency was 395 nanoseconds; that's been shrunk to 290ns on the new chips, an improvement of 34 per cent.

Overall, Esmer said, integrating the IO on the Xeon E7 v2's die improves aggregate four-socket system IO by a whopping 3.7 to 3.9 times over a Westmere-based four-socket system with dual IO hubs. The Westmere system in her example had a total of 64 PCIe Gen2 lanes and the Ivy Bridge Xeon E7 v2 had 128 Gen3, so any bandwidth increase, while impressive and welcome, is not surprising – although the increase of nearly 4X is.
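The lane counts in Esmer's example can be turned into a rough raw-capacity comparison. The per-lane signalling rates and line codes below are the standard PCIe 2.0 and 3.0 figures, not numbers from Intel's briefing, and the sketch covers link capacity in one direction only. It says nothing about what either system sustains in practice.

```python
# Raw PCIe link capacity for the two four-socket systems in Esmer's example.
def lane_gb_per_s(gt_per_s: float, payload_bits: int, coded_bits: int) -> float:
    """Usable GB/s per lane, per direction, after line-code overhead."""
    return gt_per_s * payload_bits / coded_bits / 8


# Assumption: standard PCIe rates (Gen2: 5 GT/s, 8b/10b; Gen3: 8 GT/s, 128b/130b).
gen2_lane = lane_gb_per_s(5.0, 8, 10)
gen3_lane = lane_gb_per_s(8.0, 128, 130)

westmere_total = 64 * gen2_lane      # 64 Gen2 lanes via dual IO hubs
ivy_bridge_total = 128 * gen3_lane   # 32 Gen3 lanes on each of four sockets
capacity_ratio = ivy_bridge_total / westmere_total

if __name__ == "__main__":
    print(f"Westmere system:   {westmere_total:.1f} GB/s")
    print(f"Ivy Bridge system: {ivy_bridge_total:.1f} GB/s")
    print(f"Raw capacity ratio: {capacity_ratio:.2f}x")
```

By this count the raw link capacity ratio lands a little under 4x, close to the top of the range Esmer quoted for aggregate system IO.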

With little power comes great responsibility

Also unsurprising is that the Xeon E7 v2 is more power-efficient than its predecessor – after all, the Westmere Xeon E7 was baked in a 32nm planar process, while the new Ivy Bridge Xeon E7 v2 is based on Intel's 22nm Tri-Gate process.

There are other power assists in the new chip as well, including running average power limiting (RAPL) at the socket and DRAM levels, a power-management feature introduced by Intel in the Sandy Bridge generation – which, remember, is the Xeon E7 generation that Intel skipped over.

Esmer also said that the Xeon E7 v2 has significantly lower idle power – as low as around two-thirds the idle power of a comparable four-socket Westmere-based system. In the data centers into which these babies will be installed, they'll rarely be left idling, but even when Esmer's exemplary Westmere and Ivy Bridge systems are cranked up to consume equal amounts of power, the Xeon E7 v2 will provide 40 per cent more system throughput than its predecessor. Or so we were told.

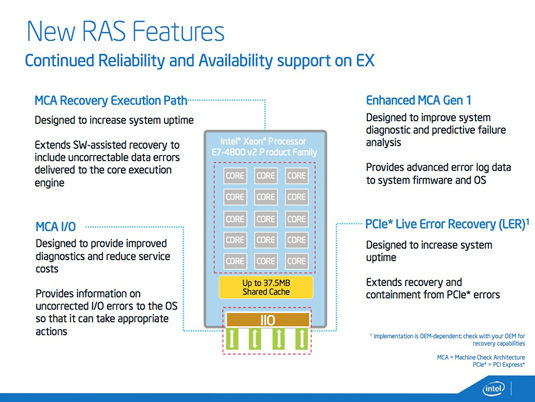

Seeing as how the Xeon E7 v2's target markets include "mission critical" data-center servers and HPC installations, it stands to reason that RAS (reliability, availability, and serviceability) features would be on the minds of the chips' designers – and it appears that they were.

In addition to the RAS features already in the current Xeon E7 line (PDF), Intel has upgraded the new chips' machine check architecture (MCA) and PCIe live error recovery (LER) capabilities – although the latter relies on OEM implementation.

RAS has always been something that the RISC chips found in data centers and HPC implementations did better than Intel's x86 chippery: those expensive, powerful chips have traditionally been better equipped for mission-critical applications.

Intel, however, has been inching up on those RISC chips in terms of RAS capabilities, one generation at a time. In fact, this time out even Intel's marketing folks are getting into the act, contributing what they do best: a new moniker. Meet the Xeon E7 v2 line's "Run Sure Technology".

RAS is not the only area in which Intel's x86 server chips are increasingly successful when competing against their RISC rivals, said Intel's global enterprise segment marketing manager for the datacenter group Sajid Khan at the Xeon E7 v2 prebriefing. The new hotness: Chipzilla is raking in more mazuma.

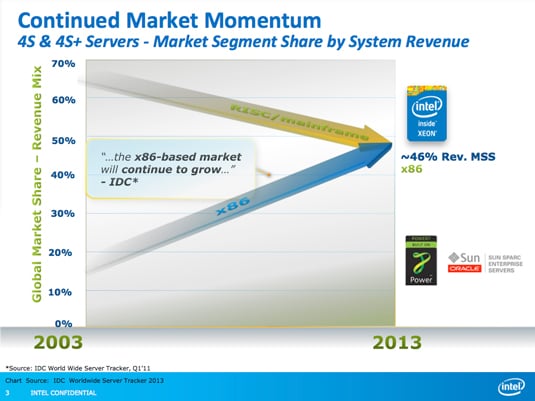

In the past decade, Khan said, x86 chips have risen from under 20 per cent of the four-socket market revenue to virtual parity with the RISC/mainframe systems.

These are just revenue figures – x86's market-share lead is around 4X, Intel says

What originally fueled that rise, Khan said, was price: x86 chips were significantly less expensive than their RISC counterparts – and they still are, in most cases. The performance of x86 chips, however, lagged behind classic server RISC and mainframe processors until recently, he said.

When parts based on Intel's Nehalem architecture appeared in this market in 2011 in the form of the 32nm Westmere chips, Khan said, the x86 price advantage was joined by another advantage. "Across multiple performance benchmarks, we started taking leadership positions," he told us. "So it wasn't just about pricing, it wasn't just about price/performance – which at the outset was our strong point – it was about raw performance, as well, across multiple benchmarks."

In 2013, he said, these advantages raised revenues of x86-based four-socket-and-above systems to around 46 per cent of total revenues in the mission-critical space. Due to x86 systems being cheaper than their RISC-based and mainframe competition, however, that 46 per cent revenue share translated to a market share of around 80 per cent, up from Khan's 2003 baseline, when x86 essentially split the market volume 50/50 with RISC-based and mainframe systems.
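Those two shares imply a price gap, and a short sketch makes it explicit. It assumes the 46 per cent revenue share and 80 per cent unit share describe the same set of systems, and it treats everything that is not x86 as a single RISC/mainframe bucket; the function name is ours.

```python
# Implied revenue-per-system gap from the 2013 revenue and unit shares.
def revenue_per_unit_ratio(x86_revenue_share: float,
                           x86_unit_share: float) -> float:
    """How many times more revenue a non-x86 system brings in than an x86 one.

    Revenue per unit is proportional to revenue share divided by unit share,
    so the ratio of the two groups needs no absolute dollar figures.
    """
    other_revenue_share = 1.0 - x86_revenue_share
    other_unit_share = 1.0 - x86_unit_share
    other_per_unit = other_revenue_share / other_unit_share
    x86_per_unit = x86_revenue_share / x86_unit_share
    return other_per_unit / x86_per_unit


ratio_2013 = revenue_per_unit_ratio(0.46, 0.80)

if __name__ == "__main__":
    print(f"RISC/mainframe revenue per system: {ratio_2013:.1f}x that of x86")
```

On those shares, the average RISC or mainframe system sold in 2013 brought in somewhere between four and five times the revenue of the average x86 system.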

With a current mission-critical market-share split of 80/20, you might think that the transition is pretty much complete. Khan would agree with you, but only to a point. "We believe a good chunk of that has happened – it's continuing, though," he said. "There's that continued momentum to move mission-critical environments away from RISC and Unix and onto Xeon and primarily Linux-based platforms."

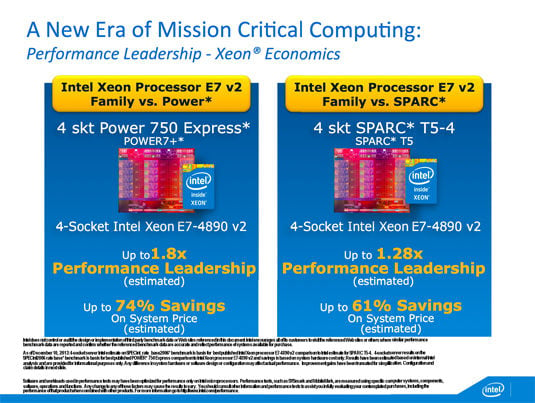

Khan was more than happy to offer a couple of examples as to why x86 chips were trouncing their rivals, comparing SPEC CPU2006 benchmark results – SPECint_rate_base2006 numbers, specifically – from tests of the IBM Power 750 Express, Oracle Sparc T5-4, and Intel Xeon E7-4890 v2 in four-socket systems.

According to Khan, not only did Intel's new mission-critical processors whip the competition, they did so despite costing less on a full-system basis:

Intel's marketing says butt is being truly kicked

Of course, affordable high-performance computing is all well and good, but in mission-critical applications, the ability to remain up and running without failure is also a, well, mission-critical consideration.

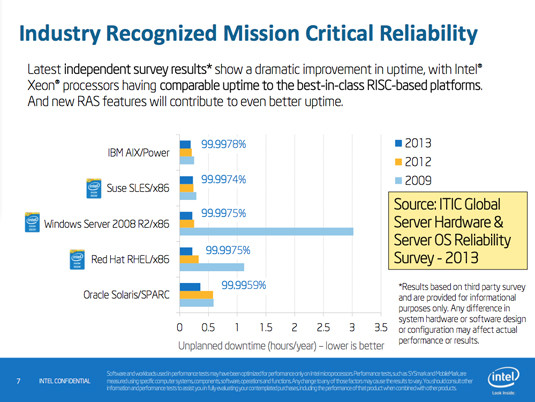

Khan was ready for those questions as well, with data from three surveys – not scientific testing, mind you, just surveys – conducted by Information Technology Intelligence Consulting (ITIC).

When comparing the results of the ITIC's 2013 survey with those conducted in 2009 and 2012, a clear pattern emerged: x86 systems are now in the major-league uptime ballpark.

In fact, according to those admins and C-level execs who participated in the survey, even Windows Server – which stunk up the place relative to AIX and Solaris in 2009 – now has a better uptime percentage than does Solaris:

The variances are, of course, minuscule, being in the range of thousandths or even ten-thousandths of a percentage point, but the implication is clear. "The data speaks for itself," Khan said, noting that not only have x86 systems running Linux-powered Suse SLES and Red Hat RHEL, along with Microsoft Windows Server, passed Oracle Solaris, but Suse SLES – according to those surveyed, at least – has an annual uptime only 0.0004 per cent less than that of the champ, IBM AIX.
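To put that 0.0004 per cent gap in more familiar terms, here is a minimal conversion from an annual uptime difference to minutes of downtime. It assumes a 365-day year and takes the survey figure at face value.

```python
# Convert a gap in annual uptime percentage into minutes of downtime per year.
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year


def downtime_minutes(uptime_gap_percent: float) -> float:
    """Extra downtime per year implied by a gap in uptime percentage points."""
    return MINUTES_PER_YEAR * uptime_gap_percent / 100


sles_vs_aix_minutes = downtime_minutes(0.0004)

if __name__ == "__main__":
    print(f"Gap: about {sles_vs_aix_minutes:.1f} minutes per year")
```

That works out to roughly two minutes of extra downtime per year.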

Of course, as Khan freely admitted, this ITIC study is hardly scientific, based as it is on a survey rather than on cold, hard, objective data. That said, if his earlier contention holds – that a Xeon E7 v2–based system can outperform a four-socket Sparc T5-4 by 1.28x at a savings of "up to" 61 per cent – Oracle-based systems don't look all that compelling.
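Taken together, the 1.28x and 61 per cent figures imply a performance-per-dollar ratio, sketched below. It uses the best-case "up to" saving, so the result is an upper bound on Intel's own claim rather than a typical figure; the function name is ours.

```python
# Performance per dollar implied by Intel's Sparc T5-4 comparison.
def perf_per_dollar_ratio(relative_performance: float,
                          cost_saving: float) -> float:
    """Relative performance divided by relative cost.

    cost_saving is the fraction saved, so 0.61 means the system costs 39 per
    cent of its rival.
    """
    relative_cost = 1.0 - cost_saving
    return relative_performance / relative_cost


# Assumption: the full "up to" 61 per cent saving applies.
xeon_vs_sparc = perf_per_dollar_ratio(1.28, 0.61)

if __name__ == "__main__":
    print(f"Claimed performance per dollar: {xeon_vs_sparc:.1f}x")
```

If both of Intel's numbers hold at once, the Xeon system would deliver a bit more than three times the SPECint_rate performance per dollar.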

Khan also trotted out three case studies of companies that have standardized on Intel for mission-critical applications: one private-cloud migration conducted by Veyance, a second being a services switcheroo featuring Moody's, and a third investigating business-intelligence TCO involving Essar. Understandably, seeing as how these studies became part of Khan's presentation, the companies had glowing things to say about their experiences – but they do provide some interesting reading, in any case.

So do many other features of the Xeon E7 v2 series that was announced this Tuesday at a press event at the Exploratorium museum of science and art in San Francisco. Their latency-reducing speculative snooping, for example, or some of the new RAS features in the fluffily named Run Sure Technology. But for this article, at least, enough is enough – we're well into TL;DR territory.

Rather than nattering on, we'll leave you with one quick comment that an Intel staffer made at the Xeon E7 v2 prebriefing – which just happened to be on the same day that his company announced its Q4 2013 financial results – that shed some light on the importance of the mission-critical chip series to Chipzilla's success.

One reporter asked him what high-end Xeons contribute to the earnings as expressed in the financial report. "Oh, a lot," he responded. "We are the bottom line." ®