Original URL: https://www.theregister.com/2013/11/25/review_das_jbod_subsystem_aic_xj30004243s/

Dude, relax – it's Just a Bunch Of Disks: Our man walks you through how JBODs work

Trevor Pott puts AIC's DAS subsystem through its paces

Posted in Storage, 25th November 2013 15:17 GMT

Sysadmin blog

I'm one of those terrible people who "learn best by doing", and I have always had a difficult time wrapping my head around exactly how high availability with "JBOD" external disk chassis was supposed to work. But my initial ignorance can work for both of us as we learn together.

As luck would have it, AIC was interested in having me do a review of its new XJ3000-4243S external disk chassis. The AIC device turned out to be a great unit and the extended tinkering time allowed me to learn quite a bit.

Plug it in, plug it in

To grok how the JBOD systems work, let's cover some storage basics. Those of you who've taken apart a computer in the past decade will probably be familiar with SATA connectors. You have a power cable and a data cable, you hook one of each up to a hard drive and the data cable gets hooked up to the system in one of three ways.

The first method is plugging the hard drive directly into the motherboard. Here the SATA controller is generally built right into the southbridge, the motherboard's BIOS picks up the disk and the operating system (hopefully) can use it without any further intervention.

Next up you have the Host Bus Adapter (HBA): an add-in card that provides some ports to attach your disks but typically does not do hardware RAID. The last method of attaching disks to a system is the RAID card: basically an HBA with hardware RAID processing, its own BIOS to configure and manage the RAID, a RAM cache and even a battery backup unit.

Disk attachment can thus range from a basic feature integrated into virtually every motherboard to RAID cards that are entire computers in their own right: they handle the disks themselves and merely present the system with "virtual" disks, the usable storage volumes left over after RAID.

Unlike the SATA ports on your motherboard, the SAS ports on those HBAs and RAID cards typically are not presented as individual ports. Instead they are grouped into mini-SAS connectors, each carrying four ports in a single physical connector. If you've used RAID cards with SATA drives you've probably seen this directly in the form of a break-out cable that turns an internal mini-SAS port into four SATA cables.

Given the serial nature of SAS, however, four ports do not limit a mini-SAS connector to four drives. Mini-SAS connectors can be attached to SAS expanders, which let dozens of drives hang off a single connector. If you've ever wondered why 12Gb/sec per SAS port matters when most hard drives can supply maybe a tenth of that, this is why.
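To put rough numbers on that, here is a back-of-envelope sketch. It assumes 12Gb/sec SAS lanes using 8b/10b line encoding (8 data bits carried per 10 bits on the wire) and ignores any protocol overhead beyond that; the 150MB/sec per-disk figure is an illustrative guess for a sustained sequential read from a 7,200 RPM drive, not a measurement.

```python
# Back-of-envelope bandwidth for one 4-lane mini-SAS ("wide") port.
# Assumptions: 12 Gb/s lanes, 8b/10b encoding, no other protocol overhead.

LANES_PER_MINI_SAS = 4
LINE_RATE_GBPS = 12.0         # per lane, on the wire
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 payload bits per 10 line bits


def usable_mb_per_s(lanes: int, line_rate_gbps: float) -> float:
    """Usable payload bandwidth in decimal MB/s across `lanes` lanes."""
    bits_per_s = lanes * line_rate_gbps * 1e9 * ENCODING_EFFICIENCY
    return bits_per_s / 8 / 1e6


def drives_to_saturate(port_mb_s: float, drive_mb_s: float) -> float:
    """How many drives streaming at drive_mb_s it takes to fill the port."""
    return port_mb_s / drive_mb_s


per_lane = usable_mb_per_s(1, LINE_RATE_GBPS)
per_port = usable_mb_per_s(LANES_PER_MINI_SAS, LINE_RATE_GBPS)
ASSUMED_HDD_MB_S = 150.0  # illustrative sustained sequential rate per disk

print(f"per lane: {per_lane:.0f} MB/s")
print(f"per mini-SAS port: {per_port:.0f} MB/s")
print(f"HDDs to saturate one port: {drives_to_saturate(per_port, ASSUMED_HDD_MB_S):.0f}")
```

Under those assumptions a single lane carries about 1,200MB/sec and a four-lane connector about 4,800MB/sec, so roughly 32 such disks would be needed to fill one connector. 150MB/sec is about 1.2Gb/sec, which is where the "maybe a tenth" figure comes from.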

Two ports are better than one

In the world of SATA and SAS, almost any HBA or RAID card that is designed for SAS drives can handle SATA drives. It is perfectly possible to use each of these attachment methods without ever using SAS drives or even understanding the advantages SAS brings to the table.

I won't even try to cover the full range of differences here – for that I recommend Scott Lowe's piece on the topic – but the item that matters for JBODs is that, unlike SATA disks, SAS drives have two data ports on each drive.

Storage system manufacturers and I have a history of strong disagreements about the concept of "redundancy". Too many of them call a system "fully redundant" when all it really has is redundant power supplies, RAID to survive the odd dead disk, and two HBAs, each connected to a different port on those SAS disks so that you can survive the loss of an HBA.

SAS expansion is how a server like the Supermicro 6047R-E1R36L can run 36 drives off a pair of four-port mini-SAS connectors. Indeed, it's designed with SAS expanders that support dual HBAs, so if you wanted to roll your own storage server just like the big guys', this is exactly what you'd use.

That all sounds fine and good, but I've experienced motherboard failures, RAM failures and even dead CPUs often enough that I can't put just one of these systems into a client's business and cross my fingers. Even with "four-hour enterprise repair" I'm uncomfortable: without the storage system, nothing works. If those four hours fall at the wrong time of day, that could put some of my clients out of business.

Two at the same time

Here's where the JBOD systems come in. SAS isn't just "SATA drives that can survive the loss of an HBA". SAS disks can be shared by two systems at the same time! JBOD units such as the AIC XJ3000-4243S I tinkered with are exactly how you do it.

Being external devices, JBODs use external mini-SAS connectors instead of internal ones. Get the relevant cables, plug the HBAs from two servers into the JBOD unit and you're on the road to a high-availability setup. For real redundancy you'd put two HBAs in each server and use two JBODs, with data mirrored across them; that way you can lose either server or either JBOD and your storage cluster will keep running.
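One way to sanity-check a cabling plan like this is to model it as a graph and knock out one component at a time. The sketch below is a hypothetical model, not any vendor's tool: the component names are made up, each server's first HBA is assumed to cable to JBOD 1 and its second to JBOD 2, and data is assumed to be mirrored across the two JBODs so that one reachable JBOD is enough.

```python
from itertools import chain

# Hypothetical two-server, two-JBOD topology (names are illustrative).
# HBA 1 of each server cables to jbod1, HBA 2 to jbod2.
LINKS = {
    ("srvA", "hbaA1"), ("srvA", "hbaA2"),
    ("srvB", "hbaB1"), ("srvB", "hbaB2"),
    ("hbaA1", "jbod1"), ("hbaB1", "jbod1"),
    ("hbaA2", "jbod2"), ("hbaB2", "jbod2"),
}
SERVERS = {"srvA", "srvB"}
JBODS = {"jbod1", "jbod2"}  # assumed to hold mirrored copies of the data


def components(links):
    """Every node named in the link list."""
    return set(chain.from_iterable(links))


def reachable(start, links, failed):
    """Depth-first search from start, never passing through failed parts."""
    if start in failed:
        return set()
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for a, b in links:
            for x, y in ((a, b), (b, a)):
                if x == node and y not in failed and y not in seen:
                    seen.add(y)
                    stack.append(y)
    return seen


def storage_available(links, servers, jbods, failed):
    """True if some surviving server can still reach a surviving JBOD."""
    return any(reachable(s, links, failed) & (jbods - failed) for s in servers)


def single_points_of_failure(links, servers, jbods):
    """Components whose loss alone takes the storage offline."""
    return sorted(c for c in components(links)
                  if not storage_available(links, servers, jbods, {c}))


print(single_points_of_failure(LINKS, SERVERS, JBODS))

# For contrast: one server with two HBAs into a single JBOD.
SINGLE = {("srv", "hba1"), ("srv", "hba2"), ("hba1", "jbod"), ("hba2", "jbod")}
print(single_points_of_failure(SINGLE, {"srv"}, {"jbod"}))
```

The dual-server, dual-JBOD layout comes back with no single points of failure, while the single-server box still lists both the server and the JBOD, which is exactly the "fully redundant" complaint above.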

You'll need an operating system that understands this, of course, but that doesn't look to be much of a problem. Failover Cluster Manager makes this push-button simple in Windows Server 2012, and *nix options are certainly available.
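Why does the OS need to "understand" shared disks? If two servers write to the same disk without coordinating, they will corrupt each other's filesystem, so clustered storage arbitrates who owns each disk. Windows failover clustering relies on SCSI-3 persistent reservations for this. The sketch below is a toy model loosely inspired by that idea, not the real SCSI command set: nodes register, one registrant holds the reservation and may write, and a survivor can evict a dead node and take over. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class SharedDisk:
    """Toy model of a dual-ported disk shared by two cluster nodes.

    Loosely inspired by SCSI-3 persistent reservations; this is an
    illustration of the idea, not the actual protocol semantics.
    """
    registrants: Set[str] = field(default_factory=set)
    holder: Optional[str] = None

    def register(self, node: str) -> None:
        self.registrants.add(node)

    def reserve(self, node: str) -> bool:
        """Grant ownership only to a registered node when the disk is free."""
        if node in self.registrants and self.holder in (None, node):
            self.holder = node
            return True
        return False

    def preempt(self, node: str, victim: str) -> bool:
        """A surviving registrant evicts a failed node and takes over."""
        if node not in self.registrants or victim not in self.registrants:
            return False
        self.registrants.discard(victim)
        if self.holder == victim:
            self.holder = node
        return True

    def write(self, node: str) -> bool:
        """Only the current holder may write."""
        return self.holder == node


disk = SharedDisk()
disk.register("srvA")
disk.register("srvB")
print(disk.reserve("srvA"), disk.reserve("srvB"))  # srvA wins, srvB refused
print(disk.write("srvB"))                          # srvB may not write yet
disk.preempt("srvB", "srvA")                       # srvA has died; srvB takes over
print(disk.write("srvB"), disk.write("srvA"))
```

The point of the design is that ownership lives on the shared disk rather than in either server, so the survivor can take over without the dead node's cooperation.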

Among the *nix options, Nexenta seems the most viable. The company maintains its own Solaris fork with a laser focus on ZFS-based storage, including exactly these sorts of JBOD configurations.

The device itself

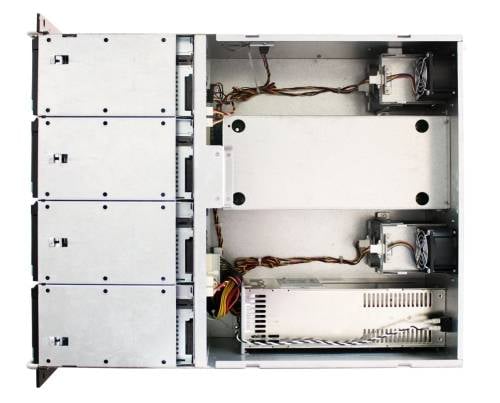

The unit we tested is by no means the top-end offering. We only got to play with 24 disks in a single chassis; AIC can cram 60 disks into 4U with these things.

Even though we only got to play with the mid-range offering, I was sorry to see the XJ3000-4243S leave the lab. It served well during its tenure, and there is something to be said for being able to plug 24 disks into the thing and run disk tests against them all simultaneously. It cut about three weeks off our disk diagnostics and RMA backlog all on its own.

All the bits are there; JBOD setups as described above are a tried and proven solution, mature enough that there are multiple players at every point in the market. The margins aren't outrageous, and the $/GB of redundant, highly available storage can go up against the many object storage solutions floating around. How the hardware holds up would seem to be the determining factor.

My tests of the XJ3000-4243S showed that it was a fantastically built unit. It has hot-swappable power supplies that survived my rigorous "let's see if we can break it" testing. It's got hot-swappable fans that are easy to replace and take punishment. We tested a number of drives ranging from 5400 RPM SATA to 15,000 RPM SAS disks and it handled them all just fine.

It has 1+1 redundant 600W 80 Plus power supplies and a tool-less rail kit, and the SAS expanders' onboard firmware is upgradeable.

The XJ3000-4243S handled temperatures up to 45°C (impressive, as it's only rated to 35°C) and had some of the least frustrating hot-swap trays I've ever used. If you want to chain more JBODs, you can: it has its own external ports, so you can get a lot more disks into your cluster than just those in the pair of JBODs.

Fitness for purpose

If your goal is to slam each and every disk to the absolute red line and eke out every conceivable IOPS from every spindle and NAND chip, this is not the solution for you. As discussed above, a JBOD connects many drives to a far smaller number of SAS ports: the drives sit behind SAS expanders, which reach the server's HBA through a mini-SAS connector. Depending on what you are doing to the disks, you can use up all of the interface bandwidth fairly easily.
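A quick way to see how easily that happens is to compare the aggregate throughput of a full 24-bay chassis with a single four-lane link. This is a sketch under stated assumptions: one mini-SAS link only, 8b/10b encoding, and per-drive sequential throughput figures that are illustrative guesses rather than measurements from our testing. Lane rates of 6Gb/sec and 12Gb/sec are both shown because the link speed depends on the expander and HBA generation in use.

```python
# Rough oversubscription estimate for a 24-bay JBOD behind ONE 4-lane
# mini-SAS link. All per-drive figures are illustrative assumptions.


def link_mb_s(lanes: int = 4, lane_gbps: float = 12.0) -> float:
    """Usable MB/s of a SAS wide port, assuming 8b/10b line encoding."""
    return lanes * lane_gbps * 1e9 * (8 / 10) / 8 / 1e6


def oversubscription(n_drives: int, drive_mb_s: float, port_mb_s: float) -> float:
    """Aggregate drive throughput / link throughput; above 1.0 the link is the bottleneck."""
    return n_drives * drive_mb_s / port_mb_s


# Assumed sustained sequential throughput per drive type, in MB/s.
ASSUMED_DRIVE_MB_S = {
    "5400 RPM SATA": 100,
    "15,000 RPM SAS": 200,
    "SAS SSD": 500,
}

for lane_gbps in (6.0, 12.0):
    port = link_mb_s(lane_gbps=lane_gbps)
    for kind, mb_s in ASSUMED_DRIVE_MB_S.items():
        ratio = oversubscription(24, mb_s, port)
        print(f"{lane_gbps:>4} Gb/s lanes, 24 x {kind}: {ratio:.2f}x")
```

With these guesses, 24 fast spinning disks streaming sequentially roughly match a 12Gb/sec four-lane link, and SSDs overrun it. Random I/O on spinning disks moves far less data per drive, which is why the answer depends so heavily on what you are doing to the disks.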

Object-based storage systems can scale out to more physical disks than the JBOD high-availability setups these devices are typically used to build, but nothing says JBODs have to be deployed in HA setups. AIC and other JBOD chassis vendors offer models designed to maximise the number of disks per system rather than to focus on high availability.

There is nothing that – in theory – stops you from building object storage arrays where an individual "system" controls an entire rack's worth of disks. I can't find anyone doing that, however, largely because that is an awful lot of spindles to be made unavailable if a single server dies. Getting the balance just right is the focus of many a doctoral thesis and the fevered marketing of everyone from Supermicro to Nutanix.
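The trade-off is easy to quantify. This sketch assumes a hypothetical 480-disk object store in which each disk is reachable through exactly one server (no HA pairing), and 4TB drives; every number here is an illustrative assumption. It reports what fraction of spindles vanish when one server dies and, in the worst case where those disks are full, how much data the cluster has to re-protect.

```python
# Hypothetical "blast radius" of losing one server in a non-HA object store.
# Assumes each disk is attached to exactly one server.

TOTAL_DISKS = 480  # hypothetical cluster size
DISK_TB = 4        # assumed drive capacity


def blast_radius(disks_per_server: int, total_disks: int) -> float:
    """Fraction of the cluster's spindles offline when one server dies."""
    return disks_per_server / total_disks


for per_server in (24, 48, 240):
    frac = blast_radius(per_server, TOTAL_DISKS)
    worst_case_tb = per_server * DISK_TB  # if every affected disk were full
    print(f"{per_server} disks/server: {frac:.0%} of spindles offline, "
          f"up to {worst_case_tb} TB to re-protect")
```

A 24-bay chassis per server costs a small slice of the cluster when a box dies; a rack's worth of disks behind one server takes out half of it and triggers an enormous rebuild.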

You can now officially attach more disks to a single server than most sane people would ever want to. If your goal is to build a Massive Array of Idle Disks (MAID) then I can't see why you'd ever use anything else. Even if you are building some big, sexy storage backend that will eventually become a SAN for virtualisation, Nexenta's numbers on this argue that you can do quite well with this arrangement.

The next few years are going to contain the most bitterly fought battles the storage wars have seen for some time. Enterprise SAN vendors are under siege from all sides. JBOD HA approaches will square off against object-based storage, virtual storage appliances and network-backed block-level replication schemes. If you're a storage vendor then you are most certainly living in interesting times. ®