Original URL: https://www.theregister.com/2013/11/08/doctor_who_telly_special_effects_tech/

Classic telly FX tech: How the Tardis flew before the CGI era

How the special effects boys made magic in the 1960s, 70s and 80s

Posted in Personal Tech, 8th November 2013 15:00 GMT

Doctor Who @ 50

These days it’s all done with computers, of course.

CGI – short for Computer-Generated Images, or Imagery – was a well-established visual effects technique long before Doctor Who was rebooted in 2005, so it was never in doubt that on-set mechanical effects would be duly combined with CGI visuals during post-production. Both the new series’ CGI and its picture compositing work were handled by The Mill until the company closed its UK TV branch in April 2013.

A rare use of CGI in classic Who: Sylvester McCoy’s opening titles

Source: BBC

TV production, composition and storage are now entirely digital, so computers are a necessary and inherent part of the production process. Not so in the 1960s and 70s, during the classic series’ lifetime. Back then the final product was analogue: two-inch Quad videotape masters made from edited videotaped studio footage and telecined 16mm film from location work and model shots.

By the end of Doctor Who’s initial run, computers were already being used in TV graphic design, model photography and video effects. Think, respectively, of Oliver Elmes’ title sequence for Sylvester McCoy’s Doctor, of the motion-control model shot of the Time Lord space station that opens the mammoth Trial of a Time Lord season, and of the various pink skies and blue rocks applied to extraterrestrial environments during the Colin Baker and McCoy eras.

Yet all these computer applications ultimately still resulted in analogue footage. A sequence shot on analogue videotape would be digitised, tweaked in a gadget like Quantel’s Paintbox rig, and then converted back into the analogue domain to be edited into the rest of the (also analogue) material. During the early 1980s (1983 in the case of Doctor Who) the BBC moved from two-inch Quad tapes to the more compact, more sophisticated one-inch C Format tape, but it was still analogue.

Want to pull the Doctor apart? You’ll be wanting Paintbox then

Source: BBC

Paintbox was introduced in 1981 but didn’t achieve widespread use until the middle of the decade. Then as now, doing effects digitally was relatively easy: it’s just a matter of altering or combining pixel colour values. If one of Image A’s pixels in the framestore has a certain RGB value, write the output pixel value from stored Image B instead. Creating a good “green screen” shot is a little more complicated than that, of course, but that is essentially the algorithm for, say, superimposing a shot of the Doctor onto Raxacoricofallapatorius or one of RTD’s other worlds with outlandish names.
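The substitution rule above can be sketched in a few lines of Python. This is an illustration, not Quantel’s or The Mill’s code: real footage never hits one exact RGB value, so the Euclidean colour distance and the `tolerance` threshold here are assumptions standing in for “a certain RGB value”, and the images are plain nested lists rather than a real framestore.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels; both images the same size

def colour_distance(a: Pixel, b: Pixel) -> float:
    """Straight-line distance between two RGB values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def colour_key(foreground: Image, background: Image,
               key: Pixel, tolerance: float) -> Image:
    """Take the background pixel wherever the foreground is near the key colour."""
    return [
        [bg if colour_distance(fg, key) <= tolerance else fg
         for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]

# One row, two pixels: the Doctor's face, then a patch of blue screen.
doctor = [[(200, 150, 120), (10, 10, 250)]]
alien_sky = [[(90, 20, 120), (180, 80, 40)]]
print(colour_key(doctor, alien_sky, key=(0, 0, 255), tolerance=40.0))
# [[(200, 150, 120), (180, 80, 40)]]
```

The face pixel is far from blue and survives; the near-blue pixel is swapped for the alien sky behind it.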

Creating the same shot entirely with analogue kit was something else, however. So how did Doctor Who’s special effects technicians make the Tardis and all those silver-sprayed washing-up liquid bottle spacecraft seem to fly through the stars, and make the Doctor appear to be slugging it out with little green men on alien sands when yet another Buckinghamshire quarry shot simply would not do?

Back in the 1960s, even the basics of colour video signal manipulation were unavailable to the special effects boys. As tight as Doctor Who’s budget was, its designers and the model makers who turned blueprints into physical objects for photography were able to come up with incredibly detailed work.

Existing footage doesn’t do the Dalek city model work justice. Here it’s filmed alongside actors in a false-perspective shot

Source: BBC

Looking back at the early stories on DVD, it can be hard to appreciate how good the original imagery was, even though tellies back then used a mere 377 lines to display the picture. Most of those releases, though restored, still derive from low-quality film or video duplicates many generations removed from the original footage.

The first Doctor Who stories, recorded in 1963, were transmitted using the System A format. Devised by EMI, System A streamed television pictures as sequential fields of alternating lines, two fields interlaced together forming a single frame. The moving picture was transmitted at a rate of 50 fields every second – so 25 frames a second – to harmonise it with the frequency of the mains electric current driving studio lighting. To have used a different frequency would have introduced strobing picture interference.

System A actually used 405 scan lines per frame, but only 377 carried the picture; the rest were left blank to give slow display circuitry time to catch up with the incoming signal at the start of each new field. Not only did System A offer around 65 per cent of the vertical resolution of later standards, it couldn’t do colour either.
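The figures above are easy to check. A quick Python sketch, assuming the 576 visible lines of the later 625-line PAL system as the point of comparison for “later standards”:

```python
FIELDS_PER_SECOND = 50      # locked to the 50Hz mains frequency
FIELDS_PER_FRAME = 2        # two interlaced fields make one frame

SYSTEM_A_TOTAL_LINES = 405
SYSTEM_A_PICTURE_LINES = 377
LATER_PICTURE_LINES = 576   # assumed comparison: visible lines of 625-line PAL

frames_per_second = FIELDS_PER_SECOND // FIELDS_PER_FRAME
blanked_lines = SYSTEM_A_TOTAL_LINES - SYSTEM_A_PICTURE_LINES
relative_resolution = SYSTEM_A_PICTURE_LINES / LATER_PICTURE_LINES

print(frames_per_second)              # 25
print(blanked_lines)                  # 28
print(f"{relative_resolution:.0%}")   # 65%
```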

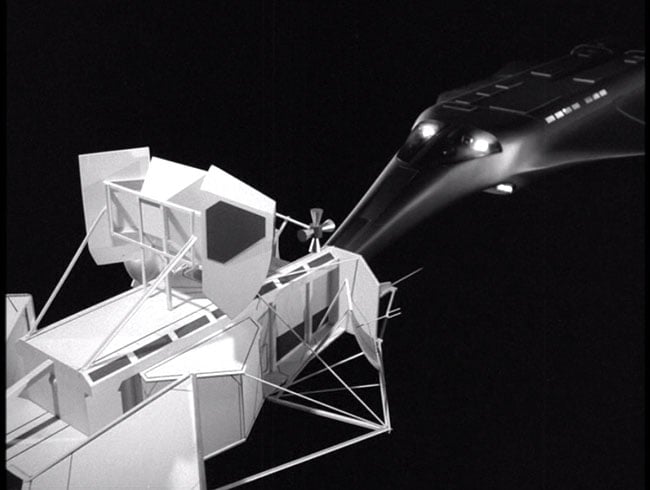

There’s some nice model work in The Space Pirates - but no stars

Source: BBC

Video might have been fine for capturing actors’ performances on brightly lit studio sets, but it wasn’t really any good for model work: videotape made models look even more like models than they did anyway, a flaw that some of the model shots in Frontier in Space, Carnival of Monsters and, later, Full Circle demonstrate perfectly.

So effects shots were filmed, allowing photographers to use more subtle lighting, then telecined to videotape and edited into the master tape. Rockets, say, were mounted in front of a suitably starry backdrop, typically a back-lit black cloth with tiny holes cut where the photographer wanted the stars to appear. Sometimes the technicians didn’t even bother with stars. Quite a few of the later Patrick Troughton stories, most notably The Space Pirates, are full of ship models moving across the screen against a plain black background.

Best of PALs

In the early days, spacecraft were shot at standard camera speed. Only later would filmed effects be captured at high speed so that, when played back at normal speed, the models’ jerky movements would be slowed and smoothed out, creating a better sense of scale. Ian Scoones’ work on the Jagaroth spacecraft in City of Death, shot in 1979, is still gorgeous to look at more than 30 years on.
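The arithmetic behind high-speed model filming is simple: footage shot at N frames a second and played back at the normal 25 is slowed by a factor of N/25. The second function below encodes a commonly quoted miniature-photography rule of thumb, shooting a 1:N scale model at the square root of N times normal speed; it is an assumption added for illustration, and nothing above says what camera speeds the BBC’s effects teams actually used.

```python
import math

PLAYBACK_FPS = 25  # assumed: film for UK television ran at 25 frames a second

def slowdown(capture_fps: float, playback_fps: float = PLAYBACK_FPS) -> float:
    """How many times slower the action looks when played back at normal speed."""
    return capture_fps / playback_fps

def rule_of_thumb_fps(scale_denominator: float,
                      playback_fps: float = PLAYBACK_FPS) -> float:
    """Rule of thumb for a 1:N model: shoot at sqrt(N) times normal speed."""
    return playback_fps * math.sqrt(scale_denominator)

print(slowdown(100))           # 4.0: four times slower on screen
print(rule_of_thumb_fps(16))   # 100.0 for a 1:16 miniature
```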

You could do some clever stuff with CSO, if you were careful

Source: BBC

Effects shots that required actors to appear in them were achieved by masking off a section of the set in black, then filming the thesps doing their thing. The film would be rolled back through the camera and re-exposed, this time to a real or model set masked to ensure the already exposed areas of the film were not exposed a second time. The result: one piece of film containing two separately shot images which combine into a single shot. This is how the lifts in the Dalek city on Skaro were achieved in the second Doctor Who story, The Mutants, later renamed The Daleks.
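The double-exposure trick can be modelled as two passes of light onto the same film, each blocked from part of the frame. In this Python sketch, a hypothetical simplification, exposure simply adds up, the blacked-out part of the set is treated as contributing no light, and the second pass uses the exact inverse of the first pass’s mask, so every part of the frame is exposed once.

```python
from typing import List

Frame = List[List[float]]   # light reaching each point of the film, 0.0-1.0
Matte = List[List[bool]]    # True where light gets through, False where masked

def expose(film: Frame, scene: Frame, matte: Matte) -> Frame:
    """One pass through the camera: add the scene's light only where unmasked."""
    return [
        [f + (s if is_open else 0.0)
         for f, s, is_open in zip(film_row, scene_row, matte_row)]
        for film_row, scene_row, matte_row in zip(film, scene, matte)
    ]

def counter_matte(matte: Matte) -> Matte:
    """Mask for the second pass: open exactly where the first was masked."""
    return [[not m for m in row] for row in matte]

unexposed = [[0.0, 0.0]]
actors = [[0.8, 0.3]]       # pass 1: actors on the studio set
lift_model = [[0.1, 0.6]]   # pass 2: the model Dalek city lift
matte = [[True, False]]     # right-hand side blacked out on pass 1

film = expose(unexposed, actors, matte)                 # roll the film back...
film = expose(film, lift_model, counter_matte(matte))   # ...and expose again
print(film)   # [[0.8, 0.6]]
```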

This trick, like almost all of the early approaches to effects work, derived from cinema technique, despite the use of videotape and electronic cameras. It wasn’t until the adoption of the PAL (Phase Alternating Line) TV system – developed in 1962, established as a European standard in 1963, and put into use by the BBC on BBC 2 in 1967, with BBC 1 following in 1969 – that colour became possible and engineers could begin playing with the signals to see what could be achieved entirely in the video domain.

PAL increased the number of lines to 625, of which only 576 are used for the visible picture, again transmitted as 50 alternate-line fields every second. Each field is interlaced with its predecessor to display 25 frames each second. Like System A, PAL primarily transmits a monochrome image: the main signal defines the brightness of a given point on the screen, called its ‘luminance’. Carried within that main signal is a quadrature amplitude modulated sub-carrier bearing the colour information, a signal that black and white TVs simply ignore and which is configured not to interfere with the luminance signal.
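The quadrature amplitude modulated sub-carrier can be modelled compactly by treating its two colour signals, conventionally called U and V, as the real and imaginary parts of one complex number: amplitude then carries saturation and phase carries hue. That shows why phase errors picked up in transmission were a worry, and what PAL’s phase alternation does about them. V is inverted on every other line, so after the receiver flips it back, an error that shifts the hue one way on one line shifts it the other way on the next, and averaging the two lines, as delay-line receivers do, cancels it. This is an idealised sketch assuming both lines carry the same colour; the carrier waveform, filtering and colour burst are left out.

```python
import cmath
import math

def transmit(u: float, v: float, line: int) -> complex:
    """Chroma for one line as a phasor: U + jV, with V inverted on odd lines."""
    return complex(u, -v if line % 2 else v)

def receive(chroma: complex, line: int, phase_error: float) -> complex:
    """Add a phase error picked up on the way, then undo the V inversion."""
    received = chroma * cmath.exp(1j * phase_error)
    return received.conjugate() if line % 2 else received

def pal_average(u: float, v: float, phase_error: float) -> complex:
    """Average two consecutive decoded lines, as a delay-line decoder does."""
    line_a = receive(transmit(u, v, 0), 0, phase_error)
    line_b = receive(transmit(u, v, 1), 1, phase_error)
    return (line_a + line_b) / 2

u, v = 0.3, 0.4
true_colour = complex(u, v)
err = math.radians(10)

one_line = receive(transmit(u, v, 0), 0, err)
averaged = pal_average(u, v, err)

# A single line's hue is off by the full 10 degrees...
print(round(math.degrees(cmath.phase(one_line) - cmath.phase(true_colour)), 6))       # 10.0
# ...but the averaged hue is correct; only saturation drops, by cos(error)
print(abs(round(math.degrees(cmath.phase(averaged) - cmath.phase(true_colour)), 6)))  # 0.0
print(math.isclose(abs(averaged) / abs(true_colour), math.cos(err)))                  # True
```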

CSO behaving badly

Source: BBC

PAL’s colour signal carried two colour-difference levels, red minus luminance and blue minus luminance, together known as ‘chrominance’. Green wasn’t transmitted at all: because luminance is a weighted sum of red, green and blue, the receiver could derive the green level by taking the red and blue contributions at a given point away from the luminance level at that same point. That saved bandwidth. Colour detail was also effectively halved vertically, though that was a side-effect of PAL’s line-by-line alternation of the colour signal’s phase: receivers averaged the colour of adjacent lines so that phase errors cancelled out. Fortunately, the human eye is less sensitive to fine colour detail than to changes in brightness, so the lower colour resolution was not a problem.
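Recovering green works because luminance is a fixed weighted sum of all three primaries, and PAL sends colour-difference signals (red minus luminance, blue minus luminance) rather than raw red and blue. The sketch below uses the standard PAL luminance weights of 0.299, 0.587 and 0.114 (the same figures as ITU-R BT.601); it works in an idealised 0.0-1.0 signal range and leaves out the scaling PAL applies to the colour-difference signals before modulating them onto the sub-carrier.

```python
# Luminance weights used by PAL (the same figures appear in ITU-R BT.601)
KR, KG, KB = 0.299, 0.587, 0.114

def encode(r: float, g: float, b: float) -> tuple:
    """Luminance plus the two colour-difference signals; green isn't sent."""
    y = KR * r + KG * g + KB * b
    return y, r - y, b - y            # Y, R-Y, B-Y

def decode(y: float, r_minus_y: float, b_minus_y: float) -> tuple:
    """Receiver side: recover red and blue, then work green out from Y."""
    r = r_minus_y + y
    b = b_minus_y + y
    g = (y - KR * r - KB * b) / KG    # take red and blue's share away from Y
    return r, g, b

print([round(c, 6) for c in decode(*encode(0.2, 0.7, 0.4))])  # [0.2, 0.7, 0.4]
```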

One upshot of all this was the technique BBC engineers called Colour Separation Overlay, or CSO. Their counterparts at the ITV companies called it Chromakey. Whatever the moniker, the essence of the technique is substituting one picture signal for part of another, with the substitution keyed to a specific colour, hence the ITV name.

The signal from the foreground camera was run through a ‘key generator’ that essentially created a video mask derived from the key colour. After the key signal had been adjusted for hardness or softness, it was used to control the video mixer that combined the output from the foreground camera with the signal from the background camera.
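A key generator and mixer of this kind can be sketched per pixel. In this hypothetical Python version the key signal is 1.0 where a foreground pixel matches the key colour and falls off linearly with colour distance; the `inner` and `softness` parameters stand in for the hardness/softness adjustment, and the mixer is a simple crossfade between the two camera signals. Real CSO hardware worked on analogue signals, not RGB triples, so treat this as an analogy rather than a circuit description.

```python
from typing import Tuple

Pixel = Tuple[float, float, float]   # R, G, B, each 0.0-1.0

def key_signal(fg: Pixel, key: Pixel, inner: float, softness: float) -> float:
    """Key generator: 1.0 shows the background, 0.0 keeps the foreground.

    Within `inner` of the key colour the key is fully on; it then fades out
    linearly over a further `softness`. softness=0 gives a hard key.
    """
    d = sum((a - b) ** 2 for a, b in zip(fg, key)) ** 0.5
    if d <= inner:
        return 1.0
    if softness <= 0 or d >= inner + softness:
        return 0.0
    return 1.0 - (d - inner) / softness

def vision_mix(fg: Pixel, bg: Pixel, k: float) -> Pixel:
    """Mixer: crossfade from foreground to background under control of the key."""
    return tuple(f * (1.0 - k) + b * k for f, b in zip(fg, bg))

BLUE = (0.0, 0.0, 1.0)
fringe = (0.2, 0.0, 1.0)   # an edge pixel, part-way between subject and key colour
k = key_signal(fringe, BLUE, inner=0.1, softness=0.2)
print(round(k, 3))                                        # 0.5
print(vision_mix((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.5))  # (0.5, 0.0, 0.5)
```

A soft key blends edge pixels instead of snapping them to one picture or the other, which is why too hard a setting produces the crisp outlines visible on some CSO shots.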

Foreground: rocks and Daleks. Background: Dalek saucer and... er... technician’s screwdriver

Source: BBC

Unlike CGI, CSO mixing was done live, before the shot was recorded, so any errors remained for all to see. Studio-recorded TV in the 1970s and 80s generally lacked the time to remount shots with iffy CSO, so directors just had to live with glitches, of which there are many to be spotted in Doctor Who episodes from the period.

We’ve all seen them: dark or coloured lines at the borders between keyed picture components; parts of the foreground picture vanishing, the result of studio lighting casting light of the same hue as the key colour and wrongly triggering superimposition, or of the key-colour backdrop reflecting off a shiny object; and of course hair or glass not looking right because the intensity of the key colour had dropped out of range while passing through it.

The key colour, usually yellow in early 1970s Doctor Who episodes and blue later on, had to be carefully selected to avoid replicating natural human skin tones. Once the colour was chosen, parts of the set into which separately shot images would later be dropped (radar screens, windows, stuff like that) would be painted in the key colour. If the lighting was a bit off, the apparent colour intensity would fall below the level the mixer was expecting to key an image to. Costumes had to be designed to ensure they didn’t include the key colour. Naturally, that didn’t always happen.
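The lighting problem is easy to reproduce with a toy key test. The thresholds below (`min_level`, `margin`) are made-up values for a hypothetical blue key, not figures from any BBC mixer; the point is only that a correctly painted panel can still fail to key once under-lighting drags its apparent intensity below the threshold.

```python
from typing import Tuple

Colour = Tuple[float, float, float]   # R, G, B, each 0.0-1.0

def is_keyed(colour: Colour, min_level: float = 0.6, margin: float = 0.3) -> bool:
    """Hypothetical blue-key test: blue must be bright enough AND dominant."""
    r, g, b = colour
    return b >= min_level and b - max(r, g) >= margin

def lit(surface: Colour, illumination: float) -> Colour:
    """Apparent colour of a surface under a given fraction of full lighting."""
    r, g, b = surface
    return (r * illumination, g * illumination, b * illumination)

screen = (0.1, 0.15, 0.9)   # set panel painted in the key colour
skin = (0.9, 0.6, 0.5)

print(is_keyed(lit(screen, 1.0)))   # True: properly lit, keys cleanly
print(is_keyed(lit(screen, 0.6)))   # False: under-lit, blue falls out of range
print(is_keyed(lit(skin, 1.0)))     # False: skin tones don't trigger a blue key
```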

Chromakey monkey asleep at the wheel? The yellow section should have shown a futuristic monitor display

Source: BBC

And sometimes Vision Mixers, the guys charged with making the effect happen, simply forgot to key in the image to be superimposed. Watch out for unexpected large areas of yellow or blue behind the Doctor or others. Examples are legion, particularly when yellow was used as the key colour - blue might just occasionally be mistaken for sky.

With time for patient, careful set-up, CSO could be quite effective, and there’s plenty of good CSO in classic Doctor Who too. The best examples are barely noticeable. But time was not a plentiful commodity during the recording of a Doctor Who episode and the results could be very poor indeed.

Enter Computers, stage left

CSO had some inherent limitations too. Before the advent of motion-controlled camera rigs, it was impossible to move the cameras photographing the shot’s two components in perfect harmony. Nudge one and the background, say, would move while the foreground didn’t. Many a nicely keyed shot was spoiled by this kind of disjointed movement. It meant that CSO really couldn’t be used for anything other than static shots.

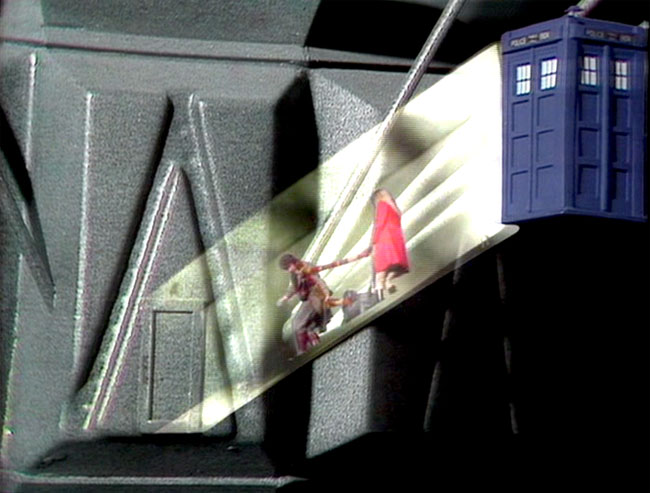

Scene-Sync, used only once in Doctor Who, motion-controlled CSO cameras

Source: BBC

It didn’t stop directors from trying, of course. A common technique was to film stock footage of smoke or rain in order to superimpose it on a previously recorded tracking shot. But while the background shot reflected the camera’s pan, the smoke or rain shot didn’t, rather giving the game away. Of course, you could make exactly that kind of mistake with the laborious optical printing process used by the movie business at the time, and many films did, but it seemed worse somehow on the small screen.

Eventually, Evershed Power Optics engineer Reg King came up with Scene-Sync, a motion control system which could co-ordinate two cameras’ movements to allow CSO background and foreground shots to move in harmony. A motion detection rig fitted to the first camera picked up pan and tilt movements and relayed them by cable to a slave unit which controlled the second camera. With careful calibration, the system could scale down movements to match the different scale of the subjects being shot. A camera recording a background model at 1:10 scale had to be panned a tenth as far as the master camera moved in order for the movements to match up.

Scene-Sync was first tried on Doctor Who during the recording of Season 18’s Meglos, which was also the only time it was used on the show. The production team allowed the story to be a guinea pig for the new technique, in exchange for which they got to use Scene-Sync for free. While the Meglos experiment provided valuable data that would be used to refine the technique for other shows, including The Borgias and Jane at War, the Doctor Who production team found the process’ manual calibration too time-consuming to rely on.

This was never going to look right

Source: BBC

In any case, just as computers were being used to control camera movements - a trick that goes back to the mid-1970s when it was pioneered on the likes of Star Wars - they were also moving into the video effects arena. As we’ve seen, Newbury, Berkshire-based Quantel launched Paintbox in 1981, and it soon became easier and cheaper to do picture composition and adjustment in the digital domain.

Paintbox comprised custom hardware that could grab two video fields, digitise them into a frame and store it. A keyboard, a tablet and a stylus were all a trained operator needed to combine video sequences, adjust the colours and paint in new ones, hence the name. In 1982, Quantel introduced Mirage, a box which allowed frames to be replicated, scaled, distorted and bounced around the screen. TV opening titles would never be the same again. As Paintbox quickly defined the look of early 1980s pop videos, so Mirage defined how a decade of television was presented.
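The field-grab step, combining two interlaced fields into one frame, is usually called “weaving”. A minimal Python sketch, assuming each field is just a list of scan lines and the first field carries the odd-numbered lines; it says nothing about how Quantel’s hardware actually stored them. Because the two fields are captured a fiftieth of a second apart, anything that moves between them shows up as a comb-like fringe in the woven frame.

```python
from typing import List, Tuple, TypeVar

Line = TypeVar("Line")

def weave(first_field: List[Line], second_field: List[Line]) -> List[Line]:
    """Interleave two fields into one frame: 1st, 2nd, 1st, 2nd... scan lines."""
    if len(first_field) != len(second_field):
        raise ValueError("both fields need the same number of lines")
    frame: List[Line] = []
    for top, bottom in zip(first_field, second_field):
        frame.extend([top, bottom])
    return frame

def split(frame: List[Line]) -> Tuple[List[Line], List[Line]]:
    """The reverse: pull a frame back apart into its two fields."""
    return frame[0::2], frame[1::2]

print(weave(["line 1", "line 3"], ["line 2", "line 4"]))
# ['line 1', 'line 2', 'line 3', 'line 4']
```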

Doctor Who benefited in particular from Paintbox, not merely because it soon proved a more flexible, more efficient alternative to CSO, but because it allowed those archetypal quarries-as-alien-landscape shots to be made to look even more extraterrestrial by colouring the rocks blue, painting the sky purple or dropping in shots of smoking volcanoes in the background. Quantel’s earlier digital effects kit had already allowed director Lovett Bickford to pull Tom Baker to bits in 1980’s The Leisure Hive.

Quantel’s Paintbox could easily be over-used...

Source: BBC

Shots of the Cheetah People planet in 1989’s Survival, for instance, are way ahead of comparable ‘alien panorama’ effect shots from just three or four years previously. Survival was the last classic Doctor Who story. In the years that followed, Quantel kit was improved but eventually replaced by desktop computers running off-the-shelf photo editing software. Couple that with 3D modelling software and it became possible to assemble complex CGI shots, initially static ones, then panning shots and later panning shots with small amounts of animated movement (smoke, birds and such) to convince viewers that what they’re seeing is real.

With the basic principle established, it was just a case of waiting for ever more powerful hardware to render all this imagery more quickly, to allow the pictures to be much more detailed, or both. Thanks to these resources, Doctor Who today looks more impressive than it ever has, better even than it did when it returned to TV screens eight years ago.

Of course, time and the budgets that determine how many person-hours are available continue to limit what effects shots can be achieved, which is why even now Doctor Who rarely goes OTT beyond a couple of episodes a series. But even a small number of carefully crafted tweaks can only do so much to sell a shot and convince the viewer he or she is looking at Second World War London, subterranean Silurian cities or rotating black holes at the edge of the universe.

...but it could also be applied to great effect to add moons and active volcanoes

Source: BBC

It’s also nice to know, particularly given Doctor Who’s long and much-mocked tradition of wobbly sets and iffy FX, that the production team’s reach still exceeds its grasp. Even today there are glitches, if you know where to look... ®