Original URL: https://www.theregister.com/2013/08/25/heterogeneous_system_architecture_deep_dive/

The 'third era' of app development will be fast, simple, and compact

Will Intel and Nvidia join the HSA party, or insist on going it alone?

Posted in Software, 25th August 2013 20:00 GMT

Hot Chips At the annual Hot Chips symposium on high-performance chippery on Sunday, the assembled chipheads were led through a four-hour deep dive into the latest developments on marrying the power of CPUs, GPUs, DSPs, DMA engines, codecs, and other accelerators through the development of an open source programming model.

The tutorial was conducted by members of the HSA – heterogeneous system architecture – Foundation, a consortium of SoC vendors and IP designers, software companies, academics, and others including such heavyweights as ARM, AMD, and Samsung. The mission of the Foundation, founded last June, is "to make it dramatically easier to program heterogeneous parallel devices."

As the HSA Foundation explains on its website, "We are looking to bring about applications that blend scalar processing on the CPU, parallel processing on the GPU, and optimized processing of DSP via high bandwidth shared memory access with greater application performance at low power consumption."

Last Thursday, HSA Foundation president and AMD corporate fellow Phil Rogers provided reporters with a pre-briefing on the Hot Chips tutorial, and said the holy grail of transparent "write once, use everywhere" programming for shared-memory heterogeneous systems appears to be on the horizon.

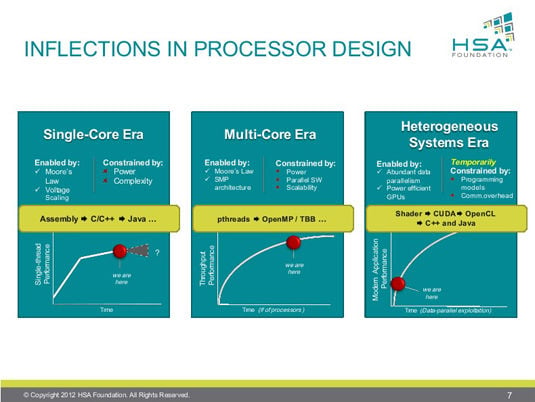

According to Rogers, heterogeneous computing is nothing less than the third era of computing, the first two being the single-core era and the multi-core era. In each era of computing, he said, the first programming models were hard to use but were able to harness the full performance of the chips.

"In the case of single core," Rogers said, "we started with assembly code, then we went to much better abstractions: structured languages, object-oriented languages, managed languages. At each stage you give up a little bit of performance for massive improvements in productivity, and the platform volumes grow extremely fast as programmers can use the platforms much more efficiently."

The same thing happened in the multi-core era, he said, moving from direct-thread programming to directive programming to task-parallel runtimes. In heterogeneous programming, however, that progression is just beginning. "We've gone from people writing shaders directly," he said, to proprietary languages such as CUDA, to open-standard languages such as OpenCL and C++ AMP.

"But ultimately," he said, "where the platform is going with HSA is to full programming languages like C++ and Java and many others."

Exactly how HSA will get there is not yet fully defined, but a number of high-level features are accepted. Unified memory addressing across all processor types, for example, is a key feature of HSA. "It's fundamental that we can allocate memory on one processor," Rogers said, "pass a pointer to another processor, and execute on that data – we move the compute rather than the data."

Full memory coherency, for another example, eliminates the need for software to manage caches. An Architected Queuing Language (AQL), Rogers said, will allow an application or a library to dispatch packets to a GPU in what he called a "vendor-agnostic" manner. To enable preemption and context switching for a variety of applications and application types, HSA will support time-slicing across the entire collection of processor types.

Rogers took pains to emphasize that HSA is "defined from the outset" to be an open platform, with its specifications owned by the Foundation and delivered by means of a royalty-free standard. "It's designed from the ground up to be ISA-agnostic for both the CPU and the GPU – obviously that's very important," he said, a shared goal that's reflected in the range of hardware, operating system, tools, and middleware companies that have signed on as Foundation members.

HSAILing into the future

The Foundation's first spec, the HSA Programmer's Reference Manual version 0.95 – which the HSA Foundation "affectionately" refers to as the HSAIL spec, for HSA Intermediate Language – was published this May, and is available for download on the Foundation's website. Additional specifications are under development for the HSA system architecture, the HSA runtime software, and tools such as a debugger, a profiler, and so forth.

HSAIL is a virtual, explicitly parallel ISA for parallel programs that's finalized by a JIT compiler that the Foundation, understandably, calls a Finalizer. HSAIL is "ISA independent by design," Rogers said, for both CPU and GPU. "There's nothing about HSAIL or HSA that constrains [independent hardware vendors] from their innovation in terms of how they implement the specification, and yet it guarantees compatibility for software."

The Foundation, Rogers said, has also defined what he characterized as a "very comprehensive" relaxed-consistency memory model for HSA. "We made sure during the design that it was compatible with all of the high-level language memory models, some of which were under development at the same time as HSA, so we tracked them in real time," he said, using as examples the C++11, Java and .NET memory models, and saying that HSA is compatible with all of them.

The HSA software model simplifies sending data to the GPU. But although applications can drive work to hardware directly, Rogers said, few application developers will choose to do that. "Many will go through optimized domain libraries and task-queuing libraries that will be optimized directly to the hardware queues," he said.

HSA and OpenCL, the open standard for parallel programming of heterogeneous systems, are intended to coexist. "HSA is an optimized platform architecture for OpenCL, it's not an alternative to OpenCL," Rogers said. "It runs OpenCL applications extremely well," and doing so results in "immediate" performance improvements and efficiencies, with wasteful copies eliminated and dispatch latencies reduced.

OpenCL 2.0, by the way, was announced by Khronos at SIGGRAPH last month, and Rogers said that its published specifications and features are in "considerable alignment" with the planned direction of the HSA platform.

When introducing a new platform such as HSA, Rogers said, it's "extremely important" to provide programmers with good libraries to take advantage of that platform. In the case of HSA, those libraries are now available in a template library for OpenCL and C++ AMP called Bolt.

"It has the scan, sort, reduce, and transform routines that you'd expect," he said, along with more-advanced routines such as heterogeneous pipelines to make it simple for programmers to run pipelines back and forth from the CPU to the GPU.

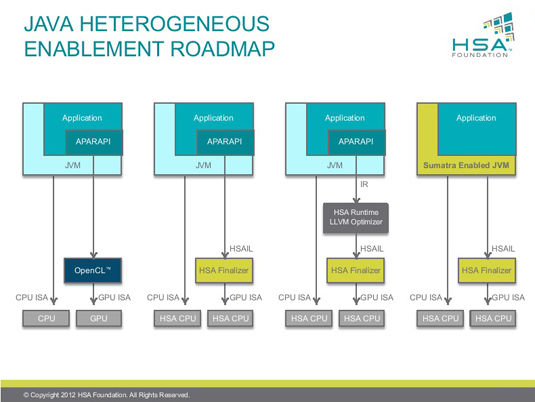
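
For readers unfamiliar with the scan, sort, reduce, and transform routines Rogers mentions, here is a minimal sketch of what each primitive computes. It is written in Java against the standard library's parallel array and stream helpers, which run on CPU threads. It is not Bolt's C++ API, and the class and method names are made up for illustration.

```java
import java.util.Arrays;

// Illustration only: four common data-parallel primitives expressed with
// Java 8 standard-library calls that run on CPU threads.
// This is NOT Bolt (a C++ library); class and method names are hypothetical.
final class PrimitivesSketch {

    // transform: apply the same function to every element independently.
    static int[] transform(int[] in) {
        return Arrays.stream(in).parallel().map(x -> x * x).toArray();
    }

    // reduce: combine all elements into one value with an associative operator.
    static int reduce(int[] in) {
        return Arrays.stream(in).parallel().reduce(0, Integer::sum);
    }

    // scan: inclusive prefix sum, so out[i] = in[0] + ... + in[i].
    static int[] scan(int[] in) {
        int[] out = in.clone();
        Arrays.parallelPrefix(out, Integer::sum);
        return out;
    }

    // sort: ascending order, using the library's parallel sort.
    static int[] sort(int[] in) {
        int[] out = in.clone();
        Arrays.parallelSort(out);
        return out;
    }

    public static void main(String[] args) {
        int[] data = {3, 1, 4, 1, 5};
        System.out.println(Arrays.toString(transform(data)));
        System.out.println(reduce(data));
        System.out.println(Arrays.toString(scan(data)));
        System.out.println(Arrays.toString(sort(data)));
    }
}
```

Each routine treats the whole array as one operation rather than a hand-written loop, which is what lets a runtime decide how to split the work across whatever compute units are available.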

HSA for Java is particularly interesting, Rogers said, due to what he called the "predominance of Java in server installations, data centers, and cloud servers." He pointed to the open source Aparapi library, a Java-bytecode-to-OpenCL runtime converter that supports parallel processing on GPUs or thread management on multi-core CPUs.

"We looked at what the roadmap should be for Java," Rogers said when displaying the slide below. "The left column shows the Aparapi stack on OpenCL, and then you can see a progression where we take Aparapi directly to the HSA Finalizer, and then through the [low level virtual machine] optimizer to that Finalizer."

As Java evolves, a new JVM will enable data-parallel code without third-party help

While each of those three steps will boost performance, Rogers said the fourth step is where HSA will really shine. "The ultimate goal," he said, "is to put heterogeneous acceleration directly into the Java virtual machine, because Java virtual machine features and core features of the Java language naturally get more adoption than third-party libraries."

That's where Project Sumatra comes in: an open source OpenJDK project cosponsored by AMD and Oracle that's targeted for release in Java 9 in 2015. It is designed to enable developers to write and execute data-parallel algorithms in Java with GPU acceleration, somewhat similar to what Java 8's Lambda feature does for multi-core CPUs.
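
To make the multi-core half of that comparison concrete, here is a small sketch of lambda-based data-parallel code as Java 8 runs it across CPU cores. It uses only the standard `IntStream` API; the class and method names are hypothetical, and nothing here touches a GPU. The briefing did not show what Sumatra code will look like, so this illustrates only the Java 8 style that Sumatra is being compared to.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

// Illustration only: a data-parallel loop written as a Java 8 lambda.
// It runs on the CPU's common fork/join pool; no GPU offload is involved.
// Class and method names are hypothetical.
final class ParallelLambdaSketch {

    // Computes out[i] = a * x[i] + y[i] for every index.
    // Each index is independent of the others, so the runtime is free
    // to process them in any order and on any available core.
    static float[] saxpy(float a, float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("x and y must have the same length");
        }
        float[] out = new float[x.length];
        IntStream.range(0, x.length)
                 .parallel()
                 .forEach(i -> out[i] = a * x[i] + y[i]);
        return out;
    }

    public static void main(String[] args) {
        float[] x = {1f, 2f, 3f};
        float[] y = {10f, 20f, 30f};
        System.out.println(Arrays.toString(saxpy(2f, x, y)));
    }
}
```

The lambda body plays the role of a kernel: it describes the work for one index and leaves scheduling to the runtime, which is the property that makes this style a natural candidate for running on other kinds of processors.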

Shrinking apps will result in more apps

HSA, Rogers promised, will be smart enough to know when to pass what data to what processor, be it CPU, GPU, or some other accelerator. Haar face detection, for example, works by running pixels of an image of a face through several passes called "cascades", some better suited to a CPU and some to a GPU, though each cascade requires both.

Processing of suffix array data structures, on the other hand, which are used for text and "big data" analytics, is a pipeline in which some stages work best on the GPU and some on the CPU. HSA will be smart enough to perform the compute-unit balancing in the Haar face detection and the stage-switching in the suffix array processing.

It's not just performance that HSA is aimed at, however – it's also code complexity. "A huge motivation in the HSA architecture," Rogers said, "is ease of programming."

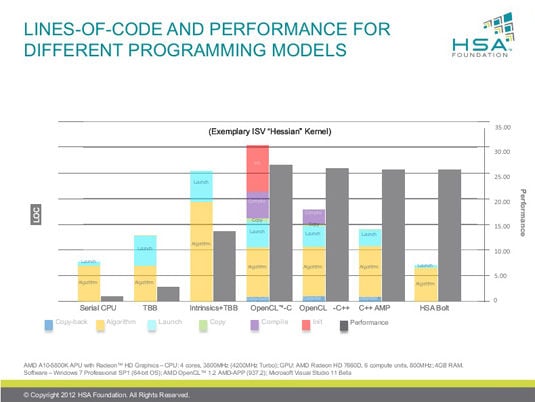

He cited examples of various computing systems running the Hessian kernel used in image processing to illustrate HSA's ability to both boost processing and simplify code. The tests were run on a serial CPU, Threading Building Blocks (task-parallel runtime acceleration), added intrinsics (CPU assembly code), OpenCL with C semantics, OpenCL with C++ kernel language, C++ AMP, and finally HSA using the Bolt library.

In the serial CPU example, code was compact and straightforward, but performance was low. As code complexity (measured in lines of code) increased, so did performance, which peaked at the OpenCL with C semantics test. Code complexity dipped substantially thereafter, but performance dipped only slightly. In the test using HSA with the Bolt library, performance was marginally lower, but code complexity was substantially reduced.

"The code complexity is going down," Rogers said, "which means that the number of applications will go up."

The final HSA specifications should be finished mid-2014 – but as Rogers cautioned, "Honestly, closing specifications in a foundation or consortium with a lot of members is an inexact science." ®

Bootnote

Conspicuous in their absence from the HSA Foundation membership list are Nvidia, promoter of its own CUDA parallel-processing platform, and Intel. "The HSA Foundation has invited both companies, and we would welcome either or both of them to join us," Rogers said when their absence was pointed out during the pre-briefing's Q&A session.

"I will say that over time it's my hope they will join in. Ultimately it's good for the industry, it's good for the application developer, it's good for end users when we get to open standards and the hardware companies compete based on performance, power, and extension features. Having multiple different but equivalent ways of doing the same thing really doesn't benefit anyone."