Original URL: https://www.theregister.com/2013/06/25/the_future_of_moving_images_the_eyes_have_it/

The future of cinema and TV: It’s game over for the hi-res hype

All you've ever been told, and sold, about moving pictures is wrong

Posted in Personal Tech, 25th June 2013 10:18 GMT

Feature: Digital video guru and author of The MPEG Handbook, John Watkinson, examines the next generation of TV and film and reveals it shouldn't be anything like what we're being sold today.

The next time you watch TV or go to the movies, bear in mind that you are not actually going to see any moving pictures at all. The movement is an illusion and it’s entirely in your own mind.

What is actually on the screen doesn’t move. It is a series of still pictures that are each held there for a short while before being replaced.

Currently, the obsession is with ever-higher pixel counts, an approach that disregards how we actually see moving images. If broadcasters have their way, we could be on course for some ridiculous format decisions.

Intuitively, you would think that the frame rate - the number of pictures per second - would have quite a large bearing on the quality of the illusion, and you would be right. Equally, you might think that the film and TV companies had done a lot of research into human vision in order to choose those rates that have been in use unchanged for decades. Unfortunately, you would be wrong.

Let’s see how we arrived at the current frame rate of movie film: 24 frames per second. Silent movies ran even slower, at about 16fps, but when the optical soundtrack was invented, it was found that the sound was too muffled at the old film speed because the optics couldn’t resolve the higher pitches in the soundtrack. So the film was sped up in order to get enough sound quality. The use of 24fps in movies has nothing whatsoever to do with any study of human vision.

American television runs at 60 fields per second, whereas in the old world we get along with 50. Do Americans have better vision than Europeans so they need a higher rate? As many Americans are descendants of émigrés from the Old World, that's not very likely. Fact is, the rates used in television were chosen to be the same as the frequency of the local electricity supply because of fears that different rates might interfere or beat with electric light. Once more, the decision had nothing to do with human vision. The entire edifice of film and TV picture rates has no foundation and it is going to have to change.

Fields of view

Projecting a film at its native 24fps, with each frame shown just once, would flicker badly enough to risk giving the audience headaches or triggering epilepsy. In practice, every film frame was flashed twice on the screen. The projector had a two-bladed shutter and a new frame was pulled down only while one of the blades was blocking the light. That gives the illusion of 48fps, provided nothing moves.

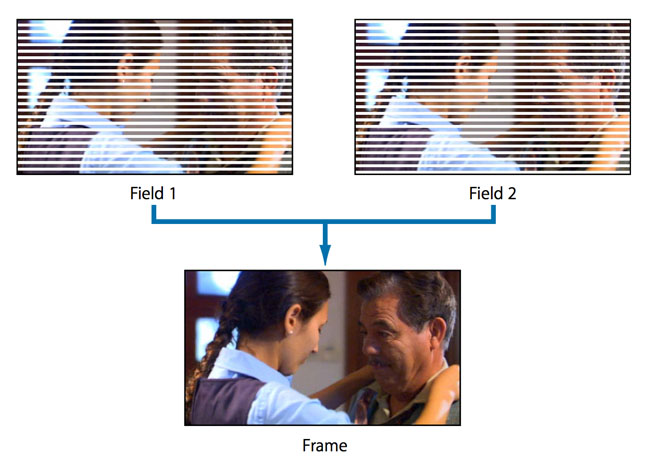

The TV industry must have taken note that the movies were short-changing their audiences by showing every frame twice, so they thought up a similar cost-saving mechanism, called interlacing. Pictures are grouped into pairs of fields, called odd and even. In one, the even-numbered lines are simply discarded to make a field, whereas in the other, the field is made by discarding the odd-numbered lines. When the two types of field are presented in rapid succession, all of the lines in the frame become visible again, provided nothing moves.
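The field split, and why the lines only mesh again when nothing moves, can be sketched with a toy frame stored as a list of rows. The helper names below are hypothetical, and the sketch models a camera whose odd field is captured one field period after the even field; it shows the mismatch in the woven picture rather than modelling the tracking eye.

```python
# Toy interlace model: a frame is a list of rows, each row a list of 0/1 pixels.
# Helper names are hypothetical, chosen for this illustration.

def make_frame(width, height, edge_x):
    """Frame with a bright area: pixels left of edge_x are 1, the rest 0."""
    return [[1 if x < edge_x else 0 for x in range(width)] for _ in range(height)]

def split_fields(frame):
    """Even field keeps lines 0, 2, 4...; odd field keeps lines 1, 3, 5..."""
    return frame[0::2], frame[1::2]

def weave(even_field, odd_field):
    """Interleave two fields back into a full frame (assumes equal field sizes)."""
    frame = []
    for even_line, odd_line in zip(even_field, odd_field):
        frame.append(even_line)
        frame.append(odd_line)
    return frame

# Nothing moves: both fields come from the same picture and mesh perfectly.
static = make_frame(8, 6, edge_x=4)
assert weave(*split_fields(static)) == static

# Something moves: the odd field is captured one field period later,
# by which time the edge has moved 2 pixels to the right.
even_t0, _ = split_fields(make_frame(8, 6, edge_x=4))
_, odd_t1 = split_fields(make_frame(8, 6, edge_x=6))
combed = weave(even_t0, odd_t1)
for row in combed:
    print("".join("#" if p else "." for p in row))
```

Printed, the woven frame alternates between `####....` and `######..` rows: the odd and even lines no longer fit back together.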

The proponents of frame repeat in cinema and interlace in television had made the classic mistake of assuming all that matters is the time axis. Along the time axis, frame repeat doubles the effective frame rate, cutting flicker, and two interlaced fields correctly mesh together again.

There’s a whopping and tragic flaw in these assumptions, and it’s due to the simple fact that the human eye can move. Everyone who has tried to photograph moving objects knows that if the camera is held still, the image is smeared by the motion, and if the camera follows, or pans with, the motion, the moving object is sharp.

The eye evolved to track moving objects of interest as a simple survival tool. In real life, the tracking eye renders the image stationary on the retina and detail can be seen despite motion. Self-evidently, the eye can only track one object at a time and everything else will be in relative motion.

However, when the eye tracks a “moving” object on a screen, the same level of detail won’t be seen, not least because the object in each frame isn’t actually moving. This can be seen in Figure 1. There are several more reasons for loss of detail, which we can examine here.

a) If the eye were fixed, it would effectively scan the detail in a moving object, turning that detail into a signal changing too rapidly with time for the eye to handle, so it would look blurred.

b) In real life the eye tracks an object of interest, so it becomes stationary on the retina, allowing detail to be seen.

In the case of cinema, the static resolution of the film is quite good in 35mm and startling in 70mm, but the audience can’t enjoy it. One reason is the frame repeat. The two versions of the same frame are identical, but to an eye tracking across the screen they appear in two different places. Thus the moving object of interest that is being tracked is blurred at low eye-tracking speeds, and at higher speeds a second image is seen displaced from the first.
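To put a number on the double image, here is a minimal sketch under simplifying assumptions: each flash is treated as an instant, the eye pans perfectly smoothly at the object's speed, and the object crosses a 1920-pixel-wide frame in four seconds (480 pixels per second). These figures and the function name `retinal_offsets` are illustrative, not from the article.

```python
def retinal_offsets(speed_px_per_s, frame_rate=24, flashes_per_frame=2, frames=3):
    """For each flash, where the object lands relative to where the tracking eye is aimed.

    Assumptions: flashes are instantaneous, the eye pans smoothly at the
    object's speed, and the object is drawn at its correct position for
    the first flash of each frame.
    """
    offsets = []
    for n in range(frames):
        frame_time = n / frame_rate
        object_on_screen = speed_px_per_s * frame_time  # held still for the whole frame
        for k in range(flashes_per_frame):
            flash_time = frame_time + k / (frame_rate * flashes_per_frame)
            eye_position = speed_px_per_s * flash_time  # the eye keeps moving
            offsets.append(object_on_screen - eye_position)
    return offsets

# 480 px/s: an object crossing a 1920-pixel-wide frame in four seconds.
print([round(o, 6) for o in retinal_offsets(480)])
# → [0.0, -10.0, 0.0, -10.0, 0.0, -10.0]
```

Every second flash lands 10 pixels behind the first, which is simply speed / (frame rate × flashes per frame) = 480 / 48. Small separations read as blur; larger ones become a visible second image. With `flashes_per_frame=1` every offset is zero, but then the picture flickers.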

The static resolution of moving imaging systems is never reached; figures like the number of lines in the TV picture or the number of pixels in a frame are for marketing to suckers who think bigger numbers must be better.

A more useful metric for a moving picture is dynamic resolution: the apparent resolution presented to a tracking eye. The present fixation with static resolution leads to completely incorrect decisions being made. It’s the reason why today’s HDTV couldn’t be dramatically better than SDTV. How could it be when the frame rates remained the same?

Retina displays

Another normal feature of eye tracking is that if the object of interest is held still on the retina in real life, everything else gets smoothly blurred. But in artificial moving images this doesn’t happen. Instead everything but the tracked object seems to jump across the field of view at the frame rate. This is called ‘background strobing’, or just ‘strobing’.

Most of the grammar of cinematography is about avoiding strobing. One approach is to use long shutter times so that motion is smeared and the strobing is harder to see. Then you have to use tracking shots to keep your subject sharp so it is only the background that moves. Cinema also uses physically huge film frames or sensors, and huge lenses. By throwing the background out of focus the strobing is diminished. In cinema everything is controlled.
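The trade-off can be put in numbers. This sketch borrows the film convention of a "shutter angle" (the shutter is open for angle/360 of each frame period), which the article itself does not use, and assumes the background moves at 480 pixels per second relative to the tracked subject at 24fps. It compares how far the background jumps between pictures with how far it smears during each exposure.

```python
def exposure_time(frame_rate, shutter_angle_deg):
    """Film convention: the shutter is open for angle/360 of each frame period."""
    return (shutter_angle_deg / 360.0) / frame_rate

def strobe_step(speed_px_per_s, frame_rate):
    """How far the background jumps from one picture to the next."""
    return speed_px_per_s / frame_rate

def smear_length(speed_px_per_s, frame_rate, shutter_angle_deg):
    """How far the background moves while the shutter is open (its motion blur)."""
    return speed_px_per_s * exposure_time(frame_rate, shutter_angle_deg)

speed = 480  # assumed background speed relative to the tracked subject, px/s
for angle in (90, 180, 270):
    step = strobe_step(speed, 24)
    smear = smear_length(speed, 24, angle)
    print(f"{angle} deg at 24fps: jump {step:.1f} px, smear {smear:.1f} px "
          f"({smear / step:.0%} of the jump blurred over)")
```

Longer shutters blur over more of each jump, which hides the strobing, but the same smear costs sharpness in anything the camera is not tracking, hence the reliance on tracking shots.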

Panavision Genesis One digital camera system: note the big knob for focus pulling


In television, there is often little control and no budget for a focus-puller. That is why they have to use higher picture rates. That will probably remain true in the future. Rates will have to go up in both cinema and television, but the disparity will remain.

One of the reasons most home movies are rubbish is that the owner of the miniature consumer camcorder doesn’t want to carry a tripod that weighs more than his camera and seemingly prefers having the image going all over the screen. The best accessory you can buy for a consumer camcorder is a cement block that adds some mass to keep it stable.

Eye tracking causes interlace to fail in television. The two fields that make a frame are presented at different times so to a moving eye the odd and even lines are never going to fit back together, and they don’t, except for marketing purposes. That HDTV programme you are watching with 1080 lines is interlaced; there are only 540 lines in each field and that’s the effective number of lines if there is any motion, but oddly enough the larger number is always the one that’s publicised.

Making pictures: the joys of interlaced scanning

A TV standard having only 720 lines, but where every line is present in every frame - so called ‘progressive scan’ - can easily outperform a system that acts as if it has only 540 lines. When the US was choosing its HDTV standards this was well known, and the standards body was informed formally by the likes of MIT, the US Military and the entire US computer industry that interlace was a dead duck. Such is the power of vested interests and steamrollering tactics that interlace was retained as a US standard for HDTV, even though it can’t display high definition except when the picture is essentially static.

You can only pull a trick like that once, and the growing body of knowledge about moving imaging has ensured that interlace rightly won’t be considered in any future standards.

That body of knowledge came about in a variety of places. Manufacturers of TV sets were interested in getting the best possible pictures out of what came from the broadcasters by increasing the display frame rate at the set. For sports programmes, there was demand for good slow motion replays. Increasing international sales of television programmes required high-performance standards convertors to get from 50 to 60fps or vice versa.

Finally, there was work on compression standards so that digital moving pictures could be delivered with lower bit rates. These diverse requirements all discovered the same thing: dealing with eye tracking is the dominant factor. The solution is called ‘motion compensation’.

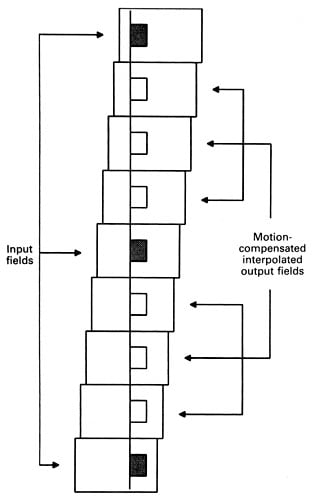

An example of a motion-compensated process. This might be a slow-motion process displaying video at quarter normal speed, or it might be an upmarket TV increasing the display rate to get rid of flicker. Note that the interpolated frames have to respect the motion, otherwise the viewer sees judder and loss of resolution.

The principle of motion compensation is to identify the axis along which the tracking eye would watch a moving object. This is called the optic flow axis and it’s not parallel to the time axis. Suppose you want a slow motion replay at one quarter the original speed.

Between the pictures you have, it is necessary to interpolate, or calculate, three new ones. The key is that to get smooth motion, you can’t interpolate entire pictures; you have to interpolate the motion. As Figure 2 shows, every object that moves between the two input pictures has to be displayed with one quarter, one half and three quarters of the overall motion in the intermediate pictures.
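The difference between interpolating pictures and interpolating motion can be shown in one dimension. In this sketch the object's positions in successive input pictures are assumed to be already known; a real interpolator has to find object boundaries and motion for itself, as the article goes on to explain. `slow_motion` is a hypothetical helper that either places the object along the optic flow axis or simply repeats the nearest input picture.

```python
def slow_motion(positions, factor=4, compensate=True):
    """Expand per-picture object positions into factor-times slow motion.

    compensate=True  : place the object k/factor of the way along its motion
                       (i.e. on the optic flow axis).
    compensate=False : no motion compensation; repeat the nearest input picture.
    """
    out = []
    for a, b in zip(positions, positions[1:]):
        for k in range(factor):
            if compensate:
                out.append(a + (b - a) * k / factor)
            else:
                out.append(a if k < factor / 2 else b)
    out.append(positions[-1])  # the final input picture
    return out

def steps(track):
    """Movement between successive output pictures."""
    return [b - a for a, b in zip(track, track[1:])]

positions = [100, 180, 260]  # assumed: object moves 80 px per input picture
print(steps(slow_motion(positions)))
# → [20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0, 20.0]
print(steps(slow_motion(positions, compensate=False)))
# → [0, 80, 0, 0, 0, 80, 0, 0]
```

The compensated output advances a steady 20 pixels per output picture, while the repeated version stands still and then leaps 80 pixels, which a tracking eye sees as judder.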

The eyes have it

The interpolator has to identify the boundaries of each moving object and move it the right distance so that it stays on the optic flow axis and looks good to the tracking eye. Exactly the same process has to be performed in a TV set that increases the display rate to avoid flicker.

By definition, the least motion of an object occurs with respect to the optic flow axis. If you are trying to build a compressor, the least motion translates to the smallest differences between successive pictures, leading to the smallest bit rate to describe motion. So MPEG coders send vectors to tell the decoder how much to shift parts of a picture to make it more similar to another picture.
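A toy version of how a coder might find such a vector: exhaustive block matching on a one-dimensional row of pixels, scoring each candidate shift by the sum of absolute differences (SAD). This is an assumption for illustration only. MPEG standards define how a decoder applies vectors, not how an encoder must search for them, and practical encoders work on two-dimensional blocks with much faster searches. The sign convention (a negative vector means "fetch from further left in the reference") is likewise chosen here for illustration.

```python
def sad(a, b):
    """Sum of absolute differences between two equal-length pixel runs."""
    return sum(abs(x - y) for x, y in zip(a, b))

def best_vector(reference, current, block_start, block_size, search_range):
    """Exhaustive search for the shift that best predicts a block of `current`.

    Returns (vector, cost): the block is predicted from
    reference[block_start + vector : block_start + vector + block_size].
    """
    block = current[block_start:block_start + block_size]
    best = None
    for d in range(-search_range, search_range + 1):
        start = block_start + d
        if start < 0 or start + block_size > len(reference):
            continue  # candidate would fall outside the reference picture
        cost = sad(block, reference[start:start + block_size])
        if best is None or cost < best[1]:
            best = (d, cost)
    return best

pattern = [10, 50, 90, 50, 10]
reference = [0] * 5 + pattern + [0] * 10  # previous picture: detail at pixels 5-9
current = [0] * 8 + pattern + [0] * 7     # next picture: detail moved 3 px right

print(best_vector(reference, current, block_start=8, block_size=5, search_range=4))
# → (-3, 0)
print(sad(current[8:13], reference[8:13]))  # cost if we assume no motion
# → 230
```

With the right vector the difference to be coded is zero, whereas assuming no motion leaves a SAD of 230, which is why sending a vector saves bits.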

Sony X9 4K KD-55X9005A: enjoy UHDTV just so long as the frog doesn't move

Distilling all this knowledge down into what to do for the future is simpler than might be thought. Every photographer knows that you need a short shutter time to freeze a moving object without blurring it, but if you try it in moving images, short shutter times just make the strobing more obvious. So in practice we need to increase the frame rate to make the strobing less visible and then we can use short shutter times to improve dynamic resolution. In the cinema, higher frame rates would allow projection without the multiple flashing that damages resolution.

Interestingly, because the dynamic resolution of traditional moving images is so poor, the static resolution due to the pixel count is never obtained. This suggests that raising the frame rate can be accompanied by a reduction in the number of pixels in each image, not just without any visible loss, but with a perceived improvement in resolution.

Half measures?

For example, if we reduced the static resolution of digital cinema to 70 per cent of what it presently is, nobody would notice because the loss is drowned in the blur due to poor motion portrayal and other losses. However, digital images are two dimensional, so the pixel count per frame would be 70 per cent of 70 per cent, which is near enough 50 per cent. Thus doubling the frame rate can be achieved with no higher data rate. All we are doing is using the data rate more intelligently. Half as many pixels per frame, twice as often, will give an obvious improvement.
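The arithmetic can be checked for a concrete case. The 2048 × 1080 "2K" frame at 24fps is an assumed starting point, and raw pixels per second stand in for data rate, ignoring compression.

```python
width, height, fps = 2048, 1080, 24  # assumed "2K" digital cinema starting point
scale = 0.7                           # 70 per cent of the resolution on each axis

new_width, new_height = round(width * scale), round(height * scale)
old_pixels = width * height
new_pixels = new_width * new_height

print(f"reduced frame: {new_width} x {new_height}")
print(f"{new_pixels / old_pixels:.0%} of the pixels per frame")
print(f"pixels/s, full frame at 24fps: {old_pixels * fps}")
print(f"pixels/s, reduced frame at 48fps: {new_pixels * 2 * fps}")
```

The reduced frame comes out at 1434 × 756, 49 per cent of the original pixel count, so the 48fps version needs slightly fewer pixels per second (52,036,992) than the 24fps original (53,084,160).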

There are some signs that the film and TV industries are waking up to this modern understanding of moving pictures. Some movies are being shot at raised frame rates, generally to audience approval. But the full potential is not being reached. Where movies are shot at 48fps, the shutter time may deliberately be kept long so that a 24fps version can be released by discarding every other frame. If the short shutter times that 48fps allows were used, the 24fps version would strobe.
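Why a long shutter at 48fps protects the 24fps version can be shown with the shutter-angle convention (exposure = angle/360 of the frame period), which is an assumption of this sketch rather than something the article specifies. Discarding every other frame keeps each exposure unchanged but doubles the frame period, so the effective shutter angle halves.

```python
def exposure_time(frame_rate, shutter_angle_deg):
    """Film convention: the shutter is open for angle/360 of each frame period."""
    return (shutter_angle_deg / 360.0) / frame_rate

def equivalent_angle(exposure_s, frame_rate):
    """Shutter angle that the same exposure represents at another frame rate."""
    return exposure_s * frame_rate * 360.0

for angle_at_48 in (360, 180):
    exposure = exposure_time(48, angle_at_48)
    # Discarding every other frame keeps each exposure but doubles the frame period.
    angle_at_24 = equivalent_angle(exposure, 24)
    print(f"48fps at {angle_at_48} deg (1/{round(1 / exposure)} s) "
          f"-> 24fps release behaves like {angle_at_24:.0f} deg")
```

Shot with a 360 degree shutter at 48fps, the 24fps release behaves like the conventional 180 degree film shutter. Shot at 180 degrees for sharper 48fps pictures, the 24fps release behaves like a 90 degree shutter, with correspondingly more visible strobing.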

Early work on a replacement for HDTV, called UHDTV, is considering 100 or 120 fps. But the static resolution fixation is still there. There is talk of broadcasting 4000 pixels across the screen at only 50/60Hz, which has to be the dumbest format ever. The abysmal dynamic resolution will restrict us to gardening programmes showing plant growth and the new sport of snail racing. ®

John Watkinson is an international consultant on digital imaging and the author of numerous books regarded as industry bibles.