Original URL: https://www.theregister.com/2013/05/08/intel_silvermont_microarchitecture/

Deep inside Intel's new ARM killer: Silvermont

Chipzilla's Atom grows up, speeds up, powers down

Posted in Personal Tech, 8th May 2013 00:07 GMT

Intel has released details about its new Silvermont Atom processor microarchitecture, and – on paper, at least – it appears that Chipzilla has a mobile market winner on its hands.

Yes, yes, we know: you've heard it all before, from Menlow to Moorestown to Medfield. Intel has made promise after promise that its next Atom-based platform would be its ticket into the mobile show, but – not to put too fine a point on it – they've failed.

Nimble, snappy, power-miserly chips based on the ARM architecture – from Qualcomm, Nvidia, Apple, Samsung, Texas Instruments, and others, including possibly your aunt Harriet – have simply eaten Intel's lunch in the mobile space during the long, slow years in which Chipzilla has attempted to move its x86 architecture down into the low-power market.

On Monday, however, the general manager of the Intel Architecture Group, Dadi Perlmutter, and Intel Fellow Belli Kuttanna gathered a group of journalists at their company's Santa Clara, California, headquarters, and "took the wraps off" Silvermont, the new Atom microarchitecture that they promise will finally allow Intel to crack the low-power chip market in a big way.

This time, it looks like they may very well be telling the truth – that is, of course, if Silvermont really does deliver, as they claim, a choice of three times the performance or one-fifth the power of the current-generation Atom compute core.

And remember, when we say "low-power" market, we're not simply talking about smartphones and tablets – although those hot commodities are clearly key to Silvermont's future. Intel's new Atom compute-core microarchitecture will indeed appear in the Bay Trail platform for tablets ("scheduled for holiday 2013") and the Merrifield platform for smartphones ("scheduled to ship to customers by the end of this year"), but it will also find a home in the Avoton microserver platform and the Rangeley network-equipment platform ("both ... scheduled for the second half of this year"), and an as-yet-unnamed automotive platform.

Linux, Windows, and Android, of course – and, naturally, Perlmutter wouldn't comment on iOS

In all of these platforms, Silvermont will bring a host of improvements to the Atom's compute-core architecture – an architecture that has remained essentially the same (with tweaks) since it was first announced in 2004. Code-named Bonnell, it shipped in 2008 at 45 nanometers, then was integrated into a system-on-chip, Saltwell, which shipped last year at 32nm.

Before we dig into an explication of the new architecture, we should first offer a word of thanks to the technology that makes it possible: Intel's FinFET wrap-around transistor implementation that it calls Tri-Gate. When we first wrote about that 22nm transistor technology back in May 2011, we noted that it might be Intel's last, best chance to crack the mobile market.

Now that Intel has created an implementation of the Tri-Gate transistor technology specifically designed for low-power system-on-chip (SoC) use – and not just using the Tri-Gate process it employs for big boys such as Core and Xeon – it's ready to rumble.

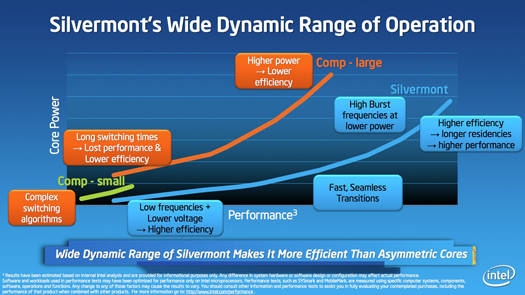

Tri-Gate has a number of significant advantages over tried-and-true planar transistors, but the one that's of particular significance to Silvermont is that when it's coupled with clever power management, Tri-Gate can be used to create chips that exhibit an exceptionally wide dynamic range – meaning that they can be turned waaay down to low power when performance needs aren't great, then cranked back up when heavy lifting is required.
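
To put rough numbers on that "dynamic range" idea, here is a minimal Python sketch of the textbook CMOS switching-power formula, P = α·C·V²·f. The operating points, activity factor, and capacitance are hypothetical illustrations, not Silvermont figures, and the model ignores leakage current, which is a large part of what Tri-Gate improves. It shows why turning a core down pays off so well: lowering the voltage along with the frequency cuts power far more than proportionally.

```python
# Rough CMOS switching-power model: P = alpha * C * V^2 * f.
# Operating points and constants are hypothetical; leakage is ignored.

def dynamic_power(alpha: float, capacitance: float, volts: float, hertz: float) -> float:
    """Switching power in watts: activity factor * capacitance * voltage^2 * frequency."""
    return alpha * capacitance * volts ** 2 * hertz


# Hypothetical (frequency in GHz, supply voltage in volts) points for one core,
# ordered from lowest to highest.
OPERATING_POINTS = [(0.5, 0.6), (1.0, 0.75), (2.0, 1.0)]


def relative_power_table(alpha: float = 0.2, capacitance: float = 1e-9):
    """Return (GHz, volts, power as a fraction of the highest point) for each point."""
    top_ghz, top_volts = OPERATING_POINTS[-1]
    peak = dynamic_power(alpha, capacitance, top_volts, top_ghz * 1e9)
    return [
        (ghz, volts, dynamic_power(alpha, capacitance, volts, ghz * 1e9) / peak)
        for ghz, volts in OPERATING_POINTS
    ]


if __name__ == "__main__":
    for ghz, volts, fraction in relative_power_table():
        print(f"{ghz:.1f} GHz at {volts:.2f} V -> {fraction:.1%} of peak switching power")
```

With these made-up points, running at a quarter of the top frequency draws about 9 per cent of peak switching power, because voltage enters the formula squared.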

This wide dynamic range, Kuttanna said, obviates the need for what ARM has dubbed a big.LITTLE architecture, in which a low-power core handles low-performance tasks, then hands off processing to a more powerful core – or cores – when the need arises for more oomph.

"In our case," he said, "because of the combination of architecture techniques as well as the process technology, we don't really need to do that. We can go up and down the range and cover the entire performance range." In addition, he said, Silvermont doesn't need to crank up its power as high as some of those competitors to achieve the same amount of performance.

Or, as Perlmutter put it more succinctly, "We do big and small in one shot."

Equally important is the fact that a wide dynamic range allows for a seamless transition from low-power, low-performance operation to high-power, high-performance operation without the need to hand off processing between core types. "That requires the state that you have been operating on in one of the cores to be transferred between the two cores," Kuttanna said. "That requires extra time. And the long switching time translates to either a loss in performance ... or it translates to lower battery life."

In addition, with a 22nm Tri-Gate process you can fit a lot of transistors and the features they enable into a small, power-miserly die – but that's so obvious we won't even mention it. Oops. Just did.

Little Atom grows up

But back to the microarchitecture itself. Let's start, as Kuttanna did in his deep-dive technical explanation of Silvermont, with the fact that the new Atom microarchitecture has changed from the in-order execution used in the Bonnell/Saltwell core to out-of-order (OoO) execution, as used in its more powerful siblings, Core and Xeon, and in most modern microprocessors.

OoO can provide significant performance improvements over in-order execution – and, in a nutshell, here's why. Both in-order and OoO get the instructions that they're tasked with performing in the order that a software compiler has assembled them. An in-order processor takes those instructions and matches them up with the data upon which they will be performed – the operand – and performs whatever task is required.

Unfortunately, that operand is not always close at hand in the processor's cache. It may, for example, be far away in main memory – or even worse, out in virtual memory on a hard drive or SSD. It might also be the result of an earlier instruction that hasn't yet been completed. When that operand is not available, an in-order execution pipeline must wait for it, effectively stalling the entire execution series until that operand is available.

Needless to say, this can slow things down mightily.

Not so with OoO. Like in-order execution, an OoO system gets its instructions in the order that a compiler has assembled them, but if one instruction gets held up while it's waiting for its operand, another instruction can step past it, grab its operand, and get to work. After all the relevant instructions and their operands are happily completed, the execution sequence is reassembled into its proper order, and all is well – and the processor has just become quite significantly more efficient.
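
The difference can be sketched with a toy, single-issue scheduler in Python. Everything here is a simplifying assumption rather than a model of Silvermont's real pipeline: the instruction names and latencies are invented, the out-of-order window is unlimited, and the step of putting results back into program order is left out. The in-order version lets a slow load hold up everything behind it; the out-of-order version keeps issuing independent work while it waits.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Instr:
    name: str
    latency: int                    # cycles until this instruction's result is ready
    deps: Tuple[str, ...] = ()      # names of earlier instructions whose results it needs


def in_order_cycles(program):
    """Single-issue, in-order: a stalled instruction also holds up everything behind it."""
    ready_at = {}                   # instruction name -> cycle its result is available
    next_issue = 0                  # earliest cycle the next instruction may issue
    for ins in program:
        start = max([next_issue] + [ready_at[d] for d in ins.deps])
        ready_at[ins.name] = start + ins.latency
        next_issue = start + 1      # one instruction issues per cycle, strictly in order
    return max(ready_at.values())


def out_of_order_cycles(program):
    """Single-issue, out-of-order, unlimited window: each cycle, issue the oldest
    instruction whose operands are ready, even if older ones are still waiting."""
    ready_at = {}
    pending = list(program)         # kept in program order, so the first match is the oldest
    cycle = 0
    while pending:
        for ins in pending:
            if all(d in ready_at and ready_at[d] <= cycle for d in ins.deps):
                ready_at[ins.name] = cycle + ins.latency
                pending.remove(ins)
                break               # only one instruction issues per cycle
        cycle += 1
    return max(ready_at.values())


# Hypothetical program: a slow load (cache miss) followed by independent work.
PROGRAM = [
    Instr("load_a", latency=10),                    # misses the cache
    Instr("add_a", latency=1, deps=("load_a",)),    # must wait for load_a
    Instr("load_b", latency=1),                     # independent cache hit
    Instr("mul_b", latency=3, deps=("load_b",)),
    Instr("add_c", latency=1),                      # independent
]

if __name__ == "__main__":
    print("in-order:", in_order_cycles(PROGRAM), "cycles")
    print("out-of-order:", out_of_order_cycles(PROGRAM), "cycles")
```

For this made-up program, the in-order model finishes in 15 cycles and the out-of-order model in 11, because the independent load, multiply, and add slip past the stalled addition.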

You might ask why all processors don't use OoO when it's obviously so much more productive. Well, the answer to that logical question is the sad fact of life that you don't get something for nothing. OoO requires more transistors – a processor's basic building blocks – and more transistors require both more room on the chip's die and more power.

To the rescue – again – comes Intel's 22nm Tri-Gate process. Now that Silvermont has moved to this new process, Intel's engineers decided that they now have the die real estate and power savings to move into the 21st century, and Silvermont will benefit greatly from that decision.

OoO is not the only major improvement in Silvermont. Branch prediction – a processor's ability to guess which way a conditional branch, such as an if-then-else decision, will go before the condition has actually been evaluated – has also been beefed up considerably. "With our branch-processing unit," Kuttanna said, "we improved the accuracy of our predictors such that when you have a branch instruction and you have a misprediction – hopefully which happens quite rarely – you can recover from the misprediction quickly."

A quick recovery will of course improve a processor's performance. "But more importantly," Kuttanna said, "when you recover quickly you can flush the instructions that were speculating down the wrong path in the machine quickly, and save the power because of it."
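
Intel didn't detail how Silvermont's predictors work, so as a generic illustration, here is the classic two-bit saturating-counter scheme found in computer-architecture textbooks, written in Python. It is not Silvermont's predictor; the class name, table size, and branch trace are hypothetical. Each branch address maps to a small counter that must be wrong twice in a row before it changes its mind, which keeps a loop branch from being mispredicted on both exit and re-entry.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating-counter branch predictor (not Silvermont's design).

    Each counter holds 0-3: values 0-1 predict 'not taken', 2-3 predict 'taken'.
    One surprise only nudges the counter, so it takes two wrong guesses in a row
    to flip a strongly held prediction.
    """

    def __init__(self, table_bits: int = 10):
        self.mask = (1 << table_bits) - 1
        self.counters = [1] * (1 << table_bits)    # start every slot weakly 'not taken'

    def predict(self, pc: int) -> bool:
        return self.counters[pc & self.mask] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = pc & self.mask
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def count_mispredictions(predictor: TwoBitPredictor, trace) -> int:
    """Replay (branch address, taken?) pairs and count wrong guesses."""
    misses = 0
    for pc, taken in trace:
        if predictor.predict(pc) != taken:
            misses += 1    # a real pipeline would flush wrong-path work here
        predictor.update(pc, taken)
    return misses


# Hypothetical trace: a loop branch at address 0x40, taken 9 times then not taken,
# with the whole loop run three times.
LOOP_TRACE = [(0x40, i < 9) for _ in range(3) for i in range(10)]

if __name__ == "__main__":
    misses = count_mispredictions(TwoBitPredictor(), LOOP_TRACE)
    print(misses, "mispredictions in", len(LOOP_TRACE), "branches")
```

Over three runs of a ten-iteration loop (30 branches), this predictor guesses wrong four times: once while warming up and once at each loop exit. Each of those misses is where a real pipeline would flush speculative work, the very power cost Kuttanna describes.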

Saving power is a Very Big Deal in the Silvermont architecture – after all, Atom's raison d'être is low power consumption. One of the many new features that Silvermont includes is its ability to fuse some instructions together so that, essentially, one operation accomplishes multiple tasks.

"The key innovation on Silvermont," Kuttanna said, "is that we take the [Intel architecture] instructions that we call macro operations, which have a lot of capability built-in within the instructions, and we execute those instructions in the pipeline as if they're fused operations."

Typically in Intel architecture – IA, for short – you have operations that load an operand, operate on it, then store the result. In Silvermont, Kuttanna said, most of those operations can be handled in a fused fashion, so the architecture can be built without wide, power-hungry pipelines and still achieve good performance. "Using the fused nature of these instructions," he said, "we're able to extract the power efficiency out of our pipeline – and that's one of our key innovations in this out-of-order execution pipeline."
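
Here is a back-of-the-envelope Python sketch of why that matters for a narrow pipeline. The instruction classifications, the "cracked" entry counts, and the two-wide pipeline are assumptions for illustration; Intel's actual decode and fusion rules for Silvermont weren't disclosed in this detail. The sketch compares splitting each memory-touching instruction into separate load, operate, and store steps against carrying it through the pipeline as one fused entry.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class X86Instr:
    text: str
    alu: bool = False          # does arithmetic/logic work
    reads_mem: bool = False    # has a memory source operand
    writes_mem: bool = False   # writes its result back to memory


def cracked_entries(ins: X86Instr) -> int:
    """Hypothetical 'cracked' scheme: load, ALU op, and store each take a pipeline entry."""
    return max(1, int(ins.alu) + int(ins.reads_mem) + int(ins.writes_mem))


def fused_entries(ins: X86Instr) -> int:
    """Hypothetical fused scheme: the whole instruction travels as one entry."""
    return 1


def cycles_needed(program, entries_fn, width: int = 2) -> int:
    """Cycles to push all entries through a pipeline that accepts `width` per cycle."""
    total = sum(entries_fn(ins) for ins in program)
    return math.ceil(total / width)


X86_SAMPLE = [
    X86Instr("mov eax, [rsi]", reads_mem=True),                              # plain load
    X86Instr("add eax, [rdi]", alu=True, reads_mem=True),                    # load + add
    X86Instr("add [rbx], eax", alu=True, reads_mem=True, writes_mem=True),   # load + add + store
    X86Instr("inc ecx", alu=True),                                           # register only
]

if __name__ == "__main__":
    for label, fn in (("cracked", cracked_entries), ("fused", fused_entries)):
        total = sum(fn(ins) for ins in X86_SAMPLE)
        print(f"{label}: {total} entries, {cycles_needed(X86_SAMPLE, fn)} cycles at 2 per cycle")
```

For these four instructions, the cracked approach needs seven pipeline entries (four cycles at two per cycle), while the fused approach needs four (two cycles).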

There have also been improvements made to the latencies in the execution units themselves, as well as to latencies to and from the high-speed first- and second-level caches. Kuttanna also promised that Silvermont's memory-access latencies have been improved, partly thanks to better hardware-based prefetching, which stuffs data from memory into the level-two (L2) caches when it predicts that the data will soon be needed.
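
As a rough illustration of the prefetching idea, here is a toy stride prefetcher in Python. It is a common textbook scheme, not Intel's disclosed design: it assumes an unbounded cache, cache-line-sized addresses, and a hypothetical prefetch "degree" (how many lines ahead it fetches). Once it sees the same gap between consecutive accesses twice in a row, it fetches the next lines along that stride before they are requested.

```python
from typing import Optional, Set


class StridePrefetcher:
    """Toy stride prefetcher (a textbook scheme, not Intel's design).

    After seeing the same address gap twice in a row, it pulls the next
    `degree` addresses along that stride into a simulated, unbounded cache.
    """

    def __init__(self, degree: int = 2):
        self.degree = degree
        self.last_addr: Optional[int] = None
        self.last_stride: Optional[int] = None
        self.cache: Set[int] = set()

    def access(self, addr: int) -> bool:
        """Look up `addr`; return True on a hit. Then train and maybe prefetch."""
        hit = addr in self.cache
        self.cache.add(addr)                             # demand fill (no-op on a hit)
        if self.last_addr is not None:
            stride = addr - self.last_addr
            if stride != 0 and stride == self.last_stride:
                for k in range(1, self.degree + 1):
                    self.cache.add(addr + k * stride)    # fetch ahead of demand
            self.last_stride = stride
        self.last_addr = addr
        return hit


LINE = 64   # assumed cache-line size in bytes

if __name__ == "__main__":
    for degree in (0, 2):
        pf = StridePrefetcher(degree)
        hits = sum(pf.access(LINE * i) for i in range(10))
        print(f"degree {degree}: {hits} hits out of 10 sequential line accesses")
```

Walking through ten consecutive 64-byte lines, the first three accesses miss while the prefetcher learns the stride, and the remaining seven hit; with a degree of zero, all ten miss.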

"The whole thing is around the theme of building a balanced system," Kuttanna said. "It's not about just delivering a great core. You gotta have all the supporting structure to deliver that performance at the system level."

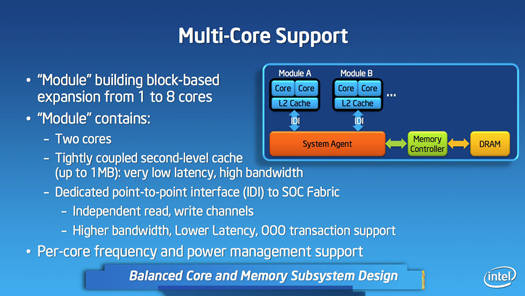

That supporting structure will, well, support Silvermont varieties of up to eight cores. Those cores will be grouped in pairs, with each pair sharing an L2 cache (although a single-core version was also mentioned). Those caches will communicate with what Intel calls a "System Agent" – what some of you might also call a Northbridge – over a point-to-point link called an in-die interconnect (IDI).

The System Agent – as did a Northbridge – links the compute cores to a system's main memory. One big difference between Silvermont and Bonnell/Saltwell is that those earlier microarchitectures connected their compute cores to the rest of the system over a frontside bus (FSB). IDI is a big improvement for a number of reasons, not least of which is that it's snappier than FSB.

In addition, the clock rate of an FSB is closely tied to the clock rate of the compute core – if the compute core slows down, so does the FSB. IDI allows a lot more flexibility, and it can thus be dynamically adjusted for either power savings or performance gains.

There's that power-saving meme again.

Remember hyperthreading? Fuggedaboutit

We should note that one thing Silvermont has dropped from its predecessor microarchitectures is the ability to run two instruction threads through a core simultaneously. "With Saltwell," Kuttanna said, "we had hyperthreading on the cores. We had four threads operating on two physical cores. With Silvermont we dropped the support for hyperthreading because it wasn't the right trade-off for us with Silvermont where we were ... going after higher single-thread [instructions per clock cycle (IPC)]."

According to Kuttanna, the "return on investment" for keeping hyperthreading in Silvermont wasn't worth it, and the microarchitecture's designers chose instead to raise that all-important single-thread IPC by adding OoO processing, and to boost multithreaded throughput by increasing the number of compute cores in high-end parts.

Silvermont's modular architecture will pack up to eight cores, two by two – or one

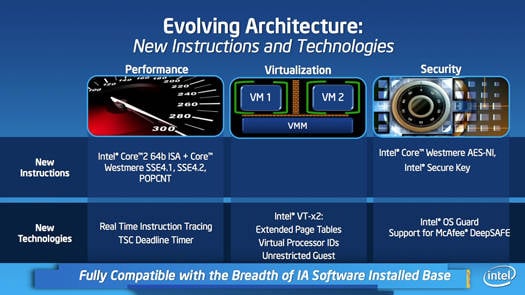

In addition to maturing with such additions as OoO and improved branch prediction, Silvermont also grows up with the addition of some new instructions. "We had the luxury of looking at what's already developed within this mature architecture we have with IA," Kuttanna said, "and focus on what are the ingredients that actually will be useful in the market segments that we are trying to target with the Silvermont-based products."

Among those ingredients that Intel has added to Silvermont are the Streaming SIMD (single instruction, multiple data) Extensions from the Westmere-level Core instruction set, namely SSE4.1 and SSE4.2, along with the POPCNT instruction, plus the AES-NI instructions that accelerate encryption and decryption for enhanced security.

Hardware-level virtualization capabilities are also enhanced with the addition of Intel's second-generation VT-x2, which adds capabilities such as extended page tables that allow the guest operating system within a particular platform to manage its memory space more efficiently, Kuttanna said.

"We also have other features such as virtual processor IDs," he said, "that allow us to retain some of the states within our [translation lookaside buffers (TLBs)] when you're going through a [virtual machine] transition." And if that's a bit opaque for you, don't break your brain trying to figure it out, just know that it'll make life easier for developers working on virtualization implementations for Silvermont systems.
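
For the curious, here is a toy Python model of what VPID tagging buys. The class, the guests, and the page-table contents are hypothetical, and the TLB is unbounded. Without tags, every switch between virtual machines must flush the TLB, because one guest's virtual page 0 is not another guest's virtual page 0; with tags, entries for several guests coexist and survive the switch, so fewer costly page-table walks are needed.

```python
from typing import Dict, Tuple


class TaggedTLB:
    """Toy, unbounded TLB; `use_vpid` toggles tagging entries with a virtual-processor ID."""

    def __init__(self, use_vpid: bool):
        self.use_vpid = use_vpid
        self.entries: Dict[Tuple[int, int], int] = {}   # (vpid, virtual page) -> physical page
        self.current_vpid = 0
        self.misses = 0

    def switch_to(self, vpid: int) -> None:
        """Model a VM transition to the guest identified by `vpid`."""
        if not self.use_vpid:
            self.entries.clear()    # untagged entries can't be told apart, so flush them all
        self.current_vpid = vpid

    def translate(self, vpage: int, page_table: Dict[int, int]) -> int:
        key = (self.current_vpid, vpage)
        if key not in self.entries:
            self.misses += 1        # stands in for a slow page-table walk
            self.entries[key] = page_table[vpage]
        return self.entries[key]


def count_misses(use_vpid: bool, rounds: int = 3) -> int:
    """Two hypothetical guests take turns, each touching virtual pages 0-3."""
    guests = {1: {p: 100 + p for p in range(4)}, 2: {p: 200 + p for p in range(4)}}
    tlb = TaggedTLB(use_vpid)
    for _ in range(rounds):
        for vpid, table in guests.items():
            tlb.switch_to(vpid)
            for page in range(4):
                tlb.translate(page, table)
    return tlb.misses


if __name__ == "__main__":
    print("without VPIDs:", count_misses(False), "misses")
    print("with VPIDs:   ", count_misses(True), "misses")
```

Two guests, each touching four pages and swapping back and forth three times, incur 24 TLB misses in the untagged model but only 8 with VPID tags.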

Speaking of gearheads, their lives should also be made easier by the addition of new debugging tools. "We've introduced a new technology with Silvermont called Real Time Instruction Tracing that we'll make available to our OEM partners to help them debug both the hardware and software that's running on these platforms," Kuttanna said.

Okay, so Silvermont has a wide range of improvements over its Atom predecessors – but what will all those tweaks add up to in terms of performance? Quite a bit, if Perlmutter and Kuttanna are to be believed. As we mentioned earlier, Intel claims an overall 3x improvement in performance or a fivefold reduction in power consumption.

As for exact clock speeds, wattages, core-counts, and the like, they'll be announced along with the upcoming platform announcements. "When we talk about Bay Trail, Merrifield, et cetera, we'll give you the specific power and performance numbers," Kuttanna said.

Both presenters at the Silvermont rollout emphasized that simply counting cores in mobile processors is not a useful way of determining performance – and, of course, since they were debuting their new Atom core, they could be expected to make that point. But they were adamant.

"When it comes to cores, especially in the mobile space and phone," Kuttanna said, "there's a trend to be increasing the number of cores and providing the perception that it always leads to better performance." Not so, he said, displaying a slide that showed a dual-core Silvermont far outpacing an unnamed quad-core competitor in performance per watt.

The title of that slide, by the way, was "Not All Cores Are Created Equal".

"It's all about how you design your cores and the operating range of your cores to take advantage of the sweet spot in that operating range," Kuttanna said – the sweet spot meaning good performance at a reasonable power draw. "Our dual-core solutions can actually outperform the quad-core solutions."

Again, Perlmutter was more matter-of-fact. "You could put in eight lawn mowers and you could not fly a jet," he said.
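
Amdahl's law, a standard rule of thumb that Intel didn't cite, offers one way to see how fewer, faster cores can beat more, slower ones. The Python sketch below uses hypothetical per-core speeds and workload mixes, not benchmark data, and covers only performance, not power: it multiplies each core's speed by the speedup available when only a fraction of the work can run in parallel.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of the work can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def relative_performance(per_core_speed: float, cores: int, parallel_fraction: float) -> float:
    """Per-core speed (relative to a baseline core) times the Amdahl speedup."""
    return per_core_speed * amdahl_speedup(parallel_fraction, cores)


if __name__ == "__main__":
    # Hypothetical chips: two faster cores versus four slower ones.
    for p in (0.5, 0.95):
        dual = relative_performance(1.5, 2, p)
        quad = relative_performance(1.0, 4, p)
        print(f"{p:.0%} parallel: dual-core {dual:.2f}, quad-core {quad:.2f}")
```

With a hypothetical dual-core chip whose cores are 1.5 times as fast, and a workload that is half parallel, the dual-core scores 2.0 against the quad-core's 1.6. The comparison flips for highly parallel work: at 95 per cent parallel, the quad-core pulls ahead, so the real answer depends on the workload.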

In that vein, we'll find out soon enough if Silvermont will enable Intel's mobile ambitions to take off, but – conceptually, at least – it appears that liftoff might finally be possible after years of acceleration down what has proven to be a long, long runway. ®