Original URL: https://www.theregister.com/2013/03/26/object_recognition/

Roomba dust-bust bot bods one step closer to ROBOBUTLERS

Crisp packets, used tissues no problem for 10-GFLOPS brain

Posted in Personal Tech, 26th March 2013 11:00 GMT

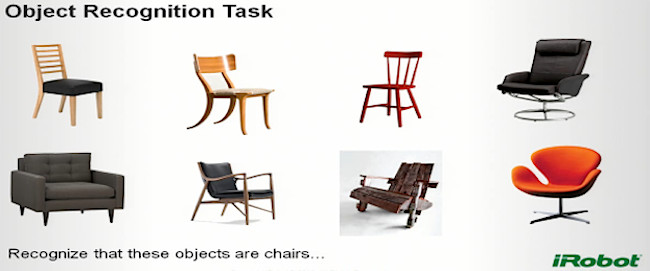

GTC 2013 We know that all of the objects in the picture below are chairs, right? But show this picture to a computer and see what happens. Getting computers to recognise generic objects is a hugely difficult task that’s complicated by variations of the same object (club chairs vs. office chairs vs. folding chairs) and by other objects in the computer’s field of view that can confuse the machine.

Wait, why would a computer need to sit on a chair? (Source: iRobot)

Researchers at iRobot have been working hard on object recognition. iRobot is best known as the company behind the Roomba roving dust-buster bot, but it has been doing a lot more than just moving around the dander.

At last week’s GPU Technology Conference in California, a team from iRobot claimed to have developed the first real-time generic object recognition algorithm. It builds on the Deformable Part Model (DPM), which rests on the idea that objects are made of parts, and that the way those parts are positioned in relation to one another is what distinguishes a person from a chair or a car from a boat.

The "deformable" term in the name of this model is key – it refers to the ability of this model to understand that parts of the same object will look very different when viewed from various angles. One example used in the academic paper Object Detection with Discriminatively Trained Part-Based Models [PDF] (by Felzenszwalb, Girshick, McAllester and Ramanan) shows how the model can correctly identify a bicycle whether it is viewed head-on or from the side.
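To make the parts-and-positions idea concrete, here is a minimal Python sketch of the scoring rule described in the Felzenszwalb paper: a candidate detection scores the response of a whole-object "root" filter plus, for each part, the best part-filter response found near that part's expected position, minus a penalty for how far the part has drifted. This is not iRobot's code. The function names, the toy 5×5 response maps, the search radius and the single deformation weight are all assumptions for illustration; the paper itself uses learned filters over image features and a richer deformation cost.

```python
def best_part_score(response, anchor, deform_weight, radius=2):
    """Best score for one part: filter response minus a quadratic
    penalty for drifting away from the part's anchor position."""
    anchor_y, anchor_x = anchor
    best = float("-inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = anchor_y + dy, anchor_x + dx
            if 0 <= y < len(response) and 0 <= x < len(response[0]):
                penalty = deform_weight * (dx * dx + dy * dy)
                best = max(best, response[y][x] - penalty)
    return best


def dpm_score(root_response, parts):
    """Root score plus the best deformed placement of every part."""
    return root_response + sum(
        best_part_score(resp, anchor, weight) for resp, anchor, weight in parts
    )


def toy_map(peak_at, value, size=5):
    """Hypothetical part-filter response map: zero except for one peak."""
    grid = [[0.0] * size for _ in range(size)]
    grid[peak_at[0]][peak_at[1]] = value
    return grid


# Part A responds strongly one cell to the right of its anchor, so a small
# shift pays off. Part B's only response is far away in a corner, so moving
# there costs more than it gains and the part stays at its anchor.
parts = [
    (toy_map((2, 3), 3.0), (2, 2), 0.5),
    (toy_map((0, 0), 1.0), (2, 2), 0.5),
]
total = dpm_score(1.0, parts)
print(total)  # 3.5
```

The deformation penalty is what lets a part move a little to fit a different viewing angle without letting it wander anywhere in the image.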

A hundred-thousand calculations per pixel or 10 billion per image

Not surprisingly, the concepts and maths that are the foundation of the DPM are highly complex. But they make the DPM robust when dealing with viewing angles, different scales, cluttered fields of view, and other visual noise that would confound other algorithms. Training consists of showing the model about 1,000 examples of a particular class of objects. Once trained, it can identify complex objects in that class with a high rate of accuracy. As long as you don’t need to do it quickly, that is.

Running DPM is highly compute-intensive. Each pixel requires about 100,000 floating-point operations, 10,000 reads from memory and 1,000 floating-point values stored. A VGA image consumes roughly 10 billion floating-point ops, loads a billion floats and stores 100 million. For more context, using the LINPACK benchmark as a metric, an iPad 2 can crank out 1.65 billion floating-point ops a second, while a middling desktop i5 system can drive around 40 billion. So while it’s compute-intensive, it’s not insurmountable.
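As a back-of-the-envelope check, the short Python sketch below turns those figures into seconds per image. It assumes each device sustains its LINPACK rate on this workload (real code rarely does), reads the i5 figure as 40 billion floating-point ops a second, and takes VGA to mean 640×480. The variable and function names are illustrative only.

```python
FLOPS_PER_PIXEL = 100_000   # per-pixel figure quoted in the article
FLOPS_PER_IMAGE = 10e9      # per-image (VGA) figure quoted in the article
IPAD2_FLOPS = 1.65e9        # LINPACK rate quoted for an iPad 2
I5_FLOPS = 40e9             # assumed reading of "around 40": 40 billion a second


def seconds_per_image(flops_per_image, flops_per_second):
    """Lower bound on time per image if the chip ran at its LINPACK rate."""
    return flops_per_image / flops_per_second


ipad_seconds = seconds_per_image(FLOPS_PER_IMAGE, IPAD2_FLOPS)
i5_seconds = seconds_per_image(FLOPS_PER_IMAGE, I5_FLOPS)

# Cross-check: multiplying the per-pixel figure by a 640x480 frame gives a
# total in the tens of billions, the same order of magnitude as the rounded
# 10 billion quoted per image.
vga_pixels = 640 * 480
per_pixel_total = FLOPS_PER_PIXEL * vga_pixels

print(f"iPad 2: about {ipad_seconds:.1f} s per image")
print(f"Desktop i5: about {i5_seconds:.2f} s per image")
print(f"Per-pixel estimate: {per_pixel_total / 1e9:.1f} billion ops")
```

On these numbers the tablet needs several seconds per frame and the desktop a fraction of a second, which is why the workload is heavy but not out of reach.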

Not insurmountable, that is, unless you’re talking about high-definition images, which would ramp up the number of compute operations by at least a factor of four. Or if you’re using a lot of object classes or trying to identify many different objects in the same image. This can increase computational intensity by orders of magnitude, taking it out of the realm of problems that can be easily handled with small systems.
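A rough sketch of how that growth compounds, under two loudly stated assumptions: that cost scales linearly with pixel count, and that each extra object class costs about as much as the first (the article does not say whether its 10 billion figure covers one class or several). The resolutions and names below are illustrative, not taken from iRobot's talk.

```python
VGA = (640, 480)
FULL_HD = (1920, 1080)      # one common "high-definition" resolution
BASE_FLOPS = 10e9           # article's figure for a VGA image


def pixel_ratio(resolution, base=VGA):
    """How many times more pixels a resolution has than the base."""
    return (resolution[0] * resolution[1]) / (base[0] * base[1])


def scaled_flops(base_flops, resolution, num_classes):
    """Assumed model: cost grows linearly with pixels and with classes."""
    return base_flops * pixel_ratio(resolution) * num_classes


hd_ratio = pixel_ratio(FULL_HD)
hd_20_classes = scaled_flops(BASE_FLOPS, FULL_HD, 20)

print(hd_ratio)        # 6.75
print(hd_20_classes)   # 1350000000000.0
```

Under these assumptions a 1080p frame checked against 20 classes needs over a trillion operations, two orders of magnitude beyond the single VGA figure.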

To speed up the process and bring it to real time, the iRobot guys looked at various accelerators, specifically graphics processors, FPGAs and DSPs. Their goal was to identify which components offered the best performance per watt and the best rate of improvement in performance per watt over time. They found that all three technologies were in the same ballpark.

But the programmers opted for GPUs because, for them, the chips were easier to develop for than FPGAs and DSPs, as the team was comfortable writing C++ code and compiling it for Nvidia's CUDA system.

In their implementation, they ran almost every function on the GPU, using the CPU primarily to read in the image and transfer it to the GPU. Their first attempt, using 20 different object classes, benchmarked at 1.5 seconds, which was 40 times faster than their CPU-only implementation. However, it was a disappointment when compared with what the CPU+GPU hardware could potentially deliver, at only 6 per cent memory efficiency and 3 per cent computational efficiency. So, back to the drawing board to see what could be done.

The first task was to optimise the kernel and reduce kernel calls – which paid off in a big way. Memory efficiency was increased to 22 per cent of potential, and computational efficiency more than tripled to 10 per cent. Overall, these optimisations dropped the time to identify 20 classes of objects from 1.5 seconds to 0.6 seconds, a 2.5-times improvement over their first GPU effort, and 100 times faster than what they could do with CPUs alone.
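The quoted figures hang together, as this small Python check shows. It uses only numbers from the article; the implied CPU-only time is derived here, not something iRobot reported, and the variable names are illustrative.

```python
import math

first_gpu_s = 1.5     # first GPU attempt, 20 object classes
tuned_gpu_s = 0.6     # after kernel optimisation

# Both speed-up claims should imply the same CPU-only baseline.
cpu_from_first = first_gpu_s * 40     # "40 times faster" than CPU
cpu_from_tuned = tuned_gpu_s * 100    # "100 times faster" than CPU

gpu_gain = first_gpu_s / tuned_gpu_s  # improvement between GPU versions
memory_gain = 22 / 6                  # memory efficiency, per cent of peak
compute_gain = 10 / 3                 # computational efficiency, per cent of peak

print(f"Implied CPU-only time: about {cpu_from_first:.0f} s")
print(f"Baselines agree: {math.isclose(cpu_from_first, cpu_from_tuned)}")
print(f"GPU-to-GPU speed-up: about {gpu_gain:.1f}x")
print(f"Memory efficiency gain: about {memory_gain:.1f}x")
print(f"Compute efficiency gain: about {compute_gain:.1f}x")
```

Both claims point to a CPU-only time of about a minute per image, and even the tuned version still leaves roughly 90 per cent of the GPU's computational potential unused.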

To drive home the real-time part of their achievement, the iRobot folks showed a video of an experiment in which objects were placed in front of a camera attached to a laptop – not just any laptop, but an Alienware equipped with dual Nvidia GeForce GTX 580M GPUs. The system worked very quickly, identifying model cars, planes, and other objects often before they were completely out of the hand of the experimenter.

Using iRobot's research to search and destroy… household clutter

The system could also identify disparate items, such as tubes of crisps and bog roll, when they were placed in cluttered backgrounds, and could correctly identify an object despite seeing only part of it (such as the legs and torso of a horse). Compound objects, like a horse and rider, were also quickly labelled.

It’s a great demonstration of just how far machine vision has come. There are many applications for this kind of technology, particularly when it can be accomplished in real time, as demonstrated by iRobot in its GTC 2013 session. It’s far more important and potentially life-changing than the ability to identify Pringles and toilet paper, of course. It could increase point-of-sale accuracy and speed by identifying items at the checkout that aren’t easily bar coded or scanned, or don’t have RFID chips. Plus it could allow very fast tracking or sorting of objects speeding by on a conveyor belt in a warehouse, while allowing haphazard item placement. ®