Original URL: https://www.theregister.com/2013/03/25/sgi_total_pangea_ice_x_supercomputer/

Oil giant Total shells out €60m for world's fastest private super

2.3 petaflops now, double that in 2015

Posted in HPC, 25th March 2013 20:38 GMT

Total Group wants to do a better job finding oil lurking in the Earth's crust, and these days that means getting more computing power to turn the jiggling of the planet into pretty 3D pictures that show where the Black Gold might be hiding. To that end, Total is paying Silicon Graphics €60m over the next four years to build the largest privately owned supercomputer in the world – and to keep it in that position.

The plan for the "Pangea" supercomputer, which will be installed at Total's Jean Feger Scientific and Technical Centre (CSTJF) in southwest France, is to build a CPU-only system based on SGI's ICE X systems. The ICE X machines were previewed at the SC11 supercomputer conference back in November 2011 and shipped the following spring, after the launch of Intel's Xeon E5-2600 processors, on which the clusters are based.

SGI, you will recall, was a little too eager to peddle the ICE X machines ahead of that rollout. It bragged that it had booked $90m in orders for the machines in January 2012, but many of those initial deals were either unprofitable or only marginally profitable, which led SGI to restructure and bring in a new CEO a month later. Presumably Jorge Titinger, who now runs SGI and who put a firewall around those unprofitable deals, is making sure SGI makes some dough on the Pangea super going to Total.

The Pangea supercomputer at Total in France will be one of Europe's most powerful machines

Total currently has an SGI Altix ICE 8200EX supercomputer based on quad-core Xeons and clustered with InfiniBand networks, which runs its applications on SUSE Linux Enterprise Server 10 plus the ProPack math library extensions from SGI.

That cluster has 10,240 cores and a peak theoretical performance of 122.9 teraflops, and it burns 442 kilowatts, giving it a power efficiency of 278 megaflops per watt. It dates from 2008, when it was the 11th most powerful machine in the world, but it is not particularly impressive by today's Top500 standards.

While Total is getting a serious upgrade to its computing capacity, the more sophisticated cooling systems that SGI has cooked up for the ICE X machines are perhaps equally important. These supers come with a number of different cooling options.

The "Dakota" two-socket blade servers used in the ICE X clusters pack 72 blades in a rack and use open-loop air cooling for the racks. If you want to do a better job controlling airflow in the data center (or, more to the point, you have no choice because you have to eliminate every inefficiency you can), then you can wrap four of the ICE X server racks in an outer skin called a D-Cell. It basically turns these four racks into a self-contained baby data center (thermodynamically speaking), and you can do closed-loop cooling inside the cell skin and use warm water cooling to remove the heat from the cell itself.

If you want to push compute density, then you go with the doubled-up "Gemini" IP115 server blades, which sandwich either an air-cooled or water-cooled heat sink onto the Xeon processors. The Gemini blades have half as many memory and disk slots as the Dakota blades, which may be a lot to give up for some HPC shops, but they cram twice as many server nodes into a rack, which is a very big deal.

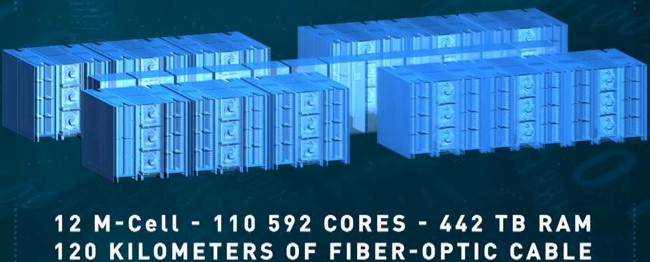

If you go with the four-rack wrapper around the Gemini blade racks, which SGI calls an M-Cell, then you can get 576 server nodes in 80 square feet of space. For Pangea, Total is going for the density and is opting for the Gemini blades and the M-Cell enclosures. That works out to 72 blade servers with 288 processors and about 9.2TB of memory per rack.
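The density claims hang together arithmetically. Here is a minimal sketch that recomputes them; the one-node-per-Dakota-blade and two-nodes-per-Gemini-blade figures are our inference from "two-socket blade servers", "twice as many server nodes" and the 288 processors per rack, not SGI specifications, and the constant names are purely illustrative.

```python
# Nodes and sockets per ICE X rack and per four-rack cell, from the article's figures.
BLADES_PER_RACK = 72
SOCKETS_PER_NODE = 2                           # two-socket Xeon E5 nodes
NODES_PER_BLADE = {"dakota": 1, "gemini": 2}   # inferred: Gemini doubles up nodes per blade
RACKS_PER_CELL = 4                             # a D-Cell or M-Cell wraps four racks

def rack_counts(blade: str) -> tuple[int, int]:
    """Return (nodes, sockets) for one rack full of the given blade type."""
    nodes = BLADES_PER_RACK * NODES_PER_BLADE[blade]
    return nodes, nodes * SOCKETS_PER_NODE

for blade in ("dakota", "gemini"):
    nodes, sockets = rack_counts(blade)
    print(f"{blade}: {nodes} nodes, {sockets} sockets per rack, "
          f"{nodes * RACKS_PER_CELL} nodes per cell")
```

The Gemini line reproduces the 288 processors per rack and 576 nodes per M-Cell quoted above; the Dakota line shows the halved density you trade for the extra memory and disk slots.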

The M-Cell has four of these racks plus two water cooling jackets. Take a dozen of these and lash them together with an FDR InfiniBand network from Mellanox Technologies, and you have a system with 110,592 cores and 442TB of main memory linked with over 120 kilometers of fiber optic cable. (OK, look, the French presentation actually spelt it fiber. Go figure...) The whole shebang will have a peak theoretical performance of around 2.3 petaflops, generated solely from eight-core Xeon E5-2670 processors spinning at 2.6GHz.
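As a rough check on the headline number, peak theoretical performance is just cores times clock times floating point operations per cycle. This sketch assumes eight double-precision flops per cycle per core, which is what AVX on this generation of Xeon E5 can issue (one four-wide add plus one four-wide multiply); the constant names are illustrative.

```python
# Peak theoretical performance of Pangea = cores x clock x flops per cycle.
M_CELLS = 12
RACKS_PER_M_CELL = 4
SOCKETS_PER_RACK = 288
CORES_PER_SOCKET = 8          # eight-core Xeon E5-2670
CLOCK_GHZ = 2.6
DP_FLOPS_PER_CYCLE = 8        # assumption: AVX, one 4-wide add plus one 4-wide multiply per cycle
MEMORY_TB = 442

racks = M_CELLS * RACKS_PER_M_CELL
cores = racks * SOCKETS_PER_RACK * CORES_PER_SOCKET
peak_pflops = cores * CLOCK_GHZ * DP_FLOPS_PER_CYCLE / 1e6   # gigaflops -> petaflops

print(cores)                                            # 110592
print(f"{peak_pflops:.2f} petaflops")                   # 2.30 petaflops
print(f"{MEMORY_TB / racks:.1f}TB of memory per rack")  # 9.2TB of memory per rack
```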

If you wanted to go with air cooling only on the Gemini blades, you would have to stick with 95 watt Xeon parts, but by going with the water-cooled heat sinks, you can jump up to 115 watt parts. You cannot go nuts and use 130 watt Xeon E5 parts in a Gemini blade, however.
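The thermal rule of thumb above can be written down as a simple lookup. This is a hypothetical helper, not anything from SGI's tooling; the wattage ceilings come straight from the paragraph, and the E5-2670 that Pangea uses is a 115-watt part.

```python
# Maximum Xeon E5 power rating (watts) a Gemini blade accepts, per cooling option.
MAX_TDP_WATTS = {"air": 95, "water": 115}

def part_fits(tdp_watts: int, cooling: str) -> bool:
    """True if a part with this rated power can go in a Gemini blade with this cooling."""
    return tdp_watts <= MAX_TDP_WATTS[cooling]

print(part_fits(115, "water"))  # True: the 115-watt E5-2670 with water-cooled heat sinks
print(part_fits(115, "air"))    # False: air cooling tops out at 95 watts
print(part_fits(130, "water"))  # False: 130-watt parts are out either way
```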

Water will come into each M-Cell on Pangea at 25°C and exit at 35°C, and the water courses through its veins at 250 cubic meters per hour to draw the heat off the processors. The plan is to capture the heat generated by the supercomputer and heat the surrounding Scientific and Technical Center. (You might want to overclock a rack or two and put in a hot tub.)
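The flow and temperature figures also say how much heat the loop can carry, via Q = mass flow × specific heat × temperature rise. The sketch below assumes round textbook values for water (1,000kg per cubic meter, 4,186 J/kg·K). At 250 cubic meters per hour and a 10°C rise it works out to roughly 2.9MW, in the same ballpark as the 2.8 megawatts quoted for the whole system, which suggests (our reading, not something the presentation states) that the flow figure is a system-wide total rather than per M-Cell.

```python
# Heat carried by a water loop: Q = mass flow x specific heat x temperature rise.
WATER_DENSITY_KG_PER_M3 = 1000.0   # round textbook value
WATER_CP_J_PER_KG_K = 4186.0       # round textbook value for liquid water

def heat_removed_mw(flow_m3_per_h: float, t_in_c: float, t_out_c: float) -> float:
    """Return the heat (in megawatts) a water loop removes at a given flow and temperature rise."""
    mass_flow_kg_per_s = flow_m3_per_h * WATER_DENSITY_KG_PER_M3 / 3600.0
    watts = mass_flow_kg_per_s * WATER_CP_J_PER_KG_K * (t_out_c - t_in_c)
    return watts / 1e6

print(f"{heat_removed_mw(250, 25, 35):.1f} MW")  # 2.9 MW
```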

The Pangea system that SGI has sold to Total is not just about compute, of course. The deal also includes a bank of InfiniteStorage 17000 disk arrays running the Lustre file system, with a total capacity of 7PB and 300GB/sec of bandwidth into and out of the system. There is also 4TB of tape backup thrown into the storage system. Adding up both the compute and storage parts of Pangea, Total will need 2.8 megawatts to keep the system humming, running its seismic imaging and reservoir modeling applications on SUSE Linux.
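For a sense of scale, here is a back-of-the-envelope sketch of how long it would take to stream the entire 7PB through the quoted 300GB/sec. It assumes decimal units and that the full aggregate bandwidth is sustained end to end, which real Lustre workloads rarely manage, so treat the result as a best case.

```python
# How long would one full pass over Pangea's Lustre storage take at peak bandwidth?
CAPACITY_PB = 7
BANDWIDTH_GB_PER_S = 300
GB_PER_PB = 1_000_000        # assumption: decimal units throughout

seconds = CAPACITY_PB * GB_PER_PB / BANDWIDTH_GB_PER_S
print(f"{seconds / 3600:.1f} hours")   # 6.5 hours
```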

The interesting bit is that the Pangea system has neither Tesla GPU coprocessors from Nvidia nor Xeon Phi coprocessors from Intel. SGI could cook up some coprocessor blades to yield a balanced ratio of CPUs to coprocessors, but that doesn't mean Total's applications will run well on them. What Total is planning to do, however, is double the performance of the ICE X machine in 2015, so it doesn't fall behind its peers in the oil and gas industry in the flopping arms race.

That you can get a 2.3 petaflops supercomputer based only on x86 processors with 7PB of storage, then double its performance in two years, for €60m ($77.3m at prevailing currency exchange rates) is astounding. Just scribbling some figures on the back of a drinks napkin, if you assume that the compute portion of Pangea is about two-thirds of the cost, and that about two-thirds of the computing cost is in the initial system, with a third going into the upgrade, then Total is getting Pangea for around $15,000 per teraflops, and the upgrade to double the performance in 2015 will cost half that.
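The napkin math can be reproduced in a few lines. The two-thirds shares are the article's own assumptions, not Total's or SGI's figures, and the sketch assumes the 2015 upgrade adds another 2.3 petaflops to get to double.

```python
# Back-of-the-napkin cost per teraflops for Pangea, following the article's assumptions.
DEAL_USD = 77.3e6            # EUR 60m converted at the article's exchange rate
COMPUTE_SHARE = 2 / 3        # assumption: compute is two-thirds of the deal
INITIAL_SHARE = 2 / 3        # assumption: two-thirds of compute spend is the 2013 system
INITIAL_TFLOPS = 2300.0      # 2.3 petaflops at launch
UPGRADE_TFLOPS = 2300.0      # doubling in 2015 adds another 2.3 petaflops

compute_usd = DEAL_USD * COMPUTE_SHARE
initial_per_tf = compute_usd * INITIAL_SHARE / INITIAL_TFLOPS
upgrade_per_tf = compute_usd * (1 - INITIAL_SHARE) / UPGRADE_TFLOPS

print(f"initial: ${initial_per_tf:,.0f} per teraflops")   # roughly $15,000
print(f"upgrade: ${upgrade_per_tf:,.0f} per teraflops")   # roughly half that
```

Because the upgrade buys the same number of flops with half the money, its price per teraflops comes out at exactly half the initial figure under these assumptions.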

That $15,000 per teraflops is only about 25 per cent more expensive per flops than what the University of Illinois paid for the "Blue Waters" ceepie-geepie built by Cray from AMD Opteron processors and Nvidia Tesla coprocessors. Those are very rough estimates with a lot of wiggle in them, of course, but the price that Total is paying is a lot less than the $45,000 per teraflops that Cray was charging when the XE6 supers came out several years ago. You gotta love Moore's Law. Well, unless you are trying to extract profits from flops.
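Working backwards from those ratios gives the implied comparison points. This sketch reads the XE6 price as $45,000 per teraflops, the only unit that makes the comparison with Pangea's $15,000 per teraflops hold, and treats "about 25 per cent" as exactly 25 per cent, so the outputs are only as good as those rough estimates.

```python
# Implied comparison prices, working backwards from the article's ratios.
PANGEA_PER_TF = 15_000        # napkin estimate for Pangea
PANGEA_PREMIUM = 0.25         # "about 25 per cent more expensive" than Blue Waters
XE6_PER_TF = 45_000           # read as dollars per teraflops

blue_waters_per_tf = PANGEA_PER_TF / (1 + PANGEA_PREMIUM)
xe6_ratio = XE6_PER_TF / PANGEA_PER_TF

print(f"Blue Waters: about ${blue_waters_per_tf:,.0f} per teraflops")  # about $12,000
print(f"XE6 launch pricing: {xe6_ratio:.0f}x Pangea's")                # 3x
```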

If the compute side of Pangea generates most of the heat (let's call it two-thirds again), then this machine will deliver around 1,225 megaflops per watt (at peak theoretical performance), which is 4.4 times better than the machine Total currently has. This is probably what matters most, after the ability to run existing code for seismic analysis and reservoir modeling unchanged. ®
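Both efficiency figures in this piece fall out of the same division. This sketch recomputes the old machine's 278 megaflops per watt from its 122.9 teraflops and 442 kilowatts, then applies the article's two-thirds power assumption to Pangea's 2.3 petaflops and 2.8 megawatts. It lands at about 1,232 megaflops per watt, within rounding of the "around 1,225" figure, and a ratio of about 4.4.

```python
# Peak-efficiency arithmetic for the old and new Total machines (napkin-level).
OLD_PEAK_MFLOPS = 122.9e6    # 122.9 teraflops expressed in megaflops
OLD_POWER_W = 442e3          # 442 kilowatts

NEW_PEAK_MFLOPS = 2.3e9      # 2.3 petaflops expressed in megaflops
NEW_TOTAL_POWER_W = 2.8e6    # compute plus storage, 2.8 megawatts
COMPUTE_POWER_SHARE = 2 / 3  # article's assumption: compute draws two-thirds

old_eff = OLD_PEAK_MFLOPS / OLD_POWER_W
new_eff = NEW_PEAK_MFLOPS / (NEW_TOTAL_POWER_W * COMPUTE_POWER_SHARE)

print(f"old: {old_eff:,.0f} MF/W")                # old: 278 MF/W
print(f"new: {new_eff:,.0f} MF/W")                # new: 1,232 MF/W
print(f"improvement: {new_eff / old_eff:.1f}x")   # improvement: 4.4x
```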