Original URL: https://www.theregister.com/2013/03/05/emc_xtremsf/

One day later: EMC declares war on all-flash array, server flash card rivals

Rolls out XtremIO array, renamed VFCache

Posted in Storage, 5th March 2013 11:47 GMT

EMC is announcing its XtremIO all-flash array and, just 24 hours after Violin Memory's PCIe card launch, a line of XtremSF server flash cards with XtremSW Cache software that effectively expands and renames the VFCache server flash card product line.

It appears that the planned Project Thunder server networked flash cache product built from VFCache cards has been discontinued.

XtremSF is a line of fast single-level cell (SLC) and enterprise multi-level cell (eMLC) flash cards using the PCIe bus, with capacity points of 350GB and 700GB for the SLC cards and 550GB, 700GB, 1.4TB and 2.2TB for the eMLC cards. The existing VFCache line of server PCIe flash cards is included in the SLC XtremSF card product line.

These cards are sourced externally by EMC from two suppliers, although EMC is not revealing who they are. As a reminder, both Micron and LSI say they have been certified to supply VFCache hardware. Fusion-io thinks LSI could have been dropped, with Virident replacing it for XtremSF hardware. A Violin source reckoned that the XtremSF 2.2TB eMLC is the Virident 2200 Performance product and the XtremSF 550GB eMLC is the Virident 550 Standard product. He thought that two XtremSF cards to be shipped later will come from Micron.

LSI's marketing director Tony Afshary said: "The extent of LSI’s relationship with EMC on host caching is with the current VFCache product. We cannot speculate on [today's] announcement."

EMC says that the XtremSF cards can be used as local storage for servers, flash DAS (direct-attached storage), or as cache. They have a half-height, half-length PCIe card format and use 8 PCIe lanes. EMC says they have a low CPU overhead, not requiring the level of server host CPU utilisation needed by Fusion-io's ioDrive cards for example, and are engineered for high endurance, consistent performance through an advanced Flash Translation Layer, and real-life workloads.

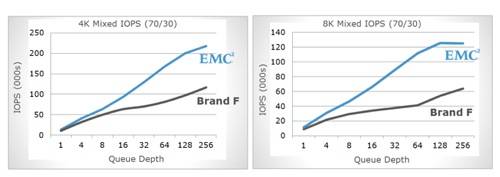

EMC says the XtremSF cards can deliver 1.3 million IOPS. PCIe flash card market leader Fusion-io is smack in the middle of EMC's sights, with competitive performance comparisons published by EMC showing a 1.2TB ioDrive 2 card from Fusion-io being able to run at around 115,000 IOPS with a 70/30 read/write mix of 4K blocks and a queue depth of 256. The XtremSF 2200 delivers around 215,000 IOPS in the same situation.

XtremSF and Fusion-io IOPS (Brand F) comparison

EMC has also tested the XtremSF and Fusion-io cards over a run of more than five hours and found its cards quickly outperformed the Fusion-io cards on 4KB random write IOPS and eventually overtook the Fusion-io card on 64KB sequential writes in MB/sec terms.

XtremIO array

As expected from EMC's XtremIO acquisition, this is a scale-out all-flash array using X-Brick nodes built with non-proprietary hardware providing sub-millisecond data access services. The XtremIO contribution is software, which includes inline deduplication using 4KB blocks, thin provisioning with 4KB allocations, VMware provisioning through VAAI, writeable snapshots and clones with a 4KB page or block size, and data protection through something called "Flash-optimised zero configuration," which is something like a flash version of RAID-6 that minimises writes to the flash so as to aid its longevity.
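To make the inline deduplication idea concrete, here is a minimal Python sketch of block-level dedupe at the 4KB granularity mentioned above. EMC has not described how XtremIO fingerprints or indexes blocks, so the SHA-256 fingerprint, the in-memory dictionary and the `DedupStore` name are all illustrative assumptions, not the product's design.

```python
import hashlib

BLOCK_SIZE = 4096  # 4KB blocks, the granularity quoted for XtremIO


class DedupStore:
    """Toy inline-dedupe store: each unique 4KB block is kept only once."""

    def __init__(self):
        self.blocks = {}  # fingerprint -> block contents (stored once)
        self.volume = []  # logical block order, held as fingerprints

    def write(self, data: bytes) -> None:
        # Split incoming data into 4KB blocks and fingerprint each one
        # before it is stored (that is what makes the dedupe "inline").
        for off in range(0, len(data), BLOCK_SIZE):
            block = data[off:off + BLOCK_SIZE].ljust(BLOCK_SIZE, b"\0")
            fp = hashlib.sha256(block).digest()  # assumed fingerprint function
            self.blocks.setdefault(fp, block)    # store only if not seen before
            self.volume.append(fp)

    def read(self) -> bytes:
        # Rebuild the logical volume by following the fingerprints.
        return b"".join(self.blocks[fp] for fp in self.volume)

    def reduction_ratio(self) -> float:
        # Logical blocks written divided by unique blocks actually stored.
        return len(self.volume) / len(self.blocks) if self.blocks else 1.0


store = DedupStore()
payload = b"A" * BLOCK_SIZE * 5 + b"B" * BLOCK_SIZE
store.write(payload)
print(len(store.volume), len(store.blocks), store.reduction_ratio())
```

Six logical blocks collapse to two stored blocks here, which is the mechanism behind the high ratios vendors quote for highly redundant data.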

XtremIO array performance with 1, 2 and 4 X-brick nodes

The system is built from clustered X-Bricks, linked by InfiniBand, and performance scales linearly, as does capacity, as X-Bricks are added to the cluster. The chart above shows a quartet of X-Bricks reaching the million IOPS mark with 4KB random reads, although it achieved less than half that with random writes. EMC says: "The XtremIO system exceeds 150,000 functional 4K mixed read/write IOPS, and 250,000 functional 4K read IOPS for each X-Brick … and over 1.2 million functional IOPS and 2 million functional 4K read IOPS when scaled out to a cluster of eight X-Bricks."
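EMC's per-brick and eight-brick figures can be checked against the linear scaling claim with a few lines of Python. This is only a sanity check on the quoted numbers under a purely linear model; the function and constant names are ours.

```python
# Per-X-Brick "functional" IOPS figures taken from EMC's statement
MIXED_IOPS_PER_BRICK = 150_000
READ_IOPS_PER_BRICK = 250_000


def scaled_iops(per_brick: int, bricks: int) -> int:
    """Linear scaling model: cluster IOPS = per-brick IOPS x number of bricks."""
    return per_brick * bricks


for n in (1, 2, 4, 8):
    mixed = scaled_iops(MIXED_IOPS_PER_BRICK, n)
    reads = scaled_iops(READ_IOPS_PER_BRICK, n)
    print(f"{n} X-Brick(s): {mixed:,} mixed IOPS, {reads:,} read IOPS")
```

Under this model four bricks give one million read IOPS and eight give 1.2 million mixed and two million read IOPS, which lines up with both the chart and EMC's quote.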

It calls them "functional" IOPS because they are measured "under the real-world operating conditions found in today’s demanding production environments — which is typified by sustained mixed read/write, long-term, sub-millisecond latency, fully random transaction patterns with all data services enabled and operating while filled nearly to capacity." Just marketing then - they are ordinary IOPS.

Servers link to an X-Brick cluster across Fibre Channel or Ethernet (iSCSI).

We understand the X-Bricks have dual active:active controllers and a set of 16 x 200GB 2.5-inch MLC solid state drives offering 1.9TB of usable capacity. (There are probably other capacities available.) That means an 8-node cluster has 25.6TB raw capacity and 15.2TB usable.
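The capacity arithmetic can be reproduced as a short Python sketch. It assumes every X-Brick has the 16 x 200GB configuration and 1.9TB usable figure we were given; other configurations, if they exist, would change the numbers.

```python
SSDS_PER_BRICK = 16
SSD_CAPACITY_GB = 200
USABLE_TB_PER_BRICK = 1.9  # usable capacity per X-Brick, as we understand it


def cluster_capacity_tb(bricks: int) -> tuple[float, float]:
    """Return (raw TB, usable TB) for a cluster of identical X-Bricks."""
    raw_tb = bricks * SSDS_PER_BRICK * SSD_CAPACITY_GB / 1000
    usable_tb = bricks * USABLE_TB_PER_BRICK
    return round(raw_tb, 1), round(usable_tb, 1)


raw, usable = cluster_capacity_tb(8)
print(f"8 X-Bricks: {raw}TB raw, {usable}TB usable")
```

On these figures roughly 59 per cent of the raw flash ends up as usable capacity.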

The workload is balanced across the cluster and deduplication is cluster-wide across all volumes. Data reduction ratios of between 5:1 and 30:1 can be achieved, the latter with highly redundant virtual server workloads like VDI. A VMware View white paper on VDI and XtremIO can be found here (PDF).

Asked if the array integrated with VMAX, VNX or Isilon arrays, Barry Ader, EMC's flash business unit manager, said: "At general availability it does not. I can't predict what will happen in the future."

The array features VPLEX and PowerPath integration right out of the gate. There is no single point of failure in an XtremIO array cluster. It is managed through a single pane of glass and comes with a set of CLI commands for script-based management and monitoring as well.

EMC included a complimentary customer quote in its release from Brian Dougherty, the Chief Data Warehouse Architect at CMA: "Using XtremIO we are able to engineer our Oracle RAC systems to be faster, more scalable, and handle more concurrent users, while at the same time requiring only 20 per cent of the previous footprint in our data centre. We've identified immediate storage cost savings of nearly $500,000 by leveraging XtremIO and will achieve similar savings by reducing our Oracle CPU core license requirements since XtremIO nearly eliminates I/O wait time."

The XtremIO array is coming soon, we are told, and is entering a kind of limited shipping called Directed Availability, under which it is available to selected customers only while any initial kinks are worked out.

XtremSW software

EMC has renamed its VFCache v1.5 software XtremSW Cache 1.5. It is a write-through cache with deduplication that is supported on Windows, Linux and VMware, and it provides cache coherency for Oracle RAC environments. It is available separately from the XtremSF hardware.
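For readers unfamiliar with the term, a write-through cache sends every write to the backing array before acknowledging it, and keeps a copy on the local flash so later reads can be served from the server. The Python sketch below illustrates that general technique only; the `WriteThroughCache` class, the dictionary standing in for the array and the LRU eviction policy are our assumptions and say nothing about how XtremSW Cache is actually built.

```python
from collections import OrderedDict


class WriteThroughCache:
    """Toy write-through cache with LRU eviction in front of a backing store."""

    def __init__(self, backing: dict, capacity: int):
        self.backing = backing      # stands in for the storage array
        self.capacity = capacity    # number of entries the "flash card" holds
        self.cache = OrderedDict()  # ordered oldest -> most recently used
        self.hits = 0
        self.misses = 0

    def _remember(self, key, value) -> None:
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry

    def write(self, key, value) -> None:
        # Write-through: the array is always updated, so it never holds
        # stale data and losing the cache loses nothing.
        self.backing[key] = value
        self._remember(key, value)

    def read(self, key):
        if key in self.cache:
            self.hits += 1
            self.cache.move_to_end(key)
            return self.cache[key]
        self.misses += 1
        value = self.backing[key]   # slow path: fetch from the array
        self._remember(key, value)
        return value


array = {}
cache = WriteThroughCache(array, capacity=2)
cache.write("lba0", b"hello")
print(array["lba0"], cache.read("lba0"), cache.hits)
```

The trade-off is that writes run no faster than the array behind the cache; the benefit shows up on reads.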

EMC XtremSF half-height half-length PCIe flash card

VFCache server caching was going to be included as an end-point in EMC's FAST (Fully-Automated Storage Tiering) in which data is moved to a storage responsiveness tier of media based upon the need for its access. XtremSW Cache inherits this intention. EMC also says XtremSW Cache will receive more capabilities in the future, like cache coherency and membership of "a broad, device-independent Flash software suite—the EMC XtremSW Suite."

EMC says it wants the suite to provide a software layer to abstract flash across the data centre and states:

Beginning with a release later this year, EMC will provide customers with pooling, cache coherency, deeper EMC storage array integration and specific enhancements for VMware environments.

Thunder dies

With such a layer there is no need for Project Thunder, EMC's notion of putting multiple VFCache cards in a networked box providing a server networked cache. Thunder dies away. Ader confirmed this: "Once we purchased XtremIO and XtremSF cards we basically felt all the use cases for customers were covered." He said Project Thunder technology would be used over time in other EMC products.

EMC blogger Storagezilla wrote: "The Thunder hardware has been shelved and the Thunder data path and data services software has been absorbed by XtremIO, XtremSW Cache and parts of the XtremSF Suite ‘later this year’".

EMC does not say that the XtremSW suite will support the XtremIO array, and Ader gave the same answer to a question about this as he did to the one about XtremIO array integration with other EMC drive arrays. So not with the first version of the product but perhaps later.

Josh Goldstein from EMC's XtremIO business said XtremSF cards work with the XtremSW cache, adding "from a caching perspective, absolutely." The card and SW can sit in front of an XtremIO array and VMAX, VNX and Isilon arrays and cache data coming to the host server.

Dan Cobb, the chief technical officer for EMC's Flash Business Unit, mentioned EMC's RecoverPoint any-to-any replication and Federated Live Array Migration and said: "We'll obviously take a look at other use cases over time. RecoverPoint and Federated Live Array Migration are culturally compatible with what we are doing."

It is understood that XtremSW Cache software works with third-party PCIe cards, such as ones from Fusion-io.

The competition

EMC is now opening a competitive front against every other all-flash array vendor and against every enterprise PCIe flash card vendor. On the ground-up designed flash array front the competitors include GreenBytes, Huawei Dorado, IBM/TMS, Kaminario, Nimbus Data, Pure Storage, Skyera, SolidFire and Whiptail; nine other vendors. More are coming; both HP and NetApp have in-house all-flash arrays under development. On the PCIe card front there are 15 or more other vendors.

Violin Memory's 6000 array is currently the industry leader in the all-flash array market, and it kicks XtremIO's ass capacity-wise; the 6332 has up to 32TB usable capacity whereas an 8-node XtremIO cluster tops out at 15.2TB usable (25.6TB raw) with 16 x 200GB SSDs inside each node. A 6-node Whiptail INVICTA has up to 72TB, but then it hasn't got deduplication, and neither has the Violin array. EMC is not a usable capacity leader, but may look considerably better once the effects of dedupe are taken into account.
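How much better depends on the data reduction ratio. The back-of-envelope Python sketch below uses the 1.9TB usable per X-Brick figure given earlier (15.2TB for eight nodes) and works out the dedupe ratio XtremIO would need to match each rival's quoted capacity. It assumes the rivals' figures are directly comparable and that they get no data reduction at all, which is a simplification.

```python
XTREMIO_USABLE_TB = 8 * 1.9  # eight X-Bricks at 1.9TB usable each


def effective_tb(usable_tb: float, ratio: float) -> float:
    """Logical capacity once a data reduction ratio of ratio:1 is applied."""
    return usable_tb * ratio


def break_even_ratio(rival_tb: float, usable_tb: float = XTREMIO_USABLE_TB) -> float:
    """Data reduction ratio needed to match a rival's quoted capacity."""
    return rival_tb / usable_tb


for name, tb in (("Violin 6332", 32), ("Whiptail INVICTA, 6 nodes", 72)):
    print(f"{name}: break-even at about {break_even_ratio(tb):.1f}:1")

for ratio in (5, 30):
    print(f"At {ratio}:1 an 8-node cluster holds about "
          f"{effective_tb(XTREMIO_USABLE_TB, ratio):.0f}TB of logical data")
```

On these assumptions even the low end of EMC's claimed 5:1 to 30:1 range would put an eight-node cluster ahead of both rivals in logical capacity, though real ratios depend entirely on the workload.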

Performance comparisons will have to wait until we have common performance measures across the various vendors.

Fusion-io CEO and co-founder David Flynn said EMC is trying to get to where Fusion-io was five years ago. The CPU usage point is wrong, as batching up I/Os to reduce writes to flash delays applications, he claims. Each app needs its I/Os completed as fast as possible.

Flynn said: "You have to look at it holistically from the application point of view rather than the storage point of view." Fusion-io has a customer who found they could support twice as many VMs per server with an MLC ioDrive2 card from Fusion-io compared to an EMC PCIe card using SLC flash. The Fusion-io card in fact used less server CPU resources than the EMC card.

A Violin Memory spokesperson said EMC presented information about MB/sec and IOPS but not latency, saying: "I'm not sure what these 'sustained' graphs are supposed to signify? The problem once the PCIe card 'warms up' is they throw huge latency spikes as the grooming process tries to clear up new flash to keep up with the write load. That is what customers complain about and slows down applications."

Violin retorts it has consistent latency numbers: "All Violin products (Array and cards) use vRAID which is our erase/write hiding algorithm that allow the product to perform under load while keeping latency low (no spikes). It is the long MLC erases that kill you – reads get caught behind them and queue up. This is the core IP."

He also says there is: "No mention of price … With all the margin stacking it is understandable."

Stephen O'Donnell, chairman and CEO of GreenBytes, said: "With today's solid state data centre announcement, it's clear that EMC has recognised that proprietary hardware is no longer differentiated and announced to the world that the true value of storage has moved inextricably into software. This thinking by EMC absolutely validates GreenBytes' patented vIO software-only virtual storage appliance that leverages vendor agnostic and best of breed hardware.

"A word of advice, if I were an EMC customer, I would be somewhat concerned about the maturity of this new software as it typically takes over three years to mature a storage stack."

EMC says we can expect higher capacity XtremSF cards in the future. XtremSF 550GB and 2.2TB eMLC capacities are available now, globally. The 700GB and 1.4TB capacities should be available on EMC price lists later this year - no ship date is mentioned. ®