Original URL: https://www.theregister.com/2013/02/14/guide_to_student_cluster_compos/

Could you build a data-nomming HPC beast in a day? These kids can

Everything you need to know about the undergrad cluster sport

Posted in HPC, 14th February 2013 09:31 GMT

Analysis Student cluster-building competitions are chock full of technical challenges, both “book learning” and practical learning, and quite a bit of fun too. I mean, who wouldn't want to construct an HPC rig against the clock and kick an opponent in the benchmarks? Here's what's involved in the contests.

Whether you’ve been following the competitions obsessively (sponsoring office betting pools, making action figures, etc.) or this is the first time you’ve ever heard of them, you probably have some questions.

There are rules and traditions in these competitions, just as there are in cricket, football and the Air Guitar World Championships. Understanding the rules and traditions will make these events more interesting.

Student clustering triple crown

In 2013, there will be three major worldwide student cluster events. The United States-based Supercomputing Conference (SC) held the first Student Cluster Competition (SCC) in 2007. The contest has been included at every November SC conference since, usually featuring eight university teams from the US, Europe, and Asia. As the first organisation to hold a cluster competition, SC has pretty much established the template on which the other competitions are based.

The other large HPC conference, the imaginatively named ISC (International Supercomputing Conference), held its first SCC at its June 2012 conference in Hamburg. The competition, jointly sponsored by the HPC Advisory Council, attracted teams from the US, home country Germany, and two teams from China. It was a big hit with conference organisers and attendees – big enough to justify expanding the field to nine teams for 2013.

The third entry is the newly formed Asia Student Supercomputer Challenge (ASC). The finals of their inaugural SCC will be held this April in Shanghai, China (yes, the Shanghai in China). At least 30 teams from China, India, South Korea, Russia and other countries have submitted applications seeking to compete in the finals. This competition is sponsored by Inspur and Intel. More details in upcoming articles.

How do these things work?

All three organisations use roughly the same process. The first step is to form a team of six undergraduate students (from any discipline) and at least one faculty advisor. Each team submits an application to the event managers, answering questions about why they want to participate, their university’s HPC and computer science curriculum, team skills, etc. A few weeks later, the selection committee decides which teams make the cut and which need to wait another year.

The groups who get the nod have several months of work ahead. They’ll need to find a sponsor (usually a hardware vendor) and make sure they have their budgetary bases covered. Sponsors usually provide the latest and greatest gear, along with a bit of financial support for travel and other logistical costs. Incidentally, getting a sponsor isn’t all that difficult. Conference organisers (and other well-wishers like me) can help teams and vendors connect.

The rest of the time prior to the competition is spent designing, configuring, testing, and tuning the clusters. Then the teams take these systems to the event and compete against each other in a live benchmark face-off. The competition takes place in cramped booths right on the trade show floor.

All three 2013 events require competitors to run the HPCC benchmark and an independent HPL (LINPACK) run, plus a set of real-world scientific applications. Teams receive points for system performance (usually "getting the most done" on the scientific programs) and, in some cases, the quality and precision of the results. In addition to the benchmarks and app runs, teams are usually interviewed by experts to gauge how well they understand their systems and the scientific tasks they’ve been running.

The team that amasses the most points is dubbed the overall winner. There is usually an additional award for the highest LINPACK score and sometimes a “fan favourite” gong for the team that does the best job of displaying and explaining its work.

While many of the rules and procedures are common between competition hosts, there are some differences:

SC competitions are gruelling 46-hour marathons. The students begin their HPCC and separate LINPACK runs on Monday morning, and the results are due about 5pm that day. This usually isn’t very stressful; most teams have run these benchmarks many times and could do it in their sleep. The action really picks up Monday evening when the datasets for the scientific applications are released.

The apps and accompanying datasets are complex enough that it’s pretty much impossible for a team to complete every task. So from Monday evening until their final results are due on Wednesday afternoon, the students are pushing to get as much done as possible. Teams that can efficiently allocate processing resources have a big advantage.
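To make "efficiently allocate" a little more concrete, here's a deliberately simplified sketch. It treats the whole cluster as one resource running one job at a time and assumes a team can estimate each job's runtime and point value in advance; real teams split nodes between apps, and the scoring rules aren't spelled out here. Under those assumptions, picking which jobs to run is the classic 0/1 knapsack problem. The job names, hours and points are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical job description: (name, estimated cluster-hours, points if completed)
Job = Tuple[str, int, int]


def pick_jobs(jobs: List[Job], hours_left: int) -> Tuple[int, List[str]]:
    """0/1 knapsack: choose the jobs that maximise points within the time budget."""
    # best[h] = (points, chosen job names) using at most h hours
    best: List[Tuple[int, List[str]]] = [(0, []) for _ in range(hours_left + 1)]
    for name, hours, points in jobs:
        # Walk budgets downward so each job is counted at most once
        for h in range(hours_left, hours - 1, -1):
            candidate = best[h - hours][0] + points
            if candidate > best[h][0]:
                best[h] = (candidate, best[h - hours][1] + [name])
    return best[hours_left]


jobs = [("app_a", 20, 30), ("app_b", 15, 20), ("app_c", 10, 18), ("app_d", 25, 35)]
print(pick_jobs(jobs, 45))
```

With 45 hours left, this picks the first three jobs for 68 points, beating the tempting pairing of the two biggest jobs (65 points). That's the kind of trade-off teams juggle, usually with far shakier runtime estimates.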

ISC competitions are a set of three day-long sprints. Students run HPCC and LINPACK on the afternoon of day one but don’t receive their application datasets until the next morning. On days two and three, they’ll run through the list of workloads for that day and hand in the results later that afternoon.

The datasets usually aren’t so large that they’ll take a huge amount of time to run, meaning that students will have plenty of time to optimise the app to achieve maximum performance. However, there’s another wrinkle: the organisers spring a daily surprise application on the students. The teams don’t know what the app will be, so they can’t prepare for it; this puts a premium on teamwork and general HPC and science knowledge.

Don't blow a fuse: hardware dreams spawn electrical nightmares

When it comes to hardware, the sky’s the limit. Over the past few years, we’ve seen traditional CPU-only systems supplanted by hybrid CPU and GPU-powered clusters. We’ve also seen some ambitious teams experiment with cooling, using liquid-immersion cooling for their nodes.

Last year at SC12, one team planned to combine liquid immersion with over-clocking in an attempt to knock the clocks, er, socks off its competitors. While their effort was foiled by logistics (their system was trapped in another country), we’re sure to see more creative efforts along these lines.

There’s no limit on how much gear, or what type of hardware, teams can bring to the competition. But there’s a catch: whatever they run can’t consume more than 3,000 watts at whatever volts and amps are customary in that location. In the US, the limit is 26 amps (26A x 115V is 2,990W, just under 3,000W). At the ISC’13 competition in Germany, the limit will be 13 amps (13A x 230V is, again, 2,990W). The same 3,000-watt limit also applies to the upcoming ASC competition in Shanghai.
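The arithmetic behind those limits is easy to script. This sketch assumes a power factor of 1 (so volts times amps equals watts, which real server power supplies only approximate), and the 280W-per-node and 150W-overhead figures are made up purely for illustration; neither comes from any team's actual build.

```python
# Supply settings quoted above for each venue: (amps, volts)
VENUE_CIRCUITS = {
    "SC (US)": (26, 115),
    "ISC'13 (Germany)": (13, 230),
}


def circuit_watts(amps: float, volts: float) -> float:
    """Power available on the circuit, assuming a power factor of 1 (watts = volts x amps)."""
    return amps * volts


def max_nodes(budget_watts: float, node_watts: float, overhead_watts: float) -> int:
    """How many identical nodes fit once switches, storage, etc. (overhead) are paid for."""
    if node_watts <= 0:
        raise ValueError("node_watts must be positive")
    return max(0, int((budget_watts - overhead_watts) // node_watts))


for venue, (amps, volts) in VENUE_CIRCUITS.items():
    budget = circuit_watts(amps, volts)
    # Hypothetical figures: 280 W per node under load, 150 W for switch and storage
    print(f"{venue}: {budget:.0f} W budget, room for {max_nodes(budget, 280, 150)} nodes")
```

Both venues come out at 2,990W, and with these invented numbers there's room for 10 nodes. Every extra GPU or switch eats directly into that node count.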

This is the power limit for their compute nodes, file servers, switches, storage and everything else with the exception of PCs monitoring the system power usage. There aren’t any loopholes to exploit, either – the entire system must remain powered on and operational during the entire three-day competition. This means that students can’t use hibernation or suspension modes to power down parts of the cluster to reduce electric load. They can modify BIOS settings before the competition begins, but typically aren’t allowed to make any mods after kickoff. In fact, reboots are allowed only if the system fails or hangs up.

Each system is attached to a PDU (Power Distribution Unit) that tracks power usage second by second. When teams go over the limit, they’ll be alerted by the PDU, as will the competition managers. Going over the limit will result in a warning to the team and a possible point deduction. According to the HPCAC-ISC Student Cluster Challenge FAQ, “if power consumption gets well beyond the 13A limit, Bad Things™ will happen”, meaning that the team will trip a circuit breaker and lose lots of time rebooting and recovering their jobs.
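How the PDUs and the organisers' alerting actually work isn't described here, so treat this as a hypothetical sketch. Given one current reading per second, it finds the stretches where a team was over the 13A limit, which is roughly the question a PDU alert answers.

```python
from typing import List, Optional, Tuple

LIMIT_AMPS = 13.0  # ISC'13 limit quoted in the article


def over_limit_spans(samples: List[float], limit: float = LIMIT_AMPS) -> List[Tuple[int, int]]:
    """Return inclusive (start_second, end_second) pairs where the draw exceeded the limit."""
    spans: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for t, amps in enumerate(samples):
        if amps > limit and start is None:
            start = t  # an over-limit stretch begins
        elif amps <= limit and start is not None:
            spans.append((start, t - 1))  # stretch ended on the previous second
            start = None
    if start is not None:
        spans.append((start, len(samples) - 1))  # still over at the last reading
    return spans


readings = [12.1, 12.8, 13.4, 13.2, 12.9, 13.1]  # hypothetical one-per-second amps
print(over_limit_spans(readings))
```

For those readings it reports two breaches, seconds 2 to 3 and second 5. A team's own monitoring PC could run something like this to throttle jobs before the organisers' PDU complains.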

On the software side, teams can use any operating system, clustering or management software they desire, as long as the configuration will run the required workloads. The vast majority of teams run some flavour of Linux, although there were Russian teams in 2010 and 2011 who competed with a Microsoft software stack, and they won highest LINPACK at SC11 in (appropriately enough) Seattle.

Performance feats

It’s amazing how much hardware can fit under that 3kW limbo bar. At SC12 in Salt Lake City, the overall winner, the Texas Longhorns team, used a 10-node cluster that sported 160 CPU cores plus two Nvidia M2090 GPU boards. Texas had the highest node count, but other teams used as few as six nodes and as many as eight GPU cards.

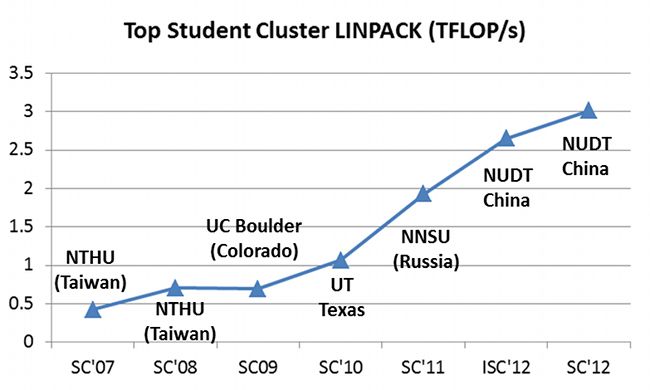

The growing performance of student-built HPC mini-beasts

Look at the performance ramp from November 2009 to November 2012. In three years, student LINPACK scores went from 0.692 teraflops to 3.014 teraflops. In relative terms, that’s an increase of 335 per cent. You can also compare it against a loose interpretation of Moore’s Law, which assumes that compute performance doubles every 18 months.

Using this measure, 2009’s 0.692-teraflop score would double to 1.38 teraflops in a year and a half, then double again to 2.77 teraflops at the three-year mark. The students’ actual 3.014 teraflops beats that projection, so our student clusterers are doing a pretty good job from that perspective. They also compare favourably to the world's top 500 supercomputers list during the same period.
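For anyone who wants to check the sums, here's the same arithmetic as a short script. The only inputs are the two LINPACK scores above and the loose 18-month doubling assumption.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in per cent."""
    return (new / old - 1.0) * 100.0


def moores_law_projection(start: float, years: float, doubling_years: float = 1.5) -> float:
    """Loose Moore's Law: performance doubles every doubling_years."""
    return start * 2.0 ** (years / doubling_years)


LINPACK_NOV_2009 = 0.692  # teraflops, best student score
LINPACK_NOV_2012 = 3.014  # teraflops, best student score

print(f"Actual increase: {percent_increase(LINPACK_NOV_2009, LINPACK_NOV_2012):.1f}%")
for years in (1.5, 3.0):
    projected = moores_law_projection(LINPACK_NOV_2009, years)
    print(f"Moore's Law projection at {years} years: {projected:.2f} TF")
```

It prints a 335.5 per cent increase, then projections of 1.38 and 2.77 teraflops, matching the figures above and leaving the students' real 3.014 teraflops comfortably ahead.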

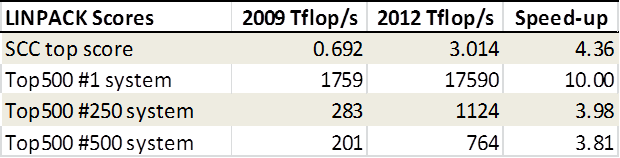

The LINPACK score of the number-one system on the top 500 supers went up by a factor of ten over the last three years versus the student clusterers who managed to wring 4.36 times more LINPACK bang out of their best hardware.

How the undergrads compare to the really big boys

While the kids are humbled by the results at the top of the list, they’re eking out slightly better performance improvements than systems at the median and end of the list.
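One way to put those three-year factors side by side is to turn them into yearly growth rates and implied doubling times. This sketch assumes smooth compound growth, which real hardware generations don't follow, and uses only the two factors quoted above; the median and bottom-of-list figures behind the comparison aren't given here, so they're left out.

```python
import math


def annual_growth_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier that compounds to total_factor over the given span."""
    return total_factor ** (1.0 / years)


def doubling_time_years(total_factor: float, years: float) -> float:
    """Implied doubling period if growth were steady over the span."""
    return years * math.log(2) / math.log(total_factor)


# Three-year LINPACK growth factors quoted in the article (Nov 2009 to Nov 2012)
for label, factor in (("Top500 number one", 10.0), ("Student clusters", 4.36)):
    print(f"{label}: x{annual_growth_factor(factor, 3):.2f} per year, "
          f"doubling every {doubling_time_years(factor, 3):.2f} years")
```

The number-one system doubles roughly every 0.9 years against about 1.4 years for the students, though both beat the 18-month Moore's Law yardstick used earlier.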

Compelling competition

Speaking for myself (and probably untold millions of maniacal fans worldwide), these competitions are highly compelling affairs. The one thing I hear time and time again from students is, “I learned so much from this”. They’re not just referring to what they’ve learned about HPC systems and clusters, but what they’ve learned about science and research. And they’re so eager and enthusiastic when talking about this new knowledge and what they can do with it – it’s almost contagious.

For some participants, the SCC is a life-changing event. It’s prompted some students to embrace or change their career plans – sometimes radically. These events have led to internships or even full-time career-track jobs.

For many of the undergraduates, this is their first exposure to the world of supercomputing and the careers available in industry or research. Watching them begin to realise the range of opportunities open to them is very gratifying; it even penetrates a few layers of my own dispirited cynicism.

The schools sending the teams also realise great value from the contests. Several universities have used the SCC as a springboard to build more robust computer science and HPC curricula – sometimes building classes around the competition to help prepare their teams. The contests also give the schools an opportunity to highlight student achievement, regardless of whether or not they win.

Just being chosen to compete is an achievement. As these competitions receive more attention, the number of schools applying for a slot has increased. Interest is so high in China that annual play-in cluster competitions are held to select the university teams that will represent the country at ISC and SC.

With all that said, there’s another reason I find these competitions so compelling: they’re just plain fun. The kids are almost all friendly and personable, even when there’s a language barrier. They’re eager and full of energy. They definitely want to win, but it’s a good-spirited brand of competition. Almost every year we’ve seen examples of teams donating hardware to teams in need when there are shipping problems or when something breaks.

It’s that spirit, coupled with their eagerness to learn and their obvious enjoyment, that really defines these events. And it’s quite a combination. ®