Original URL: https://www.theregister.com/2012/08/07/ibm_flex_system_x220_x440_servers/

IBM shoots higher and lower with x86 Flex Systems

Plus: Expansion node for GPUs, flash, and other goodies

Posted in Systems, 7th August 2012 23:26 GMT

Big Blue is adding two new compute nodes based on recent Xeon processors from Intel, plus a PCI expansion node that can be used to strap on GPUs, flash storage, and other system goosers, as it continues to flesh out its Flex System modular servers. These machines are the basis of its PureSystems integrated server-storage-networking stacks and the PureApplication automated cloudy tools that ride on top of the stack.

Processors from AMD are still missing in action in the Flex System compute nodes, and Alex Yost, vice president in charge of the PureSystems stacks at IBM, would not comment on any plans IBM might have about adding Opteron processors to the Flex System compute nodes at any time in the future.

Obviously, if there was enough customer demand, the company could whip together a system using Opteron 4200s and 6200s with relative ease. But for now, the Flex System machines are limited to Intel's "Sandy Bridge" Xeon E5 processors as well as Big Blue's own Power7 processors.

IBM launched the "Project Troy" Flex System iron back in April. It features a 10U rack-mounted chassis that holds up to 14 horizontally mounted single-width server nodes.

Like blade servers, these Flex nodes have onboard and mezzanine cards for server and storage networks and integrated Ethernet, InfiniBand, and Fibre Channel switching. But by reorienting the servers and making them half as wide as the chassis as well as considerably taller than a BladeCenter blade server, IBM can cram regular components into the server nodes without causing overheating issues. IBM is also providing double-wide nodes that double up the processing and memory capacity compared to the single-wide nodes.

The Flex x220 server node

With the initial Flex System iron, which El Reg went through in great detail in the wake of the announcement, IBM had a single-wide node, called the x240, based on Intel's Xeon E5-2600 processor as well as two-way and four-way nodes based on its own Power7 chips. The two-way Power7 node is the p260 and the four-way, double-wide node is the p460.

Later that month, IBM came out with a series of machines called PowerLinux that have their firmware altered so they can only run Red Hat Enterprise Linux or SUSE Linux Enterprise Server, and IBM offered a low-priced Flex System p24L node that was based on the existing p260 node except that it had lower prices for processors, memory, disks, and Linux licenses than plain vanilla Power7-based nodes.

Intel has of course added some more Xeons to the lineup since then, namely the Xeon E5-2400s and the Xeon E5-4600s, and it is these processors that are being added to the Flex System iron today.

The Xeon E5-2400s that debuted in May are aimed at two-socket servers, just like the E5-2600s that came out in March. But they have more limited memory and I/O capacity both between their sockets across the QuickPath Interconnect and to the outside world through the "Patsburg" C600 chipset. The E5-2400s also sport lower prices, and for compute-intensive workloads, they are the better option in many cases compared to the E5-2600s.

The E5-4600s use the two QPI links per socket in the E5 design to link four processors together into a single system image that has lots of memory and I/O capacity and that in many cases obviates the need to go with a more expensive and less-dense Xeon E7 processor.

The Flex System x220 node has two processor sockets mounted in the center of the mobo, and all nine models in the Xeon E5-2400 lineup are supported in the machine. The Xeon E5-2400s come in versions with four, six, or eight cores and varying L3 cache sizes and different features.

The x220 node has six DDR3 memory slots per socket for a maximum of 192GB in total capacity using 16GB sticks. IBM could easily double this to 384GB by using fatter sticks, and very likely will at some point.

Memory can run at up to 1.6GHz, and IBM is using low-profile memory to cram everything into the node. In some cases, solid state drives attached to the node lid are adjacent to the memory area below them on the motherboard, so every millimeter of space counts.

Both regular 1.5 volt and 1.35 volt (known as low-voltage memory, at least for now until it becomes the new normal) memory can be used. You have to install both processors to use maximum memory since the memory controllers are on the processors themselves; if you only install one CPU, the capacity halves.
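For those who like to check the sums, here is a minimal sketch of the x220 memory arithmetic described above. The slot count, socket count, and stick sizes come from the article; the function name is made up for illustration, and the 32GB case is the hypothetical "fatter sticks" scenario, not a configuration IBM has announced.

```python
# Figures taken from the article; names here are illustrative only.
SLOTS_PER_SOCKET = 6   # DDR3 slots hanging off each Xeon E5-2400 socket
SOCKETS = 2            # the x220 is a two-socket node


def max_memory_gb(dimm_gb, cpus_installed=SOCKETS):
    """Return the maximum memory in GB for a DIMM size and CPU count."""
    if not 1 <= cpus_installed <= SOCKETS:
        raise ValueError("the x220 takes one or two processors")
    # Slots behind an empty socket have no memory controller to drive them.
    return SLOTS_PER_SOCKET * cpus_installed * dimm_gb


print(max_memory_gb(16))                    # 192
print(max_memory_gb(16, cpus_installed=1))  # 96
print(max_memory_gb(32))                    # 384 (hypothetical 32GB sticks)
```

The single-CPU case shows why a half-populated node tops out at 96GB with today's 16GB sticks.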

The Flex x220 node has two hot-swap 2.5-inch disk bays in the center front of the node, and these can be configured with either disk or flash drives as suits customer needs. The machine has software RAID for mirroring and striping (using an LSI ServeRAID C105 controller), and if you want to do it with hardware, you can snap in a ServeRAID H1135 and not waste CPU cycles on this RAID work.

You can add four internal flash drives and four drives that mount in the front (two in each 2.5-inch bay) for a total of eight drives and then do a RAID 5 or RAID 6 across those flashies if you snap in the ServeRAID M5115 controller. IBM is supporting 200GB flash drives now, but Yost says in the fourth quarter, IBM will add support for 400GB units, doubling the flash capacity to 3.2TB per node.
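The flash numbers work out as follows. This is a rough sketch, assuming the textbook rule that RAID 5 gives up one drive's worth of capacity to parity and RAID 6 gives up two; it ignores formatting overhead and anything the M5115 controller might reserve for itself, and the function name is invented for the example.

```python
# Drives' worth of capacity consumed by parity at each RAID level.
# Level 0 here simply means "no parity", i.e. raw capacity.
PARITY_DRIVES = {0: 0, 5: 1, 6: 2}


def usable_flash_gb(drives, drive_gb, raid_level=0):
    """Return usable capacity in GB for identical drives at a RAID level."""
    if raid_level not in PARITY_DRIVES:
        raise ValueError("only raw, RAID 5, and RAID 6 are modelled here")
    parity = PARITY_DRIVES[raid_level]
    if drives <= parity:
        raise ValueError("not enough drives for this RAID level")
    return (drives - parity) * drive_gb


DRIVES = 8  # four internal plus four front-mounted, per the article

print(usable_flash_gb(DRIVES, 200))                # 1600 (raw, today)
print(usable_flash_gb(DRIVES, 400))                # 3200 (raw, 400GB units)
print(usable_flash_gb(DRIVES, 400, raid_level=5))  # 2800
print(usable_flash_gb(DRIVES, 400, raid_level=6))  # 2400
```

So the 3.2TB figure is raw capacity; protect the set with RAID 5 or RAID 6 and the usable space drops to roughly 2.8TB or 2.4TB.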

Some models come with a Gigabit Ethernet LAN-on-motherboard (LOM) interface welded on, others don't. In terms of slots, there is a dedicated PCI-Express 3.0 x4 slot that can only be used for the ServeRAID controllers.

The node has a dedicated connector that runs at PCI-Express 3.0 x16 speeds and can link to an external PCI expansion node (more on this in a second). It also has two mezzanine cards that snap into the back of the board (on the right hand side in the picture above), each with an x8 and an x4 link out to the Flex System chassis backplane for linking into switches for external networks and storage.

The Flex x220 server will be available on August 24.

As El Reg predicted back in early April when the Flex System iron first came out, IBM has indeed come up with an expansion chassis that allows for PCI-based peripherals such as Nvidia Tesla GPU coprocessors or Fusion-io flash storage (just to name two possibilities) to be linked to the two-socket x86-based Flex System nodes.

Adding in GPUs, flash drives, what else?

This expansion node is only supported on the new Flex x220 node using the Xeon E5-2400 processor and the existing Flex x240 node using the Xeon E5-2600 processor. The expansion connector on the x220 and x240 nodes hangs off of the second processor socket in these machines, so you have to have both Xeon E5 CPUs installed in the node to use the PCI expansion chassis.

An interposer cable runs from the server node to the expansion node, which then links into a PCI-Express 2.0 switch on the board. This switch has two x16 links to two mezzanine I/O ports for extra I/O into the backplane of the Flex System chassis; it also has two x16 links and two x8 links to peripheral slots.

Note: These slots do not run at PCI-Express 3.0 speeds, which basically double the bandwidth of PCI-Express 2.0, and the mezz cards in the expansion node will also run at PCI-Express 2.0 speeds. You can still plug in PCI-Express 3.0 cards, but they will run in 2.0 mode, which will impede performance in many cases.
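To put a number on "basically double", here is a back-of-the-envelope sketch of theoretical per-direction link bandwidth. It uses the published signalling rates and line encodings for the two PCI-Express generations (5 GT/s with 8b/10b for 2.0, 8 GT/s with 128b/130b for 3.0) and ignores packet and protocol overhead, so real-world throughput will be lower. The function and table names are made up for the example.

```python
# (transfer rate in GT/s per lane, payload bits, encoded bits on the wire)
GENERATIONS = {
    "2.0": (5.0, 8, 10),     # 8b/10b encoding
    "3.0": (8.0, 128, 130),  # 128b/130b encoding
}


def link_bandwidth_gbytes(gen, lanes):
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    rate, payload_bits, encoded_bits = GENERATIONS[gen]
    usable_gbits_per_lane = rate * payload_bits / encoded_bits
    return usable_gbits_per_lane / 8 * lanes


for gen in ("2.0", "3.0"):
    for lanes in (8, 16):
        bw = link_bandwidth_gbytes(gen, lanes)
        print(f"PCI-Express {gen} x{lanes}: {bw:.2f} GB/s")

ratio = link_bandwidth_gbytes("3.0", 16) / link_bandwidth_gbytes("2.0", 16)
print(f"3.0 over 2.0: {ratio:.2f}x")
```

On those assumptions an x16 slot in the expansion node peaks at about 8 GB/s each way, against a little under 16 GB/s for the same card in a true PCI-Express 3.0 slot.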

In any event, you have two full-height, full-width PCI-Express x16 slots on the left and two low-profile x8 slots on the right. There's enough power in the card to support four low-profile, two full-height, or one double-wide PCI-Express peripherals in each expansion node.

Physically, you should be able to get two x16 peripherals and two x8 low-profile peripherals in the node. There's only room, power, and cooling for one Nvidia Tesla M2090, which is not a lot, but you can still use the two low-profile slots on the other side with one M2090 in there.

Four Xeon E5s in a double-wide pod

The Flex System x440 is the x86 companion to the Power7-based p460 node. It uses the Intel C600 chipset and the two QuickPath Interconnect ports per socket to glue four processors together into a shared memory box that can handle much larger workloads than the two-socket variants can.

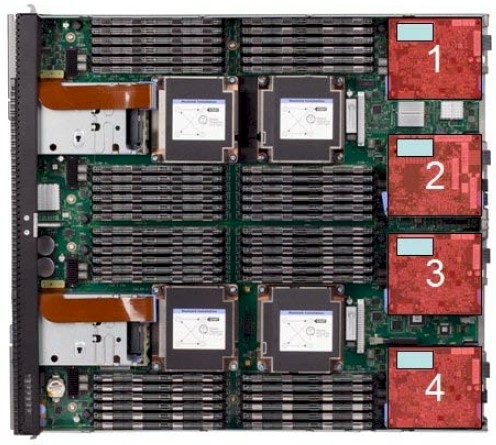

The Flex x440 double-wide, quad-socket server node

IBM is supporting the versions of the Xeon E5-4600 processors with four, six, or eight cores in the box, and it looks like IBM is supporting all eight possible options processor-wise, which is something that it probably would not be able to do in a BladeCenter machine because of the skinniness of the blades. (Oddly enough, you could get the same seven four-way servers into the same 10U space.)

The Flex System x440 machine has a dozen memory slots per socket like other E5-4600 machines to deliver up to 1.5TB of memory capacity using 32GB load-reduced DIMM (LRDIMM) DDR3 memory sticks. The machine needs a lot of space for the processors and memory, and therefore it only has room for two 2.5-inch disk drives instead of the four you might expect.
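Scaling those figures up to a full chassis is simple multiplication. The sketch below assumes a 10U Flex System chassis stuffed with nothing but double-wide x440 nodes running the eight-core parts and 32GB LRDIMMs; every input comes from the article, while the variable names are invented for illustration.

```python
# Inputs from the article.
SINGLE_WIDE_BAYS = 14   # single-width node bays in one 10U chassis
BAYS_PER_X440 = 2       # the x440 is a double-wide node
SOCKETS_PER_NODE = 4
MAX_CORES_PER_SOCKET = 8
SLOTS_PER_SOCKET = 12
DIMM_GB = 32            # load-reduced DDR3 sticks

nodes = SINGLE_WIDE_BAYS // BAYS_PER_X440
sockets = nodes * SOCKETS_PER_NODE
cores = sockets * MAX_CORES_PER_SOCKET
node_memory_gb = SOCKETS_PER_NODE * SLOTS_PER_SOCKET * DIMM_GB
chassis_memory_tb = nodes * node_memory_gb / 1024

print(nodes)              # 7
print(sockets)            # 28
print(cores)              # 224
print(node_memory_gb)     # 1536, i.e. 1.5TB per node
print(chassis_memory_tb)  # 10.5
```

That is seven four-way nodes, 224 cores, and 10.5TB of main memory in 10U, assuming the top-bin processors and fully loaded memory slots.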

IBM no doubt expects customers to use Storwize V7000 disk arrays for application and systems software storage and only use local disks for the server node operating system (if that). In many cases, customers will just put flash storage in these bays to boost I/O performance and leave everything on external disks.

Internals of the Flex x440 server node

The machine has two Emulex BE3 LOM interfaces, each with two 10 Gigabit Ethernet ports, on the motherboard, and if you don't want to use the integrated 10GE ports you can buy a variant of the machine without them (presumably at a lower cost).

The server node has four mezzanine cards that link the server to the midplane of the chassis and then out to the integrated switches. Each mezz card has one x16 and one x8 connection running at PCI-Express 3.0 speeds. The same flash kit that is available for the x220 node is also available on the x440 node, but it will not be available until October 18. The x440 itself ships on August 24.

The two Flex System nodes can run Microsoft Windows Server 2008, Red Hat Enterprise Linux 5 and 6, and SUSE Linux Enterprise Server 10 and 11. VMware's ESXi 4.1 Update 2 and ESXi 5.0 Update 1 hypervisors are also supported on the nodes. Pricing information was not available at press time for the new iron. ®