Original URL: https://www.theregister.com/2012/05/16/nvidia_vgx_gpu_virtualization/

Graphics shocker: Nvidia virtualizes Kepler GPUs

VGX revs virty desktops, fluffs gamy clouds, changes everything

Posted in Virtualization, 16th May 2012 02:11 GMT

GTC 2012 You game-console makers who still want to be in the hardware business, look out. You console makers who don't want to be in the hardware business (this might mean you, Microsoft), you can all breathe a sigh of relief: after a five-year effort, Nvidia is adding graphics virtualization to its latest "Kepler" line of GPUs.

The Kepler GPUs, previewed in the GeForce line back in March, are the stars of the GPU Technology Conference that Nvidia is hosting this week in San José, California – but some of the most interesting news about them was kept under wraps until Tuesday's keynote by Nvidia cofounder and CEO Jen-Hsun Huang.

The feeds and speeds of the GeForce discrete graphics cards for desktops and laptops and the Tesla K10 coprocessors are now out there, but for his GTC keynote Huang revealed VGX, a set of extensions to the GPU architecture that allow for a GPU to be virtualized so it can be shared by multiple client devices over a private network, or render images remotely and stream them down from VDI or gaming clouds.

Huang said that more than five years ago, Nvidia's engineering team "just started dreaming" about cool things they might do, and decided then and there that they wanted to take GPUs into the cloud, both for remote graphics and remote computation.

The computation part is relatively easy, but the funny thing about graphics cards rendering images is that they just don't like to share. And, more importantly, they're chock full of state that needs to be managed by whatever virtualization layer (called a hypervisor for GPUs, just as it is for CPUs) manages the carved-up bits of the GPU.

During the Q&A session following his keynote introduction of the VGX feature, Huang explained that because graphics chips have so much pipelining and so many registers, as well as so many cores and threads, they are "inherently unfriendly" to being diced and sliced and virtualized.

A CPU, by comparison, might have tens of threads and a bunch of registers with kilobytes of data that a hypervisor has to juggle, but a GPU – such as the Kepler chip – has many thousands of threads and many megabytes of state data from all of those threads that have to be managed.

Simply put, GPU virtualization is many orders of magnitude more complex than CPU virtualization.

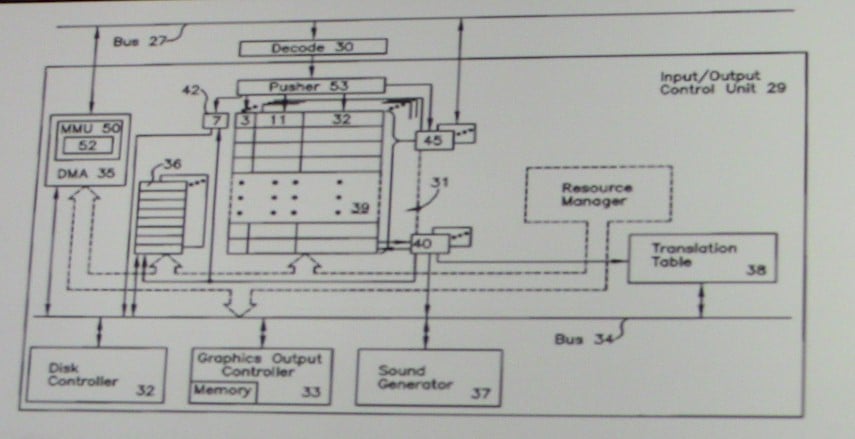

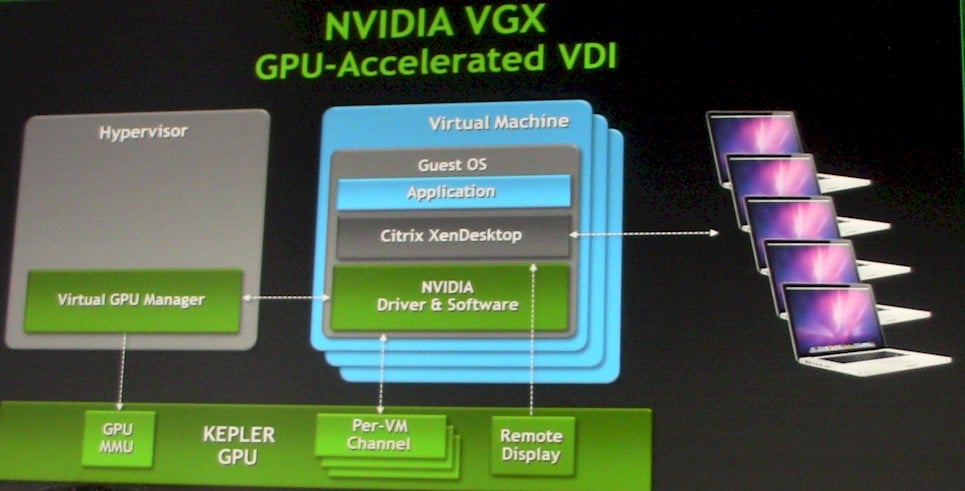

Nonetheless, Nvidia has figured out how to build a VGX GPU hypervisor that integrates with XenServer from Citrix Systems and allows for a Kepler GPU to be carved up into as many as 256 virtual GPUs. These virtual GPUs can be tied to a specific virtual machine running on a hypervisor on a server and managed just like a real GPU, with the ability to allocate more CUDA cores to a virtual PC or server image for its graphics needs.

VGX is not just about cutting up a big GPU so it can be used by multiple virtual PC images in a virtual desktop infrastructure (VDI) setup. VGX also allows the Kepler GPU to be sliced up into virtual GPUs, each of which can see which VM is asking for what stream and render its frame buffer directly to that VM instead of going through the CPU.

Huang explained that current VDI implementations such as those based on the Receiver client from Citrix Systems have a "software GPU" that gets in the way. His approach puts a hypervisor with multiple virtual GPUs on the backend server, in this case running XenServer, and you can get the software-based GPU out of the loop.

Huang says that the Kepler GPU could support as many as 256 virtual GPUs, and he conceded that people might want to take this virtualized CPU-GPU stack and install it on their home PCs or office PCs so they could be accessed from any outside device such as another PC, a smartphone, or a tablet, and still offer the same experience and functionality as the home device.

In effect, your PC can become your personal cloud, rendering your applications remotely.

To get the VGX ball rolling, Nvidia has cooked up something called a VGX board, which has four Kepler GPUs with 192 cores each – that's a mostly dud chip, considering that a standard Kepler GPU has 1,536 cores – and 16GB of memory that is carved up into four segments and used as a frame buffer for each Kepler GPU.

This card, which is passively cooled and designed to slide into servers like the Tesla GPU coprocessors, is able to provide virtual GPUs for about 100 virty PCs, or 25 per GPU on the card. There's no word yet on when these VGX cards are going to be available or what they'll cost, but presumably they will be less expensive than a full-on Kepler GeForce or Tesla card.
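For anyone who wants to check the sums on the VGX board, here is a minimal Python sketch that uses only the figures quoted above. The constant and function names are ours, and the per-PC memory figure assumes an even split across all virtual PCs, which Nvidia has not stated.

```python
# Figures quoted in the article for the VGX board.
GPUS_PER_BOARD = 4
CORES_PER_VGX_GPU = 192
CORES_PER_STANDARD_KEPLER = 1536
BOARD_MEMORY_GB = 16
VIRTUAL_PCS_PER_GPU = 25


def vgx_board_summary():
    """Return the derived per-board figures as a dict."""
    total_pcs = GPUS_PER_BOARD * VIRTUAL_PCS_PER_GPU
    return {
        "total_cores": GPUS_PER_BOARD * CORES_PER_VGX_GPU,
        "core_fraction_of_standard": CORES_PER_VGX_GPU / CORES_PER_STANDARD_KEPLER,
        "memory_per_gpu_gb": BOARD_MEMORY_GB / GPUS_PER_BOARD,
        "virtual_pcs_per_board": total_pcs,
        # Assumption (not from the article): memory is split evenly
        # across all virtual PCs on the board.
        "memory_per_virtual_pc_mb": BOARD_MEMORY_GB * 1024 / total_pcs,
    }


if __name__ == "__main__":
    for name, value in vgx_board_summary().items():
        print(f"{name}: {value}")
```

Each GPU on the board has one-eighth of the cores of a standard Kepler chip and, if the memory really is shared out evenly, each virty PC gets roughly 160MB of frame buffer.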

Nvidia is partnering with the key server makers, plus Microsoft, VMware, and Citrix for hypervisors and for end-user virty client software to support the VGX hardware and hypervisor.

Citrix, which is arguably today's VDI leader, seems to have the VGX pole position. But the point of VGX is not to be tied to any particular hypervisor or client device, Huang explained. You don't need anything but a bit of software like Receiver (which is free) or the VMware or Microsoft analogues for VDI, and for cloudy gaming all you need is a decoder that is compliant with the H.264/MPEG-4 standard on your client device.

Heavenly gaming

In conjunction with the VGX features embedded in all Kepler GPUs, Nvidia announced that it is working with online gaming companies to create a cloud platform for online gaming akin to that which OnLive has created with its own proprietary video compression and rendering chip.

This cloudy gaming platform, called the GeForce Grid, is not something that Nvidia plans to build and operate itself, but is rather a variant of Kepler GPUs and VGX virtualization, plus other gaming software code, that will allow a single Kepler GPU to handle up to eight game streams and beam them out over the internet to PC clients while doing all of the rendering for the game on back-end servers where the game is running.

The amount of power consumed per stream will be about half of what Fermi GPUs would need, according to Huang.
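The eight-streams-per-GPU ceiling makes for easy capacity planning. The Python sketch below is a rough illustration only: the function name is hypothetical, and it assumes one stream per player and that every GPU runs at the quoted maximum, neither of which Nvidia has promised.

```python
import math

STREAMS_PER_KEPLER_GPU = 8  # upper bound quoted in the article


def gpus_needed(concurrent_players, streams_per_gpu=STREAMS_PER_KEPLER_GPU):
    """Minimum number of GPUs so every player gets a stream.

    Assumes one stream per player and fully loaded GPUs.
    """
    if concurrent_players < 0:
        raise ValueError("concurrent_players must be non-negative")
    return math.ceil(concurrent_players / streams_per_gpu)


if __name__ == "__main__":
    for players in (8, 9, 1000):
        print(players, "players ->", gpus_needed(players), "GPUs")
```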

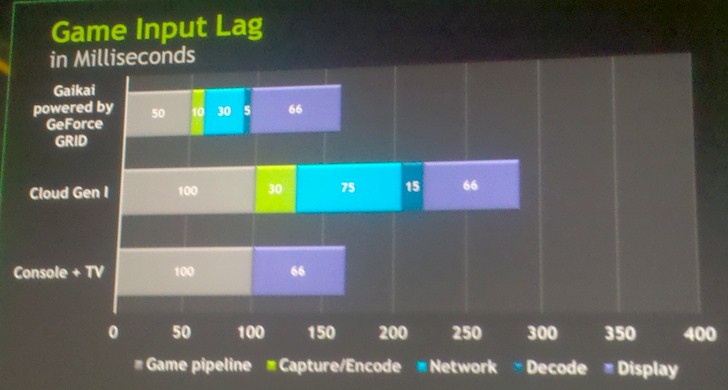

Another dollop of secret sauce in the GeForce Grid is fast streaming, which captures and encodes a game frame in a single pass and does concurrent rendering, reducing server latency to as low as 10 milliseconds per frame. This reduction can more than make up for the increased network latency of having your console in your hand – be it an iPad, a smartphone, or a PC – where you are playing a game tens or hundreds of miles away from the server on which the game is actually running and being rendered.

Online gaming company Gaikai, an OnLive competitor, worked with Nvidia to demonstrate Hawken streamed from its data center 10 miles away from the San José convention center, on an LG Cinema 3D Smart TV with a wireless USB gamepad. To our eyes, it looked like it was running on a local console, with no lag or jitter.

As you can see from the chart above, Nvidia and its online gaming partners think they have the latency issue licked when the GeForce Grid software is running in data centers that are not too far from players.

Interestingly, the speed of light is an issue: light needs roughly 130 milliseconds to circle the globe under the best of circumstances, and a signal takes several times more than that going through networks. But the idea is very simple: "What's good for gamers is good for Nvidia," as Huang put it. And Bill Dally, chief scientist at Nvidia, put it even better: "A cloud allows a kilowatt gaming experience on a 20-watt handheld device."
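The speed-of-light point can be checked on the back of an envelope. The Python sketch below computes pure propagation delay and nothing else: the function names are ours, the two-thirds velocity factor for optical fiber is a common rule of thumb rather than anything Nvidia quoted, and real networks add routing and queuing delay on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # in vacuum
EARTH_CIRCUMFERENCE_KM = 40_075.0   # equatorial
KM_PER_MILE = 1.609344
FIBER_VELOCITY_FACTOR = 2 / 3       # assumption: rule of thumb for optical fiber


def one_way_delay_ms(distance_km, velocity_factor=1.0):
    """Propagation delay in milliseconds over distance_km."""
    return distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor) * 1000.0


def round_trip_delay_ms(distance_km, velocity_factor=FIBER_VELOCITY_FACTOR):
    """There-and-back propagation delay in milliseconds, through fiber by default."""
    return 2 * one_way_delay_ms(distance_km, velocity_factor)


if __name__ == "__main__":
    print("Around the globe, vacuum (ms):", one_way_delay_ms(EARTH_CIRCUMFERENCE_KM))
    print("Around the globe, fiber (ms):",
          one_way_delay_ms(EARTH_CIRCUMFERENCE_KM, FIBER_VELOCITY_FACTOR))
    print("10-mile round trip, fiber (ms):", round_trip_delay_ms(10 * KM_PER_MILE))
```

For a data center 10 miles away, as in the Gaikai demo, the round-trip propagation delay comes out well under a millisecond, which is why nearby data centers matter so much more than raw rendering speed once server latency is down to 10 milliseconds per frame.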

With cloud gaming, you don't have to buy a console – and you don't even have to buy a game to play on it. In fact, Gaikai is distributing games through retailing giant Walmart's website, Walmart.com. You can just go there and click on a game, pay for the right to play it, and off you go. No downloading for 36 hours, no console to buy. All you need is internet access that is fast enough to stream HD video – basically, if you can watch Netflix, you can play games online with GeForce Grid.

"We are not currently planning on hosting the servers ourselves," said Huang. "But if it makes sense, we are not against it."

The plan is for gaming companies and telcos to build their own GeForce Grids and come up with the pricing and billing, and the desire is to get a Netflix-style price of around $10 per month for access to a fat catalog of games. The game makers get out of manufacturing media and packaging, and the gamers get instant access to games on the cloud.

The trick is to make it ubiquitous – and, of course, more profitable. ®