Original URL: https://www.theregister.com/2012/02/21/mellanox_40ge_switch_ramp/

Mellanox: Just jump straight to 40GE networks

Unless you really want only 10GE

Posted in HPC, 21st February 2012 15:08 GMT

Network adapter and switch maker Mellanox Technologies is riding the wave of upgrades to 10 Gigabit Ethernet switches and is excited about boosting sales of 10GE adapter cards when Intel launches its "Sandy Bridge-EP" Xeon E5 processors sometime this quarter. But Mellanox CEO and chairman Eyal Waldman has his eye on a bigger prize: peddling 40GE switches and network interface cards.

"If you look at the Romley systems, 10GE is not enough," Waldman tells El Reg, referring to the code-name of the server platform designs coming out of Intel that use the impending Xeon E5 processors and their "Patsburg" C600 chipset. "For many customers, going from Gigabit Ethernet straight to 40GE is what makes the most sense."

This may sound a bit contrarian, given that the ramp to 10GE networking is just building some momentum in the data center after being available for a decade. Server makers are just now starting to plunk 10GE ports down on their motherboards, and the cost of 10GE switches has come down considerably in the past two years.

Mellanox was only recently bragging about slashing prices on its 10GE adapters and switches to accelerate the adoption of 10GE networking in the data center and to grow its burgeoning share of the 10GE NIC market, which jumped from 4 per cent of ports in the first quarter of 2011 to 24.6 per cent in the fourth quarter: the classic hockey stick. But Waldman wants more, and wants to get customers thinking about the jump to 40GE, particularly after having just cut the prices on 10GE adapters and switches roughly in half last month.

To that end, Mellanox is kicking out a new ConnectX-3 network card for servers that runs at 40GE speeds, matching the existing SX1036 switch launched last May.

The new ConnectX-3 EN card, code-named "Osprey", is a single-port 40GE adapter card that plugs into PCI-Express 3.0 peripheral slots on servers and has been certified to run with the forthcoming Xeon E5 servers. These are, at the moment, the only servers that have PCI-Express 3.0 support, and probably will be for some time.

Interestingly, the PCI-Express controller resides on the Xeon E5 chip itself, which is the first such integration for PCI-Express 3.0 but certainly not the first time a PCI controller has been on chip. Oracle's T Series CPUs, Tilera's Tile processors, and even Intel's old i960 RISC processor had integrated PCI on the die, just to name a few. But the jump to PCI-Express 3.0 is significant because the old PCI-Express 2.0 bus does not have enough bandwidth in an x8 slot to drive a 40GE card or a 56Gb/sec InfiniBand card, whereas a PCI-Express 3.0 x8 slot does.
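The bandwidth argument holds up on the back of an envelope. The sketch below uses the standard per-lane signalling rates and line encodings (5 GT/s with 8b/10b encoding for PCI-Express 2.0, 8 GT/s with 128b/130b for PCI-Express 3.0), which the article does not spell out, and it ignores packet and protocol overhead, so real-world throughput will be somewhat lower than these figures.

```python
# Rough one-direction bandwidth of a PCI-Express x8 slot after line-encoding
# overhead only; packet and protocol overhead are ignored, so these are
# upper bounds rather than achievable throughput.

GEN2_GT_PER_LANE = 5.0      # PCI-Express 2.0: 5 GT/s per lane
GEN2_ENCODING = 8 / 10      # 8b/10b line encoding
GEN3_GT_PER_LANE = 8.0      # PCI-Express 3.0: 8 GT/s per lane
GEN3_ENCODING = 128 / 130   # 128b/130b line encoding


def slot_gbps(gt_per_lane: float, encoding: float, lanes: int = 8) -> float:
    """Usable Gb/s in one direction for a slot with the given lane count."""
    return gt_per_lane * encoding * lanes


gen2_x8 = slot_gbps(GEN2_GT_PER_LANE, GEN2_ENCODING)  # about 32 Gb/s
gen3_x8 = slot_gbps(GEN3_GT_PER_LANE, GEN3_ENCODING)  # about 63 Gb/s

for name, bw in [("PCIe 2.0 x8", gen2_x8), ("PCIe 3.0 x8", gen3_x8)]:
    for link, need in [("40GE", 40), ("56Gb/s InfiniBand", 56)]:
        verdict = "enough" if bw >= need else "not enough"
        print(f"{name}: {bw:.1f} Gb/s is {verdict} for {link}")
```

An x8 PCI-Express 2.0 slot tops out around 32Gb/sec, short of even a single 40GE port, while an x8 PCI-Express 3.0 slot has roughly 63Gb/sec to play with.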

On the switch front, Mellanox already sells the SX1036 top of racker, which can be used as a 64-port 10GE switch or a 36-port 40GE switch. So you can now go 40GE end-to-end if you want, and the Xeon E5 server can drive the NICs. What you can't get, however, are list prices for the 40GE adapters and switches out of Mellanox.

But Waldman is a sporting type and tells El Reg that the company plans to charge around 2.5 times the cost of 10GE adapters and switch ports for the 40GE products. Since a 40GE port carries four times the bandwidth of a 10GE port, that gives you better bang for the bandwidth: if you do the math, the cost per gigabit comes down by 37.5 per cent. Perhaps equally significantly for heat-sensitive data centers, the bandwidth per watt is much better for the 40GE switches, too.
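Here is the math behind that figure, as a minimal sketch. Prices are normalised so that a 10GE port costs 1.0, because Mellanox has not published list prices; the 2.5x multiple is Waldman's approximation, not a price list.

```python
# Cost per unit of bandwidth, assuming Waldman's rough 2.5x price multiple.
# Prices are relative (10GE port = 1.0); no real list prices are used.

PRICE_10GE = 1.0
PRICE_40GE = 2.5 * PRICE_10GE  # "around 2.5 times" the 10GE price

cost_per_gb_10 = PRICE_10GE / 10  # relative cost per Gb/s at 10GE
cost_per_gb_40 = PRICE_40GE / 40  # relative cost per Gb/s at 40GE

# Fractional drop in cost per Gb/s when moving from 10GE to 40GE.
saving = 1 - cost_per_gb_40 / cost_per_gb_10

# The same numbers read the other way round: Gb/s per unit of price.
bandwidth_per_price_gain = (40 / PRICE_40GE) / (10 / PRICE_10GE) - 1

print(f"Cost per Gb/s falls by {saving:.1%}")
print(f"Bandwidth per unit price rises by {bandwidth_per_price_gain:.0%}")
```

The 37.5 per cent is the reduction in cost per gigabit; expressed as bandwidth per unit of money spent, the same pricing works out to 60 per cent more.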

Waldman knows that a 36-port 40GE switch is not going to cover all the needs of the data center market, and says that the company is also launching a hybrid 10GE/40GE switch, the SX1024, and will eventually get modular switches in the field that sport 108, 216, 324, and 648 ports in a 21U chassis.

The SX1024 switch pairs 48 ports of 10GE (with SFP+ interfaces) to talk down into the rack to servers with a dozen ports of 40GE uplinks (with QSFP interfaces) to talk up to the aggregation layer of the Ethernet network. The SX1024 comes in a 1U chassis and supports special cables that can convert four of those 40GE ports into 16 10GE ports. Port-to-port latency is 250 nanoseconds, and this particular switch has 1.92Tb/sec of non-blocking throughput. In typical operation, it burns 154 watts.
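The 1.92Tb/sec figure follows directly from the port mix, assuming (as is common on switch spec sheets) that it counts both directions of every full-duplex port. The bandwidth-per-watt number below is simply derived from the quoted typical power draw; it is not a Mellanox specification.

```python
# SX1024 port mix as described: 48 x 10GE down to servers, 12 x 40GE up.
DOWNLINK_GBPS = 48 * 10  # capacity toward the servers, one direction
UPLINK_GBPS = 12 * 40    # capacity toward the aggregation layer, one direction
TYPICAL_WATTS = 154      # quoted typical power draw

per_direction = DOWNLINK_GBPS + UPLINK_GBPS   # Gb/s, one direction
full_duplex_tbps = 2 * per_direction / 1000   # assumes both directions counted

# 1.0 means the uplinks can carry everything the downlinks can deliver.
down_to_up_ratio = DOWNLINK_GBPS / UPLINK_GBPS

gbps_per_watt = full_duplex_tbps * 1000 / TYPICAL_WATTS

print(f"Full-duplex throughput: {full_duplex_tbps:.2f} Tb/s")
print(f"Down:up ratio: {down_to_up_ratio:.1f}:1")
print(f"About {gbps_per_watt:.1f} Gb/s per watt")
```

The down and up capacities come out equal (480Gb/sec each way), so traffic from the servers is not oversubscribed on its way to the aggregation layer.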

The SX1024 switch is, of course, based on Mellanox's SwitchX InfiniBand-Ethernet ASIC, which debuted last April and was rolled into the SX1036 back in May. But the SwitchX ASIC gives Mellanox's 40GE switches another benefit: afterburners.

The SwitchX ASIC has 2.88Tb/sec of throughput and 4Tb/sec of switching and routing bandwidth, and it is also the backbone of Mellanox's Fourteen Data Rate (FDR) InfiniBand switches, which include the 36-port SX6035 (unmanaged) and SX6036 (managed) top of rackers and the 648-port SX6536 director switch.

Because SwitchX can already rev to 56Gb/sec on InfiniBand, with the turn of the golden screwdriver and some money for software licensing, the 40GE switches above can be upgraded to run at 56GE speeds. No one else in the networking racket is offering this capability. Mellanox has to do a deep sort on the SwitchX chips to make sure they can run at the higher 56GE speeds.
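The two SwitchX bandwidth figures line up with simple port arithmetic, assuming a 36-port device and counting both directions of every port: 36 ports at 40Gb/sec give exactly the 2.88Tb/sec throughput figure, and the same ports at 56Gb/sec come to roughly the 4Tb/sec quoted. This reading is an inference from the numbers, not a breakdown Mellanox has given.

```python
# Aggregate bandwidth of a 36-port SwitchX-based switch at each port speed,
# counting both directions of every full-duplex port (an assumption).
PORTS = 36


def aggregate_tbps(port_gbps: float, ports: int = PORTS) -> float:
    """Total Tb/s across all ports, both directions."""
    return 2 * ports * port_gbps / 1000


at_40 = aggregate_tbps(40)  # stock 40GE operation
at_56 = aggregate_tbps(56)  # after the paid-for 56GE (or FDR) upgrade

print(f"40Gb/s ports: {at_40:.2f} Tb/s")
print(f"56Gb/s ports: {at_56:.2f} Tb/s")
print(f"Per-port gain from the upgrade: {56 / 40 - 1:.0%}")
```

Whichever way the figures are counted, the software upgrade buys 40 per cent more bandwidth per port on the same silicon.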

And so far, no one else offers a single ASIC that can run either Ethernet or InfiniBand and that promises to switch protocols on the fly in the future, as Mellanox is planning to do.

"Our goal, a few years down the road, will be that if you ask what protocols customers are using, we won't know," Waldman said.

Mellanox also expects network bandwidth needs to rise a lot faster than they have in the past few years. "I think the transition to 40GE will be very fast," he explained.

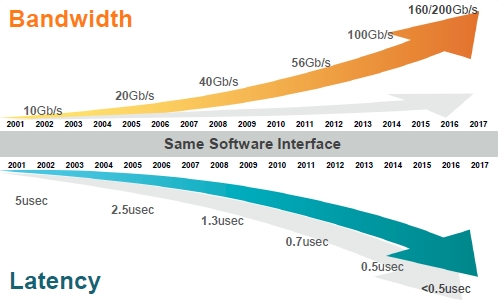

Mellanox: Bandwidth and low latency hungers are never sated.

To make the point, Waldman trotted out statistics for InfiniBand adoption. Double Data Rate (20Gb/sec) InfiniBand took a long time to take off, but Quad Data Rate (QDR) InfiniBand, running at 40Gb/sec, accounted for 4 per cent of revenues in its first quarter of availability, then hit 12 per cent in its second quarter, 32 per cent in its third, and 52 per cent by its fourth.

FDR InfiniBand (at 56Gb/sec) is on a similar track: it represented 4 per cent of sales in the third calendar quarter of last year (when products were first available) and 13 per cent of InfiniBand revenues in the fourth quarter. ®
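A quick way to compare the two ramps is to look at how much each technology's revenue share multiplied from one quarter to the next. This minimal sketch uses only the percentages Waldman quoted; with just two FDR data points it is a comparison, not a forecast.

```python
# Share of InfiniBand revenue by quarter since launch, per the figures quoted.
qdr_shares = [4, 12, 32, 52]  # per cent, QDR quarters 1 to 4
fdr_shares = [4, 13]          # per cent, FDR quarters 1 to 2


def growth_multiples(shares):
    """Quarter-over-quarter multiple of revenue share."""
    return [later / earlier for earlier, later in zip(shares, shares[1:])]


print("QDR:", [round(m, 2) for m in growth_multiples(qdr_shares)])
print("FDR:", [round(m, 2) for m in growth_multiples(fdr_shares)])
```

FDR's jump from its first to its second quarter (3.25x) slightly outpaced QDR's (3x), which is the sense in which it is on a similar, or marginally faster, track.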