Original URL: https://www.theregister.com/2012/02/06/emc_vfcache/

EMC crashes the server flash party

Lightning strike with thunder to follow

Posted in Channel, 6th February 2012 05:01 GMT

The perfect server flash storm hitting storage arrays has generated EMC's well-signalled Lightning strike; VFCache has arrived, extending FAST technology from the array to the server. Project Thunder is following close behind, promising an EMC server-networked flash array.

This is a major announcement and we are covering it in depth.

FAST (Fully Automated Storage Tiering) moves data in an EMC array into higher-speed storage tiers when it is being accessed repeatedly, so that server applications don't have to wait for slow disks to find their data.
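FAST's real policy engine works on sub-LUN extents with configurable policies and schedules, none of which the article details. The sketch below is only a minimal illustration of the underlying idea: rank data by how often it is accessed and place the hottest data on the fastest tier. The per-extent access counter, the tier names and the `plan_tiers` function are all hypothetical.

```python
# Hypothetical tier names, fastest first; real FAST tiers and policies differ.
TIERS = ["flash", "fc_disk", "sata_disk"]

def plan_tiers(access_counts, capacity):
    """Assign extents to tiers, hottest first.

    access_counts: extent id -> number of recent accesses (assumed metric)
    capacity: tier name -> number of extents that tier can hold
    Returns extent id -> tier name.
    """
    # One slot per extent each tier can hold, fastest tier's slots first.
    slots = [tier for tier in TIERS for _ in range(capacity[tier])]
    if len(slots) < len(access_counts):
        raise ValueError("not enough capacity for all extents")
    # Most frequently accessed extents claim the fastest slots.
    ranked = sorted(access_counts, key=access_counts.get, reverse=True)
    return dict(zip(ranked, slots))

counts = {"a": 120, "b": 3, "c": 45, "d": 0}
capacity = {"flash": 1, "fc_disk": 2, "sata_disk": 5}
print(plan_tiers(counts, capacity))
```

Here the busiest extent lands on flash, the next two on Fibre Channel disk and the idle one on SATA; a real system would rerun such a plan periodically and move data accordingly.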

EMC boasts that its customers have purchased 1.3EB of FAST-enabled storage since January 2010, and that it has shipped more than 24PB of flash drive capacity, more than any other storage vendor. Times have changed, though, and flash in the array is no longer enough.

Fusion-io has attacked the slow I/O problem by building server PCIe bus-connected flash memory cards holding 10TB or more of NAND flash, and giving applications microsecond-class access to random data instead of the milliseconds needed from a networked array. The threat here is that primary data could move from networked arrays into direct-attached server flash storage.

EMC's response is to put hot data from its arrays into a VFCache (Virtual Flash Cache) PCIe flash card in VMware, Windows or Red Hat Linux x86 servers from Cisco, Dell, HP and IBM. Once the cache has warmed up and is loaded with hot data, this provides random read performance equivalent to Fusion-io's.

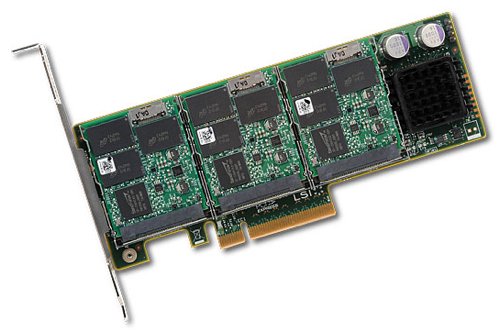

VFCache is a 20-300GB PCIe-connected flash memory card, using, as rumoured, a Micron SLC card (P320h we think) or LSI WarpDrive SLC flash, Micron being the primary supplier. (Micron has just tragically lost its CEO, Steve Appleton, in a plane crash after winning what must have been a hotly-desired OEM contract.) The P320h is a fast flash card, doing 750,000 random 4K block read IOPS.

Micron P320h

VFCache speeds up random reads with block sizes from 4KB to 64KB; read I/Os larger than 64KB are not cached. There is no write caching, so write I/O speed is unchanged.
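The admission rule described above can be expressed as a tiny predicate. This is a sketch of the stated behaviour, not EMC's driver code: the function name is hypothetical, treating reads below 4KB as uncached is our assumption, and the random-versus-sequential distinction is not modelled.

```python
KB = 1024
MIN_CACHED = 4 * KB    # smallest read size the article says is accelerated
MAX_CACHED = 64 * KB   # reads larger than this bypass the cache

def should_cache(op, size_bytes):
    """Return True if an I/O would be eligible for the server flash cache.

    Writes are never cached; reads are cached only in the 4KB-64KB range.
    Reads under 4KB are treated as uncached here, which is an assumption.
    """
    if op != "read":
        return False
    return MIN_CACHED <= size_bytes <= MAX_CACHED

print(should_cache("read", 8 * KB), should_cache("read", 128 * KB), should_cache("write", 8 * KB))
```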

We're told "testing in an Oracle environment showed [an] up to 3X throughput improvement and 50 per cent reduction in latency." EMC asserts that "VFCache is the fastest PCIe server Flash caching solution available today." This does not necessarily mean it is faster than Fusion-io's server solid state storage; that is not a "caching solution" in the way VFCache is.

Storage array and cache interoperability

EMC says VFCache works with FAST on its VMAX, VMAXe, VNX and VNXe arrays. Does that mean VFCache only works with EMC VMAX and VNX arrays? No, indeed not; VFCache is storage-agnostic and will work with any 4Gbit/s or 8Gbit/s Fibre Channel-connected block storage. No change is needed in the back-end block arrays.

We're told that, by working in conjunction with EMC FAST on the storage array, VFCache offers coordinated caching between the server and the array. How does this work? EMC says VFCache's caching algorithms promote the most frequently referenced data into the cache. Okay, but this isn't co-ordinated caching between the array and the server. This is VFCache doing its own caching on the server irrespective of whatever caching the array is doing. For example, EMC doesn't say the array will not cache data that VFCache is caching.
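EMC hasn't published VFCache's caching algorithm, so the following is a hypothetical stand-in: a simple frequency-based (LFU-style) policy that promotes a block once it has been read a set number of times and evicts the least-referenced block when full. Its point is the one made above: it decides purely from what the server sees and knows nothing about any caching the array does. The class name and the `promote_after` threshold are our own.

```python
from collections import Counter

class FrequencyCache:
    """Toy server-side read cache that promotes frequently read blocks.

    Hypothetical policy: promote a block after `promote_after` reads; when
    full, evict the least-referenced cached block, but only if the new block
    has been read more often. No information comes from the array.
    """

    def __init__(self, capacity, promote_after=2):
        self.capacity = capacity
        self.promote_after = promote_after
        self.refs = Counter()   # reference count per block, cached or not
        self.cached = set()

    def read(self, block):
        """Record a read of `block`; return True on a cache hit."""
        self.refs[block] += 1
        if block in self.cached:
            return True
        if self.refs[block] >= self.promote_after:
            if len(self.cached) >= self.capacity:
                victim = min(self.cached, key=lambda b: self.refs[b])
                if self.refs[victim] >= self.refs[block]:
                    return False  # not hotter than anything already cached
                self.cached.remove(victim)
            self.cached.add(block)
        return False  # this read was served from the array
```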

LSI WarpDrive SSD

There appears to be no active interaction between VFCache and array FAST at all, with EMC saying VFCache is transparent to storage, applications and users.

With writes, the VFCache driver writes data to the array LUN and, when that write completes, the data is asynchronously written to the flash cache. It appears the back-end array is not involved in managing VFCache at all; in fact, it doesn't even know VFCache exists.
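The write path described above is a write-through scheme: the array LUN gets the data first and the flash copy is filled afterwards, in the background. The sketch below models that with Python dictionaries standing in for the LUN and the card. The class and method names are hypothetical, and two details are our assumptions rather than anything EMC has described: the stale cached copy is dropped before the array write, and a per-block version number stops an older background fill from overwriting a newer one.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

class WriteThroughCache:
    """Sketch of write-through with asynchronous cache fill (hypothetical)."""

    def __init__(self, array):
        self.array = array      # stands in for the back-end LUN: block -> data
        self.flash = {}         # stands in for the PCIe flash cache
        self.version = {}       # per-block write counter (our assumption)
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=2)

    def write(self, block, data):
        with self._lock:
            self.flash.pop(block, None)           # assumption: invalidate stale copy
            v = self.version.get(block, 0) + 1
            self.version[block] = v
            self.array[block] = data              # synchronous write to the array
        # Only after the array write completes is the cache filled, asynchronously.
        return self._pool.submit(self._fill, block, data, v)

    def _fill(self, block, data, v):
        with self._lock:
            if self.version[block] == v:          # skip if a newer write overtook us
                self.flash[block] = data

    def read(self, block):
        with self._lock:
            if block in self.flash:
                return self.flash[block]          # cache hit
            return self.array[block]              # miss: go to the array
```

Because the array always holds the authoritative copy, losing the card loses nothing but cached copies, which is consistent with the array not needing to know VFCache exists.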

Limitations and futures

VFCache has to be disabled and removed before vMotion can take place, because it is a local resource for the virtual machine. While it is in use, it is not possible to configure automatic ESX server failover, to use things like vCenter Site Recovery Manager with it, or to run it in a cluster that uses vMotion to balance workloads.

Parts of the VFCache card can be separated off as DAS partitions for server application use, but data loaded into them is not written to the back-end array. This is called split-card mode. It should only be used for temporary data: stuff that doesn't need safeguarding by being written to the back-end array.
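The data-safety consequence of split-card mode can be shown with a toy model. Everything here is hypothetical: the class and method names, dictionaries standing in for the array and the card, capacity tracked only as bookkeeping, and the cache fill shown synchronously for brevity. It simply shows that after a card or server failure, data that went through the cache is still on the array, while DAS-partition data is gone.

```python
class SplitCard:
    """Hypothetical model of split-card mode on one flash card."""

    def __init__(self, array, total_gb, das_gb):
        if not 0 <= das_gb <= total_gb:
            raise ValueError("DAS partition must fit on the card")
        self.cache_gb = total_gb - das_gb   # capacity left for caching array data
        self.das_gb = das_gb                # capacity handed to server apps as DAS
        self.array = array                  # back-end LUN, modelled as a dict
        self.cache = {}                     # cache region: copies of array data
        self.das = {}                       # DAS region: data only on the card

    def write_through(self, key, data):
        # Normal VFCache path: the array holds the authoritative copy.
        self.array[key] = data
        self.cache[key] = data

    def write_das(self, key, data):
        # Split-card path: never forwarded to the back-end array.
        self.das[key] = data

    def fail(self):
        # Simulate losing the server or card: everything on flash is gone.
        self.cache.clear()
        self.das.clear()

    def recoverable(self, key):
        # Only data that reached the array survives the failure.
        return key in self.array
```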

EMC will add deduplication to VFCache later this year, increasing its effective capacity. It does not say where the deduplication will be done; our assumption is that it would be less burdensome on the host CPU to have it carried out on the card itself.
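EMC hasn't said how VFCache deduplication will work, so the sketch below uses a generic technique as a stand-in: content-addressed storage, where each block is fingerprinted (SHA-256 here, our choice) and identical blocks are stored once with a reference count. It shows where the effective capacity gain comes from: the ratio of logical blocks to physical copies. The class name and layout are hypothetical, and this says nothing about whether the work would run on the card or the host.

```python
import hashlib

class DedupStore:
    """Content-addressed block store: identical blocks are kept once."""

    def __init__(self):
        self.blocks = {}   # fingerprint -> data (physical copies)
        self.refs = {}     # fingerprint -> number of logical blocks using it
        self.index = {}    # logical block address -> fingerprint

    def put(self, lba, data):
        fp = hashlib.sha256(data).hexdigest()
        old = self.index.get(lba)
        if old == fp:
            return                       # same content already mapped here
        self.index[lba] = fp
        self.refs[fp] = self.refs.get(fp, 0) + 1
        self.blocks.setdefault(fp, data)
        if old is not None:              # release the overwritten content
            self.refs[old] -= 1
            if self.refs[old] == 0:
                del self.refs[old]
                del self.blocks[old]

    def get(self, lba):
        return self.blocks[self.index[lba]]

    def dedup_ratio(self):
        """Logical blocks per physical copy; effective capacity multiplier."""
        return len(self.index) / len(self.blocks) if self.blocks else 1.0
```

For example, four identical zero-filled blocks plus one distinct block give a ratio of 2.5, so on that (made-up) data a cache would hold two and a half times as much as its raw capacity.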

There will be additional capacity points in the future and VFCache will be more deeply integrated with EMC's storage management products and with the FAST architecture. This could be a hint that EMC's storage arrays will co-ordinate more actively with VFCache.

VFCache and Fusion-io

In EMC's view, Fusion-io has sold to early adopters. It believes Fusion-io-type server DAS approaches neither protect data against a server crash nor provide data sharing. By storing the server's data in a back-end array, it can be protected via snapshots, replication and so on, and made available to other servers. Management is also easier. EMC argues that mainstream server flash use won't happen unless these data protection and management features are added.

Specifically, EMC says VFCache is less of a drain on server resources than a Fusion-io flash store because VFCache hands off flash and wear-levelling management to the PCIe card itself, whereas the host CPU does this for the Fusion-io product. It says Fusion-io's CPU overhead could be up to 20 per cent higher than VFCache's.

Development and Project Thunder

EMC will add deduplication technology to VFCache later this year, enabling an effective increase in capacity. Clearly this will best be carried out on the card to avoid burdening the host CPU. Whether that will be the case remains to be seen.

There will be larger capacity points for VFCache, possibly going beyond 300GB, and different form factors, adding blade servers to the current rack-server form factor. It will also integrate better with EMC storage management technologies, and there will be additional integration with FAST architecture. This means active co-ordination between caching by VFCache and EMC's VMAX and VNX array FAST capabilities. We'll be seeing:

- Enhanced VMAX/VNX array integration – hinting, tagging, pre-fetch for even greater performance

- Distributed cache coherency for active-active clustered environments

- VMAX and VNX management integration

EMC has also announced Project Thunder, a "low-latency, server networked flash appliance that is scalable, serviceable, and shareable." It is intended to "deliver I/Os measured in millions and timed in microseconds."

This suggests it will use a fast server-appliance interconnect such as InfiniBand, which Oracle uses in its Exadata systems, or some form of PCIe I/O virtualisation. EMC confirmed this and said it will be working with its customers in a second-quarter early access programme to determine which interconnect to use.

It will be "optimised for high-frequency, low-latency read/write workloads" and will build upon the PCIe technology in VFCache. In effect, this will combine the functionality offered by Fusion-io and Violin Memory products: the speed of directly attached flash and the shareability of a networked flash memory array.

El Reg thinks other mainstream storage, PCIe flash storage, server/storage and flash array vendors will be forced to follow suit, giving a boost to either InfiniBand or IOV high-speed server-storage interconnect technologies, or both.

Storage flash landscape

VFCache is a fast fix for the threat, and the opportunity, posed to EMC on the one hand by Fusion-io and other PCIe flash caching suppliers, and on the other by networked flash arrays such as those from Violin Memory, WhipTail and Nimbus now, and from startups like Pure Storage, SolidFire and XtremIO.

As with the introduction of SSDs into storage arrays, EMC is the first mainstream vendor to jump on the server PCIe flash bandwagon. We understand Dell is actively working in this area and expect HP, IBM and NetApp to follow suit, together with Fujitsu and, maybe, HDS. This represents a recognition that disk drive latency is no longer acceptable for primary data access and that network latency is also becoming unacceptable.

Virtualised, multi-socket, multi-core, multi-threaded servers demand faster I/O than Ethernet and Fibre Channel networked disk drive arrays can provide. EMC has seen that there is a need for mainstream enterprise-class data centre I/O speed and flash dash is the way to get it. ®