Original URL: https://www.theregister.com/2011/12/12/ibm_vs_oracle_data_centre_optimisation/

Oracle and IBM fight for the heavy workload

Seconds out

Posted in Databases, 12th December 2011 22:45 GMT

IBM and Oracle agree about little these days, and they are coming at it from different angles, but both IT giants believe that some companies don't want general-purpose machines; they want machines tuned to run a specific stack of software for a particular kind of workload.

IBM calls them "workload optimised systems" and Oracle refers to "engineered systems". What are these machines and will they sell?

Oracle is making a lot more noise about its Exadata and Sparc SuperCluster machines than IBM is with its Smart Analytics System, DB2 PureScale database clusters and Netezza data warehousing appliances.

But as a relatively new player in the systems game after spending $7.4bn to acquire Sun Microsystems in January 2010, Oracle has to make a lot of noise if it wants to be heard.

Sun Microsystems failed as a volume x86 server player and Oracle knew it would have to take a different tack to compete against Big Blue in the systems business.

More than a year before the Sun deal was done, the HP Oracle Database Machine featured a storage server tuned for the Oracle database called Exadata. It set the stage for the current Exadata database clusters, the Exalogic application server clusters and their Sparc-based SuperCluster brethren.

The original HP-Oracle appliance had eight ProLiant server nodes to run a parallel implementation of Oracle's 11g database, glued together by Real Application Clusters (RAC) clustering software. This allows a database to be parallelised, giving it fault tolerance and scalability.

The Exadata storage nodes compress database files using a hybrid columnar algorithm so they take up less space and can be searched more quickly. They also run a chunk of the Oracle 11g code, pre-processing SQL queries on this compressed data before passing it off to the full-on 11g database nodes.
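
The idea of pre-processing a query on the storage node can be shown with a toy filter-and-project step. This is only a sketch of the general technique, not Oracle's code: the table, the column layout and the function names `storage_scan` and `database_node_total` are all made up for illustration.

```python
# Hypothetical rows held on a storage node: (order_id, region, amount).
ROWS = [
    (1, "EMEA", 120.0),
    (2, "APAC", 75.5),
    (3, "EMEA", 310.0),
    (4, "AMER", 42.0),
]


def storage_scan(rows, predicate, columns):
    """Filter and project on the storage node so only matching data is shipped."""
    return [tuple(row[i] for i in columns) for row in rows if predicate(row)]


def database_node_total(filtered):
    """The database node finishes the query on the already-reduced result."""
    return sum(amount for (amount,) in filtered)


# Roughly: SELECT SUM(amount) FROM orders WHERE region = 'EMEA'
shipped = storage_scan(ROWS, lambda row: row[1] == "EMEA", columns=(2,))
total = database_node_total(shipped)
print(f"{len(ROWS)} rows stored, {len(shipped)} shipped, total = {total}")
```

The point is simply that the database node sees two single-column rows rather than the whole table.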

The database and storage nodes in the HP-Oracle Database machine ran Linux and were linked together with a 20Gbps InfiniBand network.

Oracle sees the light

Then Oracle bought Sun and had a religious conversion to hardware. Specifically, it put its faith in Sun's Constellation server, with its faster InfiniBand networking and flash-accelerated server platforms.

And in September 2009, between the time it announced the Sun deal and the time it closed it, Oracle kicked out a revved-up line of machines, named Exadata after the storage servers at the heart of the system. They supply much of the system's oomph when running Oracle database workloads for online transaction processing (OLTP) or data warehousing.

Oracle has sold 1,000 of these Exadata machines to date and says it will sell another 3,000 before its fiscal 2012 ends in May.

There are currently two Exadata database appliances, and it is likely that Oracle will add another one at its 2012 OpenWorld customer event in San Francisco.

Exadata examined

The Exadata X2-2, launched in September 2010, is an upgrade of the first generation of Exadata machines based on Sun hardware, which was called the Exadata V2.

The Exadata X2-2 comes in quarter, half and full-rack configurations. It is based on two-socket server nodes using Intel's Xeon 5600 processors, while the Exadata X2-8, which also came out in September 2010, is based on Intel's Xeon 7500 processors.

The Exadata X2-8 database nodes have eight processor sockets and the Xeon 7500 processors have more cores per socket, as well as fatter main memories. This means the machines can run heavier database instances and rely less on RAC for scalability.

The Oracle Exadata X2-8 OLTP/BI clusterbox

A rack of Exadata X2-2 clusterage has eight Sun Fire X4170 servers, each with two six-core Xeon X5675 processors running at 3.06GHz, which serve as the 11g and RAC database nodes. Each node has 96GB of main memory (expandable to 144GB), four 300GB 10K RPM SAS disks, two QDR InfiniBand ports and four 10-Gigabit Ethernet ports.

The rack has three of Oracle's own 36-port QDR InfiniBand switches, which are used to cross-couple the database nodes and link them to the Exadata storage arrays. The Exadata units are based on the Sun Fire X4275 two-socket Xeon server with Oracle's own F20 FlashFire PCIe flash memory modules. Each of these has 96GB of flash capacity and plugs into PCI-Express 2.0 slots on the machines.

These X4275 servers have room for a dozen disks, and Oracle is offering two disk options: high-performance 600GB SAS disks that spin at 15K RPM, or high-capacity 2TB SAS drives that spin at 7.2K RPM.

The rack has 14 of these Exadata arrays, yielding 168 cores with 5.3TB of flash cache to chew through data before passing it on to the database nodes.
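
Working backwards from those rack totals gives the per-node picture. The sketch below uses only figures quoted in this article (14 storage servers, 168 cores, 5.3TB of flash, two sockets and 96GB flash cards); the assumption that "TB" means 1,000GB, and the rounding to whole cards, are ours.

```python
STORAGE_NODES = 14
TOTAL_CORES = 168
TOTAL_FLASH_TB = 5.3      # as quoted; assumed to be decimal terabytes
SOCKETS_PER_NODE = 2
FLASH_CARD_GB = 96

cores_per_node = TOTAL_CORES // STORAGE_NODES
cores_per_socket = cores_per_node // SOCKETS_PER_NODE

# 5.3TB is a rounded figure, so round to the nearest whole card.
flash_per_node_gb = TOTAL_FLASH_TB * 1000 / STORAGE_NODES
cards_per_node = round(flash_per_node_gb / FLASH_CARD_GB)
implied_total_gb = cards_per_node * FLASH_CARD_GB * STORAGE_NODES

print(f"{cores_per_node} cores per storage node ({cores_per_socket} per socket)")
print(f"{cards_per_node} flash cards per node, {implied_total_gb}GB per rack")
```

That works out to six-core processors and four flash cards in each storage server.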

The Exadata database nodes can run Oracle's Linux or the Solaris 11 Express development release, and presumably soon the Solaris 11 production release. The Exadata storage servers run Linux.

Oracle says the Exadata X2-2 has 25GBps of disk bandwidth using the faster disks and 75GBps of bandwidth coming out of those flash drives, which have been tuned to the 11g database to keep the hottest data on the flash rather than the disks.

The rack of Exadata X2-2 can load data at a rate of 12TB per hour and has 45TB of usable uncompressed capacity, delivering 50,000 disk I/O operations per second (IOPS), and up to 1.5 million out of the flash.

The high-capacity option delivers three times as much usable database capacity, but only half the disk IOPS. Up to eight racks of the Exadata X2-2 machines can be linked through InfiniBand switches, but it is not clear how well RAC scales across these clusters.

Count the cost

Whether customers choose the high-capacity or high-performance options, the price is the same: $1.1m per rack. Each disk drive in the Exadata storage server also requires a $10,000 software licence, which is $120,000 per storage node, and $1.68m across all 14 storage nodes.
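
The storage licence sum is easy to check. The sketch below uses the figures quoted above (a dozen disks per storage server, $10,000 per disk, 14 storage servers per rack); the function name is ours, and applying the same per-disk price to a smaller configuration is an assumption.

```python
DISKS_PER_STORAGE_NODE = 12
LICENCE_PER_DISK_USD = 10_000


def storage_licence_usd(storage_nodes):
    """List price of the Exadata storage software for a given number of storage servers."""
    return storage_nodes * DISKS_PER_STORAGE_NODE * LICENCE_PER_DISK_USD


per_node = storage_licence_usd(1)
full_rack = storage_licence_usd(14)
starter = storage_licence_usd(3)   # assumes the same per-disk price applies

print(f"per node: ${per_node:,}  full rack: ${full_rack:,}  three nodes: ${starter:,}")
```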

Oracle's database software is not included on this machine and neither is RAC. The 11g Enterprise Edition database costs $47,500 per core, with a 0.5 scaling factor down to $23,750, and RAC costs $23,000 per core, or half that with the scaling factor to $11,500. So with 96 cores total in the rack, the Exadata X2-2 will cost $4.47m at list price.

The Exadata X2-8 takes out the eight two-socket servers in the X2-2 full-rack configuration and replaces them with two eight-socket Sun Fire X4800 servers, announced in June 2010. These database nodes are equipped with Intel's Xeon X7560 processors, which have eight cores running at 2.27GHz.

The X4800s are configured with 1TB of main memory each, more than ten times as much main memory as in the X2-2 database nodes. The X4800 servers have eight 300GB SAS disks spinning at 10K RPM and eight QDR InfiniBand ports to link to the switches that hook into the Exadata storage nodes and to other nodes in the cluster.

Oracle is careful not to give performance benchmarks that compare the X2-2 to the X2-8, but obviously the feeds and speeds of the Exadata storage are the same. The X2-2 has 96 cores, compared with 128 for the X2-8, but the Xeon 5600 cores run faster than the Xeon 7500 cores.
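
In the absence of benchmarks, one very crude yardstick is aggregate clock speed, cores multiplied by gigahertz. It ignores cache, memory bandwidth, architectural differences and RAC overhead, so treat the sketch below as back-of-envelope arithmetic on the figures in this article and nothing more.

```python
def aggregate_ghz(nodes, sockets_per_node, cores_per_socket, clock_ghz):
    """Return (total cores, total cores x clock) for a set of database nodes."""
    cores = nodes * sockets_per_node * cores_per_socket
    return cores, cores * clock_ghz


# X2-2: eight two-socket nodes, six-core Xeon X5675 at 3.06GHz
x2_2_cores, x2_2_ghz = aggregate_ghz(8, 2, 6, 3.06)
# X2-8: two eight-socket nodes, eight-core Xeon X7560 at 2.27GHz
x2_8_cores, x2_8_ghz = aggregate_ghz(2, 8, 8, 2.27)

print(f"X2-2: {x2_2_cores} cores, {x2_2_ghz:.2f} aggregate GHz")
print(f"X2-8: {x2_8_cores} cores, {x2_8_ghz:.2f} aggregate GHz")
```

On that measure the two racks come out within about one per cent of each other, despite the X2-8 having a third more cores.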

But you have to take into account the overhead of using RAC. A two-node cluster should have less overhead than an eight-node cluster, and with 1TB of memory, you might be able to get an entire database in one node. In any event, you can glue as many as eight X2-8 racks together, and Oracle Linux and Solaris 11 Express are options for the database servers.

What we do know is that a rack of Exadata X2-8 costs $1.65m, 50 per cent more than a rack of the X2-2 machines. The 11g and RAC stack costs $2.26m across those 128 cores, which is 33 per cent more than the software on the X2-2 machines. The Exadata storage software costs the same.

All told, an X2-8 costs just a little more than $5.59m at list price, 25 per cent more than a configured rack of the X2-2 systems.
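
The X2-8 total can be rebuilt from the three components quoted in this article. The sketch below works in thousands of dollars to avoid floating-point noise, and takes the $4.47m X2-2 total as given rather than recomputing it.

```python
# All figures in thousands of US dollars, as quoted in the article.
X2_8_HARDWARE = 1_650
X2_2_HARDWARE = 1_100
STORAGE_SOFTWARE = 1_680      # same for both racks
X2_8_DB_AND_RAC = 2_260
X2_2_TOTAL = 4_470            # taken as quoted

x2_8_total = X2_8_HARDWARE + STORAGE_SOFTWARE + X2_8_DB_AND_RAC
hardware_premium_pct = round((X2_8_HARDWARE / X2_2_HARDWARE - 1) * 100)
total_premium_pct = round((x2_8_total / X2_2_TOTAL - 1) * 100)

print(f"X2-8 total: ${x2_8_total / 1000:.2f}m")
print(f"hardware premium: {hardware_premium_pct}%, total premium: {total_premium_pct}%")
```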

While Oracle is keen on pitching the Exadata machines as being suitable for either OLTP or data warehousing workloads, it is fair to assume that those running OLTP jobs will prefer the fatter database nodes, if only because they more closely resemble the fat SMP nodes most customers are used to.

No matter what nodes customers want, Oracle has been open about pricing and offers configurations such as two database servers and three Exadata storage servers to let companies start out small.

IBM embraces diversity

Unlike Oracle, which is pitching the Exadata machines as suitable for transaction processing or data warehousing, IBM has different machines for different purposes – and would very likely argue that its big System z and Power 795 SMP servers are better in many cases for OLTP than Oracle's Exadata clusters.

IBM does have a parallel implementation of its DB2 database for AIX, called PureScale, and has sold Parallel Sysplex for transaction processing on up to 32 mainframes in a cluster since 1994. It has sold a parallel system clustering technology for its AS/400 systems and DB2/400 database called DB2 Multisystem since 1995.

And in September 2011, IBM combined PureScale with highly tuned WebSphere middleware to create yet another variant of the parallel database called WebSphere Transaction Cluster Facility. This is aimed at the very intensive transaction processing environments – think reservation systems and financial processing systems – that used to be the domain of IBM's z/Transaction Processing Facility environment for mainframes.

IBM doesn't just do data warehousing one way, either. There are x86-based Netezza appliances with hardware-accelerated data chewing (akin to Oracle's Exadata), as well as the Smart Analytics System range, which are tuned versions of x86, Power or mainframe servers with InfoSphere Warehouse and Cognos analytics software all set up and pre-tuned for the machines.

DB2 PureScale is a feature of IBM's database, not a separate line of machines like Oracle’s; it is available only on Power Systems at the moment – and only running AIX.

There was talk in October 2009, when PureScale was announced as Oracle was Sunning up its Exadata clusters, that PureScale would be ported to Windows and Linux systems, but this has not happened. There is no way IBM will offer DB2 PureScale clustering on HP-UX or Solaris, although there is no technical reason why it couldn't.

Like Exadata clusters from Oracle, DB2 PureScale makes use of InfiniBand clustering to link multiple server nodes equipped with AIX and DB2 together.

The PureScale setup has a designated database access node, which functions like the head node in a parallel supercomputing cluster. It manages the locking of database fields as transactions are processed and the locking and unlocking of memory in all of the nodes in the cluster as they seek information from each other as part of the OLTP cranking.

The nodes are linked fairly tightly using the Remote Direct Memory Access features of InfiniBand, which means the processors are cut out of the networking stack, unlike TCP/IP clustering techniques. The central caching server is mirrored so it is not a single point of failure.
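
The shape of that design, one central lock table with a mirrored copy that can take over, can be sketched in a few lines. This is a toy, in-process model and not IBM's implementation: the class and method names are hypothetical, and it leaves out RDMA, memory caching and real failure detection.

```python
class LockServer:
    """One copy of the lock table: maps a record key to the node holding it."""

    def __init__(self, owners=None):
        self.owners = dict(owners or {})


class MirroredLockManager:
    """Central lock manager that writes every change to a primary and a mirror."""

    def __init__(self):
        self.primary = LockServer()
        self.mirror = LockServer()

    def acquire(self, key, node):
        owner = self.primary.owners.get(key)
        if owner is not None and owner != node:
            return False                      # another node holds the lock
        for server in (self.primary, self.mirror):
            server.owners[key] = node
        return True

    def release(self, key, node):
        if self.primary.owners.get(key) != node:
            return False                      # only the holder may release
        for server in (self.primary, self.mirror):
            del server.owners[key]
        return True

    def fail_over(self):
        """Promote the mirror and build a fresh mirror from its state."""
        self.primary = self.mirror
        self.mirror = LockServer(self.primary.owners)


mgr = MirroredLockManager()
first = mgr.acquire("row:42", "node-a")       # granted
blocked = mgr.acquire("row:42", "node-b")     # refused, node-a holds it
mgr.fail_over()                               # lose the primary
still_blocked = mgr.acquire("row:42", "node-b")
released = mgr.release("row:42", "node-a")
granted = mgr.acquire("row:42", "node-b")
print(first, blocked, still_blocked, released, granted)
```

Because every change lands on both copies, the lock held by `node-a` survives the failover.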

IBM says this PureScale approach cuts down on the inter-node communications that normally happen in a parallel database implementation. Also speeding up inter-node communications is the fact that PureScale makes use of the 12X remote I/O port on Power processors. This 12X I/O port is a variant of InfiniBand that IBM has tweaked to support remote I/O drawers crammed with disk controllers and disks or SSDs.

Netezza in the net

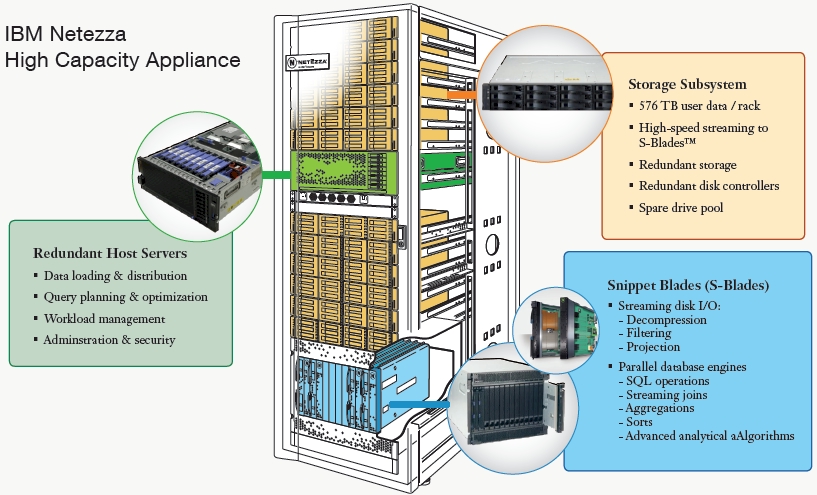

If you want to do data warehousing, IBM acquired Netezza in September 2010 for $1.7bn, and for good reason. Netezza is an upstart data warehouse appliance maker which has heavily customised the open source PostgreSQL database and created a field programmable gate array (FPGA) co-processor that does SQL pre-processing, much like Oracle does with the Exadata storage servers inside its Exadata clusters.

Not only that, the company built its TwinFin appliances on IBM's BladeCenter blade servers and looked to be getting just a bit too cosy with Japanese server maker NEC. IBM could not afford to let Netezza fall into the hands of competitors. It stands to reason that over the long haul, IBM will be able to take some of the techniques used in the Netezza appliances and apply them to its various parallel database systems.

The original Netezza appliances were based on Power architecture (and did not come from IBM, but one of its OEMs). The TwinFins, based on IBM's blades, came out in August 2009. They pair an HS22 two-socket Xeon 5500 blade with a co-processor blade which has eight FPGAs on it – one for each x86 core.

This combination is called an S-Blade; the FPGA speeds up the filtering of data moving off storage before being passed on to the PostgreSQL database, as well as doing complex sorting and joins of database tables and managing compression.

In the wake of the acquisition, IBM rebranded this parallel database machine the Netezza 1000. A single rack of the Netezza 1000 offers 12 of these S-Blades, which have a total of 96 x86 cores (the same as the Exadata X2-2). The machine has 32TB of usable data space uncompressed, and offers load rates of 3TB per hour and backup rates of 4TB per hour.

The HS22 blades run Red Hat Enterprise Linux 5.3, and IBM has tools to port databases from DB2, Informix, SQL Server, MySQL, Oracle, Teradata, Sybase and Red Brick databases to the Netezza variant of PostgreSQL.

The Netezza 1000 appliance can scale up to ten racks in a single image, two more racks than the Exadata appliances.

In June IBM fattened up the Netezza appliances with a C1000 machine, which has four S-Blades and a dozen disk enclosures in a rack with 144TB of uncompressed database space. (There are obviously a lot fewer processors to chew through this data per rack.)

The machine scales up to eight racks, offering 32 S-Blades with 256 cores and FPGAs and 1.15PB of user space. Netezza gets a little less than a 4:1 ratio with data compression, boosting that user capacity.
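
Those eight-rack totals follow directly from the per-rack figures. The sketch below uses numbers from this article (four S-Blades and 144TB per rack, eight x86 cores and eight FPGAs per S-Blade); treating 4:1 as a ceiling on compressed capacity is our reading of "a little less than".

```python
RACKS = 8
S_BLADES_PER_RACK = 4
CORES_PER_S_BLADE = 8        # one FPGA is paired with each x86 core
USER_TB_PER_RACK = 144
COMPRESSION_CEILING = 4      # the real ratio is a little under 4:1

s_blades = RACKS * S_BLADES_PER_RACK
cores = s_blades * CORES_PER_S_BLADE
user_tb = RACKS * USER_TB_PER_RACK
user_pb = user_tb / 1000
compressed_ceiling_tb = user_tb * COMPRESSION_CEILING

print(f"{s_blades} S-Blades, {cores} cores and FPGAs, {user_pb:.2f}PB of user space")
print(f"under {compressed_ceiling_tb:,}TB of data after compression")
```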

IBM charges about $2,500 per terabyte for the high-capacity Netezza appliances and about $10,000 per terabyte for the regular appliances. Disks are cheaper than CPUs and FPGAs.

The other IBM machines

While Oracle was busy eating Sun Microsystems and before IBM had acquired Netezza, IBM rolled out a line of parallel data warehousing and analytics machines called the Smart Analytics System.

The original machines were based on clusters of mid-range Power 550 servers configured with dual-core Power6 processors, 32GB of memory and Gigabit Ethernet switches from Juniper Networks linking the nodes together. Fibre Channel adapters linked out to shared DS5300 disk arrays, cross-coupled to four server nodes.

The server nodes in the original Smart Analytics System ran AIX 6.1 and IBM's General Parallel File System, as well as Tivoli System Automation to manage each node. One node in the cluster is equipped with Cognos 8 modules, including BI Server, Go Dashboard and BI Samples.

The other three machines are carved up into a dozen logical partitions that run IBM's InfoSphere Warehouse variant of DB2 V9.5 database and offer 12TB of user space. The setup could expand to 53 database nodes, supporting about 5,000 named users and offering 200TB of space in 19 racks.

In April 2010, IBM widened the Smart Analytics System fleet to include a Power7-based system cluster. It also added variants based on System x x86 servers and System z mainframes, while updating the underlying database to the DB2 V9.7 release and offering flash storage as an option to boost performance on I/O-heavy SQL processing.

The Smart Analytics System 5600 is based on IBM's System x3650 M3 server, a 2U rack server that has two Xeon 5600 series processors from Intel. IBM is plunking the six-core Xeon X5670 running at 3.33GHz into the server nodes, with 8GB, 32GB or 64GB memory options.

The machine can have up to 288GB of main memory and has room for 16 disks or SSDs. These server nodes are paired with IBM's DS3500 disk arrays. They have 24 x 2.5in media bays, can support up to 192 devices using add-on enclosures, and can attach to the servers through Fibre Channel or SAS adapters.

The server nodes run SUSE Linux Enterprise Server 11. The software stack includes the same InfoSphere Warehouse extensions to DB2 and Cognos 8 analytical tools as the original Smart Analytics System, and Fusion-io SSDs are offered as an alternative to disks for boosting IOPS.

IBM is also offering an x86 variant of the Smart Analytics System called the 5710, which is based on the System x3630 M3 server. This is a 2U rack server with 14 x 3.5in or 28 x 2.5in disks. The machine is based on 3.06GHz Xeon X5667 processors and maxes out at 192GB of main memory. So you can put more disks in this 5710 node but less memory than in the 5600 node.

The Smart Analytics System 7700, announced in October 2010, is an upgrade of the original cluster, and is based on IBM's entry Power 740 server announced in August 2010. The Power 740 is a two-socket server that can be equipped with Power7 processors with four, six or eight cores running at between 3.3GHz and 3.7GHz. The machine tops out at 256GB, but should be able to do 512GB once IBM supports 16GB DDR3 memory sticks in the box.

Depending on the model, you can have six or eight 2.5in 600GB SAS drives in the unit. This 7700 parallel system uses the same DS3500 external disk arrays and runs the InfoSphere Warehouse and Cognos 8 stack on AIX – interestingly, on AIX 6.1 still, not on the AIX 7.1 that was announced last year and tuned for Power7 iron.

IBM's Smart Analytics System 7700 DW/BI cluster

Finally, because IBM has to show love to its mainframe base, it also rolled out the Smart Analytics System 9600, which uses z/OS partitions on a System z mainframe as the database server and z/Linux partitions to run the Cognos analytics.

In a base configuration, the setup is based on the System z10 BC midrange mainframe. (It has not yet been updated to the System zEnterprise 114 announced in July with faster mainframe engines.)

The mainframe version of the Smartie box has two partitions on an entry BC-class mainframe, one running z/OS and DB2 and the other running Linux and the Cognos tools. The machine can run databases up to 100TB in size, and you can expand capacity with Parallel Sysplex clustering as needed. IBM tosses in some DS8700 external storage arrays to hold the data, and says that the 9600 box can support up to 10,000 users.

Pricing information was not announced for the Smart Analytics System 9600, but a rack of the 5600-class machines runs to $2.14m, while a 7700-class rack costs $4.49m. If you want to flesh out the 5600-class rack with Fusion-io SSDs, then you are in for $3.68m.

IBM is not yet offering SSDs on the Smartie 7700s, but probably will when the Power7+ processors come out.

Parallel universe: SuperCluster and Exalogic

These are not the only parallel machines in the Oracle and IBM arsenals. There are several other important ones, and no doubt more will come out from Big Larry and Big Blue, as well as their competitors, as customers seek to solve specific problems with their clusters.

Oracle can't ignore the Sparc/Solaris base, which doesn't want to run Linux and needs a Sparc platform on which to run Oracle 11g/RAC applications. In late September, ahead of its OpenWorld conference, Oracle rolled out a Sparc SuperCluster configuration, which marries its Sparc T4-4 quad-socket servers with the Exadata storage servers and some generic ZFS disk arrays.

The Sparc SuperCluster T4

Specifically, the Sparc SuperCluster rack has four of the Sparc T4-4 servers, each with four eight-core 3GHz Sparc T4 processors. Collectively, the four nodes, used to run Oracle 11g as well as application code, have 4TB of memory and, depending on disk options, between 97TB and 198TB of disk capacity.

The rack has multiple QDR InfiniBand switches and 8.66TB of flash in the Exadata storage arrays, yielding 1.2 million IOPS. There are also a couple of Oracle's ZFS Storage 7320 arrays in there. The database nodes can run Solaris 10 or Solaris 11. Prices for the SuperCluster were not divulged.

The other interesting engineered system from Oracle is the Exalogic Elastic Cloud, a machine tuned to run a virtualised implementation of Oracle's WebLogic application server. In a full-rack configuration, the Exalogic cluster has 30 1U rack servers with a total of 360 Xeon X5670 cores spinning at 2.93GHz.

Each node has 96GB of main memory, just like in the Exadata X2-2 nodes, and 40TB of external disk in the rack. QDR InfiniBand links the nodes together so they can share work and 10-Gigabit Ethernet switches link the app serving cluster to the outside world.

The secret sauce in the Exalogic cluster is called Coherence. As the name suggests, this is a piece of gridding software that allows those 30 servers to look like one giant app server to the outside world.

Coherence balances, synchronises, caches and partitions the app serving data and workloads under the covers.

Oracle is using its own implementation of Red Hat's Enterprise Linux, its riff on the Xen hypervisor and the JRockit virtual machine to run the WebLogic app server. (JRockit and WebLogic came to Oracle through, you guessed it, an acquisition.) Pricing for the Exalogic clustered app servers has not been announced.

Ask Dr Watson

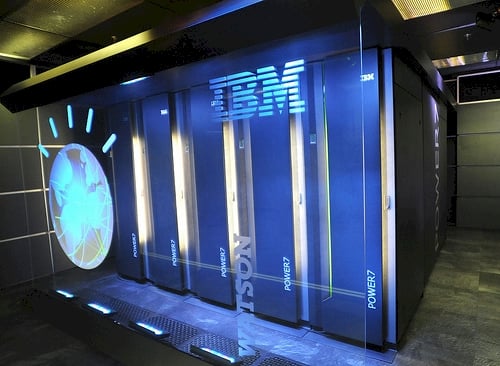

IBM deployed its initial Watson question-answer machine on a BlueGene massively parallel supercomputer, but decided to promote the then-new Power7-based Power 750 workhorse server by challenging humans on the US Jeopardy! game show.

Watson was as much a publicity stunt as it was science, and with all the money Big Blue put into the project it needed to get something tangible out of it besides a win for the propeller-heads at IBM Research at Yorktown Heights, New York.

IBM's Watson QA System

The Watson machine consists of ten racks of Power 750 servers with a total of 2,880 Power7 cores and 16TB of main memory.

Watson drew on that memory and the fast 10-Gigabit Ethernet switches from Juniper linking the nodes together, as well as the parallel nature of the DeepQA software stack that searched through databases to find keywords and give responses.

The machine obliterated Ken Jennings, who with 74 wins has held the top rank in the game the longest, and Brad Rutter, who racked up a record $3.25m on the show during his reign earlier in the decade.

DeepQA creates an in-memory database of text, based on the Apache Hadoop MapReduce algorithm and related HDFS file system created by Yahoo!, to mimic the search-engine operations of Google.

The stack also includes a bit of code called Unstructured Information Management Architecture (UIMA), a framework created by IBM database gurus back in 2005 to help them cope with unstructured information such as text, audio and video streams. The UIMA code performs the natural language processing that parses text and helps Watson figure out what a Jeopardy! clue is about.

IBM is working with doctors and researchers at Columbia University, speech-recognition experts at Nuance and insurance company Wellpoint to commercialise Watson as a medical expert system.

I saw the Watson machine in beta testing only weeks after it won the Jeopardy! show and when it was stuffed to the gills with medical journals and encyclopedias. And it did a pretty good job at differential diagnosis, even if it is still not quite as convincing as House. ®