Original URL: https://www.theregister.com/2011/11/01/calxeda_energycore_arm_server_chip/

Calxeda hurls EnergyCore ARM at server chip Goliaths

Another David takes aim at Xeon, Opteron

Posted in Channel, 1st November 2011 16:45 GMT

Calxeda, formerly known as Smooth-Stone in reference to the river rock that the mythical David used in his sling to slay Goliath, doesn't think the server racket can wait the next few years it will take for the 64-bit ARMv8 architecture (announced late last week) to be designed and tested.

And that is why Calxeda has spent the past several years tweaking the 32-bit ARMv7 core to come up with its own system-on-chip (SoC) and related interconnect fabric suitable for hyperscale parallel and distributed computing where nodes have only modest memory needs.

Today, Calxeda takes the wraps off its much-anticipated ARM server processor, which has been named EnergyCore because, like other ARM chips used in smartphones and tablets, it is focused on doing computing work with the least amount of energy possible. The idea is that by switching to ARM cores, Calxeda can do a unit of computing work while burning less juice than an x86 chip from Intel or Advanced Micro Devices, the Power chip from IBM, the Sparc T from Oracle, or the Itanium from Intel.

The EnergyCore ECX-1000 Series chips, as the first EnergyCores will be called, are based on a quad-core implementation of the Cortex-A9 design from ARM Holdings. But like other server implementations of ARM chips, such as the X-Gene announced last week by Applied Micro Circuits, there is a lot more to these chips than the cores.

There is a slew of other stuff on the chip, including a fabric interconnect and a management controller that would otherwise be an add-on to the system board. One big difference between the EnergyCore and X-Gene is that the latter is based on the 64-bit ARMv8 architecture and won't ship until the second half of next year if all goes well at Applied Micro. And that will be early silicon. It remains to be seen when server makers will pick up the X-Gene chip and actually get it into servers, and that might take until 2013.

Calxeda thinks there's money to be made now, and for some workloads, the EnergyCore chips are going to fit the power bill. "ARM does for the processor world what Linux did for the operating system world," Karl Freund, vice president of marketing at Calxeda, tells El Reg. "It opens up the chip market to a whole lot of innovation."

The ECX-1000 chips are implemented in a 40 nanometer process and are manufactured by Taiwan Semiconductor Manufacturing Corp, which seems to be the foundry of choice for server chip makers that don't have their own wafer baking facilities. Each Cortex-A9 core runs at 1.1GHz or 1.4GHz and includes a scalar floating point unit that can do single-precision or double-precision operations as well as a NEON SIMD media processing unit that has 64-bit and 128-bit registers and that can also do floating point ops.

The EnergyCore implements ARM's TrustZone security partitioning, just like other ARMv7 cores. Each core on the ECX-1000 chip has its own power domain, which means it can be switched on or off as needed to conserve power and reduce heat dissipation.

The Calxeda ECX-1000 ARM server chip

Each Cortex-A9 core in the ECX-1000 chip has 32KB of L1 data cache and 32KB of L1 instruction cache, plus a 4MB L2 cache that is shared across all four cores. These cores have an eight-stage pipeline and can do out-of-order execution, as is traditional for modern x86 and RISC processors.

Incidentally, the Cortex-A9 reference designs support from 16KB to 64KB of total L1 cache with up to 8MB of L2 cache spread across those cores, and clock speeds as high as 2GHz. The cores process 32-bit instructions but have a 64-bit data path to memory, plus another 8 bits of error-correcting code (ECC), for a total of 72 bits. The ECX-1000 has a DDR3 memory controller that can support regular 1.5-volt DDR3 main memory or 1.35-volt low-voltage DDR3 memory; 800MHz, 1.06GHz, and 1.33GHz memory speeds are supported.
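The 72-bit figure is 64 data bits plus 8 check bits. The article doesn't say which ECC code the controller uses; assuming the common SECDED (single-error-correct, double-error-detect) Hamming scheme, the check-bit count falls out of the Hamming bound in the sketch below. The same sketch also assumes the quoted memory speeds are DDR3 data rates (DDR3-800/1066/1333, in megatransfers per second) and works out peak bandwidth over the 64 data bits. Function names are illustrative.

```python
def secded_check_bits(data_bits: int) -> int:
    """Check bits for a SECDED Hamming code (assumed scheme) over `data_bits`.

    Single-error correction needs the smallest r with 2**r >= data_bits + r + 1;
    one extra overall-parity bit adds double-error detection.
    """
    r = 0
    while 2 ** r < data_bits + r + 1:
        r += 1
    return r + 1  # +1 overall parity bit for double-error detection


def ddr3_peak_gb_per_s(mega_transfers_per_s: float, bus_bits: int = 64) -> float:
    """Peak GB/s: transfers per second times bytes per transfer.

    Assumes the quoted speeds are DDR3 data rates in MT/s and counts only
    the 64 data bits, not the 8 ECC bits.
    """
    return mega_transfers_per_s * (bus_bits // 8) / 1000


if __name__ == "__main__":
    check = secded_check_bits(64)
    print(f"64 data bits + {check} check bits = {64 + check}-bit word")
    for rate in (800, 1066, 1333):
        print(f"DDR3-{rate}: {ddr3_peak_gb_per_s(rate):.2f} GB/s peak")
```

For 64 data bits the loop stops at r = 7 (128 >= 72), so SECDED needs 8 check bits, which matches the 72-bit path; at DDR3-1333 the data path peaks at roughly 10.7GB/sec.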

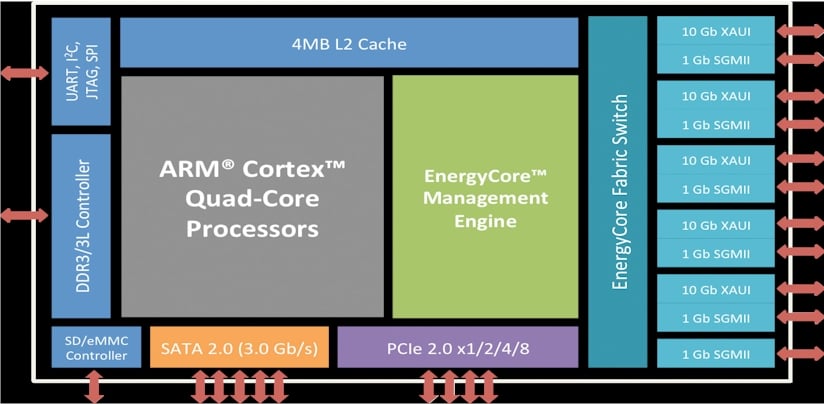

Here's a block diagram of the other goodies that are baked into the ECX-1000 ARM chips:

The ECX-1000 has four PCI-Express 2.0 controllers and one PCI-Express 1.0 controller, which together can support up to two x8 links and four x1, x2, or x4 links. It also has an integrated Secure Digital/embedded MultiMediaCard (SD/eMMC) controller on the chip. The chip also has a SATA 2.0 controller capable of supporting up to five 3Gb/sec ports; older 1.5Gb/sec SATA devices work with this controller, but it does not support the newer 6Gb/sec rate.
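The article lists link counts but not throughput. As a rough sketch, using line rates from the PCI-Express and SATA specifications (not Calxeda figures) and the fact that PCIe 1.x/2.0 and SATA at 1.5Gb/sec and 3Gb/sec all use 8b/10b line coding, the per-direction payload ceiling is easy to work out. Packet and protocol overhead, which lowers real-world numbers further, is ignored, and the constant and function names are illustrative.

```python
# Line rates in gigatransfers/s per lane, per the PCIe and SATA specs.
# PCIe 1.x, PCIe 2.0 and SATA 1.5/3Gb/s all use 8b/10b line coding,
# so every payload byte costs 10 bits on the wire.
PCIE_GT_PER_LANE = {"1.0": 2.5, "2.0": 5.0}
SATA2_GBPS = 3.0


def payload_mb_per_s(line_rate_gt: float, lanes: int = 1) -> float:
    """Usable MB/s per direction after 8b/10b coding (ignores packet overhead)."""
    return line_rate_gt * 1000 * lanes / 10


if __name__ == "__main__":
    x8 = payload_mb_per_s(PCIE_GT_PER_LANE["2.0"], lanes=8)
    gen1_lane = payload_mb_per_s(PCIE_GT_PER_LANE["1.0"])
    sata = payload_mb_per_s(SATA2_GBPS) * 5  # five 3Gb/sec ports
    print(f"PCIe 2.0 x8: {x8:.0f} MB/s each way")
    print(f"PCIe 1.0, per lane: {gen1_lane:.0f} MB/s each way")
    print(f"5 x SATA 3Gb/sec: {sata:.0f} MB/s aggregate")
```

Dividing the line rate by 10 rather than 8 is the whole trick: 8b/10b spends two extra wire bits per byte for DC balance and clock recovery.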

The ECX-1000 also sports what Calxeda calls the EnergyCore Management Engine, which is akin to the baseboard management controller (BMC) on a server motherboard that provides out-of-band management for the processors and peripherals in the system. According to Freund, these on-board BMCs burn anywhere from one to four watts of juice and have an average manufacturing cost of $28 per node, so integrating this function on the chip cuts both power consumption and costs for large-scale server clusters.
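To put Freund's per-node BMC figures in cluster terms, here is a back-of-the-envelope sketch. It assumes one discrete BMC per node and, as an idealization, that the on-chip management engine adds no power or cost of its own; the 4,096-node cluster size is the fabric's stated maximum, used here only as an example.

```python
# Per-node figures for a discrete BMC, as quoted by Calxeda.
BMC_WATTS_RANGE = (1.0, 4.0)
BMC_COST_USD = 28


def bmc_savings(nodes: int) -> tuple[float, float, int]:
    """Return (min watts, max watts, dollars) saved by dropping discrete BMCs.

    Idealized: assumes one BMC per node and that the integrated management
    engine itself costs nothing extra in power or silicon.
    """
    low, high = BMC_WATTS_RANGE
    return low * nodes, high * nodes, BMC_COST_USD * nodes


if __name__ == "__main__":
    watts_lo, watts_hi, dollars = bmc_savings(4096)  # example: a full 4,096-chip cluster
    print(f"{watts_lo / 1000:.1f}-{watts_hi / 1000:.1f} kW and ${dollars:,} saved")
```

At 4,096 nodes that comes to roughly 4.1kW to 16.4kW and $114,688 in BMC hardware, before counting the cooling needed for that extra heat.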

This integrated management controller manages secure booting for the ARM cores, supports the IPMI 2.0 and DCMI management protocols, does dynamic power management and power capping, and provides a remote console over the serial-over-LAN (hilariously abbreviated SoL) protocol.

This on-chip control freak has one other very important job: configuring and optimizing the bandwidth allocated between the ECX-1000 cores and the EnergyCore Fabric Switch that is also etched onto the chips. Yup, not only is the ECX-1000 a quad-core chip with integrated controllers, it has its own switches. Bitch.

Weaving a fabric from on-chip switches

The EnergyCore Fabric Switch is an 8x8 crossbar switch with 80Gb/sec of bandwidth. The switch links out to five 10Gb/sec XAUI ports and six 1Gb/sec SGMII ports that are multiplexed with the XAUI ports. The fabric switch can provide three 10 Gigabit Ethernet MACs and has IEEE 802.1Q VLAN support on those ports.
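For readers who haven't poked at VLAN tagging, the sketch below shows the standard 4-byte IEEE 802.1Q tag that a switch inserts after a frame's source MAC address: a 0x8100 tag protocol identifier followed by 3 bits of priority (PCP), 1 drop-eligible bit (DEI) and a 12-bit VLAN ID. It illustrates the standard tag format only; it is not Calxeda's implementation, and the function names are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag (TPID + tag control information)."""
    if not 1 <= vid <= 4094:  # 0 means priority-tagged only; 4095 is reserved
        raise ValueError("VLAN ID must be 1..4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits, DEI is 1 bit")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)  # network (big-endian) order


def parse_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1


if __name__ == "__main__":
    tag = vlan_tag(100, pcp=5)
    print(tag.hex())            # 8100a064
    print(parse_vlan_tag(tag))  # (100, 5, 0)
```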

The EnergyCore Fabric Switch doesn't just link the chip to the outside network through virtual ports; it also links ECX-1000 chips to each other in a cluster. It is, in effect, a distributed Layer-2 switch.

The fabric switch on each ECX-1000 chip has three internal 10Gb/sec channels for linking to three adjacent chips on a single system board, plus five external 10Gb/sec channels for linking out to adjacent nodes in a complete system.

With those five external channels, the fabric switch can implement mesh, fat tree, butterfly tree, and 2D torus interconnections of system nodes. Using those five links, the switch fabric can hop from node to node in under 200 nanoseconds, according to Freund, and the five external 10Gb/sec virtual ports coming out of each switch can lash together up to 4,096 ECX-1000 chips into a cluster.
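Freund gives a per-hop figure but no layout for a maximal cluster. As a hypothetical example, if 4,096 chips were wired as a 64 x 64 2D torus (one of the topologies listed), the worst-case path length and a rough switching-latency bound follow directly. The grid shape and function names are assumptions, and the bound counts only per-hop switch latency, not serialization or software overhead.

```python
def torus_hops(a: tuple[int, int], b: tuple[int, int], dims: tuple[int, int]) -> int:
    """Minimal hop count between two nodes on a 2D torus (links wrap around)."""
    hops = 0
    for ai, bi, size in zip(a, b, dims):
        d = abs(ai - bi) % size
        hops += min(d, size - d)  # go the short way around each ring
    return hops


def worst_case_hops(dims: tuple[int, int]) -> int:
    """Diameter of a 2D torus: half of each ring, rounded down."""
    return sum(size // 2 for size in dims)


if __name__ == "__main__":
    DIMS = (64, 64)  # hypothetical layout: 64 x 64 = 4,096 chips
    HOP_NS = 200     # Freund's "under 200 nanoseconds" per hop, used as an upper bound
    diameter = worst_case_hops(DIMS)
    print(f"diameter: {diameter} hops, under {diameter * HOP_NS / 1000:.1f} us")
    print(torus_hops((0, 0), (63, 10), DIMS))  # 11: 1 hop via wraparound + 10 hops
```

The wraparound links are what keep the worst case at 64 hops (under 12.8 microseconds of switching) instead of the 126 a plain 64 x 64 mesh would need.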

Calxeda is not trying to do cache coherency over one to four ECX-1000 sockets on a system board or across the 1,024 possible system boards that the integrated fabric switch scales to. And it is not particularly worried about latencies as parallel workloads pass data around this switch fabric.

"If you look at the workloads we are aiming at, they are not latency sensitive," says Freund. This includes offline analytics like MapReduce big data chewing, Web applications, middleware and Memcached, and storage and file serving. It would be interesting to see how a network of these puppies runs a shared-nothing database cluster.

The topology of the EnergyCore Fabric Switch can be changed on the fly from one style to another, and the settings are stored in flash memory on the chip package. The fabric switch can dynamically allocate bandwidth in virtual pipes of 1Gb/sec, 2.5Gb/sec, 5Gb/sec and 10Gb/sec, and it presents two Ethernet ports to the operating system.
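Calxeda doesn't describe how the switch chooses pipe sizes. One plausible policy, sketched here purely as an assumption, rounds each flow's requested bandwidth up to the smallest supported pipe and rejects any set of pipes that would oversubscribe a single 10Gb/sec link. The flow names and functions are hypothetical.

```python
PIPE_SIZES_GBPS = (1.0, 2.5, 5.0, 10.0)  # virtual pipe sizes named by Calxeda
LINK_GBPS = 10.0                          # one 10Gb/sec fabric link


def pipe_for(request_gbps: float) -> float:
    """Smallest supported pipe that covers the requested bandwidth."""
    for size in PIPE_SIZES_GBPS:
        if request_gbps <= size:
            return size
    raise ValueError(f"{request_gbps} Gb/s exceeds the largest pipe")


def allocate(requests: dict[str, float]) -> dict[str, float]:
    """Assign a pipe to each flow; reject the set if it oversubscribes the link."""
    pipes = {name: pipe_for(gbps) for name, gbps in requests.items()}
    if sum(pipes.values()) > LINK_GBPS:
        raise ValueError("requested pipes oversubscribe the 10Gb/sec link")
    return pipes


if __name__ == "__main__":
    print(allocate({"storage": 4.2, "web": 1.8, "mgmt": 0.3}))
    # {'storage': 5.0, 'web': 2.5, 'mgmt': 1.0}
```

Rounding up to a few fixed sizes wastes some headroom (8.5Gb/sec reserved for 6.3Gb/sec requested here) but keeps the bookkeeping simple enough for hardware.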

The idea is to use the on-chip switch to eliminate the top-of-rack Layer 2 switch that is typically used in clusters these days. While it is possible to build a cluster of 4,096 ECX-1000 chips by using 10Gb/sec XAUI cables and the four ports coming off the Calxeda board to cross-link them all, Freund says that most companies will put two real 10GE switches in a rack, use them like end-of-row switches, and lash together only 72 four-socket nodes (about half a rack of servers) with the integrated fabric.

The other important thing about the EnergyCore Fabric Switch is that it has dynamic routing, which means it can route around congestion in a network of nodes and, in conjunction with the management controller, optimize operations for latency or reduced power consumption – or boosted power consumption if you have some work that needs to run faster.
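Calxeda doesn't say how its dynamic routing works. A common textbook approach to steering around congestion is to treat current link load as a cost and recompute least-cost paths; the sketch below does that with Dijkstra's algorithm over a tiny hypothetical four-node fabric with directed links. It is a generic stand-in, not the EnergyCore algorithm, and all names are illustrative.

```python
import heapq


def route(links: dict[str, dict[str, float]], src: str, dst: str) -> list[str]:
    """Least-cost path via Dijkstra; link weights encode current congestion."""
    dist = {src: 0.0}
    prev: dict[str, str] = {}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry superseded by a cheaper path
        for nbr, cost in links.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        raise ValueError("no path")
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    # Hypothetical fabric: weight = base hop cost plus a congestion penalty.
    fabric = {"A": {"B": 1.0, "C": 1.0}, "B": {"D": 1.0}, "C": {"D": 1.0}}
    print(route(fabric, "A", "D"))  # ['A', 'B', 'D']
    fabric["B"]["D"] = 5.0          # link B->D becomes congested
    print(route(fabric, "A", "D"))  # ['A', 'C', 'D']
```

Swapping the weights for per-link power cost instead of congestion would give the power-optimizing mode the article mentions, under the same caveats.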

Aiming at microservers as well as clusters

Calxeda plans to offer a 1.1GHz two-core chip with most of those switching features turned off, as well as a 1.1GHz version with four cores and everything activated. The plan also calls for a clock speed ramp to 1.4GHz on the four-core version in the ECX-1000 line. The two-core version will be aimed at microservers, with their modest I/O and small scalability requirements per node, and Freund says that the two-core variant of the ECX-1000 chip will burn only 1.5 watts per socket, and a stick of low-voltage DDR3 memory will add another 1.26 watts.

The full-on four-core version running at 1.4GHz with all the features turned on and its memory will consume under 5 watts. That four-core version of the chip, by the way, burns 3.8 watts at the full 1.4GHz speed and can idle at under a half watt. That's 2 watts for the four cores and 4MB of L2 cache, 1.5 watts for the fabric switch, management engine, I/O and network controllers, and one 1Gb/sec Ethernet link running, plus 1.26 watts for that single low-volt DDR3 4GB memory stick.
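The per-node figures above can be tallied directly. This sketch simply sums the component numbers Freund quotes for the full quad-core node and for the two-core microserver part, then scales the quad-core node to the four-node EnergyCard reference board; the constant and function names are illustrative.

```python
# Per-node power figures quoted by Freund, in watts.
CORES_AND_L2 = 2.0     # four Cortex-A9 cores plus 4MB L2 at 1.4GHz
UNCORE = 1.5           # fabric switch, management engine, I/O, one 1Gb/sec link
DDR3_LV_4GB = 1.26     # one low-voltage DDR3 4GB stick
TWO_CORE_SOC = 1.5     # two-core microserver variant, per socket
NODES_PER_CARD = 4     # the four-node EnergyCard reference board


def node_watts(*parts: float) -> float:
    """Sum component power draws, rounded to two decimal places."""
    return round(sum(parts), 2)


if __name__ == "__main__":
    quad = node_watts(CORES_AND_L2, UNCORE, DDR3_LV_4GB)
    dual = node_watts(TWO_CORE_SOC, DDR3_LV_4GB)
    card = node_watts(*[quad] * NODES_PER_CARD)
    print(f"quad-core node: {quad} W")  # 4.76 W, under the 5 W claim
    print(f"two-core node: {dual} W")   # 2.76 W
    print(f"EnergyCard: {card} W")      # 19.04 W for four quad-core nodes
```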

The ARM cores have 14 power states and can come out of a very low power state in microseconds, which is useful as workloads change.

To get server makers started – and to get them excited about the possibilities of building servers with the ECX-1000 chips – Calxeda has put together a four-node server reference card, as shown below:

This board has one memory slot per processor socket, and the 32-bit machine tops out at 4GB of memory. The board has extra SATA ports, but only the four on the right are activated at this time. The boards have two PCI-Express-style connectors running along the bottom, which is how the servers draw their power and implement their fabric interconnect.

In this implementation, the reference design, called the EnergyCard, has four 10Gb/sec links running into the system board. You can do four complete servers for 20 watts on a board that is 10 inches long.

It's hard to imagine server makers won’t be lining up to get their hands on these EnergyCards. And if they want to start selling them right away, Calxeda is good with that, too. The chips will be able to run Canonical's Ubuntu and Red Hat's Fedora Linuxes to start; Windows Server 8 could eventually get there if Microsoft gets interested. (So far, it has made no commitments, even with Windows 8 for clients and mobile phones getting an ARM port.)

The ECX-1000 chips will sample in late 2011, right on time, and volume shipments of the chips will start in the middle of 2012. That is about when Applied Micro will begin sampling its 64-bit X-Gene chip, which has its own crossbar switch but one that implements up to 128-way symmetric multiprocessing across 64 of its two-core chips.

It will be interesting to see how these two ARM server chips compete against each other as well as against other RISC chips and Intel Xeon and AMD Opteron processors. And remember, graphics chip maker Nvidia, which sells ARM-based SoCs for smartphones and tablets, has also promised ARM chips of its own design for PCs and servers. Thank heavens for a little competition to keep Intel and AMD honest. Or whatever you might call it. ®