Original URL: https://www.theregister.com/2011/10/31/expert_clinic_6/

Building cloud-optimised networks

Combining fabric infrastructure, operational nous, and service delivery models

Posted in Networks, 31st October 2011 11:45 GMT

Expert Clinic Three experts look at building cloud-optimised networks from the operational point of view, a fabric-based infrastructure point of view, and a service delivery model point of view.

Each viewpoint is valid, different, and not enough on its own. Taking the three together gives us an idea of the complexity of cloud undertakings. Building and operating cloud-optimised networks is surely not a simple exercise.

First off, Freeform Dynamics' Andrew Buss contrasts Private Clouds, with their relatively predictable workloads, with Public Clouds, where both growth and peak loads are unpredictable. One necessary way to cope is to prioritise important applications so that their service levels remain high throughout such events. It is, he asserts, vital to test out infrastructure changes before putting them into practice.

Andrew Buss - Service Director Freeform Dynamics

Cloud computing is all about providing services as reliably and transparently as possible to the end-user. One of the pivotal underpinnings of cloud is that applications are not set up on dedicated infrastructure, but instead are accessed as a service via communications networks such as the Internet.

When it comes to Private Cloud, the workloads and connectivity are typically architected and managed together with insight into both current needs and planned future growth strategy. This means there is a reasonable degree of predictability in setting up and managing services, although our research shows that getting it to run like clockwork still remains challenging for many.

Public Cloud, on the other hand, is another matter entirely. Customers may come and go unpredictably, as will the workloads they wish to run. This leads to three main issues that need to be addressed.

The first challenge is that of coping with growth through the addition of new customers. Adding incremental capacity needs to be as predictable and seamless as possible for networking, as well as for storage and compute, and must not require more people to manage unless there is a step change in what is being put in place. The end result, though, is that a Cloud provider can scale in only one direction, which is up. It is difficult to cope with an overall drop in demand without removing capacity that has been paid for. Therefore it is vital that effective use is made of the investment in infrastructure before adding more.

The second challenge is dealing with peaks of demand. Workloads are unpredictable, and can require significant additional capacity to cope if all workloads are to be catered for. Having a mix of customers so that individual peaks are spread out can alleviate some of this. It is also often easier for large-scale service providers to absorb the impact of peak loads due to their overall size and capacity.
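The effect of spreading individual peaks can be shown with a little arithmetic. The Python sketch below is illustrative only: the customer names and hourly demand figures are invented, and the function names are assumptions rather than anything Buss describes. It compares the capacity needed if every customer were provisioned for its own peak with the capacity needed when they share one pool.

```python
# Hypothetical hourly demand (arbitrary units) for three customers whose
# peaks fall at different hours. All figures are made up for illustration.
demand = {
    "customer_a": [2, 2, 9, 3, 2, 2],
    "customer_b": [2, 8, 2, 2, 3, 2],
    "customer_c": [3, 2, 2, 2, 2, 7],
}


def sum_of_peaks(profiles):
    """Capacity needed if each customer is provisioned for its own peak."""
    return sum(max(profile) for profile in profiles.values())


def peak_of_sum(profiles):
    """Capacity needed when all customers share a single pool."""
    return max(sum(hour) for hour in zip(*profiles.values()))


dedicated = sum_of_peaks(demand)  # 9 + 8 + 7 = 24
shared = peak_of_sum(demand)      # busiest hour in total is 9 + 2 + 2 = 13
```

With these invented figures the shared pool needs roughly half the capacity. The saving disappears if the customers' peaks coincide, which is why the mix of customers matters.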

To increase utilisation of the network and deal with peak demand while using networking equipment efficiently, it will generally be necessary to identify important workloads and allow them to run in preference to others. Other, less critical traffic or applications can be slowed or delayed. This will usually be accomplished through either service level agreements or tiering of services, where those paying for the privilege have priority of access.
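One way to picture tiered access is as a strict-priority queue. The following is a minimal Python sketch under stated assumptions: the tier names (`gold`, `silver`, `bronze`), the `TieredQueue` class and the `drain` helper are all hypothetical, and real networks enforce priority in switch and router queues rather than in application code.

```python
import heapq
import itertools

# Hypothetical service tiers: a lower rank is served first.
TIER_RANK = {"gold": 0, "silver": 1, "bronze": 2}


class TieredQueue:
    """Strict-priority queue: higher tiers first, FIFO within a tier."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps arrival order

    def submit(self, tier, job):
        heapq.heappush(self._heap, (TIER_RANK[tier], next(self._seq), job))

    def next_job(self):
        if not self._heap:
            return None
        _, _, job = heapq.heappop(self._heap)
        return job

    def __len__(self):
        return len(self._heap)


def drain(queue, capacity):
    """Serve at most `capacity` jobs this interval; the rest are delayed."""
    served = []
    while len(served) < capacity and len(queue):
        served.append(queue.next_job())
    return served


q = TieredQueue()
q.submit("bronze", "backup")
q.submit("gold", "payments")
q.submit("silver", "reports")
q.submit("gold", "checkout")
served = drain(q, 3)  # the bronze job waits for a later interval
```

Strict priority is the simplest policy, but it can starve the lowest tier under sustained load, so weighted schemes are often used instead.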

The third, and arguably most important, aspect of Public Cloud networking is not so much to do with the physical equipment, but more the change management involving people, policies and processes. In recent major service outages, such as those at Amazon AWS or Microsoft Office 365, it was not equipment failure that led to widespread and unexpected loss of service. Instead, it was making changes to the established setup that caused problems.

It is vital to understand service dependencies and test changes, even going so far as to simulate or model them where possible, before putting them into production. And it is just as important to be able to roll back changes where there is an impact on service quality. For this to be effective, there needs to be a measurement system in place that proactively monitors end-to-end service quality and can quickly flag when things are starting to veer away from normal limits, before the problem snowballs.
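As a rough illustration of flagging a departure from normal limits after a change, here is a minimal Python sketch. Everything in it is an assumption made for the example: the latency figures, the three-standard-deviation tolerance, the two-breach threshold and the function names. A production monitoring system would use far richer measurements.

```python
from statistics import mean, stdev


def out_of_limits(baseline, sample, tolerance=3.0):
    """True if `sample` lies more than `tolerance` standard deviations
    from the mean of the pre-change `baseline` measurements."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return sample != mu
    return abs(sample - mu) > tolerance * sigma


def should_roll_back(baseline, post_change, tolerance=3.0, max_breaches=2):
    """Recommend a rollback once enough post-change samples breach limits."""
    breaches = sum(out_of_limits(baseline, s, tolerance) for s in post_change)
    return breaches >= max_breaches


# Hypothetical end-to-end response times in milliseconds.
baseline_ms = [100, 102, 98, 101, 99, 100]
healthy_change = [101, 103, 99]
bad_change = [101, 140, 155]
```

Here `should_roll_back(baseline_ms, healthy_change)` is false and `should_roll_back(baseline_ms, bad_change)` is true. Requiring more than one breach is a design choice that avoids rolling back on a single noisy reading.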

Andrew Buss is the Service Director for Freeform Dynamics. He is responsible for managing the company’s services portfolio for both end user and vendor organisations, and has been an industry analyst since 2001.

For networking pre-sales manager Duncan Hughes, a cloud-optimised network is all about fabrics, using as a model the classic Fibre Channel storage area network fabric. He says fabrics are "self-aggregating, scale efficiently, and are lossless and deterministic." There also needs to be an Application Delivery Controller function to pool multiple client connections into one or more virtual machines and improve server efficiency. Building a cloud-optimised network infrastructure is do-able.

Duncan Hughes - Pre-Sales Engineering Manager at Brocade

The cloud-optimised network connects to virtualised resource pools and physical clients, servers, and storage using a single logical network. Ultimately, a cloud-optimised network can consolidate multiple physical networks onto a common physical infrastructure.

Ethernet fabrics and storage fabrics are implemented at Layer 2 to flatten the network, reducing capital and operating costs. Like storage fabrics before them, Ethernet fabrics are self-aggregating, scale efficiently, and are lossless and deterministic. In Ethernet fabrics, all switches are aware of all devices so virtual machine mobility does not require manual reconfiguration of the network. Finally, the fabric is extensible between data centers via core routers and Ethernet tunnels in the IP network. Virtual machines with their applications can now move across a server cluster “stretched” between the Private and Public Cloud data centres. Cluster traffic runs through the Ethernet tunnel. Storage traffic can also be tunneled over IP using the industry-standard Fibre Channel over IP (FCIP) protocol so that application data can be quickly replicated between Public and Private Cloud data centres.

In cloud computing, the compute and storage networks are Layer 2 fabrics: Ethernet fabrics for server clusters and storage fabrics for shared storage. This may be a good time to consider how the word “fabric” is used in this context. Fabrics have several important properties, including self-aggregation, self-healing, transparent internal topology, and equal-cost multipath routing at Layer 2. For the compute pool, Ethernet fabrics eliminate Spanning Tree Protocol (STP) and manual configuration of Inter-Switch Links (ISLs) and have self-aggregating ISLs (trunks) with load balancing and automatic link failover. They include distributed intelligence so that network policies and devices are known at every port. When virtual machines move across network ports, port configuration and policies are consistently applied to application traffic. Traffic in Ethernet fabrics is not restricted to “north-south” flows, which move up and down network tiers. Instead, Ethernet networks are flattened so they can efficiently span multiple server racks, reducing latency and avoiding congestion on ISLs.
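To make equal-cost multipath load balancing with link failover more concrete, here is a minimal Python sketch. It is not how any particular switch implements it: fabrics do this in hardware, and the function name `pick_link`, the ISL names and the choice of flow fields are assumptions made for the example. The idea is that each flow is hashed onto one of several equal-cost links, so packets of one flow stay in order while different flows spread across the links.

```python
import hashlib


def pick_link(flow, links):
    """Map a flow to one of several equal-cost links by hashing its
    identifying fields. The same flow always hashes to the same link
    for a given set of links."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(links)
    return links[index]


# Hypothetical ISLs between two switches and a hypothetical flow
# identified by source MAC, destination MAC and destination port.
links = ["isl-1", "isl-2", "isl-3", "isl-4"]
flow = ("00:1b:21:aa:00:01", "00:1b:21:bb:00:02", 443)

chosen = pick_link(flow, links)

# Failover: if a link goes down, flows are re-hashed over the survivors.
surviving = [link for link in links if link != "isl-2"]
rechosen = pick_link(flow, surviving)
```

A plain modulo hash such as this one can move many flows when the set of links changes. That is acceptable for a sketch, but it is one reason real implementations are more careful.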

The cloud-optimised network is designed to reduce cost, improve agility, and extend virtualization across the data center. A key enabling technology is the Ethernet fabric. Compared to classic hierarchical Ethernet networks, the Ethernet fabric delivers higher levels of performance, utilization, availability, and simplicity. For shared storage, the Fibre Channel storage fabric (SAN) has been an industry standard for more than a decade. With Ethernet fabrics, shared storage is extended to iSCSI and emerging FCoE storage. Virtual machines rely on shared storage networks for their mobility and storage fabrics are the most cost-effective solution whether the storage traffic relies on Fibre Channel or Ethernet for transport.

Client access to applications relies on TCP/IP, HTTP, and HTTPS, the last of which requires Secure Sockets Layer (SSL) to secure client connections to applications. An Application Delivery Controller (ADC) pools multiple client connections into one or more virtual machines hosting the virtualised web, application, and database servers. This reduces the workload on the virtual machines, resulting in improved server efficiency. An ADC also improves server efficiency via SSL offloading, traffic shaping, and load balancing of multiple client connections.
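The load-balancing part of an ADC can be sketched in a few lines of Python. The text above does not say which balancing policy is used, so the least-connections policy, the class name and the virtual machine names below are all assumptions chosen for illustration.

```python
class LeastConnectionsBalancer:
    """Send each new client connection to the server that currently
    holds the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server, in the order given.
        self.active = {server: 0 for server in servers}

    def assign(self):
        # On a tie, min() returns the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        if self.active[server] > 0:
            self.active[server] -= 1


balancer = LeastConnectionsBalancer(["vm-1", "vm-2", "vm-3"])
first_three = [balancer.assign() for _ in range(3)]  # one connection each
balancer.release("vm-2")                              # a client disconnects
next_server = balancer.assign()                       # vm-2 is now least loaded
```

Least-connections suits long-lived connections of uneven duration. Round-robin would be simpler where requests are short and uniform.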

In addition, built-in connection and traffic monitoring per application can integrate with virtual machine orchestration software, so virtual machines come online or go offline as client workloads change. To provide cost-efficient scalability, an ADC with fast hardware for scale-up and clustering for scale-out is preferred. Finally, load balancing can extend between data centers, directly supporting hybrid clouds, so that customer-owned data centres can use Public Cloud computing services to handle intermittent spikes in application workloads cost effectively.
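The link between connection monitoring and orchestration comes down to a sizing rule. The Python sketch below is a hypothetical example: the function name, the per-VM connection capacity and the minimum and maximum pool sizes are assumptions, and it does not represent the interface of any real orchestration product.

```python
import math


def vms_required(active_connections, connections_per_vm,
                 min_vms=1, max_vms=10):
    """Number of virtual machines to keep online for the current load,
    clamped to a minimum and maximum pool size."""
    needed = math.ceil(active_connections / connections_per_vm)
    return max(min_vms, min(max_vms, needed))


# Hypothetical capacity of 500 concurrent connections per virtual machine.
quiet = vms_required(0, 500)      # 1: the pool never drops below min_vms
busy = vms_required(1200, 500)    # 3: ceil(1200 / 500)
spike = vms_required(9000, 500)   # 10: 18 would be needed, capped at max_vms
```

In a hybrid cloud, load beyond the cap is the portion that would be sent to Public Cloud capacity.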

Duncan Hughes is a pre-sales Engineering Manager at Brocade, joining when Brocade acquired Foundry Networks, where he was also a systems engineering manager, having previously been at Anite Networks.

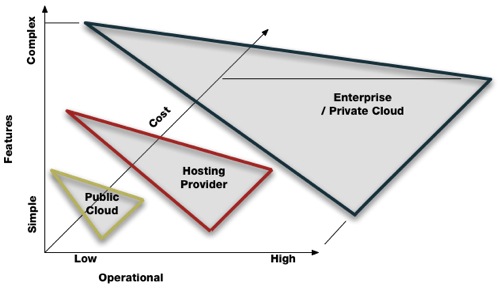

Our third expert, Greg Ferro, takes a different tack and looks at the differences in cloud networking complexity between public, restricted application clouds, then service provider clouds with a range of applications, and finally Private Clouds. He makes points about where the complexity lies in each that you might find pretty shrewd.

Greg Ferro - Network Architect and Senior Engineer/Designer

Cloud networking is a much abused term. For very large companies hosting mono-functional applications such as Facebook or Google, cloud networking usually means cheap, generic, dumb, volume and [hopefully] programmable networking hardware.

For Hosting Providers that are hosting a diverse range of applications, cloud networking is about features, functions and capabilities that allow for the hosting of diverse services and security models that separate customer data in shared systems.

For Enterprises, who are building networks for Private Clouds, the requirements are for complexity to support a wide range of applications, operational consistency, features and long term ownership costs.

If you map these requirements into a three dimensional graph, you get an interesting result.

Why this difference? Because the hyperscale clouds spend their money on software design that moves the complexity into their software. Since their core business values are in software development, it makes sense to use more software to solve scaling problems.

For platform providers, the focus is on delivering infrastructure for a wide range of possible software solutions. Therefore, the use of more infrastructure to offer more features and services is balanced against software automation to keep costs low.

Private Cloud in corporate networks won’t change much from today. A wide range of features is needed to handle complex solutions and support many applications with competing requirements, and that drives operational complexity.

The Private Cloud network has the largest surface area because it delivers the most capability, while the Public Cloud will barely use networking features at all as they drive into rapid change based on software development.

The result? Networking will continue to be a diverse and feature-rich market. Your choice of network strategy for your business will depend on your service delivery model and on how you weigh features, low cost and simplicity of operation. A low-cost, simple-to-operate network means that complexity has shifted into your software layer; it didn’t go away.

Greg Ferro describes himself as Human Infrastructure for Cisco and Data Networking. He works freelance as a Network Architect and Senior Engineer/Designer, mostly in the United Kingdom and previously in Asia Pacific region. He is currently focussing on Data Centre, Security and Application Networking technologies and spending a lot of time pondering design models, building operational excellence and creating business outcomes.

It's obvious that a reduced application Public Cloud is quite different from a Private Cloud. The need for fabric-style Ethernet is most deeply felt in Private Clouds and that is probably where Duncan Hughes' thoughts apply most strongly. But Andrew Buss' points apply to all three kinds of cloud because the things simply have to work and work all the time. To misquote Apollo 13's mission control chief: "Service delivery failure is not an option."

Unfortunately the recent history of cloud services has shown that not to be true. It is, we would submit, absolutely vital to combine operational points like those of Andrew Buss, and Ferro's service delivery characteristics with the fabric-orientated views of Duncan Hughes. The cloud is a combinatorial beast and has to be approached and operated that way. ®