Original URL: https://www.theregister.com/2011/06/19/xeon_e7_analysis/

Xeon E7 servers run with the big dogs

Gives chase to RISC and Itanium foxes

Posted in On-Prem, 19th June 2011 11:00 GMT

Deep Dive Intel has come a long way in the server racket, and the new "Westmere-EX" Xeon E7 processor, launched in April and making its way into systems now, is arguably its most sophisticated processor for servers to date.

The Xeon E7 processors cram ten cores onto a single die, but the Xeon E7 design is a bit more than taking an eight-core "Nehalem-EX" Xeon 7500 processor and cramming two more cores onto the chip.

This complete system design marries lots of cores and execution threads to big gobs of shared L3 cache and the high-bandwidth QuickPath Interconnect (QPI), allowing vendors to create machines that can, in theory, scale from 2 to 32 processor sockets in a single system image.

This kind of scalability is not available in systems based on current Xeon 5600 processors from Intel or those based on Opteron 6100 processors from AMD.

With their high core, thread, and socket counts, large memory capacity and bandwidth, and high I/O bandwidth, Xeon E7 systems rival all but the very biggest RISC/Itanium or mainframe systems. And Xeon E7 machines can do it running Linux or Windows, which have been tuned to scale across the cores, threads, and sockets.

Nehalem-EX chip architecture

With the "Nehalem-EX" Xeon 7500 processors announced in March 2010, Intel at last shifted its high-end chips away from the slow front side bus architecture.

For a long time this was a limitation in system scalability, requiring vendors to make their own chipsets and L4 caching mechanisms if they wanted to build machines with more than four sockets.

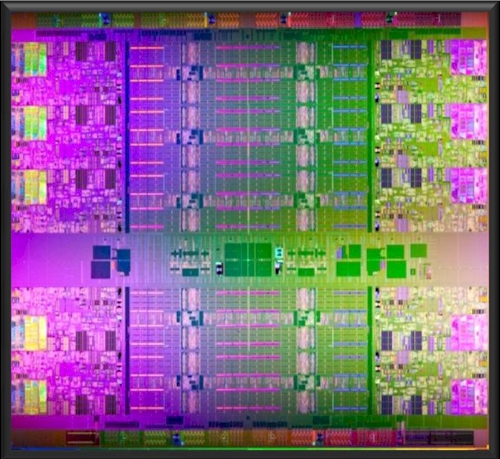

The Nehalem-EX processors also introduced a new chip architecture that put the processor cores on the outside of the chip and the shared L3 caches on the inside of the chip, linked by a super-fast ring interconnect.

This lets the cores share data more efficiently than prior designs, which had cores and their L3 caches cookie-cuttered every which way on the die. This L3 cache ring interconnect design with cores out is to be used in all new high-end Xeon and Itanium processors.

The Xeon E7 chip that came out this year was designed in Intel's Bangalore chip lab and is manufactured using the company's 32 nanometer processes.

The chip weighs in at a whopping 2.6 billion transistors – not much more than the 2.3 billion transistors in the prior Xeon 7500 chips. Those extra 300 million transistors were used to put two more cores onto the chip and to boost the aggregate L3 cache size by 25 per cent to 30MB.

Strangely enough, the shrink from the 45 nanometer processes used with the Xeon 7500 chips from 2010 and the 32 nanometer processes for the Xeon E7s in 2011 was not used to add more cores to the design, although it looks like the Xeon E7 was actually a twelve-core design with the bottom two cores chopped off, as you can see:

So why isn’t the Xeon E7 a twelve-core chip, as you might expect?

First, Linux and Windows and the several virtual machine hypervisors can only scale so far right now, so adding a lot more cores does not necessarily get Intel anywhere.

Moreover, as much as customers like more cores – especially in virtualized environments, where they tend to pin one virtual machine on one core for the sake of simplicity – there are plenty who want higher clock speeds.

So Intel used some of the shrink from 45 nanometers to 32 nanometers to boost the core count by 25 per cent and to boost the clock speeds by between 6 and 13 per cent.

And, the die size was also reduced – from 684 square millimeters for the Xeon 7500 to 513 square millimeters – and that means Intel can cram more chips on a single 300-millimeter wafer. That cuts wafer costs.

At the same time, a smaller die size increases Intel's Xeon E7 yields because, in theory, using a mature process (as the 32 nanometer wafer baking process is thanks to its ramp last year on PC chips) combined with a smaller die lowers the probability that some booger will screw up all or some of a particular Xeon E7 chip.
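To put rough numbers on the wafer argument, here is a back-of-the-envelope sketch. It uses a common textbook approximation for gross dies per wafer and a simple Poisson yield model; neither is Intel's own method, and the defect density is a made-up placeholder, so treat the output as illustrative only.

```python
import math

def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate die candidates on a round wafer, with a correction for edge loss."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a Poisson defect model."""
    die_area_cm2 = die_area_mm2 / 100.0
    return math.exp(-die_area_cm2 * defects_per_cm2)

# Hypothetical defect density; not a figure published by Intel.
ASSUMED_DEFECTS_PER_CM2 = 0.1

# Die sizes are the ones quoted in the text; the wafer is 300 millimeters.
for name, area_mm2 in [("Xeon 7500", 684), ("Xeon E7", 513)]:
    gross = gross_dies_per_wafer(300, area_mm2)
    good = gross * poisson_yield(area_mm2, ASSUMED_DEFECTS_PER_CM2)
    print(f"{name}: {gross} gross dies, roughly {good:.0f} good dies per wafer")
```

Whatever defect density you plug in, the smaller die wins twice: more candidates fit on the wafer, and each one presents a smaller target for a defect.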

When you add it all up, staying at ten cores instead of a dozen would make more money for Intel, particularly for workloads where HyperThreading can blunt some of the advantage that AMD has with its twelve-core Opteron 6100s, which do not support simultaneous multithreading.

As the code-name for the Xeon E7 processors suggests, the chip is based on the "Westmere" family of cores, a tweak of the Nehalem cores that adds a few features that are important for servers.

The first is the Trusted Execution Technology (TXT) feature, which Intel originally introduced on its vPro Core PC chips as a means of securing hypervisors running on PCs.

With TXT, the BIOS, firmware, and hypervisor of a machine are checked against a last-known-good configuration at boot time. If there is not a match, the BIOS, firmware, or hypervisor boot is halted until malware is removed from the system.

The Westmere class of chips also includes instructions for directly processing the AES algorithm that is commonly used to encrypt and decrypt data.

If you don't think this is a big deal, database giant Oracle did some tests with encrypted 11g R2 databases and has seen an order of magnitude performance increase for database encryption/decryption compared to doing it the hard way with the raw processor (as was the case with Nehalem and earlier families of Xeon server chips).

And, of course, the Xeon E7 processors support Intel's TurboBoost capability, which lets some cores run at a slightly higher clock speed in the event some of the cores are not doing any useful work and are put to sleep.

More reliable

There are the obligatory increases in reliability that make the Xeon architecture more like the Itanium processor, which had a lot of the machine check architecture (MCA) features that only high-end RISC and mainframe systems used to have.

With the Xeon E7s, for example, there is a double device data correction (DDDC) RAS feature, which allows a system to recover from two memory errors without crashing. This feature was supposed to come out in 2008 with the original "Tukwila" Itaniums.

The modified Tukwilas launched as the Itanium 9300s in March 2010. Intel said it has added 25 new RAS features to the Xeon E7s, many of them from Itanium chips.

The Westmere-EX chips also get new-and-improved "Millbrook" memory buffer chips, which allow a four-socket machine using Intel's "Boxboro" 7500 chipset to scale up to 2TB of main memory and a two-socket box to support up to 1TB.

This is double the memory that plain-vanilla Xeon 7500 systems, announced last year, could support – if you discount the memory extension technologies that Cisco Systems, IBM, and Dell added into their own designs. A beefier variant of the Millbrook buffer chip allows for 1.35 volt DDR3 memory to be used instead of normal 1.5 volt sticks.

Xeon E7 vs Itanium

So how do the Xeon E7s stack up to Itanium chips and their predecessors at the high end of the Xeon line? Answering the former is tough, but the latter is easy.

As far as Intel is concerned, Itanium has to fend for itself – and so does the Xeon architecture.

Skaugen: Xeon no longer bottom

Kirk Skaugen, general manager of Intel's Data Center Group, was blunt about it talking to investors in May 2011.

"When I picked up the Data Center Group, we made one strategic flaw and that was artificially protecting Itanium and not making Xeon 64-bit," he explained. "Going forward, if the Atom micro-architecture is the right architecture, we'll go do that."

Later in the talk, Skaugen said that the situation was "no longer Itanium at the top and Xeon at the bottom" and that the chip giant was thrilled to have 20 design wins already for the Xeon E7s in mission-critical systems, with machines with 8, 16, and 32 sockets in the works.

The instruction sets of Itanium and Xeon processors are so different that it is hard to make direct comparisons. But generally speaking, Xeons will have more cores and higher clock speeds at any given time, although that may not necessarily yield more throughput on back-end systems.

Not cheap as chips

At this point, top-bin Xeon E7 chips are considerably more expensive than Itanium parts; it seems likely that next year's eight-core "Poulson" Itaniums will be more expensive than the current Itanium 9300s.

Thanks to server virtualisation and supercomputer clustering, Intel has made several important changes in recent years at the high end of the Xeon line, driven by the need for more memory and more I/O bandwidth.

The Xeon 7400 processors using the old front side bus were designed solely for four-socket and eight-socket machines.

But for the Xeon 7500s launched in March 2010, Intel tweaked the Boxboro chipset to allow companies to make machines supporting two, four, or eight sockets gluelessly – meaning without having to resort to creating a unifying chipset to glue multiple motherboards together into a NUMA or SMP system. Intel did this at the request of server makers, which wanted to get fatter memory capacities on two-socket servers.

At the same time, Intel created a special variant of the Nehalem-EX processors called the Xeon 6500, which was aimed at high performance computing clusters, which only scaled across two server sockets, and which had slightly lower prices compared to their Xeon 7500 equivalents.

With the Xeon E7s, Intel has gone all the way and split the chip product line into three variants aimed at machines with differing numbers of sockets. The E7-28XX chips are aimed at two-socket servers and come in versions with 6, 8, or 10 active cores; prices range from $774 to $4,227 apiece when bought in 1,000-unit trays.

If you want to use a Westmere-EX chip in a four-socket server, then you need an E7-48XX chip, and clock-for-clock and core-for-core you are going to pay a slight premium for that (prices range from $890 to $4,394 for similar SKUs).

Now, if you want to build a box that has eight sockets, then you need to get an E7-88XX variant, which only comes with 8 or 10 cores and which has yet another price uplift. Call this the scalability tax.

Intel has not said if it is simply disabling QPI links on the chips or making good use of chips that have errors in some of their QPI links. We would like to think it was the latter and not the former, but it is probably a mix of the two.

In general, SKU for SKU, a Xeon E7 processor delivers about 40 per cent more performance than the Xeon 7500 processor it replaces. This is accomplished by a mixture of larger L3 cache, higher clock speeds, larger main memory capacity, and other tweaks.

CPU comparison table

Here is a table comparing the Xeon E7 processors to the current "Westmere-EP" Xeon 5600 processors for two-socket midrange servers as well as the new Xeon E3-1200 "Sandy Bridge-DT" processors for single-socket boxes:

The table above (also available as an XLS file here) shows the core and thread count for the chips (not all chips have HyperThreading), as well as their clock speed and amount of on-chip L3 cache memory. The table also shows which chips support TurboBoost and HyperThreading, the maximum power dissipation of the chip (thermal design point, or TDP), and price per chip when bought in 1,000-unit trays.

Cost per Oomph

We have also added another metric that is somewhat useful in comparing across the Xeon architectures called Cost Per Oomph. This metric takes the number of active cores and multiplies it by the clock speed and then divides it into the cost of the chip to give a relative price/performance metric. Cost Per Oomph ignores the effect of HyperThreading on relative performance and also the relative difference of theoretical instructions per cycle across the processor architectures.
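For anyone who wants to run the numbers themselves, the metric as described boils down to one line of arithmetic. This is a minimal sketch, not the spreadsheet behind the table. Pairing the ten-core 2.4GHz part with the $4,227 top-of-range price is an assumption, and the second chip's price is a pure placeholder rather than a quoted list price.

```python
def cost_per_oomph(price_usd: float, active_cores: int, clock_ghz: float) -> float:
    """Chip price divided by (active cores x clock speed); lower is better."""
    return price_usd / (active_cores * clock_ghz)

# Assumption: the ten-core, 2.4GHz two-socket part sits at the $4,227 top of its range.
e7_ten_core = cost_per_oomph(4227, active_cores=10, clock_ghz=2.4)

# Placeholder price for a six-core, 3.33GHz chip, used only to show the comparison.
six_core = cost_per_oomph(1500, active_cores=6, clock_ghz=3.33)

print(f"Ten-core 2.4GHz chip:  ${e7_ten_core:.2f} per core-GHz")
print(f"Six-core 3.33GHz chip: ${six_core:.2f} per core-GHz")
```

Because the metric counts only cores and clocks, a chip with HyperThreading or a fatter L3 cache gets no credit for either.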

Generally speaking, the Cost Per Oomph of the Xeon E7 processors is quite a bit higher than for the Xeon 5600s, and that is the price you pay for memory scalability and higher core counts. Unless you buy a Xeon 5600 system that has its own memory scaling electronics (as Cisco offers), you top out at either 6 or 9 memory slots per socket, or 192GB or 288GB per two-socket machine using 16GB memory sticks.

The Xeon E7s can support 16 slots per processor, and that works out to 512GB on a two-socket machine using 16GB sticks, or 256GB using somewhat cheaper 8GB sticks. Also, the Xeon E7s can support 32GB memory sticks, doubling up to 1TB of memory if need be across those 20 cores and 40 threads. In general, the Xeon 5600s have higher clock speeds but lower cache memory sizes.
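The memory ceilings quoted above are simple multiplication, and a short sketch makes it easy to try other stick sizes. The function name is ours, and the slot counts are the ones given in the text.

```python
def max_memory_gb(sockets: int, slots_per_socket: int, stick_gb: int) -> int:
    """Peak main memory for a box with every slot filled with same-size sticks."""
    return sockets * slots_per_socket * stick_gb

# Two-socket Xeon 5600 boxes with 6 or 9 slots per socket and 16GB sticks
print(max_memory_gb(2, 6, 16), max_memory_gb(2, 9, 16))

# Two-socket Xeon E7 boxes with 16 slots per socket and 8GB, 16GB, or 32GB sticks
for stick_gb in (8, 16, 32):
    print(f"{stick_gb}GB sticks: {max_memory_gb(2, 16, stick_gb)}GB")
```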

You have to pick your chips carefully based on the characteristics of the workloads. The good news is that the Xeon E7, Xeon 5600, and Xeon E3 chips are all absolutely compatible, although there may be some tuning required to get closer to that theoretical "oomph".

The Xeon E3-1200 chips, based on the Sandy Bridge cores, are just wickedly inexpensive compared to the Xeon E7s, but then again, they are limited to 2 or 4 cores and a single socket. (In the table, the models shown with the asterisk are Xeon E3-1200 chips with integrated HD Graphics GPUs and are really aimed at uniprocessor workstations rather than servers.)

A bunch of benchmarks: Xeon E7 systems vs. rest of world

Machines using the Xeon E7 processors are just getting into the field now, so the benchmark test results are a little thin. No one has published TPC-C online transaction processing test results using the Xeon E7 processors yet, but there are a few results for the SAP Sales & Distribution (SD) test, the SPECvirt_sc2010 server virtualization test, and the TPC-H data warehousing test. The TPC series of tests is important because it includes pricing as well as performance metrics.

Let's take a gander at the SAP SD test first, which is a two-tier (database and application tiers) test that simulates users logging into the sales module of the SAP ERP suite and running transactions.

IBM System x3850

On workhorse four-socket rack servers, IBM has run the SD test on its System x3850 with four of the ten-core Xeon E7-4870 processors (2.4GHz) running Microsoft's Windows Server 2008 R2 and its own DB2 9.7 database and was able to support 14,000 users with an average response time of 0.92 seconds at 99 per cent CPU utilization.

HP ProLiant BL680c G7

An HP ProLiant BL680c G7 blade server with the same processors and core count (40) was able to handle 13,550 users on the SD test, and an HP BL620c G7 blade with only two E7-2870 processors (also 2.4GHz and with a total of 20 cores) was able to handle 6,703 SD users with sub-second response at nearly full CPU utilization. (HP was running Windows and SQL Server 2008.) By comparison, a two-socket DL380 G7 using six-core Xeon X5680 processors running at 3.33GHz could handle 5,075 SD users running the Windows stack.

Clearly, the Xeon E7s have the performance advantage on two-socket boxes compared to their baby Westmere-EP brethren.

They also have the performance advantage over the current crop of Opteron processors. A ProLiant DL585 G7 with four of AMD's Opteron 6180SE processors running at 2.5GHz (that's a total of 48 cores) can support 9,450 users – about a third fewer than the four-socket Xeon E7 machine tested.

IBM Power 730, 750 and 780

The Xeon E7s have an advantage over some RISC/Unix machines tested as well. For instance, a two-socket Power 730 from IBM with a dozen 3.7 GHz Power7 cores total running SUSE Linux Enterprise Server 11 and DB2 9.7 was only able to handle 5,250 SD users. A Power 730 with two eight-core Power7 processors running at 3.55GHz running AIX 7.1 and DB2 9.7 was able to handle 8,704 SD users.

That brackets the performance of the two-socket Xeon E7 blade server above from HP, which supported 6,703 SD users. A four-socket Power 750 system – a workhorse box in the IBM Unix lineup – equipped with four eight-core Power7 chips running at 3.55GHz is able to handle 15,600 users. That's only an 11.4 per cent advantage over IBM's own four-socket System x3850 box using the Xeon E7s.

IBM can scale its Power 780 up to eight sockets and 64 cores running at 3.8GHz, supporting 37,000 SD users, which is significantly better than the eight-socket, 80-core HP ProLiant DL980 G7, which could only handle 25,160 SD users running the Windows stack. Windows and the Boxboro chipset are having trouble scaling linearly beyond four sockets. No Sparc or Itanium systems have been run through the SD gauntlet recently to make comparisons.
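One way to line up the SD results quoted above is to work out users per core and how well throughput holds up as sockets are added. The sketch below does only that arithmetic on the figures in the text. The machines ran different operating systems and databases, so this is a rough guide, not a like-for-like ranking, and the helper names are ours.

```python
# (system, sockets, cores, SD users) as quoted in the text
SD_RESULTS = [
    ("IBM System x3850, Xeon E7-4870", 4, 40, 14000),
    ("HP BL680c G7, Xeon E7-4870", 4, 40, 13550),
    ("HP BL620c G7, Xeon E7-2870", 2, 20, 6703),
    ("HP DL380 G7, Xeon X5680", 2, 12, 5075),
    ("HP DL585 G7, Opteron 6180SE", 4, 48, 9450),
    ("IBM Power 750, Power7", 4, 32, 15600),
    ("IBM Power 780, Power7", 8, 64, 37000),
    ("HP DL980 G7, Xeon E7", 8, 80, 25160),
]

def users_per_core(users: int, cores: int) -> float:
    """SD users supported per processor core."""
    return users / cores

def scaling_efficiency(small_users, small_sockets, big_users, big_sockets) -> float:
    """1.0 means throughput grew exactly in step with the socket count."""
    return (big_users / small_users) / (big_sockets / small_sockets)

for system, sockets, cores, users in SD_RESULTS:
    print(f"{system}: {users_per_core(users, cores):.0f} users per core")

# HP's four-socket blade versus its eight-socket DL980 G7, both on the Windows stack
print(f"{scaling_efficiency(13550, 4, 25160, 8):.1%} of linear scaling")
```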

The SPEC server virtualization test

The data in the SPEC server virtualization test is a little thin, but instructive just the same and illustrates the advantages that the Xeon E7s have over the Xeon 5600s for virtualized workloads.

IBM ran a BladeCenter HS22V server using Red Hat's KVM hypervisor through the SPECvirt_sc2010 test, which loads up machines with Java application serving, Web serving, and mail serving workloads into a "tile" running on a VM.

You scale up the workload by adding more tiles to the machine and measuring the aggregate throughput in a normalized rating figure. A blade with two Intel X5690 processors running at 3.46GHz attained a rating of 1,367 on the SPECvirt_sc2010 test supporting 84 virtual machines.

That blade had 288GB of main memory using 16GB sticks, so IBM was not holding back on the memory. IBM then took a two-socket Westmere-EX blade with two E7-4870 processors running the same Red Hat stack. (Yes, those are for four-socket machines, but IBM has its own eX5 chipset with MAX5 main memory extenders.)

With two of these E7 chips, which run at 2.4GHz, and 640GB of main memory (16 slots on the blade and another 24 on the MAX5 extender for using 16GB sticks), the BladeCenter HX5 blade from IBM was able to attain a rating of 2,144 on a total of 132 VMs across 20 cores. Those extra cores are balanced against extra memory, and hence the machine can support more VMs.
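The two IBM blades can be put side by side with a few ratios. This sketch uses only the figures quoted above, plus one assumption: the text does not give a core count for the X5690, so the 12 cores for the two-socket blade rest on the X5690 being a six-core part.

```python
def summarize(cores: int, rating: float, vms: int, memory_gb: int) -> dict:
    """Per-core and per-VM ratios for one SPECvirt_sc2010 result."""
    return {
        "rating_per_core": rating / cores,
        "vms_per_core": vms / cores,
        "memory_gb_per_vm": memory_gb / vms,
    }

# Assumption: two six-core X5690 chips, so 12 cores in the HS22V blade.
hs22v = summarize(cores=12, rating=1367, vms=84, memory_gb=288)

# HX5 blade: 16 slots on the blade plus 24 on the MAX5 extender, 16GB sticks.
hx5_memory_gb = (16 + 24) * 16
hx5 = summarize(cores=20, rating=2144, vms=132, memory_gb=hx5_memory_gb)

for name, result in (("HS22V, Xeon X5690", hs22v), ("HX5, Xeon E7-4870", hx5)):
    print(name, {key: round(value, 2) for key, value in result.items()})

print(f"HX5 rating relative to HS22V: {2144 / 1367:.2f}x")
```

On these numbers the E7 blade's edge comes from having more cores and more memory per VM, not from each core doing more work.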

This is one thing that is driving up server average selling prices in recent quarters, and this trend will continue. The reason is not just that you can put more VMs on a Xeon E7 or Opteron 6100 blade, but that you can, if need be, make a very fat VM for a heavy workload like a database or email server. (There are no RISC or Itanium systems tested as yet on the SPEC server virtualization tests.)

TPC-H

That leaves the TPC-H data warehousing benchmark. There are some comparisons that can be made between Sparc, Power, Itanium, and Xeon systems on the version of the test with ad hoc queries banging against simulated 1TB data warehouses.

Dell PowerEdge R910

Dell booted up Red Hat Enterprise Linux 6.0 and the VectorWise 1.6 database from Ingres onto a PowerEdge R910 server. This machine was equipped with four Xeon E7-8837 processors running at 2.67GHz, each with eight cores (not ten) fired up; the machine was equipped with 1TB of main memory and a mere 2.3TB of disk.

The box was able to process 436,789 queries per hour (QPH) and cost $384,935 after discounts, yielding a bang for the buck of 88 cents per QPH. IBM put the Windows stack on its System x3850 X5 with eight Xeon E7-8870 processors running at 2.4GHz, 2TB of memory, and nearly 7TB of flash drive capacity and was able to push 173,962 QPH at a cost of $1.37 per QPH after a 28.6 per cent discount.

Oracle Sparc Enterprise M8000

Last week, Oracle was bragging that a Sparc Enterprise M8000 server with 16 of the quad-core Sparc64-VII+ processors running at 3GHz was able to do 209,534 QPH – less than half of the query throughput of the Dell box.

And after discounts, the Oracle machine cost $2.1m, yielding a cost of $10.13 per QPH. The Oracle box was configured with Oracle 11g R2 Enterprise Edition and Solaris 10 (Update 8/11) and needed a whopping 47 per cent discount to get to that price. To be fair, Oracle only put 512GB of main memory on the box and had 11TB of disk. Maybe it should have added memory and cut back on disk.

IBM Power 780

IBM's Power 780 with eight of its eight-core Power7 chips running at 4.1GHz was also tested on the TPC-H test at the 1TB level. With 512GB of memory and just under 4TB of solid state disk, this Power Systems machine could handle 164,747 QPH running Red Hat Enterprise Linux 6.0 and the Sybase IQ database; after a 33 per cent discount, the machine cost $1.12m, yielding a cost of $6.85 per QPH.
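The dollars-per-QPH figures are just discounted system cost divided by throughput, which is easy to check. The sketch below redoes that division for the three systems where both numbers are quoted. Two of the costs are rounded in the text ($2.1m and $1.12m), so expect those results to land a little off the published $10.13 and $6.85.

```python
def cost_per_qph(system_cost_usd: float, queries_per_hour: float) -> float:
    """Price/performance: discounted system cost per query per hour."""
    return system_cost_usd / queries_per_hour

# (system, discounted cost in dollars, QPH) as quoted in the text
TPCH_1TB = [
    ("Dell PowerEdge R910", 384_935, 436_789),
    ("Oracle Sparc Enterprise M8000", 2_100_000, 209_534),  # cost rounded in the text
    ("IBM Power 780", 1_120_000, 164_747),                  # cost rounded in the text
]

for system, cost, qph in TPCH_1TB:
    print(f"{system}: ${cost_per_qph(cost, qph):.2f} per QPH")
```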

HP Integrity Superdome 2

An HP Integrity Superdome 2 server running HP-UX 11i v3 (the September 2010 update) and Oracle's 11g R2 Enterprise Edition was able to do 140,181 QPH at a cost of $12.15 per QPH after a 21.8 per cent discount. That Superdome 2 machine was configured with sixteen of Intel's four-core Itanium 9350 processors, which run at 1.73GHz and have 24MB of L3 cache. This test was run over a year ago, and HP didn't use flash to boost performance or cut down on disk drive costs. The Superdome 2 machine had 512GB of memory and 580 disks weighing in at 42TB.

If the TPC-H test reveals anything, it is that the choice of software can matter as much as hardware, and vendors have done everything in their power to avoid direct comparisons of different servers running the same database and operating system. ®