Original URL: https://www.theregister.com/2011/04/22/seti_goes_cloud_and_open_source/

ET, phone back: Alien quest seeks earthling coders

Open source joins Search for Extra Terrestrial Intelligence

Posted in SaaS, 22nd April 2011 22:08 GMT

It was the year of Star Wars and Close Encounters, and in the flatlands of Ohio, a man stumbled upon something possibly alien in origin.

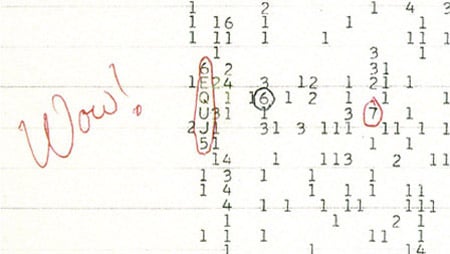

On August 17, 1977, Ohio State University astronomer Jerry Ehman was sitting at his kitchen table, poring over pages of printouts from the SETI Project's Big Ear radio telescope's computers. On these pages were line after line of numbers and letters. A cluster of six characters jumped out at Ehman and he circled them in red ink. Next to that circle he wrote: "Wow!".

Big Wow!: the printout of the signal from somewhere near Sagittarius

SETI stands for the Search for Extra Terrestrial Intelligence, and in the 34 years since Ehman's discovery astronomers have been agonizing over the precise meaning of what has become known as the "Wow! signal". It's non-terrestrial in origin, meaning it's not man-made and didn't originate from Earth. Wow! was traced back to a cluster of 100,000 densely packed stars in the Sagittarius constellation.

But was this just a noisy star, or a signal sent from a distant race? And if this was first contact, why did the sender transmit just once? The mystery is made worse by the "what if" factor: what if Ehman had received the data sooner, or if modern computers were available to crunch the data?

The data was three days old by the time Ehman spotted Wow!, meaning that if it were a message, the sender could have moved on for lack of a reply.

Such a delay wasn't unusual: it was standard practice to distribute Big Ear printouts once a week. Meanwhile, the kinds of computers Ehman might rely on today were still in the future: Steve Wozniak had only just built the Apple I and II. The most computing power SETI had at the time was an already 12-year-old IBM 1130 – powerful for its time but with limited memory and no GUI input.

SETI Institute's research director Jill Tarter, in a conversation with The Reg at SETICon in Santa Clara, California, last year, lamented the missed opportunity and the lack of computing power. "Back in 1977, the computers were just dumping numbers to paper readout that somebody collected every week," she told us.

And this isn't the only time that computers have let us down. Not so long ago, in 2003, people got in a plasma storm over whether a signal branded SHGb02+14, identified by the SETI@home project, was a promising candidate sent from the depths of space. SETI@home is the effort sponsored by the University of California at Berkeley that runs in the spare cycles of a distributed network of three million volunteers' PCs, crunching radio-telescope data.

"We had two signals that are unexplained because of the way they were gathered, and it was not possible to follow up on them," Tarter says of Wow! and SHGb02+14.

Thirty-four years after Wow!, the SETI Institute is getting serious. SETI has now finished a major overhaul of the hardware and software behind a massive expansion in research, taking the hunt for extra terrestrial intelligence through to 2020.

SETI has installed its first-ever, off-the-shelf, Intel-based servers – from Dell – in the belief that commodity machines are finally powerful enough to process in real time the huge quantities of data it receives from space. The data itself is being sucked down by a brand-new radio telescope array being built in northern California that was funded initially by Microsoft cofounder Paul Allen and called the Allen Telescope Array (ATA).

Building faster PCs

In a major shift of research culture, SETI is also opening up to outsiders, making the ATA's data available to the public, and posting data on the cloud – Amazon's cloud, to be precise. It began releasing ATA data to setiquest.org in February 2010.

Last month, SETI finished the job of open sourcing the code of its closed search program that runs on the Dell servers and that processes ATA's data. The next step is to invite coders outside of SETI to start building new search algorithms that could help its scientists find the next Wow!.

"We couldn't have done this five years ago or a year ago," Tarter tells us. "We are finally at the point where commodity servers are fast enough where we can throw away the custom signal processing gear we built." Yes, you read that right: SETI had custom-built its own systems in order to process radio-telescope signals from space, believing it could do a far better job than the brains in Palo Alto or Round Rock.

"Now, for the very first time, we can get the heck out of the cathedral," she tells us. "In the past [people outside SETI] couldn't help us because you needed to know the intricacies of the special-purpose hardware in order to develop code or do any work with us." Now you can.

Bigger, deeper, more data

The new systems, going open source and hopping on the cloud, all reinforce an expanded research remit intended to give SETI, founded in 1984, a roadmap to 2020. SETI's current mission – called Prelude – is the largest in the organization's history, and will require more space data to be processed in the hunt for the next Wow!. Prelude is searching the radio spectrum at 1.4 to 1.7GHz, 2.8 to 3.4GHz, and 4.6GHz. That compares to the prior Phoenix mission, which ran between 1995 and 2004 and searched the 1.2 to 3GHz range. Big Ear in 1977 scanned in the megahertz range: 8.4 million 0.05MHz channels.

ATA is scanning 30 million channels simultaneously for signals buried deep within the cosmic noise. It's also observing two stars at the same time to help rule out interference from terrestrial signals. That means ATA is now generating between 100TB and 200TB of data each day that scientists must comb through.
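To put that daily volume in terms of sustained throughput, here is a back-of-the-envelope sketch using only the 100TB and 200TB figures quoted above. It assumes decimal terabytes (1TB = 10^12 bytes), which the article does not specify, and the function name is purely illustrative.

```python
# Sustained data rate implied by 100TB to 200TB per day.
# Assumption: 1TB = 10**12 bytes (decimal terabytes).
SECONDS_PER_DAY = 24 * 60 * 60
BYTES_PER_TB = 10**12


def sustained_rate_gb_per_s(tb_per_day: float) -> float:
    """Average throughput in GB/s needed to keep up with tb_per_day."""
    return tb_per_day * BYTES_PER_TB / SECONDS_PER_DAY / 10**9


for tb in (100, 200):
    print(f"{tb}TB/day is about {sustained_rate_gb_per_s(tb):.2f} GB/s")
```

On those assumptions, the array would need to be served by hardware that can absorb somewhere between one and a bit over two gigabytes every second, around the clock.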

If SETI's plan pans out, there will be more data to sift. The goal is to scan between 1GHz and 10GHz – more than 13 billion channels each 0.7 Hz wide – and to do that for about one million stars. ATA will grow, too, from today's 42 telescopes to 350.

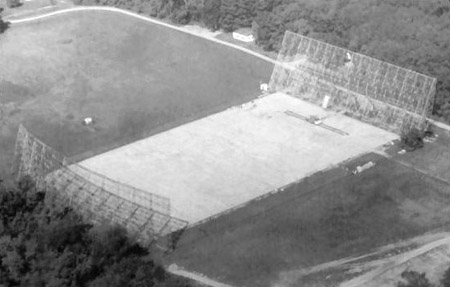

Another 308 to go: some of ATA's first 42 radio telescopes, funded by Paul Allen

The logic is simple: search more sky and you'll have more chances of finding something. "Suppose we are searching for the wrong kind of signal. We are doing a fantastic job in narrow-band signals but perhaps it's very wide-band, plus with lots of time structure," Tarter says.

"I'm not going to identify that with my narrow band detector. I'm much more likely to find something if I also look for broadband signals, complex signals, highly modular signals, noise-like signals. If we expand the volume of the search in the parameter space we are exploring, that's got to be better than only one type of signal."

More data means SETI needed faster real-time processing. During the era of Phoenix, SETI's white coats built their own PCs to process signals because the systems from Silicon Valley's server makers were deemed too slow.

SETI needed a system that could collect the radio frequencies from different telescopes, combine and process feeds, and scan and process data. To do this SETI built PCs using components it bought between 1997 and 1999. It had 32 machines running 1GHz Pentium IIIs and 2.66GHz Xeon chips, with 18.3GB hard drives and between 512MB and 1GB of DRAM. SETI added Motorola Blue Wave boards with one or two DSP chips for added horsepower, and customized circuit boards for specialized processing.

In the past, SETI researchers had used Digital Equipment Corp's PDP-11, and prior to that Big Ear ran the IBM 1130, a machine IBM started producing in 1965. Legend has it that their 1130 checked out prematurely and the SETI team was left hanging after a mouse built its nest in the machine's air intake, helping to burn out a hard drive. IBM couldn't guarantee that a repair could be successfully made.

Now, though, SETI has come up to date. It's thrown out the 32 machines it built and quit the OEM biz completely. In those old machines' place are 64 quad-core Dell 6100 servers donated by Dell, Google, and Intel. "This is the technology capability we've been looking for. We've got it, we should run with it," Tarter said.

The search software that's running on the PCs – called SETI on the ATA (SonATA) – has been rewritten, as well. However, it was recoded not only so it could run on the quad-core servers, but also as part of a larger move towards releasing the APIs to the community under an open source license.

SonATA contains 440,000 lines of code written mostly in C, C++, and Java. That's not big by industry standards – Microsoft's Windows contains more than 50 million lines of code, while the Linux kernel 2.6.34 saw more than 400,000 lines of code added just last year. As such, SETI Institute's director of open-innovation Avinash Agrawal, an ex–Sun Microsystems senior director of engineering whom SETI hired in 2009 to drive the tech side of its great opening up, tells us this was "just" a simple porting project.

Licensing, the final frontier

Agrawal's team finished open sourcing SonATA on March 1, releasing it under GPLv3 – you can get the code on GitHub. The internal deadline was actually December 1, but the weeks between December and early 2011 were spent in QA before moving to production. "While, strictly speaking, we did not meet the deadline, we did pretty well," Agrawal told us after wrapping up the port and releasing the code to open source.

Agrawal tells us that the biggest challenge was licensing – going through thousands of lines of code to find what could be released under open source, and making the rest of the code safe from a patent perspective.

Your algorithms wanted

Working with licensing specialist Palamida, Agrawal identified 71 licenses with different restrictions and permissions. His team either found alternatives or wrote around the encumbered code. "It took a long time to go through each license, and either affirm that it was OK to distribute or replace the software with something else," Agrawal said.

The next phase is bringing onboard the outsiders who SETI hopes can bring a fresh perspective and spot missed opportunities by coding new algorithms. Some older algorithms have already been released as source code, but the plan is to release newer algorithms this summer.

SETI wants algorithms to be submitted to the setiQuest Program where they will be reviewed by SETI's engineers and scientists; if they're considered good enough for real-time analysis, SETI will consider combining them with SonATA. "What we look for are better ways of searching, algorithms that allow us to cover more data, or those that are faster," Agrawal said.

Big Ear: the better to hear you with, ET

One target ripe for a fresh look using new algorithms is a stretch of the radio spectrum called the Water-Hole band, from 1,420MHz to 1,720MHz, that SETI has been looking at for years. About 20MHz of this band is blocked by strong interference from signals on or around Earth, generated by satellites and cell phones.

"Instead of filtering it out we basically ignore that band," Agrawal told us. "Jill says: 'Maybe there's something in that we don't know – let's investigate further.' That will require us to have more precise information about radio frequencies and require us to analyze the data much more than what we are able to do today."

SETI has already begun collecting names of people interested in working on what it's calling its first "citizen science" application. You can register here.

The testing ground for new algorithms is Amazon's cloud. ATA data is being uploaded once a week to the online etailer's service after Amazon donated 40TB of S3 storage and 45,000 hours of EC2 processing per month for a five-year period. Also running on Amazon servers is a layer of middleware built by clustered CouchDB database start-up Cloudant that provides a set of sandboxed environments to test SETI's data sets.

When you upload a new algorithm, each of the environments takes that algorithm, runs it on its own data set, and then the results are collated. The goal is to try different algorithms on multiple data sets. "Once we find a good algorithm that does what we want faster or better, we will move the algorithm to our real-time environment," Agrawal says.
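The run-everywhere-then-collate pattern described above can be sketched in a few lines. This is not setiQuest's or Cloudant's actual harness: the `Algorithm` type, the toy `flag_outliers` detector, the environment names, and the sample values are all invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, Dict, List, Sequence

# Hypothetical type: an "algorithm" maps one data set (a list of power
# values) to the indices it flags as candidate signals.
Algorithm = Callable[[Sequence[float]], List[int]]


def flag_outliers(samples: Sequence[float], factor: float = 3.0) -> List[int]:
    """Toy detector: flag samples more than `factor` times the mean power."""
    cutoff = factor * mean(samples)
    return [i for i, power in enumerate(samples) if power > cutoff]


def run_on_all(algorithm: Algorithm,
               data_sets: Dict[str, Sequence[float]]) -> Dict[str, List[int]]:
    """Run one algorithm on every data set and collate results by name."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(algorithm, data)
                   for name, data in data_sets.items()}
        return {name: future.result() for name, future in futures.items()}


# Made-up data sets standing in for the sandboxed environments.
data_sets = {
    "env-a": [1.0, 1.2, 0.9, 9.5, 1.1],
    "env-b": [1.0, 1.0, 1.1, 0.9, 1.0],
}
collated = run_on_all(flag_outliers, data_sets)
print(collated)
```

Keeping each data set's result under its own key makes it easy to compare how one algorithm behaves across environments, or to swap in a second algorithm and compare the two side by side.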

Cloudant's service marries CouchDB with Amazon's Dynamo for consistent hashing, created to make data in Amazon's cloud constantly available by managing its state. The NoSQL shop provides SETI with eight nodes, each knowing where the data in the other nodes sits. Cloudant works with JavaScript and Erlang, and can support Python and Ruby. It was updated to work with Octave, an open-source version of Matlab typically used by scientists to process data and produce images that are computationally very intensive.
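For readers unfamiliar with consistent hashing, the general idea is that nodes and keys are hashed onto the same ring, and each key belongs to the next node point around it. The sketch below is a generic textbook version, not Cloudant's or Dynamo's implementation. The MD5 hash, the 64 virtual points per node, and the node and key names are all assumptions made for illustration; only the count of eight nodes comes from the article.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(key: str) -> int:
    """Map a string to a point on the ring using MD5 (stable across runs)."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Minimal consistent-hash ring with virtual nodes."""

    def __init__(self, nodes: List[str], replicas: int = 64) -> None:
        # Each node is placed on the ring at `replicas` pseudo-random points.
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key: str) -> str:
        """Return the node owning the first ring point at or after hash(key)."""
        index = bisect.bisect_left(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]


# Eight hypothetical nodes, matching the count mentioned in the article.
nodes = [f"node-{n}" for n in range(8)]
ring = HashRing(nodes)
print(ring.node_for("observation-0001"))
```

The appeal of the scheme is that any node can work out where a piece of data lives from the key alone, and removing a node only reassigns the keys that node held.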

SETI's modernization comes after decades of what amounts to "getting by" – making do with computers like the IBM 1130 and leaping from one round of funding to another. It's been a struggle to realize the vision of SETI's scientists due to technological and financial constraints.

Listening is better than watching

MIT professor and theoretical astrophysicist Philip Morrison, along with physicist and CERN director Giuseppe Cocconi, published an influential article in 1959 that discussed using microwave radio to search for communications from space. The first SETI search program, Project Ozma, followed just a year later, in 1960, at the National Radio Astronomy Observatory in Green Bank, West Virginia. It was a single 85-foot antenna that searched 1,420 MHz.

Over the decades, there have been different SETI projects at different radio telescopes such as Big Ear, but funding has been generally limited – or cut – by politicians. Sometimes the ideas were ahead of their time. A NASA study team in 1971 was convened to create the design for an array of up to 1,000 radio telescopes to detect Earth-type radio signals from up to 1,000 light-years away. That project, called Cyclops, was abandoned because it was too expensive.

Cyclops, realized

It was only in 1997, when SETI scientists held a series of workshops looking at future goals and how to achieve them, that it was felt the Cyclops concept could be delivered. The workshops produced SETI 2020: A Roadmap for the Search for Extraterrestrial Intelligence, which includes a review of Project Cyclops by Tarter and the technological changes since its proposal. Among the ideas discussed are large arrays of small radio telescopes called Large Number of Small Dishes, or LNSD in acronym-friendly boffin-speak.

ATA funding was secured in 2001 from Microsoft's Allen, covering the first phase of construction, and tests and initial observations came online in 2007. Currently a network of 42 dishes sitting in the Cascade Mountains north of Lassen Peak and the imposing Mount Shasta in northern California, ATA is a joint project of the SETI Institute and the University of California, Berkeley.

Just after ATA came online and started churning out its first data, Tarter evangelized the opening-up of the SETI program and making ATA data available. She called for "citizen scientists" to become involved on the occasion of her receipt of the TED Prize in 2008. The prize is awarded to what the nonprofit TED organization calls "exceptional individuals" who are granted one wish to change the world. Tarter's wish: to "empower Earthlings everywhere to become active participants in the ultimate search for cosmic company."

Watch the skies: SETI Institute's Jill Tarter looking for the next Wow!

The ATA might be SETI's biggest radio telescope yet, but the physics of funding are still tough. A nonprofit with around 130 employees, SETI continues to rely on the generosity of NASA, organizations such as Intel, Google, and Amazon, and individuals such as Allen. Ironically, SETI now has more work to do with proportionally fewer resources thanks to its expanded mission, just as US politicians have become fanatical about cutting funding for sponsor organizations such as NASA. This is unfortunate, as there remains the small matter of the remaining 308 dishes planned for ATA, which will likely need another Allen-style white knight.

Economics might also explain why SETI has changed from your typical high-walled research outfit running closed-source software into a full-blooded open sourcer surfing the crowd outside.

Opening its code, data, and culture could help SETI overcome its limited headcount and funding by bringing in fresh ideas without necessarily owning them. Putting data on the cloud, meanwhile, means that SETI can avoid the path followed by many a research organization of building or buying its own supercomputer to crunch vast amounts of data.

SETI's conversion has also been assisted by the presence of some open thinkers and supporters.

Sitting on SETI's board of trustees is Greg Papadopoulos, the former chief technology officer of Sun Microsystems, now owned by Oracle. Hardware giant Sun enjoyed a years-long romance with open source code and culture in a doomed attempt to refloat its beached server business. The SETI board advised Tarter to change the culture and embrace the outside. "The whole world is moving to openness," Agrawal notes, "so we should move to [being] open."

Sun's former chief open source officer and current Open Source Initiative board member Simon Phipps, meanwhile, has advised SETI. Phipps tells us he helped SETI on licensing, community dynamics, and project structure. Phipps became involved at the request of then–OSI board member Danese Cooper. Cooper, now Wikimedia Foundation CTO, had helped launch setiQuest.org last year, and promoted the release of the first APIs at the 2010 O'Reilly Open Source Conference (OSCON) in Portland, Oregon.

In the background is tech-book publisher, open source conference organizer, and coiner of memes Tim O'Reilly. O'Reilly has taken an active interest in drumming up support among his open source peers, reading between the lines of Cooper's blog. Wikimedia's CTO says she was approached by O'Reilly to help on SETI. Tarter, meanwhile, was given a speaking platform at last year's OSCON.

Hopefully for SETI, embracing open source code and community will pay off better than it did for Sun. Tarter is certainly optimistic as SETI moves to the next phase of its project by releasing code and algorithms later this year.

Into the unknown

"Perhaps some folks out there might find efficient algorithms that we've developed for finding linear patterns in two-dimensional [frequency and time] plots," Tarter says, "... other people might devise tutorials to help us understand this. It's TBD, because we just don't have this culture. This is new to us and I'm sure we are going to stub our toes a whole lot of times, but I'm hoping people are passionate about SETI enough that they'll be forgiving."
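To make "linear patterns in two-dimensional plots" concrete: a narrow-band signal whose frequency drifts steadily shows up as a straight line when power is plotted against frequency and time. The sketch below is a deliberately naive brute-force search for such a line. It is not SonATA's algorithm, and the `Waterfall` type, the `best_line` function, and the sample data are all invented for illustration.

```python
from typing import List, Tuple

# Rows are time steps, columns are frequency bins, values are power.
Waterfall = List[List[float]]


def best_line(waterfall: Waterfall, max_drift: int) -> Tuple[float, int, int]:
    """Brute-force search for the straight line with the most summed power.

    Returns (score, start_bin, drift), where drift is in bins per time step.
    """
    n_bins = len(waterfall[0])
    best = (float("-inf"), 0, 0)
    for drift in range(-max_drift, max_drift + 1):
        for start in range(n_bins):
            score = 0.0
            for t, row in enumerate(waterfall):
                bin_index = start + drift * t
                if not 0 <= bin_index < n_bins:
                    break  # line ran off the plot; keep the partial sum
                score += row[bin_index]
            if score > best[0]:
                best = (score, start, drift)
    return best


# Made-up plot: flat noise of 1.0 with a signal of 5.0 that starts in
# bin 2 and drifts up one bin per time step.
n_steps, n_bins = 6, 12
waterfall = [[1.0] * n_bins for _ in range(n_steps)]
for t in range(n_steps):
    waterfall[t][2 + t] = 5.0

print(best_line(waterfall, max_drift=2))
```

On this toy input the search should recover a start bin of 2 and a drift of one bin per step. Its cost grows with bins × drifts × time steps, which is exactly why more efficient approaches are worth hunting for at ATA scale.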

So what happens if some SonATA crowd-sourced algorithm finds a new Wow!? First, Tarter reckons she will crack open the bottle of champagne that the team keeps on ice. Next, they'll go back to work trying to validate the signal against known interference by drawing further on open sourced APIs that have been crowd-sourced and battle-tested in the cloud.

"Finding the signal is only the first piece," Tarter says. "That doesn't say whether that signal is our technology or theirs – and that's a very, very important piece. We are looking at ways for the public to get involved in that." ®