Original URL: https://www.theregister.com/2011/04/05/intel_xeon_e7_launch/

Intel charges premium for Xeon E7 scalability

Socket to me, Westmere

Posted in Channel, 5th April 2011 19:29 GMT

Intel has refreshed its high-end x64-based server processors with the launch of the "Westmere-EX" chips, now known to the outside world as the Xeon E7s, and it wants some more money for them than you might have been budgeting.

In an indicator of the confidence that Intel has in its dominance of the x64 server racket, the company is not just charging a premium for higher core counts and faster clock speeds, which is to be expected. It is now also charging a premium for a chip's ability to scale across two, four, or eight processor sockets in a single system image.

Specifically, there are three different families of Xeon E7s. The E7-2800 series can be used in machines with two sockets, while the E7-4800s can be used in four-socket machines and the E7-8800s are used in eight-socket boxes. With the prior "Nehalem-EX" Xeon 7500 processors launched in March 2010, server makers could use the same chips in a machine with two, four, or eight sockets as they saw fit. There were eight different Xeon 7500 processors that had 4, 6, or 8 cores and that consumed 95, 105, or 130 watts of juice at various clock speeds and L3 cache sizes.

To appease supercomputer customers who wanted cheap two-socket nodes that had more memory scalability than is available in the Xeon 5600s, Intel crimped the Nehalem-EX line to create three different Xeon 6500 chips. Intel also had two chips - the Xeon E7530 and E7520 - that could not be used in eight-socket boxes.

This idea of bifurcating the high-end Xeon lineup is no longer an experiment for a niche market, but a formal strategy for the wider commercial space. Well, it is actually trifurcation, if you want to be technical.

The premium for socket scalability is not huge, but will presumably bring Intel extra money as its server partners use Xeon E7 machines to try to dislodge proprietary mainframe and midrange systems and RISC/Itanium boxes running Unix and OpenVMS. And, that extra dough will help make up for aggressive pricing that Intel has set for many of the Xeon E7 chips compared to their Xeon 7500 predecessors.

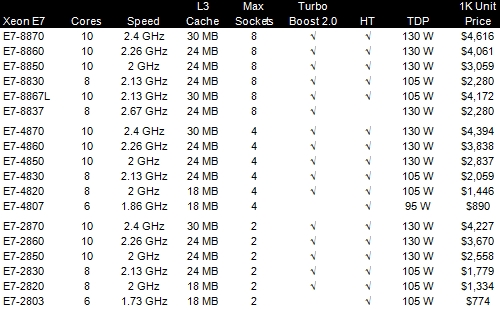

Take a look at the Xeon E7 lineup:

As you can see, the Xeon E7s come with six, eight, or ten cores, depending on the make and model, with varying amounts of L3 cache per chip. If you have a two-socket box and you want a ten-core chip running at the new top-end speed of 2.4GHz, then the E7-2870 will cost $4,227 a pop if you buy in 1,000-unit trays from Intel. The same exact chip tweaked to scale to four sockets, the Xeon E7-4870, costs $4,394, a 4 per cent premium just to scale. And the E7-8870 costs $4,616, a 9.2 per cent premium over the base two-socket model to allow it to scale to eight sockets. With the prior Xeon 7500 lineup, the top-end part, the X7560, had eight cores, ran at 2.26GHz, and cost $3,692; it could be used in machines with two, four, or eight sockets.
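For those who want to check the sums, here is a minimal Python sketch of the premium arithmetic, using only the 1,000-unit tray prices quoted above for the top-bin ten-core part. The names `TOP_BIN_PRICE` and `premium_pct` are made up for illustration and are not anything Intel publishes.

```python
# 1,000-unit tray prices (US dollars) for the top-bin 2.4GHz ten-core chip,
# keyed by the maximum socket count the part supports. Figures are the
# ones quoted in the article; the names here are illustrative only.
TOP_BIN_PRICE = {2: 4227, 4: 4394, 8: 4616}


def premium_pct(price, base):
    """Return the percentage premium of `price` over `base`."""
    return (price - base) / base * 100


base = TOP_BIN_PRICE[2]
for sockets in (4, 8):
    pct = premium_pct(TOP_BIN_PRICE[sockets], base)
    print(f"{sockets}-socket part: {pct:.1f} per cent over the two-socket part")
```

Run against those prices, the premiums work out to roughly 4 and 9.2 per cent, matching the figures above.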

In some cases, the most equivalent Xeon E7 chip for a low number of sockets is less expensive than its Xeon 7500 equivalent from last year. In other cases, you pay a premium. There is no simple pattern, so shop carefully. Intel will continue to sell both processors, and they are socket compatible as well.

10 cores, 20 threads, 40 per cent more oomph

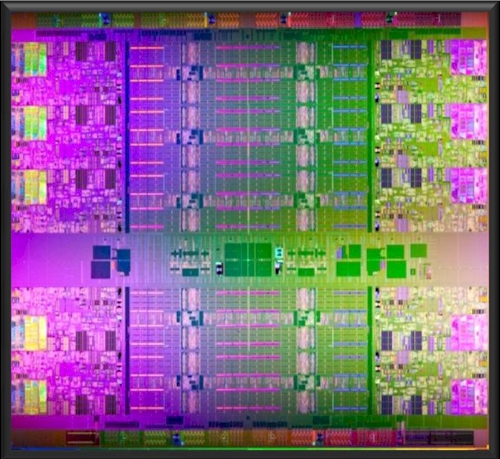

Like other Xeon chips coming out this year, the Xeon E7s are fabricated using Intel's 32 nanometer processes, a shrink from the 45 nanometer processes used with the Xeon 7500s last year. That shrink is what allows Intel to cram two more cores on the chip, but as El Reg speculated based on details Intel released at the ISSCC chip conference back in February, it looks like the Xeon E7s were designed to sport twelve cores and two of them were cut off the bottom to fit on the die. Check it out:

The Xeon E7 was designed at Intel's Bangalore, India chip lab. The Xeon E7 has 2.6 billion transistors and is 513 square millimeters in size. The chip uses a ring bus to connect cores and their L3 caches together so they can share work and data. This ring approach will be used across Xeon and Itanium processors, including the future "Sandy Bridge" Xeon E5s coming out later this year for two-socket and four-socket servers and the "Poulson" Itaniums due maybe next spring or so.

Go Westmere, young man

The Westmere cores on the Xeon E7 chips include some features not found in their Nehalem predecessors and that were originally shipped with the "Westmere-EP" Xeon 5600 processors for two-socket servers a year ago. These new features include on-chip electronics for running the Advanced Encryption Standard (AES) algorithm for encrypting and decrypting data and Trusted Execution Technology (TXT), just to name two.

The on-chip AES-NI encryption/decryption instructions radically improve the performance of the AES algorithm. Software giant Oracle says it is seeing an order of magnitude performance increase on encryption within its 11g R2 database using this feature. The TXT feature is borrowed from Intel's vPro-capable business-class PCs and is being added to Intel's server processors to harden server virtualization hypervisors against malicious software insertions prior to the hypervisor booting up.

On a conference call announcing the processors, Kirk Skaugen, general manager of Intel's Data Center Group, said that some more machine check architecture (MCA) functions were being added to the Xeon E7s to make them suitable for mission critical workloads. Skaugen called out double device data correction as one such new RAS feature. This DDDC feature, which allows a system to recover from two memory errors without crashing, was slated for the original "Tukwila" Itaniums back in 2008. Tukwila, now called the Itanium 9300, was launched in February 2010.

All but one of the Xeon E7 processors support Intel's HyperThreading, which presents two execution threads to software for each physical core in the processor. In some cases, HyperThreading can improve the performance of workloads, and in other cases it actually hinders performance. But in the x64 core wars, Intel is committed to using a mix of ever-increasing core counts with HyperThreading, rather than just naked cores. Advanced Micro Devices has been allergic to any kind of simultaneous multithreading in its Opteron server chips, and it seems less inclined than ever with its future "Bulldozer" Opterons, due to start shipping for revenue in the second quarter. The lone Xeon E7 without HyperThreading is the E7-8837, the smallest of the eight-socket capable processors.
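As a rough illustration of what two threads per core means for the operating system's view of a box, the sketch below multiplies it out for machines stuffed with ten-core parts. The function name `logical_threads` is hypothetical, and the calculation assumes every socket is populated with the same chip.

```python
def logical_threads(sockets, cores_per_chip, hyperthreading=True):
    """Logical threads the OS sees, assuming identical chips in every socket."""
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_chip * threads_per_core


# Ten-core parts with HyperThreading enabled, across the three socket counts.
for sockets in (2, 4, 8):
    print(f"{sockets} sockets x 10 cores: {logical_threads(sockets, 10)} threads")
```

On that basis a fully loaded eight-socket machine presents 160 threads to software, which is worth bearing in mind for anything licensed or scheduled per thread.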

All but two of the Xeon E7 chips support Intel's Turbo Boost 2.0 technology, which allows for the clock speed of a core to be goosed if the other cores in the chip are not working hard. The exact Turbo Boost bump was not available at press time, but we will track this data down.

Another important feature of the Xeon E7s is not on the chip, but next to it. There are two new "Millbrook" memory buffer chips that are used in conjunction with the Xeon E7 processors. The new 7510 and 7512 buffer chips allow for a four-socket machine to scale up to 2TB of main memory using 32GB DIMMs, and the 7512 chip supports 1.35 volt memory. With a four-socket machine sporting 64 memory slots, and the low-voltage memory consuming about 1 watt less per stick compared to 1.5 volt memory, it can really start adding up. The "Boxboro" 7500 chipset, which is shared between the Xeon 7500, Xeon E7, and Itanium 9300 processors, can scale gluelessly (meaning without having to slap on an additional supercontroller chip) to eight sockets, 128 memory slots, and 4TB of main memory. The new Millbrook controllers also allow a two-socket E7-2800 machine to have 32 memory slots and support up to 1TB of main memory without resorting to memory extension ASICs like those developed by IBM, Cisco Systems, and Dell for last year's Xeon 7500-based servers.
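The memory figures above follow from slot counts and DIMM sizes, and a short Python sketch makes the sums explicit. It assumes every slot holds a 32GB DIMM and takes the roughly 1 watt per stick saving for 1.35 volt memory at face value; `SLOTS`, `max_memory_tb`, and `low_voltage_saving_watts` are illustrative names, not part of any Intel tool.

```python
DIMM_GB = 32  # largest DIMM size cited in the article

# Memory slots by socket count, as quoted for E7 machines on the 7500 chipset.
SLOTS = {2: 32, 4: 64, 8: 128}


def max_memory_tb(slots, dimm_gb=DIMM_GB):
    """Peak main memory in TB, assuming every slot holds a `dimm_gb` DIMM."""
    return slots * dimm_gb / 1024


def low_voltage_saving_watts(slots, watts_per_dimm=1.0):
    """Approximate power saved by 1.35V DIMMs (about 1 watt per stick)."""
    return slots * watts_per_dimm


for sockets, slots in SLOTS.items():
    print(f"{sockets} sockets: {slots} slots, "
          f"{max_memory_tb(slots):.0f}TB max, "
          f"about {low_voltage_saving_watts(slots):.0f}W saved with 1.35V memory")
```

For the 64-slot four-socket machine that is 2TB of memory and something like 64 watts saved per box, before counting any knock-on savings in cooling.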

Generally speaking, the Xeon E7s offer roughly 40 per cent more performance than their Xeon 7500 predecessors. The shrink to 32 nanometers has allowed Intel to add two more cores, crank the clock speeds by a bit, and also boost the L3 cache on chip from 24MB to 30MB. All of these factors contribute to the performance increase. Obviously, mileage will vary by workload.

Skaugen says that the Xeon E7 chips have been shipping for around 100 days and that 19 vendors will have 35 systems out the door within the next 30 to 45 days. We'll be looking at as many of these as we can to tell you all about them. ®