Original URL: https://www.theregister.com/2010/11/24/numascale_shared_memory_interconnect/

Numascale brings big iron SMP to the masses

The NUMA NUMA song

Posted in Channel, 24th November 2010 05:00 GMT

SC10 If you want big server iron but have a midrange server budget, Numascale has an adapter card that it wants to sell to you. The NumaConnect SMP card turns a cluster of Opteron servers into a shared memory system, and in the not-too-distant future it will probably do the same for Xeon-based machines.

The clustering of cheap server nodes to make fatter shared memory systems is one of those recurring themes in the systems racket. IBM's Power Systems and high-end System x cards used Sequent-derived chipsets to turn up to four physical servers into one shared memory machine. All of the new four-socket and eight-socket boxes based on Xeon 7500 processors from Intel do the same trick, which uses non-uniform memory access (NUMA) clustering to give multiple server cards in a single system a shared memory space.

There are many other designs that use NUMA or NUMA-like technologies to lash cheap nodes together to make a single address space for applications to frolic in. The problem is that big iron with a shared memory space is expensive while distributed clusters are cheap, even if applications have to be parallelized to run on the latter machines. Ideally, you would be able to take cheaper clusters and make them look like expensive shared memory systems without actually doing much work.

This is an idea that researchers at the University of Oslo and a spinoff, Dolphin Interconnect Solutions, have been chasing for two decades. The university researchers in Norway did a lot of the work to help forge a standard called the Scalable Coherent Interface, or SCI, which was supposed to be a high-speed, point-to-point interconnect for all components in a system.

Data General and Sun Microsystems used Dolphin SCI chips in some of their systems back in the day, and 3Leaf Systems had a similar ASIC and interconnect, but according to rumors going around SC10 last week, 3Leaf quietly went out of business earlier this year. (No one is answering the phones at 3Leaf Systems, so we can't confirm this.) Dolphin still sells SCI-based embedded systems for military and industrial applications and is hoping to take the technology to a broader HPC and enterprise market.

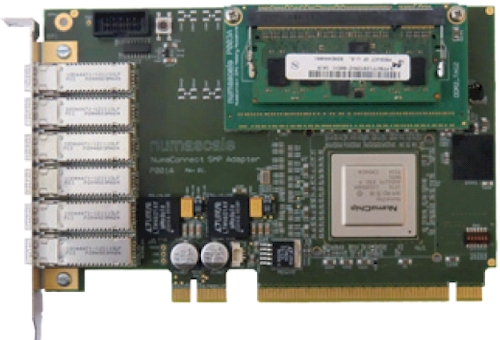

But to go after the modern commercial server racket, Dolphin spun out a new company called Numascale in 2008 and has put the finishing touches on a single-chip implementation of its cache-coherent NUMA technology. With the NumaConnect SMP adapter card, which plugs into the HTX expansion slot of Opteron-based machines, a collection of Opteron-based servers can be converted into a NUMA cluster. According to Einar Rustad, co-founder of Numascale and vice president of business development at the company, the SCI interconnect inside the NumaConnect SMP adapter runs at 20 Gb/sec, which is half the rate of QDR InfiniBand and twice that of 10 Gigabit Ethernet, obviously.

Raw bandwidth is not what matters most when it comes to NUMA clustering, though. The latency of hopping from node to node in a shared-memory system using the NumaConnect SMP cards is somewhere between 1 and 1.5 microseconds, which is low enough that, with proper caching, a cluster of server nodes can be made to look like one giant SMP box, such as a high-end mainframe, a RISC/Unix box, or an x64 box using a Xeon 7500 and Intel's "Boxboro" 7500 chipset. The point is that Numascale lets you build that big bad box out of cheaper server nodes. And because the electronics behind the Dolphin technology have been shrunk down to a single chip, it is a lot cheaper to make and therefore to sell.
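A rough way to see why 1 to 1.5 microseconds is workable is the textbook average-memory-access-time calculation: the slow remote hops are diluted by however many accesses are satisfied locally, including by the card's cache. Only the remote latency range comes from Numascale; the 100 ns local latency and the hit rates in this sketch are illustrative assumptions, not measured figures.

```python
# Average memory access time (AMAT) for a NUMA cluster, as a rough sketch.
# ASSUMPTION: the 0.1 microsecond local latency and the hit rates are
# illustrative values, not Numascale measurements. The 1.0 to 1.5 microsecond
# remote latency is the range Numascale quotes for a node-to-node hop.

LOCAL_LATENCY_US = 0.1  # hypothetical local DRAM latency (100 ns)


def amat_us(local_fraction: float, remote_latency_us: float,
            local_latency_us: float = LOCAL_LATENCY_US) -> float:
    """Weighted average latency when `local_fraction` of accesses stay local."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    remote_fraction = 1.0 - local_fraction
    return local_fraction * local_latency_us + remote_fraction * remote_latency_us


if __name__ == "__main__":
    for remote in (1.0, 1.5):
        for local in (0.90, 0.99):
            print(f"remote={remote} us, local hits={local:.0%}: "
                  f"AMAT={amat_us(local, remote):.3f} us")
```

Under those assumed numbers, serving 99 percent of accesses locally keeps the average at about 0.11 microseconds even at the slow end of the range, while 90 percent pushes it to about 0.24 microseconds, which is why the caching on the card matters so much.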

The NumaChip implements NUMA clustering using a directory-based cache coherence protocol with a write-back cache and a tag memory cache. The write-back cache keeps data pulled from other server nodes close at hand as it is used, so the next time a node asks for it, the request doesn't have to go any further than the NumaConnect card. The tag memory is what is used to create the single, global address space that all of the other server nodes see when they are linked to each other. You have to match the tag memory on the card to the capacity of the main memory on the Opteron server node.
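To make the division of labour concrete, here is a toy model of the two pieces described above: a per-node write-back cache that serves repeat requests without leaving the node, and a directory (the job the tag memory does) that records which nodes hold copies of each line. It is a generic, simplified MSI-style directory protocol written for illustration; the class and method names are hypothetical, and it is not Numascale's actual protocol, which is not detailed here.

```python
# Toy directory-based cache coherence, loosely following the description:
# each node keeps a write-back cache of lines it has fetched, and a
# directory (the job the tag memory does) records which nodes hold copies.
# ASSUMPTION: every name here is hypothetical; this is a generic MSI-style
# sketch for illustration, not Numascale's actual protocol.
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class DirEntry:
    sharers: Set[int] = field(default_factory=set)  # nodes with clean copies
    owner: Optional[int] = None                     # node with a dirty copy


class ToyNumaSystem:
    def __init__(self, num_nodes: int):
        # Home memory, one value per cache line address.
        self.memory: Dict[int, int] = {}
        # A real system keeps each entry on the line's home node; one dict here.
        self.directory: Dict[int, DirEntry] = {}
        # Per-node write-back cache: address -> (value, dirty flag).
        self.caches = [dict() for _ in range(num_nodes)]
        self.misses = 0  # read misses that had to consult the directory

    def _entry(self, addr: int) -> DirEntry:
        return self.directory.setdefault(addr, DirEntry())

    def read(self, node: int, addr: int) -> int:
        cache = self.caches[node]
        if addr in cache:  # hit: served without leaving the node
            return cache[addr][0]
        self.misses += 1
        entry = self._entry(addr)
        if entry.owner is not None:
            # Another node holds a dirty copy: write it back and downgrade it.
            owner = entry.owner
            value, _ = self.caches[owner][addr]
            self.memory[addr] = value
            self.caches[owner][addr] = (value, False)
            entry.sharers.add(owner)
            entry.owner = None
        value = self.memory.get(addr, 0)
        entry.sharers.add(node)
        cache[addr] = (value, False)
        return value

    def write(self, node: int, addr: int, value: int) -> None:
        entry = self._entry(addr)
        holders = set(entry.sharers)
        if entry.owner is not None:
            holders.add(entry.owner)
        # Invalidate every other copy so no node can read stale data.
        for other in holders - {node}:
            self.caches[other].pop(addr, None)
        entry.sharers = set()
        entry.owner = node
        # Dirty in the writer's cache; home memory is updated only on write-back.
        self.caches[node][addr] = (value, True)


if __name__ == "__main__":
    system = ToyNumaSystem(num_nodes=4)
    system.write(1, 0x100, 42)                   # node 1 now holds a dirty copy
    print(system.read(2, 0x100), system.misses)  # miss, forces write-back: 42 1
    print(system.read(2, 0x100), system.misses)  # hit in node 2's cache: 42 1
```

The write-back choice is what keeps fabric traffic down in this model: a write only touches the writer's cache, and home memory is brought up to date only when another node actually asks for the line.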

Like a Dolphin

So, the entry card has 2 GB of write-back cache and 1 GB of tag memory, which allows for server nodes in the cluster with 16 GB of main memory each; the midrange card has 4 GB of write-back cache and 2 GB of tag memory to support 48 GB of main memory per node; and the high-end card has 4 GB of write-back cache and 4 GB of tag memory to support 64 GB of main memory per node.
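Choosing a card therefore boils down to a lookup: pick the smallest tier whose tag memory covers a node's main memory. This sketch encodes the capacities quoted above; the tier names and the helper function are hypothetical, and prices are left out because the article quotes them only for the entry and high-end cards.

```python
# Card tiers as quoted: (name, write-back cache GB, tag memory GB,
# maximum main memory per node GB).
# ASSUMPTION: the tier names and this helper are illustrative only.
CARD_TIERS = [
    ("entry", 2, 1, 16),
    ("midrange", 4, 2, 48),
    ("high-end", 4, 4, 64),
]


def pick_card(node_memory_gb: int) -> str:
    """Return the smallest tier whose tag memory covers the node's memory."""
    for name, _cache_gb, _tag_gb, max_node_gb in CARD_TIERS:
        if node_memory_gb <= max_node_gb:
            return name
    raise ValueError(f"{node_memory_gb} GB exceeds the 64 GB per-node limit")


if __name__ == "__main__":
    for mem in (8, 16, 32, 64):
        print(mem, "GB ->", pick_card(mem))
```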

The NumaConnect SMP card: NUMA for the masses

The NumaConnect SMP adapter card that is based on the single-chip implementation of the Dolphin SCI technology implements a 48-bit physical address, which means it can see as much as 256 TB of memory as a single space, which works out to 4,096 server nodes with 64 GB each. Rustad says that the servers can be linked in a number of different configurations using the six NUMA ports on the card, including a giant ring or a 2D or 3D torus interconnect popularly used in parallel supercomputer clusters.
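The address-space arithmetic is easy to check, and the six ports line up neatly with a 3D torus, where each node has one link in each direction along three axes. The sketch below verifies the 256 TB figure (in binary units) and computes a node's six torus neighbours; the 16 x 16 x 16 grid and the function name are hypothetical, since the article does not say how nodes are numbered or cabled.

```python
# Check the 48-bit address space figures and sketch 3D torus wiring.
# ASSUMPTION: node coordinates and the 16 x 16 x 16 grid are hypothetical.

ADDRESS_BITS = 48
total_bytes = 2 ** ADDRESS_BITS
TIB = 2 ** 40
GIB = 2 ** 30

assert total_bytes == 256 * TIB           # "256 TB" in binary units
assert total_bytes // (64 * GIB) == 4096  # 4,096 nodes at 64 GB each


def torus_neighbours(x, y, z, dims):
    """Return the six neighbours of node (x, y, z) in a 3D torus.

    Each axis wraps around, so every node has two links per axis,
    one per NUMA port: six ports in total.
    """
    nx, ny, nz = dims
    return [
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),
    ]


if __name__ == "__main__":
    dims = (16, 16, 16)  # 16**3 = 4,096 nodes, the most the address space covers at 64 GB each
    print(torus_neighbours(0, 0, 0, dims))
```

A 2D torus would use four of the six ports and a ring only two, which is why the same card can be cabled into any of the three layouts.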

The latter two are interesting in that the NumaConnect cards can create a shared memory cluster for workloads that need a big memory space, but can also run applications using the Open MPI implementation of the Message Passing Interface (MPI) distributed clustering protocol. Obviously, the SCI implementation does not have the same bandwidth as QDR InfiniBand, but the Numascale technology allows you to use a cheap cluster with shared or distributed memory, whatever the application of the moment needs.

The software-based vSMP Foundation clustering technology from ScaleMP, which was just recently upgraded to version 3.5, works in much the same manner but uses no special chips (it is implemented in low-level systems software and uses an InfiniBand interconnect and the RDMA protocol as a transport). The big difference is that Numascale's hardware-based solution is designed to support Windows, Linux, and Solaris, while ScaleMP only supports Linux. Conversely, ScaleMP runs on any x64-based server you have, while the Numascale adapter is restricted to a fairly tight selection of Opteron-based machines that have HyperTransport HTX expansion slots.

For the moment, at least. Rustad says that there is no reason why the NumaChip could not be implemented to fit into an Opteron or Xeon socket, with a connector coming out of the chip in the socket to link it to the six NUMA ports on the card. By the way, even if you use an HTX-based machine, plugging in the NumaChip eats a socket's worth of memory space in the box, so you are going to lose a processor socket no matter what you do. Obviously, for certain workloads, building a cluster based on two-socket Opteron machines is not going to offer the best memory expansion, and it may even offer worse bang for the buck per node because the nodes are CPU challenged.

The entry NumaConnect adapter card costs $1,750, while the high-end card costs $2,500. Rustad says that Numascale has 100 cards in stock now and will get another batch in February. At the moment, the company has tested the chips using a twelve-node cluster running Debian Linux and has not yet tested Windows or Solaris. The NUMA links between nodes are based on DensiShield I/O cables from FCI, which put eight pairs of wires (four bi-directional links) into a square connector. FCI makes cables for just about any protocol you can think of.
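Putting those prices next to the per-node memory limits gives a rough cost of the NUMA adapter per gigabyte of node memory it can cover. This is simple arithmetic on the article's figures; it ignores the servers and cables, and leaves out the midrange card because no price is given for it. The helper names are hypothetical.

```python
# Adapter cost per GB of per-node main memory, using the article's figures.
# ASSUMPTION: one adapter per node; the midrange card is omitted because
# its price is not given, and the helper names are illustrative only.
CARDS = {
    "entry": {"price_usd": 1750, "max_node_gb": 16},
    "high-end": {"price_usd": 2500, "max_node_gb": 64},
}


def cost_per_gb(card: str) -> float:
    """Adapter price divided by the most node memory it supports."""
    c = CARDS[card]
    return c["price_usd"] / c["max_node_gb"]


def cluster_adapter_cost(card: str, nodes: int) -> int:
    """Total adapter spend for a cluster; servers and cables not included."""
    return CARDS[card]["price_usd"] * nodes


if __name__ == "__main__":
    for name in CARDS:
        print(f"{name}: ${cost_per_gb(name):.2f} per GB")
    print("twelve-node high-end cluster:", cluster_adapter_cost("high-end", 12))
```

On these numbers the high-end card works out to roughly $39 per GB of node memory against about $109 for the entry card, so the pricier card is the cheaper way to cover big-memory nodes.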

IBM's Microelectronics Division is fabbing the NumaChip ASICs for Numascale. ®