Original URL: https://www.theregister.com/2010/11/15/sgi_mojo_cpu_gpu_hybrid/

SGI gets its HPC mojo back with CPU-GPU hybrids

Sticking it to racks and blades

Posted in Channel, 15th November 2010 14:21 GMT

SC10 If Silicon Graphics was still a standalone company and had not been eaten by hyperscale server maker Rackable Systems, there is a fairly good chance that the innovative "Project Mojo" hybrid CPU-GPU clusters, which pack a petaflops into a single cabinet, would not have seen the light of day.

And because two innovative server companies that come at HPC from different angles merged, the Prism XL ceepie-geepies announced today at the SC10 supercomputing conference in New Orleans are coming to market precisely timed to the mainstreaming of GPUs in the HPC market.

SGI has been eagerly selling its "UltraViolet" Altix UV 1000 shared memory supercomputers, based on Intel's Xeon 7500 processors and SGI's home-grown NUMAlink 5 high-speed interconnect. And when it reported its financial results for the third quarter, it was bragging that it had sold a cumulative 63 Altix UV machines to date. While the company has not provided any sales projections for the Prism XL machines, it is safe to bet that the uptake for these hybrid, dense-pack machines will be at least as good if not better than for the Altix UV 1000s. It's horses for courses.

The high-end of the current Altix UV 1000 line, which previewed at the SC09 show last year, scales to 256 sockets, 2,048 cores, and 16 TB in a single global memory space with 18.56 teraflops behind it. This machine is heavy on the shared memory and light on the floating point operations in its four racks, and because shared memory is technically difficult to do, the Altix UV 1000s will command a premium.

The Prism XL machines - the XL is not short for "extra large," but rather "accelerator" - are not quite as dense as we were led to believe when SGI announced Project Mojo back in June, promising to pack a petaflops into a rack by the end of this year. Yes, that sounds impossible, and yes, it is.

As El Reg anticipated, SGI was talking about single-precision floating point performance when it was talking about petaflops, and it was also talking about a custom cabinet that spans multiple rack enclosures. That single-precision petaflops is actually packed into two of SGI's new Destination 42U racks, with a third rack in the middle just for InfiniBand (for node interconnect) and Gigabit Ethernet (for node management) networking switches. You get to petaflops with a total of 504 of Advanced Micro Devices' FireStream 9350 single-wide fanless GPU co-processors, which were announced at the end of June.

The FireStream 9350s have killer single-precision number crunching at 2 teraflops per GPU, but only come in at 400 gigaflops at double precision. If you do the math, you don't need a supercomputer to see that this works out to only 201.6 teraflops DP across those 504 GPUs in the Prism XL cabinet.
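For anyone who wants to check the sums, here is a back-of-the-envelope sketch in Python. The GPU count and per-GPU peak ratings are the ones quoted above; the function and variable names are ours, made up purely for illustration.

```python
# Peak ratings for the FireStream 9350 as quoted in the article, per GPU.
GPUS_PER_CABINET = 504
SP_GFLOPS_PER_GPU = 2000  # 2 teraflops single precision
DP_GFLOPS_PER_GPU = 400   # 400 gigaflops double precision


def cabinet_peak_tflops(gpus: int, gflops_per_gpu: float) -> float:
    """Aggregate peak for a cabinet in teraflops (hypothetical helper)."""
    return gpus * gflops_per_gpu / 1000


sp_tflops = cabinet_peak_tflops(GPUS_PER_CABINET, SP_GFLOPS_PER_GPU)
dp_tflops = cabinet_peak_tflops(GPUS_PER_CABINET, DP_GFLOPS_PER_GPU)

print(f"Single precision: {sp_tflops:.1f} teraflops")
print(f"Double precision: {dp_tflops:.1f} teraflops")
print(f"DP is {dp_tflops / sp_tflops:.0%} of the SP headline figure")
```

These are peak ratings only; sustained performance on real codes would be lower, and nothing here accounts for the Opteron head nodes.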

Picking at it like that takes nothing away from the impressive density and packaging that the Prism XL machines offer compared to alternatives. SGI was intentionally vague to build excitement (as if all petaflops were created equal). This is a dubious strategy perhaps but certainly not a rare one in the IT racket.

Stick it to 'em

As El Reg explained in its exclusive in September about the Project Mojo designs, the Prism XL is employing a CPU-GPU packaging technique that SGI calls sticks.

Rather than starting with an idea for an enclosure - say a 2U rack or cookie sheet server - and then trying to see how many GPUs can be crammed into an x64 server before it catches fire, SGI's engineers working on the Prism XL machines started with the fanless GPU co-processors from AMD and Nvidia, the highly cored integer processors from Tilera (widely believed to be a funky MIPS variant), and the PCI-Express bus that they link into, and then architected a server to wrap around these co-processors. And so SGI created a deep and skinny server chassis that puts the GPUs in the back, a modest x64 server in the middle with its memory and disks, and networking, PCI-Express slots, and power supplies in the front for easy access.

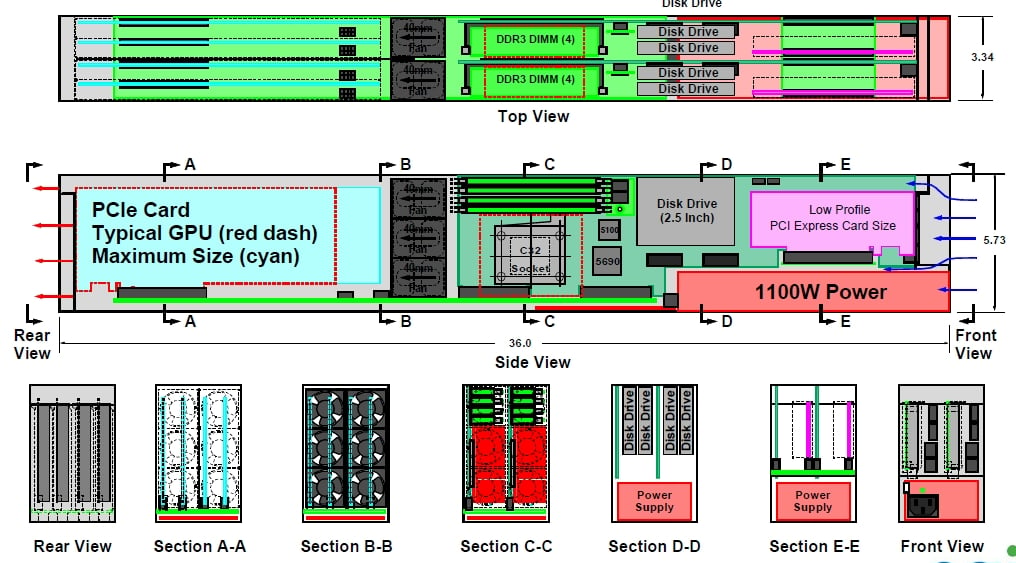

This 5.75 inch wide by 3.34 inch high by 37 inch deep chassis is what is called a stick. Here's the schematic drawing of what a stick looks like from the side and below:

You tip this unit on its side after wrapping it in metal, so the power supply is on the left side and the mobo and GPUs are lying horizontally.

According to Bill Mannel, vice president of product marketing at SGI, the server maker is using a reference motherboard for blade servers based on the Opteron 4100 processor from AMD for the x64 compute portion of the Project Mojo stick. This blade server uses AMD's 5690/5100 chipset and has four DDR3 memory slots running at 1.33 GHz; it has two Gigabit Ethernet ports and one Fast 10/100 Mbit port for management. The mobo also has an HTX slot that will eventually allow two adjacent boards in a stick to be configured as a two-socket, eight memory slot SMP server.

Each motherboard has room for two 2.5-inch disk drives and uses a PCI-Express extender card to pull two 8-pin power links off a single 1,100 watt power supply that is in the stick to feed one or two GPUs located in the back of the stick. The mobo has a single low-profile PCI-Express 2.0 port and also has room for two 1.8-inch disks up front near this PCI slot. Each motherboard assembly can be linked to a single double-wide GPU or Tilera co-processor or two single-wide units. A stick can have two of these C32-socket motherboard modules, side-by-side, and each blade inside the stick has six muffin fans (three high and two deep) to help move air over the GPUs.

Here's what a stick looks like populated with two double-wide M2070 GPUs from Nvidia. Again, it is tipped on its side:

SGI is being as open as it can be with the Mojo sticks, just like Rackable has always been when it comes to x64 processors used in its server platforms (and the original SGI was not until it learned the hard way that you cannot just support one chip in this world). In the initial Prism XL configurations, the x64 portion of the blade will be the Opteron 4100 motherboard, which is the cheapest one on the market that has the right features, although if customers make a fuss SGI could find a low-voltage Xeon alternative.

Nvidia's fanless single-wide M2050 and double-wide M2070 GPU co-processors are supported in the sticks (El Reg told you all about these devices here), as is the double-wide 9370 GPU co-processor from AMD. The M2050 and M2070 are rated at 515 gigaflops double precision and 1.03 teraflops single precision in terms of raw math power. The AMD 9370 has 528 gigaflops DP and 2 teraflops SP. Mannel says that in January SGI will support the single-wide AMD 9350, which only has 400 gigaflops DP, but you can put four in a stick, which works out to 1.6 teraflops DP per stick.
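To compare the options on a per-stick basis, here is a rough Python sketch. It assumes the card widths as the article describes them, two double-wide or four single-wide cards per stick, and a double-precision rating of 515 gigaflops for the Nvidia parts; the names in the code are our own.

```python
# (card, cards per stick, peak DP gigaflops per card), per the article.
# A stick holds two motherboards, each feeding one double-wide
# or two single-wide cards.
ACCELERATORS = [
    ("Nvidia M2050 (single-wide)", 4, 515),
    ("Nvidia M2070 (double-wide)", 2, 515),
    ("AMD 9370 (double-wide)", 2, 528),
    ("AMD 9350 (single-wide)", 4, 400),
]


def stick_dp_tflops(cards_per_stick: int, dp_gflops: float) -> float:
    """Peak double-precision teraflops for one stick (hypothetical helper)."""
    return cards_per_stick * dp_gflops / 1000


per_stick = {
    name: stick_dp_tflops(cards, gflops)
    for name, cards, gflops in ACCELERATORS
}

for name, tflops in per_stick.items():
    print(f"{name}: {tflops:.3f} teraflops DP per stick")
```

On these assumptions the single-wide cards win on density simply because twice as many fit in a stick, even where the per-card rating is lower.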

SGI will also support a PCI-Express version of Tilera's Tile64Pro processor, called the Tile Encore, whose 64 cores are great at integer calculations like the kind used in image and signal processing, encryption and decryption, deep packet inspection, and other spooky workloads we can't tell you about or else we have to kill you. (You can get the scoop on Tilera's chips here.) Tilera is working on a 100-core chip for next year and hopes to scale it up to 200 cores within two years.

Obviously, there are other kinds of accelerators, such as FPGA cards, that could also be slotted into Project Mojo sticks.

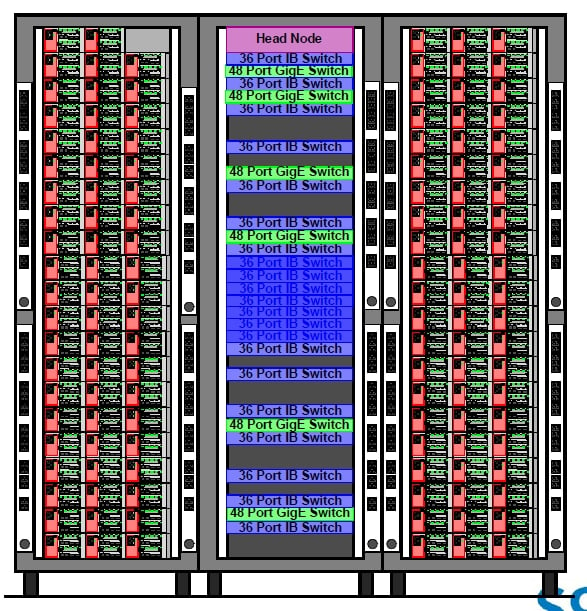

Back in June, SGI said it was working on two different racks that would eventually get ceepie-geepie sticks: a 19-inch, 42U rack called Destination (converging many different SGI and Rackable racks and simplifying its product line) as well as the special 24-inch racks used with the Altix UV 1000 blade supers. The Destination rack has a 2U enclosure that can hold three sticks side by side, and 21 of these enclosures are slid into the Destination rack thus:

You could put 126 sticks in this three-rack cabinet design, but for some reason SGI is only putting in 125. That gives you 250 double-wide or 500 single-wide GPUs and 125 Opteron 4100s acting as (for all intents and purposes) head nodes for a GPU cluster. The servers are linked with 36-port managed InfiniBand and 48-port unmanaged Gigabit Ethernet switches from Mellanox.
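The stick and GPU counts can be tallied with a quick Python sketch. It assumes two of the three racks hold compute enclosures, with the third given over to switches as described earlier, and four single-wide or two double-wide cards per stick; the constant and function names are ours.

```python
STICKS_PER_ENCLOSURE = 3    # three sticks side by side in a 2U enclosure
ENCLOSURES_PER_RACK = 21    # 21 x 2U fills a 42U Destination rack
COMPUTE_RACKS = 2           # the third rack holds the switches
DOUBLE_WIDE_PER_STICK = 2
SINGLE_WIDE_PER_STICK = 4


def max_sticks_per_cabinet() -> int:
    """Physical stick slots in a three-rack cabinet (hypothetical helper)."""
    return STICKS_PER_ENCLOSURE * ENCLOSURES_PER_RACK * COMPUTE_RACKS


def gpu_counts(sticks: int) -> tuple[int, int]:
    """Return (double-wide, single-wide) GPU capacity for a stick count."""
    return sticks * DOUBLE_WIDE_PER_STICK, sticks * SINGLE_WIDE_PER_STICK


for sticks in (max_sticks_per_cabinet(), 125):
    double_wide, single_wide = gpu_counts(sticks)
    print(f"{sticks} sticks: {double_wide} double-wide "
          f"or {single_wide} single-wide GPUs")
```

As far as we can tell, the 504-GPU figure behind the petaflops claim matches a cabinet with all 126 slots filled, while the 500-GPU figure matches the 125 sticks SGI actually ships.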

The InfiniBand links run at QDR (40 Gb/sec) speeds and are connected in a full non-blocking fat tree network. Mannel says that the Project Mojo system is designed to extend from one to 36 cabinets by interconnecting their InfiniBand switches, which would put you at around 9.5 petaflops using the AMD 9370 GPUs and around 14.4 petaflops using the AMD 9350 GPUs. With the Nvidia M2050s, those 36 cabinets could get you around 14 petaflops.

A fully loaded Prism XL rack using the Destination enclosure burns somewhere between 50 and 60 kilowatts under load (about half of the peak rating for the sticks) according to Mannel. The modified Altix UV 24-inch rack will hold 80 sticks instead of 63. No word on when this rack option will be available.

SGI is supporting Red Hat's Enterprise Linux 5.5 and CentOS 5.5 for operating systems on the stick servers now, with RHEL 6 coming in January. The CUDA programming environment for Nvidia GPUs and the OpenCL alternative promoted by AMD are obviously supported on the boxes, as are programming tools from Tilera, Allinea, Caps Enterprise, Portland Group, Rogue Wave, and Research Labs.

Altair PBS is used to schedule work on the Mojo accelerators, and system management is done by SGI's own Management Center Premium Edition (which it inherited from its Linux Networx asset buy a few years back). This whole shebang is known as the Accelerator Execution Environment.

SGI is showing off prototypes of the Project Mojo machines at SC10 this week, and will have them generally available in December. The price of a configured three-rack cabinet setup loaded with CPUs, GPUs, memory, disks, and switches will range from $4.5m to $5m, according to Mannel.

This is about 25 per cent less expensive than building a plain vanilla x64 cluster with InfiniBand switches using SGI's Altix supers, and it takes up a lot less space and electricity, too. ®