Original URL: https://www.theregister.com/2010/10/14/ibm_cloudburst_appliances/

IBM floats new CloudBurst stacks

Fluffy and virty x64 blades and Power7 racks

Posted in Channel, 14th October 2010 19:11 GMT

IBM has revamped its existing x64-based CloudBurst appliances as well as rolling out an alternative stack based on its own Power7-based systems. Apparently Big Blue doesn't want to be an also-ran in the race to sell preconfigured cloudy infrastructure to companies setting up virtualized private clouds.

IBM is also selling the secret sauce for deploying and managing virtual machines on CloudBursties, now called Service Delivery Manager (with the Tivoli label slapped on it for good measure), for use on non-Blue platforms.

The initial CloudBurst cloudy stacks from IBM were launched in June 2009 based on the company's BladeCenter HS22 Xeon-based blade servers and its DS3400 midrange disk arrays. The machines were preconfigured with VMware's ESX Server 3.5 hypervisor and a slew of management tools and a self-service portal then called CloudBurst V1.1.

In February of this year, IBM Global Services started peddling a set of training and installation services to help companies integrate CloudBurst stacks into their existing infrastructure, and start virtualizing physical server workloads and moving them over to the fluffy gear.

With the V2.1 rev of the CloudBurst stacks, IBM is moving to the HS22V blade server, which, as El Reg told you back in late February (two days before the CloudBurst services came out), is designed with a larger memory footprint specifically to better support virtualized machines.

The HS22V is based on Intel's 5520 chipset and can support quad-core Xeon 5500 or six-core Xeon 5600 processors in its two sockets. This blade can support up to 144GB of memory using 8GB DDR3 memory sticks and up to the full 288GB of memory supported on the Xeon 5600's integrated memory controller when using 16GB sticks.
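For the arithmetically inclined, here's a back-of-envelope sketch of the memory figures above. The DIMM slot count is inferred by dividing 144GB by 8GB sticks; it is not quoted from an IBM spec sheet, and the helper names are purely illustrative.

```python
# Back-of-envelope HS22V memory capacity, using the figures quoted in the article.
# Assumption: the slot count is inferred from 144GB / 8GB, not taken from IBM docs.

MAX_WITH_8GB_STICKS_GB = 144
STICK_8GB = 8
STICK_16GB = 16


def dimm_slots(max_capacity_gb: int, stick_gb: int) -> int:
    """Number of DIMM slots implied by a maximum capacity and a stick size."""
    if max_capacity_gb % stick_gb:
        raise ValueError("capacity is not a whole number of sticks")
    return max_capacity_gb // stick_gb


def max_memory_gb(slots: int, stick_gb: int) -> int:
    """Total memory when every slot holds a stick of the given size."""
    return slots * stick_gb


slots = dimm_slots(MAX_WITH_8GB_STICKS_GB, STICK_8GB)
print(slots)                             # 18
print(max_memory_gb(slots, STICK_16GB))  # 288
```

Filling the same 18 slots with 16GB sticks lands exactly on the 288GB ceiling of the Xeon 5600's memory controller.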

In the base configuration of the HS22V blades used in the CloudBurst V2.1 stacks, IBM is putting in 72GB of memory, which is 50 per cent more than the 48GB it configured on the HS22 blades in the original CloudBurst V1.1 stacks. Combined with the jump to VMware's latest ESX Server 4.1 hypervisor, this extra memory lets each blade server host 30 or more virtual machines.
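The 50 per cent figure is simple percentage growth from the old base memory to the new one; a minimal Python check, with an illustrative helper name:

```python
# Memory uplift from the CloudBurst V1.1 HS22 base config to the V2.1 HS22V base config.
OLD_BASE_GB = 48  # HS22 blades in CloudBurst V1.1
NEW_BASE_GB = 72  # HS22V blades in CloudBurst V2.1


def percent_increase(old: float, new: float) -> float:
    """Percentage growth going from old to new."""
    return (new - old) / old * 100


print(percent_increase(OLD_BASE_GB, NEW_BASE_GB))  # 50.0
```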

Depending on the CloudBurst configuration (IBM sells things in small, medium, or large sizes, like T-shirts), companies should be able to support 50 to 100 per cent more VMs on the new CloudBurst setups than on the old ones. IBM's testing shows that a single blade configured with 4GB of memory per VM can host 36 VMs.

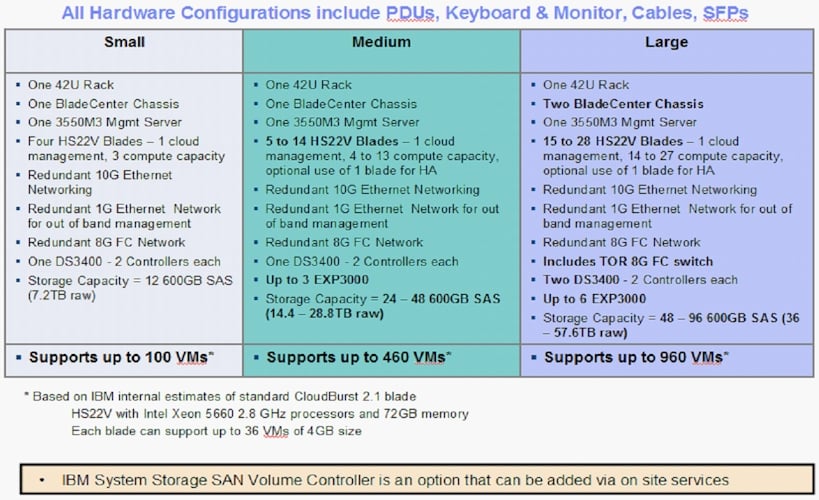

Here are the feeds and speeds of the CloudBurst V2.1 stacks using Intel's Xeon X5660 processors (which spin at 2.8GHz) in the HS22V blades:

As you can see, the small configuration is aimed at supporting 100 VMs (IBM is rounding down from 108). The bulk of the iron in the rack is storage, switches, a management server running what is now called Service Delivery Manager (instead of the CloudBurst software), and a mostly empty BladeCenter chassis. That chassis can hold 14 standard-width blade servers, with one blade per chassis used to control the provisioning of blades and VMs.
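Where does 108 come from? A rough sketch, assuming the small setup's four blades split into one management blade and three compute blades, each hosting the 36 VMs from IBM's testing. The rounding step of 50 is a hypothetical stand-in for however IBM picked its headline number.

```python
# Sizing the small x64 CloudBurst V2.1 config from figures in the article.
# Assumption: four blades in total, one of them reserved for management.

VMS_PER_BLADE = 36      # IBM's tested density at 4GB per VM
TOTAL_BLADES = 4
MANAGEMENT_BLADES = 1


def vm_capacity(total_blades: int, mgmt_blades: int, vms_per_blade: int) -> int:
    """VMs hosted by the blades left over once management is carved out."""
    return (total_blades - mgmt_blades) * vms_per_blade


def marketing_round(vms: int, step: int = 50) -> int:
    """Round down to a tidy headline number (hypothetical step size)."""
    return (vms // step) * step


raw = vm_capacity(TOTAL_BLADES, MANAGEMENT_BLADES, VMS_PER_BLADE)
print(raw)                   # 108
print(marketing_round(raw))  # 100
```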

In addition to boosting the processor core count and memory capacity on the blades, with the CloudBurst V2.1 private clouds IBM has put in 8Gb/sec Fibre Channel adapters out to the DS3400 disk arrays, and now allows companies to add in an out-of-band SAN Volume Controller to virtualize disk storage.

As it turns out, IBM has been shipping these three CloudBurst x64 configurations since September 30, with configurations ranging from four to 28 blades. An extra-large 56-blade setup (that's four fully loaded BladeCenter chassis) is coming in the fourth quarter. The small CloudBurst V2.1 setup using Xeon processors sells for $220,000. You can lease it from IBM Global Services for around $6,000 a month if your credit is good and you ink a 36-month lease.
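Some quick sums on those numbers, sketched in Python. The lease total ignores interest, fees, and any buyout at the end of the term, and the per-VM cost uses the 108 VMs of the small setup and counts hardware list price only, so treat both as rough.

```python
# Back-of-envelope figures for the x64 CloudBurst V2.1 line-up.
BLADES_PER_CHASSIS = 14
XL_CHASSIS = 4
LARGE_BLADES = 28

SMALL_PRICE_USD = 220_000
LEASE_MONTHLY_USD = 6_000   # "around" $6,000 a month, so approximate
LEASE_MONTHS = 36
SMALL_VMS = 108             # three compute blades x 36 VMs each

print(BLADES_PER_CHASSIS * XL_CHASSIS)     # 56 blades in the extra-large setup
print(LARGE_BLADES // BLADES_PER_CHASSIS)  # 2 chassis for the 28-blade setup

lease_total = LEASE_MONTHLY_USD * LEASE_MONTHS
print(lease_total)                         # 216000, close to the purchase price

print(round(SMALL_PRICE_USD / SMALL_VMS))  # 2037 dollars of list price per VM
```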

Fight The Power

On December 17, IBM will start peddling CloudBurst V2.1 configurations based on its Power 750 midrange Power7-based server. The Power 750 is the workhorse box in the midrange, and you might be wondering why IBM didn't stick with blade servers and use the new PS701 blades. The answer would seem to be more about performance and memory expandability than blade or virtual-machine density.

The PS701 blade has eight cores in a single socket, and the chips only spin at 3GHz. You can put ten logical partitions on each Power7 core, and this is the ratio IBM is using to size the CloudBurst V2.1 stacks based on the Power 750s. So do the math: if you took a base CloudBurst, yanked out the three base HS22V blades and replaced them with three PS701 blades, you should, in theory, be able to host 240 VMs using IBM's PowerVM hypervisor. That's more than twice the 108 VMs you can set up on the three HS22V Xeon blades. Even at a more conservative five VMs per core, three PS701 blades would host 120 VMs, still more than the Xeon blades manage.
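The blade-swap math above, sketched in Python. This is a paper exercise using the article's ratios, not a tested configuration; the function name is illustrative.

```python
# Hypothetical swap of the three HS22V blades for three PS701 blades.
PS701_CORES = 8
BLADES = 3
HS22V_VMS = 108  # three HS22V blades at 36 VMs each


def power_vms(blades: int, cores_per_blade: int, vms_per_core: int) -> int:
    """VMs (PowerVM logical partitions) at a fixed partitions-per-core ratio."""
    return blades * cores_per_blade * vms_per_core


for ratio in (10, 5):
    vms = power_vms(BLADES, PS701_CORES, ratio)
    # partitions per core, total VMs, multiple of the Xeon figure
    print(ratio, vms, round(vms / HS22V_VMS, 2))
# 10 240 2.22
# 5 120 1.11
```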

It's a mystery, and one that IBM does not explain. My guess is that IBM has Power 750 clusters sitting around, waiting to be loaded with either pureScale database clustering or CloudBurst configurations, and that, for whatever reason, Unix shops tend to prefer racks to blades, hence IBM's choice of rack servers for the Power-based CloudBurst machines.

IBM doesn't sell a two-socket, single-width Power7 blade server. You can make a two-socket blade by snapping two PS701s together (the result is called the PS702), but that doesn't improve density at all, even though it does allow for tight SMP clustering of the two blades.

What IBM really needed was a two-socket PS711 blade server, fitted with faster 3.72GHz processors. Then it needed to put that blade into the CloudBurst setups while proving that VM density is better than on Xeon-based blades for the same performance on equivalent workloads, if that does in fact turn out to be the case.

All IBM does say, rather vaguely, is that a Power Systems cloud based on the CloudBurst setup can be between 70 and 90 per cent less expensive on a cost-per-image basis than standalone, physical x64 machines or public clouds (presumably Amazon EC2). IBM offers no figures to back that claim, and no comparable metric for the x64-based CloudBurst setups. Presumably those are even less expensive, but maybe not, given what VMware charges for ESX Server.

The Power 750 nodes used in the Power variants of the CloudBurst V2.1 stacks cram 32 cores and up to 512GB of main memory into a 4U chassis. The Power 750 does not support the higher 3.72GHz or 3.86GHz clock speeds available on other Power Systems machines, and it is a relatively expensive box. It does support 3GHz and 3.3GHz chips as well as very pricey 3.55GHz ones.

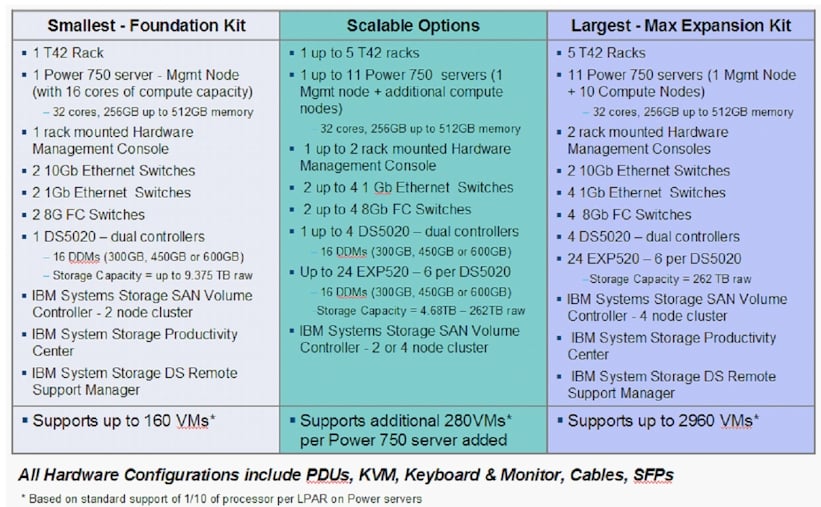

Here's what the Power 750-based CloudBurst V2.1 small, medium, and large configurations look like:

IBM is using 16 cores in the first Power 750 to run the CloudBurst management software, and can add up to ten more Power 750s, plus DS5020 disk arrays, EXP520 expansion units, SAN Volume Controller, and all the switching gear needed to lash it all together across five racks. The base setup supports 160 VMs on the 16 remaining cores of that single Power 750, while the five-rack CloudBurst can host 2,960 VMs. The Power-based setups use beefier disk arrays and have storage virtualization built in from the get-go.
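A quick check of the Power 750 figures, using IBM's ten-VMs-per-core sizing ratio. Dividing the five-rack total evenly across racks gives the per-rack number IBM's Power setups are compared on; the even split is an assumption for illustration.

```python
# Checking the Power 750 CloudBurst figures quoted in the article.
VMS_PER_CORE = 10
SPARE_CORES_BASE = 16   # the other 16 cores in the first Power 750 run management
FIVE_RACK_VMS = 2_960
RACKS = 5

print(SPARE_CORES_BASE * VMS_PER_CORE)  # 160 VMs in the base setup
print(FIVE_RACK_VMS // RACKS)           # 592 VMs per rack, averaged
```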

The Xeon CloudBurst machines offer 960 VMs per rack, compared to 592 per rack for the Power 750 setups. With the PS701 blade, you could do 1,040 VMs per rack, and with a true two-socket Power7 blade, IBM could double that to 2,080 in two chassis.
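One way to reproduce the 1,040 figure, sketched in Python: assume a single 14-slot BladeCenter chassis with one blade kept back for management, eight-core blades, and IBM's ten VMs per core. Doubling the cores per blade, as a hypothetical two-socket single-width blade would, doubles the total.

```python
# Blade density for a hypothetical all-Power7 blade CloudBurst.
# Assumptions: 14 slots per BladeCenter chassis, one blade reserved for
# management, and IBM's ten-VMs-per-core sizing ratio.
SLOTS = 14
MGMT_BLADES = 1
VMS_PER_CORE = 10


def chassis_vms(cores_per_blade: int) -> int:
    """VMs hosted by the compute blades in one chassis."""
    return (SLOTS - MGMT_BLADES) * cores_per_blade * VMS_PER_CORE


print(chassis_vms(8))   # 1040 with eight-core PS701 blades
print(chassis_vms(16))  # 2080 with a 16-core, two-socket blade
```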

Pricing for the Power version of the CloudBurst setup was not available at press time. ®