Original URL: https://www.theregister.com/2010/09/24/google_percolator/

Google Percolator – global search jolt sans MapReduce comedown

The machine that brews the Caffeine

Posted in HPC, 24th September 2010 18:49 GMT

Google Caffeine — the revamped search infrastructure recently rolled out across Google's worldwide network of data centers — is based on a distributed data-processing system known as Percolator. Designed by Google and, until now, jealously guarded by Google, Percolator is a platform for "incremental processing" — a means of continually updating the company's epic search index without reprocessing the entire thing from scratch.

As Google senior director of engineering Eisar Lipkovitz told The Register earlier this month, the new platform is a speedier alternative to MapReduce, the distributed number-crunching platform that underpinned the company's previous indexing system. Two New York-based Google engineers — Daniel Peng and Frank Dabek — discuss the platform at length in a paper they are scheduled to present at the annual USENIX Symposium on Operating Systems Design and Implementation (OSDI) next month in Vancouver.

"MapReduce and other batch-processing systems cannot process small updates individually as they rely on creating large batches for efficiency," the paper reads. "We have built Percolator, a system for incrementally processing updates to a large data set, and deployed it to create the Google web search index. By replacing a batch-based indexing system with an indexing system based on incremental processing using Percolator, we process the same number of documents per day, while reducing the average age of documents in Google search results by 50%."

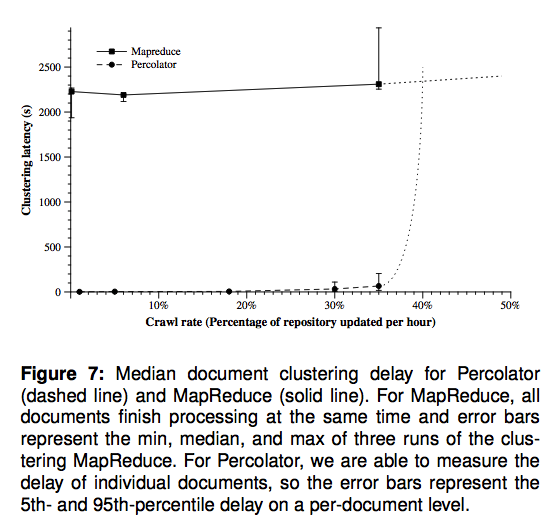

Speaking with The Register, Lipkovitz compared the system to classic database programming and the use of "database triggers." Because the index can be updated incrementally, the median document moves through Caffeine over 100 times faster than it moved through the company's old MapReduce setup. "The Percolator-based indexing system (known as Caffeine), crawls the same number of documents, but we feed each document through Percolator as it is crawled," the paper says. "The immediate advantage, and main design goal, of Caffeine is a reduction in latency."

In the past, Google's search index (an index of the entire web) was built with a series of batch operations. The MapReduce platform "maps" tasks across a vast collection of distributed machines, splitting them into tiny sub-tasks, before "reducing" the results into one master calculation. Google's webcrawlers would supply the raw data (the webpages and weblinks) and MapReduce would process this data, determining, among other things, each site's PageRank, that famous measure of how many other sites link to it.
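The map-then-reduce pattern can be illustrated with a minimal single-process sketch in Python. It counts inbound links per page, a crude stand-in for link analysis and not Google's PageRank computation. The page names, the `map_links` and `reduce_counts` functions, and the toy crawl data are all assumptions made for illustration.

```python
from collections import defaultdict

# Toy crawl data (hypothetical): page -> pages it links to.
CRAWL = {
    "a.example": ["b.example", "c.example"],
    "b.example": ["c.example"],
    "c.example": ["a.example"],
}

def map_links(page, outlinks):
    """Map step: emit one (target, 1) pair per outbound link."""
    return [(target, 1) for target in outlinks]

def reduce_counts(pairs):
    """Reduce step: sum the emitted values for each key."""
    totals = defaultdict(int)
    for target, count in pairs:
        totals[target] += count
    return dict(totals)

def inbound_link_counts(crawl):
    # On a real cluster the map calls would be spread over many machines;
    # here they simply run one after another in a loop.
    emitted = []
    for page, outlinks in crawl.items():
        emitted.extend(map_links(page, outlinks))
    return reduce_counts(emitted)

counts = inbound_link_counts(CRAWL)
```

Note that the reduce step needs every emitted pair before the totals are final, which is why a batch job of this shape has to see the whole input.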

MapReduce reduced

This worked well enough. But, the Google engineers say, it wasn't suited to rapidly updating the index. "Consider how to update that index after recrawling some small portion of the web. It’s not sufficient to run the MapReduces over just the new pages since, for example, there are links between the new pages and the rest of the web. The MapReduces must be run again over the entire repository, that is, over both the new pages and the old pages," they explain.

"Given enough computing resources, MapReduce’s scalability makes this approach feasible, and, in fact, Google’s web search index was produced in this way prior to the work described here. However, reprocessing the entire web discards the work done in earlier runs and makes latency proportional to the size of the repository, rather than the size of an update."

With the old system, the company crawled several billion documents each day and fed them, together with an epic collection of existing documents, through a sequence of roughly 100 MapReduces. Because the system was sequential, this meant that each document spent two to three days being indexed before it would actually turn up in Google's live search results.

Percolator slashes this delay by providing random access to the existing multi-petabyte index, letting Google make updates without reprocessing the entire repository. "Random access allows us to process documents individually, avoiding the global scans of the repository that MapReduce requires," the paper says. The system runs across a sea of machines, making vast numbers of changes in parallel with what the company calls ACID-compliant database transactions.
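The difference between rescanning the repository and touching only the changed document can be sketched in a few lines of Python. This illustrates the general idea rather than Percolator's implementation: the in-memory `repository` dict, the function names, and the "pages touched" cost measure are all hypothetical.

```python
from collections import Counter

def rebuild_counts(repository):
    """Batch style: scan every page to compute inbound-link counts."""
    counts = Counter()
    pages_touched = 0
    for outlinks in repository.values():
        pages_touched += 1
        counts.update(outlinks)
    return counts, pages_touched

def apply_update(repository, counts, page, new_outlinks):
    """Incremental style: adjust the counts using only the recrawled page."""
    old_outlinks = repository.get(page, [])
    counts.subtract(old_outlinks)   # retract the page's old links
    counts.update(new_outlinks)     # add its new links
    repository[page] = list(new_outlinks)
    return 1  # number of pages touched

# Toy repository of 1,000 pages, each linking to the next one.
repository = {f"page{i}": [f"page{(i + 1) % 1000}"] for i in range(1000)}
counts, batch_cost = rebuild_counts(repository)

# Recrawl one page: it now links to page5 instead of page1.
incremental_cost = apply_update(repository, counts, "page0", ["page5"])

# Check the incremental result against a full rebuild.
fresh_counts, _ = rebuild_counts(repository)
matches = (+counts == fresh_counts)  # unary + drops zero counts
```

In this toy setup the rebuild touches all 1,000 pages while the update touches one, which mirrors the paper's point that batch latency tracks the size of the repository rather than the size of the update.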

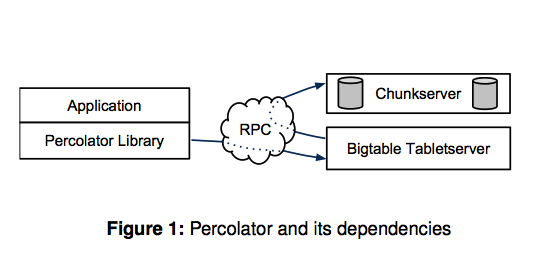

As Lipkovitz indicated, Percolator runs atop BigTable (Google's distributed database platform) and GFS (its distributed file system). Lipkovitz also explained that the system uses a new version of GFS known as "Colossus" or GFS2, but this is not explicitly discussed in the paper. (You can find more on GFS2 here).

Percolator uses the same basic interface as BigTable. Data is stored in BigTable rows and columns, with Percolator metadata stacked up in its own "special" columns. And it uses a modified version of the BigTable API, with BigTable operations wrapped in computations specific to Percolator.

Unlike BigTable, Percolator does multi-row transactions, and it offers a compute framework for executing code atop the database. This framework is built around what Google calls "observers". In essence, these provide a means of organizing the myriad transactions. "Programmers of an incremental system need to keep track of the state of the incremental computation," the paper says. "To assist them in this task, Percolator provides observers: pieces of code that are invoked by the system whenever a user-specified column changes."

Death to stragglers

In a Percolator cluster, three pieces run on each machine: a Percolator worker, a BigTable tablet server, and a GFS chunkserver. With GFS, master nodes oversee data spread across a series of distributed chunkservers, which store, yes, chunks of data. Observers hook into the Percolator worker, and the worker interfaces with BigTable. GFS, as Lipkovitz explained, is the database's underlying storage engine.

Whereas MapReduce nabbed all of the data for tens or even hundreds of webpages, Percolator executes roughly fifty BigTable operations when processing a single document.

Percolator applications are essentially a series of observers. Each observer completes a task and passes more work on to the next observer by writing to the table. There are relatively few observers per app: Caffeine uses about 10. Because the system can operate without rescanning the entire index, it's much simpler than the 100-MapReduce indexing setup of the past. And with latency reduced, Google can expand the size of its index. Caffeine's collection of documents is three times larger than that used by the old MapReduce system.
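The observer chain can be sketched with a toy in-memory table in Python. This is only an illustration of the pattern as the paper describes it, code invoked when a given column changes, with each observer handing work to the next by writing to the table. The `ToyTable` class, its `observe`, `write` and `run` methods, and the column names are hypothetical and are not Percolator's actual API.

```python
from collections import defaultdict, deque

class ToyTable:
    """In-memory stand-in for a table with column-change observers.

    Hypothetical API for illustration only; not Percolator's interface.
    """

    def __init__(self):
        self.rows = defaultdict(dict)       # row key -> {column: value}
        self.observers = defaultdict(list)  # column -> [callback]
        self.pending = deque()              # queued (row, column) notifications

    def observe(self, column, callback):
        self.observers[column].append(callback)

    def write(self, row, column, value):
        self.rows[row][column] = value
        self.pending.append((row, column))

    def run(self):
        """Invoke observers until no notifications remain."""
        while self.pending:
            row, column = self.pending.popleft()
            for callback in self.observers[column]:
                callback(self, row)

def parse_document(table, row):
    # First observer: pull links out of the raw text, then write them back.
    raw = table.rows[row]["raw"]
    links = [word for word in raw.split() if word.startswith("http")]
    table.write(row, "links", links)

def count_links(table, row):
    # Second observer: triggered by the write to the "links" column.
    table.write(row, "link_count", len(table.rows[row]["links"]))

table = ToyTable()
table.observe("raw", parse_document)
table.observe("links", count_links)

# A crawler writing one document kicks off the whole chain.
table.write("doc1", "raw", "see http://a.example and http://b.example")
table.run()
```

The crawler never calls the observers directly. Writing the `raw` column is enough to trigger the first one, and its write to `links` triggers the second.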

The size of the system, Google engineers say, is limited only by the available disk space.

Percolator also avoids the MapReduce "straggler" problem, where a few slow operations can hold up the entire process, and according to the Google engineers, it's easier to operate. "In the old system, each of a hundred different MapReduces needed to be individually configured and could independently fail. Also, the 'peaky' nature of the MapReduce workload made it hard to fully utilize the resources of a datacenter compared to Percolator’s much smoother resource usage."
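The straggler effect comes down to simple arithmetic: a batch stage finishes only when its slowest task does, whereas items processed independently each finish on their own schedule. The short Python sketch below uses made-up task durations and assumes all tasks start at the same time.

```python
from statistics import median

# Hypothetical per-task durations in seconds; the last task is a straggler.
durations = [10, 11, 9, 10, 12, 10, 300]

# Batch stage: nothing is released until every task in the stage is done.
batch_latency = max(durations)

# Independent processing: each item is ready as soon as its own task ends,
# so the typical item is unaffected by the straggler.
median_latency = median(durations)
```

With these numbers a single slow task stretches the batch stage to 300 seconds, while the median item still finishes in 10.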

The rub is that Caffeine uses roughly twice the resources to keep up with the same crawl rate. According to the paper, Percolator performance lies somewhere between that of MapReduce and a traditional database management system (DBMS). "Because Percolator is a distributed system, it uses far more resources to process a fixed amount of data than a traditional DBMS would; this is the cost of its scalability. Compared to MapReduce, Percolator can process data with far lower latency, but again, at the cost of additional resources required to support random lookups."

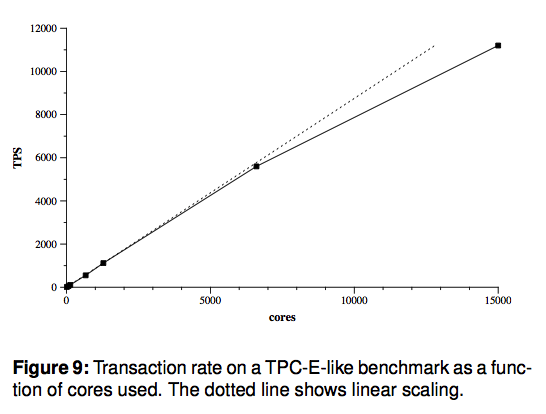

According to Peng and Dabek, the performance of the system will scale almost linearly as resources are added, as indicated by tests with the industry-standard TPC-E benchmark. But that added overhead may be an issue. "The system achieved the goals we set for reducing the latency of indexing a single document with an acceptable increase in resource usage compared to the previous indexing system," the paper concludes.

"The TPC-E results suggest a promising direction for future investigation. We chose an architecture that scales linearly over many orders of magnitude on commodity machines, but we’ve seen that this costs a significant 30-fold overhead compared to traditional database architectures. We are very interested in exploring this tradeoff and characterizing the nature of this overhead: how much is fundamental to distributed storage systems, and how much can be optimized away?" ®