Original URL: https://www.theregister.com/2010/09/16/sandy_bridge_ring_interconnect/

Intel Sandy Bridge many-core secret sauce

One ring to rule them all

Posted in Personal Tech, 16th September 2010 23:27 GMT

IDF During the coming-out party for Intel's Sandy Bridge microarchitecture at Chipzilla's developer shindig in San Francisco this week, two magic words were repeatedly invoked in tech session after tech session: "modular" and "scalable". Key to those Holy Grails of architectural flexibility is the architecture's ring interconnect.

"We have a very modular architecture," said senior principal engineer Opher Kahn at one session. "This ring architecture is laid out in such a way that we can easily add and remove cores as necessary. The graphics can also have different versions."

How many cores was Kahn talking about? At another session he referred to "some future implementation with 10 cores or 16 cores." And although all of the materials presented at the conference referenced a four-core Sandy Bridge implementation, Kahn also referred at one point to a "two core product."

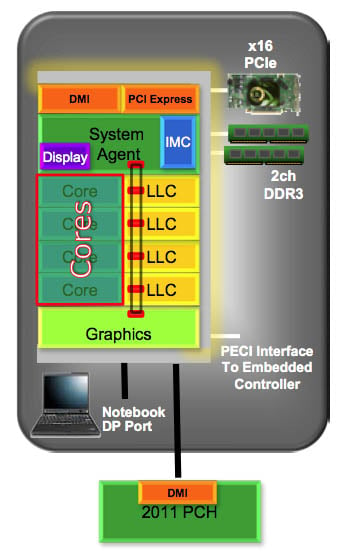

The Sandy Bridge ring interconnect manages how the various and sundry parts of the processor communicate with one another: the compute cores with one shared "cache box" per core, the graphics subsystem, and finally the "system agent" — the chip area that includes such niceties as PCIe, direct media interface (DMI), integrated memory controller (IMC), display engine, and power management.

Here, the ring interconnect is the loop that looks like a train track

"The cores talk to the ring directly, they don't talk to any other element," said Kahn. "The graphics talks to the ring, the ring talks to the cache box, and the cache box talks to the system agent. And in some cases they can talk to each other, but all communication is done over the ring."

The ring interconnect was needed for a number of reasons, not the least of which is that Sandy Bridge crams so many different functions onto one piece of silicon.

"In the previous generation we really had a multi-chip package, with a separate CPU — a more traditional CPU that looked a little bit like Merom and Conroe family, the Nehalem — with cores and a last-level cache," Kahn said — in Intel's latest parlance, by the way, what previously was often referred to as an L3 cache is now known as a last-level cache, or LLC for short.

Connected to that CPU in the same package was a second chip with integrated graphics, memory control, PCIe, and more. For Sandy Bridge, "We basically dropped all that and integrated everything into one piece of silicon," he said.

The development of the ring interconnect started from a relatively clean sheet of paper. "We really started from scratch," said Kahn. "Everything that connects the cores, connects to the system, memory controller: everything was redesigned for Sandy Bridge from scratch."

Not that a ring interconnect is brand new in Sandy Bridge. "The ring architecture as a concept actually started in Nehalem-EX," said Kahn, "which is an eight-core server. They needed that bandwidth for server; we actually figured out that we need similar bandwidth and similar behavior in the client space."

And the bandwidth that the ring interconnect provides is impressive. "Our bandwidth provided by this ring for each element connected to the ring connect gives 96 gigabytes per second ... if you're talking about running at 3GHz," Kahn said. "The multi-bank last-level cache for a four-core product provides upwards of 380 gigabytes per second. This is 4X of what existed in previous generations; even the two-core product is 190 gigabytes per second."
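Those numbers fall out of simple arithmetic, which the sketch below reproduces. It assumes each ring stop moves one 32-byte packet per clock (the data-ring width Kahn quotes) and that the last-level cache figure is just the per-stop figure multiplied by the number of cache banks, one per core. The function and constant names are illustrative, not Intel's.

```python
RING_WIDTH_BYTES = 32  # data-ring width quoted by Kahn


def per_stop_bandwidth_gb_s(clock_ghz: float) -> float:
    """GB/s for one ring stop, assuming one 32-byte packet per clock."""
    return RING_WIDTH_BYTES * clock_ghz


def llc_bandwidth_gb_s(banks: int, clock_ghz: float) -> float:
    """Aggregate cache bandwidth, assuming it scales linearly with bank count."""
    return banks * per_stop_bandwidth_gb_s(clock_ghz)


print(per_stop_bandwidth_gb_s(3.0))  # 96.0  -> "96 gigabytes per second"
print(llc_bandwidth_gb_s(4, 3.0))    # 384.0 -> "upwards of 380"
print(llc_bandwidth_gb_s(2, 3.0))    # 192.0 -> roughly the quoted 190
```

Under those assumptions the quoted 380 and 190 look like rounded versions of 384 and 192.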

A four-fold increase in bandwidth "is really a necessity for the graphics," he said. "Probably higher than or close to what all four cores need together can be consumed by the graphics."

The scalable choo-choo

Although the bandwidth of the earlier Nehalem-EX ring design (upgraded for the Westmere-EX) was also impressive, it didn't live up to the promise of those two aforementioned magic words. "The previous generation Nehalem and Westmere have a single last-level cache with an interconnect that was shared and went all to one place so it wasn't very scalable or modular," said Kahn.

Now, thanks in part to the ring interconnect, he contends: "The addition and removal of cores, caches, and graphics blocks is quite easy in this architecture because it's so regular and it was designed up-front to be able to easily support different products."

The ring is an ingenious beast. For one thing, as Kahn explains: "The ring itself is really not a [single] ring: we have four different rings: a 32-byte data ring, so every cache-line transfer — because a cache line is 64 bytes — every cache line is two packets on the ring. We have a separate request ring, and acknowledge ring, and a [cache] snoop ring — they're used, each one of these, for separate phases of a transaction."
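The two-packets-per-line arithmetic can be sketched in a few lines of Python. The 64-byte and 32-byte sizes are Kahn's; the helper `split_into_packets` and the constant names are hypothetical, chosen only for illustration.

```python
CACHE_LINE_BYTES = 64        # cache-line size quoted by Kahn
DATA_RING_WIDTH_BYTES = 32   # data-ring width quoted by Kahn


def split_into_packets(line: bytes, width: int = DATA_RING_WIDTH_BYTES) -> list:
    """Chop one cache line into ring-width packets, preserving order."""
    if len(line) % width != 0:
        raise ValueError("line length must be a multiple of the ring width")
    return [line[i:i + width] for i in range(0, len(line), width)]


cache_line = bytes(range(CACHE_LINE_BYTES))  # dummy 64-byte payload
packets = split_into_packets(cache_line)
print(len(packets))                          # 2
print(b"".join(packets) == cache_line)       # True
```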

One nifty element of the design, and one that adds to Sandy Bridge's modularity, is the fact, as Kahn explained: "Each one of our stops on the ring is actually one clock, so we can run at core frequency between each of the cache boxes. Each time we step on the ring it's one clock."

What's modulicious and scalariffic about that is "when the cores scale up, and they want high performance and high bandwidth and low latency," he said, "the cache box and the ring scale up with it, running at exactly the same frequency so you get shorter latencies." In other words, if you increase the clock speed of the compute cores, you increase the clock speed of the ring right along with them.
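A back-of-the-envelope sketch shows why that matters for latency. It assumes the one-clock-per-stop figure Kahn gives and counts only the time spent travelling around the ring, ignoring cache lookup time and everything else. The hop count and clock speeds are made-up inputs, and `ring_transit_ns` is a hypothetical helper.

```python
def ring_transit_ns(hops: int, clock_ghz: float) -> float:
    """Nanoseconds to cross `hops` ring stops at one clock per stop."""
    if clock_ghz <= 0:
        raise ValueError("clock_ghz must be positive")
    return hops / clock_ghz  # cycles divided by cycles-per-nanosecond


print(ring_transit_ns(hops=4, clock_ghz=2.0))  # 2.0
print(ring_transit_ns(hops=4, clock_ghz=4.0))  # 1.0
```

Doubling the clock halves the transit time for the same number of hops, which is the "shorter latencies" Kahn describes.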

While the ring may seem simple in concept, it's silicon-intensive in implementation. "We have massive routing," Kahn says. "The ring itself is more than a thousand wires, but the designers have found a way to route this over the last-level cache in a way that doesn't take up any more space."

The way that the ring interconnect communicates with the chip's various elements adds to Sandy Bridge's modularity and scalability, as well, since it doesn't really care how many cores and cache boxes it's talking to.

Kahn explained that you should "think about the ring as like a train going along with cargo," and that a ring slot without data is analogous to a boxcar without cargo. "So how do I know if I can get on the ring?" he asked, then answered: "In other busses we call that 'arbitration'. And the arbitration here is pretty simple."

How simple? Very simple. "If the train's coming along and that slot is empty... I can get on. So the decision is totally local. I don't need to see what is happening at any other place on the ring in order to know if I can get on the ring. That is 'distributed arbitration' — that makes it very simple, very localized, and very scalable."
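Kahn's boxcar rule is easy to mimic in a toy simulation. The sketch below is not Intel's design: it assumes a single ring that runs in one direction, one slot per stop, and a stop that unloads a packet addressed to it before deciding whether to board a new one. The `SlotRing` and `Packet` names are invented for illustration. The point is that each stop looks only at the slot in front of it.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass
class Packet:
    src: int  # stop that put the packet on the ring
    dst: int  # stop that should take it off


class SlotRing:
    """Toy one-direction ring: one slot per stop, each slot advances one stop per clock."""

    def __init__(self, num_stops: int) -> None:
        self.slots: List[Optional[Packet]] = [None] * num_stops
        self.pending: List[Deque[Packet]] = [deque() for _ in range(num_stops)]
        self.delivered: List[Packet] = []

    def send(self, src: int, dst: int) -> None:
        """Queue a packet at its source stop until an empty slot comes by."""
        self.pending[src].append(Packet(src, dst))

    def step(self) -> None:
        """Advance one clock: move the train, then let every stop act locally."""
        # The slot that was at stop i is now at stop i + 1 (wrapping around).
        self.slots = [self.slots[-1]] + self.slots[:-1]
        for stop in range(len(self.slots)):
            slot = self.slots[stop]
            if slot is not None and slot.dst == stop:
                self.delivered.append(slot)  # cargo has arrived, unload it
                self.slots[stop] = None
            # The whole arbitration decision: is the slot in front of me empty?
            if self.slots[stop] is None and self.pending[stop]:
                self.slots[stop] = self.pending[stop].popleft()


ring = SlotRing(num_stops=4)
ring.send(src=0, dst=2)
ring.step()                  # stop 0 sees an empty slot and boards
ring.send(src=1, dst=3)
ring.step()                  # the occupied slot passes stop 1, which must wait
print(len(ring.pending[1]))  # 1
ring.step()                  # an empty slot reaches stop 1, which boards
print(len(ring.pending[1]))  # 0
```

No stop ever inspects another stop's state, so adding stops adds no inputs to anyone's decision.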

Sandy Bridge's arbitration is far more scalable than other methods. "If we had a central arbiter that looked at everything that was happening and decided what happens in the next clock," Kahn said, "then that wouldn't scale up to, let's say, some future implementation with 10 cores or 16 cores because there'd be too many decisions, too many inputs, and too many outputs."

And the distributed arbitration scheme works for all of the rings, not just for data: "If you're a core and you want to get on the ring and go over there," Kahn says, all that core has to do is ask: "'Is this slot empty on this ring? I can get on it.' Of course, each separate ring — we have four rings — so each one of them have their own slots, and if it's a data request it's going to look only at the data ring to get on, and so forth."
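The per-ring check can be sketched the same way. The four ring names below come from Kahn's description; the `RingKind` enum, the `can_board` helper, and the snapshot of slot occupancy are hypothetical. The only claim is that a message consults the slot on its own ring and ignores the other three.

```python
from enum import Enum


class RingKind(Enum):
    DATA = "data"
    REQUEST = "request"
    ACKNOWLEDGE = "acknowledge"
    SNOOP = "snoop"


def can_board(slot_occupied: dict, kind: RingKind) -> bool:
    """True if the slot now passing this stop on ring `kind` is empty."""
    return not slot_occupied[kind]


# Hypothetical snapshot at one stop: the data slot is full, the rest are empty.
passing_slots = {
    RingKind.DATA: True,
    RingKind.REQUEST: False,
    RingKind.ACKNOWLEDGE: False,
    RingKind.SNOOP: False,
}
print(can_board(passing_slots, RingKind.DATA))     # False
print(can_board(passing_slots, RingKind.REQUEST))  # True
```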

The new ring interconnect, according to Kahn, has a bright future: "This ring, in a very similar implementation, is going to go in the Sandy Bridge-EP and -EX with their large numbers of cores, and we see this ring scaling forward into the next generation as well."

And not just the next generation: "We actually believe this architecture is going to be scalable into any foreseeable future that we have with Intel for the client space," Kahn said, "and, believe me, we are looking quite far ahead into the future, and we're going to be able to maintain this ring architecture for a long time."

That future will include multiple client and server Sandy Bridge parts, chips based on its follow-on Ivy Bridge, and beyond, and it will involve scaling up and down the number of core and cache-box modules, and swapping out integrated-graphics modules with more or less powerful units. ®