Original URL: https://www.theregister.com/2009/03/30/ibm_nehalem_servers/

IBM punts two racks, a blade, and a hybrid thingy

Enterprise avant garde

Posted in Channel, 30th March 2009 19:07 GMT

Nehalem Day IBM is looking for the new Nehalem EP-based servers to kick-start its System x rack server and BladeCenter blade server business, which saw a steepening decline in sales as 2008 wound down.

Big Blue will today announce two new rack servers, a blade server, and some configurable compute and storage nodes for its avant garde iDataplex custom-made server clusters. It is interesting to note that the company will not, as yet, put a tower-style machine - the kind sometimes preferred by small and medium businesses - into the field.

IBM does a lot of its own direct sales to large enterprises, and the Nehalem machines it announces today are clearly aimed at enterprise customers. The SMB shops will get their towers soon enough, and besides, IBM almost certainly prefers that they use the BladeCenter S chassis - which runs on 120-volt power and which is designed for an office environment - instead of towers at this point in the history of computing.

"Lots of folks are going to announce hardware, and we did that too," jokes Alex Yost, vice president of BladeCenter products at IBM. Yost says that given the state of the global economy, IBM, like its competitors, is focusing as much on reducing the cost of administering machines as on the cost of powering and cooling them. IBM is also keen to make its x64 machines less frustrating to set up and support.

For instance, the new machines all support the Unified Extensible Firmware Interface (UEFI), a superset of the BIOS as a means of setting up the iron inside a box. UEFI is backwards compatible with BIOSes, but it has a more modern user interface and can be configured remotely. And in IBM's case, the "beep codes" that BIOSes normally use to tell you something is wrong are now converted into messages (available in lots of languages) that are displayed on the Lightpath diagnostics screens on the front of the server.

The servers also include a new generation of service processor that IBM is calling the Integrated Management Module, which combines diagnostics and remote and local management of servers. This is where the extended BIOS lives. IBM has also collected more than 42 different System x and BladeCenter sites (used for patching and deploying these x64 servers) into a single tool center with just eight different tools - and all have the same look and feel.

IBM is also rolling out a tweaked Systems Director systems management tool, release 6.1, which now has a virtualization manager integrated into it. This tool can be used to manage power consumption on both IBM and non-IBM x64 iron. In addition to having lots of sensors scattered around the machines to monitor temperature and power consumption, the machines also have built-in altimeters, so administration tools can take the effects of altitude on the running of the machinery into account.
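IBM doesn't say how its tools use the altimeter readings, but the physics behind them is simple enough: thinner air carries less heat per cubic metre, so the fans have to move more of it. The sketch below is a hypothetical illustration, not IBM's algorithm. It uses the International Standard Atmosphere pressure formula and assumes the inlet air is held at the same temperature regardless of altitude; the function names are made up.

```python
# Hypothetical sketch, not IBM's Systems Director logic: estimate how much more
# air a server must move at altitude to shed the same amount of heat.


def pressure_ratio(altitude_m: float) -> float:
    """Barometric pressure at altitude_m relative to sea level (ISA troposphere model)."""
    if not 0 <= altitude_m <= 11_000:
        raise ValueError("model is only valid from sea level to 11,000 m")
    return (1 - 2.25577e-5 * altitude_m) ** 5.25588


def airflow_scale(altitude_m: float) -> float:
    """Volumetric airflow needed at altitude_m, relative to sea level.

    Heat removed = mass flow * c_p * temperature rise, and mass flow = density *
    volumetric flow. With inlet air held at the same temperature, density scales
    with pressure (ideal gas), so the required volumetric flow scales with 1/pressure.
    """
    return 1.0 / pressure_ratio(altitude_m)


for altitude in (0, 1_000, 2_000, 3_000):
    print(f"{altitude:>5} m: {airflow_scale(altitude):.2f}x the sea-level airflow")
```

At 1,000 metres the pressure is roughly 89 per cent of the sea-level figure, so the same box needs around an eighth more airflow, which is the kind of adjustment altitude telemetry makes possible.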

"This is all about telemetry," says Yost. "We have done a lot of work to simplify the task of getting the most efficiency and productivity out of the machines."

Generally speaking, Yost says that a Nehalem EP-based server will deliver about twice the performance of a two-socket Xeon DP box using "Harpertown" processors and that if you compare it to Xeon boxes from three years ago, you can get about nine times the aggregate performance in the new Nehalem two-socket machines.

Here's the significance of that comparison. Yost says that if you take 137 1U rack-mounted servers from three years ago (presumably using single-core processors), you can cram the same amount of computing capacity into a single BladeCenter chassis with 14 Nehalem blade servers - and the acquisition pays for itself in about seven months on power and cooling savings alone.
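The consolidation arithmetic behind Yost's pitch can be checked in a few lines. The payback function below is the generic, undiscounted "upfront cost divided by monthly savings" formula, an assumption on our part rather than IBM's ROI model; the article gives only the seven-month result, not the cost inputs, so none are supplied here.

```python
def consolidation_ratio(old_servers: int, new_servers: int) -> float:
    """How many old machines each new machine stands in for."""
    return old_servers / new_servers


def payback_months(upfront_cost: float, monthly_savings: float) -> float:
    """Simple, undiscounted payback period in months."""
    if monthly_savings <= 0:
        raise ValueError("monthly_savings must be positive")
    return upfront_cost / monthly_savings


# Yost's example: 137 three-year-old 1U servers versus 14 Nehalem blades.
ratio = consolidation_ratio(137, 14)
print(f"Each blade replaces about {ratio:.1f} old servers")
```

That works out to roughly 9.8 old servers per blade, broadly in line with Yost's "about nine times" performance claim for the two-socket Nehalem machines.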

This is clearly going to be the IBM sales pitch, and one that you will hear from all server makers starting this week. Customers moving from racks to blades tend to be interested in consolidation, and that often means virtualizing the servers. But Yost says there are some customers who replace servers on a one-for-one basis as they upgrade, and they are not as interested in using virtualization to drive down power and cooling costs and server footprints as they are in packing a lot more performance into the same thermal envelope.

Now, let's take a look at the new IBM iron, starting with the two rack servers, then move onto the blade and then finish up with the iDataplex nodes.

A closer look

The System x3550 M2 is a 1U rack server that supports dual-core and quad-core Xeon 5500s using the Intel 5520 chipset. It supports the dual-core E5502, which runs at 1.86 GHz and has 4 MB of L3 cache. It also supports the standard quad-core Nehalems - E5504 (2 GHz), E5506 (2.13 GHz), E5520 (2.26 GHz), E5530 (2.4 GHz), and E5540 (2.53 GHz) - as well as the low-voltage L5520 (2.26 GHz) and the extreme variants (which run hotter), the X5550 (2.66 GHz) and X5570 (2.93 GHz).

All of these quad-core Xeons except the two lowest-speed models have 8 MB of L3 cache on the die, shared by all four cores. The two slowest, the E5504 and E5506, have a 4 MB L3 cache. These Nehalem EP chips have thermal design power (TDP) ratings of 60, 80, or 95 watts.
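The processor options for the x3550 M2 are easier to scan as a table. The sketch below encodes them as data. Clock speeds and cache sizes come from the article (the 2 GHz part being the E5504, as IBM's iDataplex list confirms); the per-model TDP values are taken from Intel's published specifications rather than the article, and the class and variable names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Xeon5500:
    model: str
    cores: int
    ghz: float
    l3_mb: int
    tdp_w: int


# Clocks and cache sizes as given for the x3550 M2; TDPs from Intel's spec sheets.
X3550_M2_CPUS = [
    Xeon5500("E5502", 2, 1.86, 4, 80),
    Xeon5500("E5504", 4, 2.00, 4, 80),
    Xeon5500("E5506", 4, 2.13, 4, 80),
    Xeon5500("E5520", 4, 2.26, 8, 80),
    Xeon5500("E5530", 4, 2.40, 8, 80),
    Xeon5500("E5540", 4, 2.53, 8, 80),
    Xeon5500("L5520", 4, 2.26, 8, 60),
    Xeon5500("X5550", 4, 2.66, 8, 95),
    Xeon5500("X5570", 4, 2.93, 8, 95),
]

# Quad-core parts that get only 4 MB of L3 cache
small_cache = [c.model for c in X3550_M2_CPUS if c.cores == 4 and c.l3_mb == 4]
# The low-voltage part is the only one in the 60-watt bucket
low_power = [c.model for c in X3550_M2_CPUS if c.tdp_w == 60]
print(small_cache, low_power)
```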

The x3550 M2 comes with 2 GB of memory standard, and DDR3 DIMMs of 1 GB, 2 GB, 4 GB, or 8 GB are supported. Memory runs at either 1.33 GHz or 1.07 GHz. The mobo has eight memory slots per Nehalem processor, for a total of 16 DIMMs and a maximum of 128 GB. But the practical economic maximum (since 8 GB DDR3 memory is still kinda pricey) is probably 64 GB. To help bolster the up-time on this x3550 Nehalem system, IBM offers chipkill scrubbing, and 4 of the 16 DIMM slots can also be used as mirrors of the other DIMMs to enhance the reliability of the system.
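The memory ceilings quoted throughout this piece all come from the same multiplication: slots times DIMM size, assuming every slot is filled with DIMMs of one size. A minimal sketch (the function and constant names are hypothetical):

```python
def max_memory_gb(dimm_slots: int, dimm_gb: int) -> int:
    """Capacity with every slot filled with DIMMs of a single size."""
    return dimm_slots * dimm_gb


X3550_M2_SLOTS = 16  # eight slots per socket, two sockets

print(max_memory_gb(X3550_M2_SLOTS, 8))  # theoretical ceiling with 8 GB DIMMs
print(max_memory_gb(X3550_M2_SLOTS, 4))  # the "practical economic" maximum
```

The same arithmetic gives the HS22 blade's 48 GB (12 x 4 GB) and 96 GB (12 x 8 GB) figures, and the iDataplex node's 64 GB and 128 GB.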

The x3550 has six 2.5-inch drive bays and a single 5.25-inch bay that is occupied by a CD-RW/DVD combo drive. The server supports SAS or SATA drives - the usual 10K and 15K RPM suspects in both form factors - for a maximum of 1.8 TB using the 300 GB SATA drives. The motherboard has an integrated ServeRAID disk controller, plus two Gigabit Ethernet ports standard (with room for two more on a daughter card), and two PCI-Express x16 slots (one can be converted to a legacy PCI-X slot if you need to). It comes with a 675-watt power supply (you can go redundant if you want) and six fans.

The System x3650 M2 is basically the same Nehalem server, but it comes in a 2U chassis instead of a 1U box, and therefore has room for a dozen 2.5-inch disk drives instead of six. In addition to the 2.5-inch SAS and SATA drives, IBM is supporting a 32 GB or 50 GB SSD in the same 2.5-inch form factor in this machine. Presumably, this is the same SSD unit that is optional in the System x3550 M2. Customers can also opt for configurations with four PCI-Express x8 slots instead of the two x16 slots in the x3550 M2.

The System x3550 M2 and x3650 M2 machines are certified to run Windows 2000 Server and Windows Server 2003 R2 (but not yet Windows Server 2008); Red Hat Enterprise Linux 4 and 5; SUSE Linux Enterprise Server 9 and 10 as well as the related Open Enterprise Server with its embedded NetWare 6.5; and SCO UnixWare 7.1.4 and OpenServer 6. VMware's ESX Server 2.5 and 3.0 hypervisors are also certified on the boxes, but oddly enough, not ESX Server 3.5, the most current release.

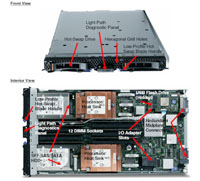

The new HS22 blade server slaps into any of IBM's five existing BladeCenter chassis variants. All of the same Nehalem EP processors are supported on this two-socket blade server except the very fastest one, the quad-core X5570 running at 2.93 GHz, which runs too hot for the blade chassis to handle. The blade, which is also based on Intel's 5520 chipset, supports DDR3 memory at 800 MHz, 1.07 GHz, or 1.33 GHz, with the speed depending on the processor and the memory configuration. (Larger memory configurations require slower memory, so shop carefully and plan ahead.)
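IBM doesn't spell out its speed rules here. As a rough sketch, assuming Intel's general guidance for Xeon 5500 processors with registered DDR3 (each processor model has a maximum memory speed, and the bus slows as more DIMMs share a channel: 1,333 MT/s at one DIMM per channel, 1,066 at two, 800 at three), the effective speed is simply the lower of the two limits. The names are hypothetical, and IBM's actual HS22 configuration tables may differ.

```python
# Assumption: Intel's general Xeon 5500 guidance for registered DDR3 DIMMs,
# not IBM's published HS22 rules. Speeds in MT/s (the article's "1.33 GHz" etc.).
CPU_MAX_MEMORY_SPEED = {
    "E5502": 800, "E5504": 800, "E5506": 800,
    "E5520": 1066, "E5530": 1066, "E5540": 1066, "L5520": 1066,
    "X5550": 1333,
}
# More DIMMs on a channel means more electrical load, so the bus must slow down.
SPEED_BY_DIMMS_PER_CHANNEL = {1: 1333, 2: 1066, 3: 800}


def memory_speed(cpu_model: str, dimms_per_channel: int) -> int:
    """Effective memory speed: the lower of the CPU's limit and the loading limit."""
    return min(CPU_MAX_MEMORY_SPEED[cpu_model],
               SPEED_BY_DIMMS_PER_CHANNEL[dimms_per_channel])


print(memory_speed("X5550", 1))  # one DIMM per channel: full speed
print(memory_speed("X5550", 2))  # all 12 HS22 slots filled (2 sockets x 3 channels x 2)
print(memory_speed("E5504", 1))  # this chip tops out at DDR3-800 regardless
```

This is why the bigger memory configurations cost you bandwidth: filling both slots on every channel caps even the fastest chip at the middle speed.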

The HS22 blade has chipkill protection, but does not support memory mirroring like the x3550 M2 and x3650 M2 rack servers above. The blade has a dozen memory slots, and right now, only 1 GB, 2 GB, and 4 GB DDR3 DIMMs are supported (8 GB DIMMs will be coming eventually, says Big Blue). Depending on the configuration, a base HS22 comes with 2 GB or 4 GB of memory, expandable to a maximum of 96 GB using those 8 GB DIMMs. Of course, these fatter DIMMs are not yet available, so the practical top-end memory capacity on the HS22 is really 48 GB.

This is somewhat problematic, given that the blade and server virtualization mix requires lots and lots of memory. This is why Cisco Systems is expected to support at least 384 GB of main memory on the two-socket Nehalem blade servers in its "California" system, which was announced last week and is due to ship in June or July.

California competition

IBM had better not only get the 8 GB DIMMs out the door, but also get 16 GB DIMMs out there if it wants the HS22 to compete with California. With 16 GB DIMMs in all 12 slots, the HS22 would reach 192 GB of main memory, and IBM could make a compelling case. Of course, Cisco is expected to use cheaper, lower-capacity DDR3 DIMMs and just put more of them on the blade, relying on an ASIC of its own design to trick the Intel chipset into thinking it is addressing fewer DIMMs than are actually installed. Even as IBM and other blade server makers adopt denser DDR3 main memory, Cisco's memory extension ASIC turns memory economics in Cisco's favor - provided that ASIC works well and doesn't cost much to make.
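The argument above runs the memory arithmetic backwards: given a capacity target and a fixed number of slots, what is the smallest DIMM that gets you there? A minimal sketch, assuming every slot holds the same size of DIMM and that DIMM sizes double from 1 GB up to 16 GB; it deliberately does not model Cisco's approach of putting more, cheaper DIMMs behind an ASIC. Names are hypothetical.

```python
import math
from typing import Optional

# 1, 2 and 4 GB DIMMs ship today; 8 GB is promised; 16 GB is what IBM needs next.
DIMM_SIZES_GB = (1, 2, 4, 8, 16)


def smallest_dimm_for(target_gb: int, slots: int) -> Optional[int]:
    """Smallest DIMM size that reaches target_gb with every slot filled, or None."""
    per_slot = math.ceil(target_gb / slots)
    for size in DIMM_SIZES_GB:
        if size >= per_slot:
            return size
    return None  # no DIMM on the list is big enough


HS22_SLOTS = 12
print(smallest_dimm_for(192, HS22_SLOTS))  # 16
print(smallest_dimm_for(384, HS22_SLOTS))  # None: it would take 32 GB DIMMs
```

Matching California's 384 GB in a dozen slots would take 32 GB DIMMs, which is why the slot count matters as much as DIMM density in this fight.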

Speaking of virtualization, the HS22 blade server from IBM has an internal flash drive port, which can be outfitted with a 2 GB flash drive configured with VMware's ESXi skinny hypervisor. The blade has two hot-swap 2.5-inch disk bays, which can be populated with SAS drives (73 GB, 147 GB, and 300 GB at 10K RPM and 73 GB and 147 GB at 15K RPM), with SATA drives (300 GB at 10K RPM), or an SSD (weighing in at 31.4 GB).

The HS22 blade has two PCI-Express x8 slots, and has a two-port Gigabit Ethernet link standard, with up to 12 ports per blade possible with expansion cards. The HS22 also has expansion cards to deliver 10 Gigabit Ethernet and quad-data rate (40 Gb/sec) InfiniBand links. iSCSI, 8 Gb/sec Fibre Channel, and 6 Gb/sec SAS connectivity are available to reach out to disk arrays, too.

In terms of operating systems, the HS22 blade server supports Windows Server 2003 R1 and R2 as well as Windows Server 2008; Red Hat Enterprise Linux 4.7 and 5.3; SUSE Linux Enterprise Server 10; and Solaris 10 Unix. Only VMware's current ESX Server 3.5 and ESXi 3.5 hypervisors are supported on this blade.
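The split in hypervisor support between the new rack servers and the blade is easy to lose track of. A small lookup table, sketched here with illustrative names, records the VMware certifications exactly as the article lists them.

```python
# Hypervisor certifications as listed in the article (March 2009).
HYPERVISOR_SUPPORT = {
    "x3550 M2": {"ESX Server 2.5", "ESX Server 3.0"},
    "x3650 M2": {"ESX Server 2.5", "ESX Server 3.0"},
    "HS22": {"ESX Server 3.5", "ESXi 3.5"},
}


def servers_certified_for(hypervisor: str) -> list:
    """Names of the new IBM servers certified for the given hypervisor release."""
    return sorted(name for name, certs in HYPERVISOR_SUPPORT.items()
                  if hypervisor in certs)


print(servers_certified_for("ESX Server 3.5"))
print(servers_certified_for("ESX Server 3.0"))
```

Querying it makes the oddity plain: for now, only the HS22 blade is certified for the current ESX Server 3.5, while the two rack servers are certified only for the older releases.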

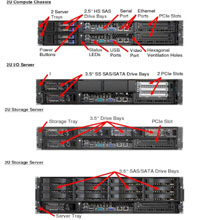

IBM is deploying the Nehalem EP processors in a variety of its iDataplex custom-designed servers for hyperscale data centers. With this launch, the Nehalem chips are being deployed in four different configurations: a compute node, an I/O node, and two storage nodes.

Like the other IBM machines, the iDataplex nodes are based on Intel's 5520 chipset. IBM is supporting the 2.26 GHz L5520 (with a 60 watt TDP), the 2 GHz E5504, 2.26 GHz E5520, and 2.53 GHz E5540 (80 watt TDP), and the 2.66 GHz X5550, 2.80 GHz X5560, and 2.93 GHz X5570 (all 95 watt TDP) Nehalem chips in these systems. The iDataplex motherboard has 16 DDR3 DIMM slots, which currently support 1 GB to 4 GB DIMMs, for a maximum of 64 GB of main memory (at the same 800 MHz, 1.07 GHz, and 1.33 GHz speeds as in the HS22 blade server). When 8 GB DIMMs are available in the third quarter, iDataplex customers will be able to plunk 128 GB of memory into these nodes.

The 2U iDataplex compute node puts two of these Nehalem servers stacked atop each other into a single chassis, each with two 2.5-inch SAS drives, two Gigabit Ethernet ports, and a single PCI-Express 2.0 x16 slot. The I/O server is a single server inside the same chassis, but it has two 3.5-inch SAS or SATA drives plus three PCI-Express 2.0 x16 slots.

The 2U storage server variant of the iDataplex node has five 3.5-inch drives and a single PCI-Express 2.0 x16 slot. For customers who need an even fatter storage node, IBM takes the bottom half of the 2U storage server, stretches the box to 3U in height, and adds another dozen 3.5-inch hot-swap SAS or SATA drives (for a total of 13), keeping that single PCI-Express 2.0 x16 peripheral slot. Any of these iDataplex nodes can support the 31.4 GB SSD sold with the HS22 blade server.
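The four iDataplex node types trade servers for drives in different proportions, which is easiest to compare per rack unit. The sketch below records the configurations as the article describes them; treating each storage node as holding a single server is an assumption, since the article doesn't say so outright, and the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IDataplexNode:
    name: str
    height_u: int
    servers: int
    drives: int      # total drive bays in the chassis
    pcie_slots: int  # PCI-Express 2.0 x16 slots per server


# Configurations as described in the article; one server per storage node is assumed.
NODES = [
    IDataplexNode("compute", 2, 2, 4, 1),      # two servers, two 2.5-inch SAS drives each
    IDataplexNode("I/O", 2, 1, 2, 3),          # two 3.5-inch drives
    IDataplexNode("storage 2U", 2, 1, 5, 1),   # five 3.5-inch drives
    IDataplexNode("storage 3U", 3, 1, 13, 1),  # 13 3.5-inch drives
]

for node in NODES:
    print(f"{node.name:<11} {node.servers / node.height_u:.2f} servers/U, "
          f"{node.drives / node.height_u:.2f} drives/U")
```

The compute node packs one server per U, while the 3U storage node gives up compute density to reach more than four drives per U.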

Yost says that the new Nehalem machines will be priced at about the same level as their predecessors in the IBM lineup that use quad-core Harpertown chips. In some cases, because the configurations are richer, prices will even rise. (Well, at list price, anyway. We'll see about street prices.)

The HS22 has been shipping to selected customers already, and will be generally available on March 31. The new Nehalem rack servers have also been going out to selected customers since March began, but will not be generally available until the end of April. The iDataplex nodes have seen some early testing customers, too, but will not be available until May. Specific pricing information will not be released on this gear until it ships. ®