Original URL: https://www.theregister.com/2008/10/21/storage_suppliers_adopr_ssds/

This SSD thing is really catching on

Everyone but 3PAR has an SSD adoption strategy

Posted in Channel, 21st October 2008 10:33 GMT

SNW round-up: SNW showed that three flash storage plays are emerging - flash in the shelf, flash in the controller and flash in the server. Of the top 12 or so storage vendors, only 3PAR does not have a flash strategy.

EMC started the flash storage ball rolling by announcing its Enterprise Flash Drives for Symmetrix in January this year, followed by CLARiiON flash in August. Then IBM ran a compelling flash demo with a million IOPS coming out of a flash-based SVC product in late August. Compellent became the second storage vendor to commit to flash, days before SNW, promising delivery by the middle of next year.

What we found out at SNW was that virtually all the major storage suppliers now have active flash product strategies, all assuming flash cost and write cycle limitation problems will be dealt with. Here are the new flash kids on the block:

HDS's storage array shelf flash play: Hu Yoshida, HDS' chief technology officer, said that solid state storage for the USPV will be available by the end of the year.

HP's end to end SSD play: Jieming Zhu, a distinguished technologist in the StorageWorks Chief Technologist Office, said, "SSD is an end-to-end system play." HP will expand blade server SSD use as "the server is arguably one of the best-placed areas to take advantage of solid state".

There is an SSD array role as a tier 0 for enterprise class transaction-type data: "The IOPS/GB of SSD is very attractive for Oracle." An SSD'ised array has much less need of massive I/O pipes to hard drives. This suggests there might be a flash version of the HP-built Exadata server used in Oracle's Database Machine.

Zhu confirmed HP is evaluating the Fusion-io SSD technology and added that SSD is also a consumer play, citing HP's PCs, notebooks, printers and cameras.

LSI: Steve Gardner, LSI Engenio Storage Group's product marketing director, said the just-announced 7900 array could get FC-interface SSDs in the future, possibly next year. The flash would be for high-activity, random read data and it would be a tier 0. The drives would be in a 3.5-inch form factor.

NetApp's flash in the PAM: Jay Kidd, NetApp's chief technology officer, described how NetApp intends to use flash in the controller (server) that runs FAS and V series arrays: "From a simplicity of deployment perspective you may want SSD in an array as tier 0 but you then have to plan what goes on it. Some of its capacity will be unallocated and be a very expensive wasted resource." He feels that placing the SSD as a cache in the controller will avoid that burden, but still accelerate I/O of the most active data.

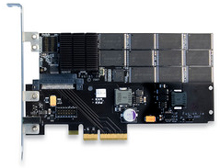

A NetApp controller presents a PCIe interface to its outside world, with an internal switched 4Gbit/s Fibre Channel infrastructure linking drive trays to the PCIe bus via HBAs (host bus adapters). NetApp is using the Fusion-io (card pictured right) PCIe connection method but without using Fusion-io's products.

It already has its PAM (Performance Acceleration Module) card to provide a DRAM cache to the controller. That card will be populated with NAND flash chips in the same way as it's populated with DRAM chips today, and NetApp will provide its own controller technology for this on the card, an ASIC. It already has one for the DRAM PAM card, an FPGA chip, and conceptually it's simple enough to tune that to support NAND chips.

As an added wrinkle, the PAM card is a read cache, not a write cache. With a write cache, when a data item exists as several copies, each copy has to be held in cache so that, if one copy is written to, the data is kept coherent. With a read cache you only need to cache one copy of data that has duplicate copies in the array.

Naturally enough, Kidd calls this deduplication. He says it's great for virtual desktop images (VDIs) which have lots of zeroes in them and will quickly fill a write cache - not so a read cache.
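To make the read-cache point concrete, here is a minimal sketch of a deduplicating read cache, assuming blocks are identified by a SHA-256 content fingerprint and evicted in LRU order. It is not NetApp's PAM design; the class name `DedupReadCache` and its methods are hypothetical. Many logical block addresses holding identical data, such as the zero-filled blocks in desktop images, all point at one cached copy.

```python
import hashlib
from collections import OrderedDict


class DedupReadCache:
    """Hypothetical LRU read cache that stores each unique block only once.

    Logical block addresses (LBAs) map to a content fingerprint; the cache
    holds one copy of the data per fingerprint, so LBAs holding identical
    blocks (e.g. zero-filled blocks) share a single cache entry.
    """

    def __init__(self, capacity_blocks: int):
        self.capacity = capacity_blocks
        self.data = OrderedDict()  # fingerprint -> block bytes, oldest first
        self.index = {}            # LBA -> fingerprint

    def insert(self, lba: int, block: bytes) -> None:
        """Populate the cache after a read miss has fetched `block` from disk."""
        fp = hashlib.sha256(block).hexdigest()
        self.index[lba] = fp
        if fp in self.data:
            self.data.move_to_end(fp)  # duplicate content: just refresh recency
            return
        self.data[fp] = block
        if len(self.data) > self.capacity:
            old_fp, _ = self.data.popitem(last=False)  # evict least recently used
            # Drop every LBA mapping that pointed at the evicted block
            # (a linear scan, acceptable for a sketch).
            for key in [k for k, v in self.index.items() if v == old_fp]:
                del self.index[key]

    def read(self, lba: int):
        """Return cached data for `lba`, or None on a miss."""
        fp = self.index.get(lba)
        if fp is None or fp not in self.data:
            return None
        self.data.move_to_end(fp)
        return self.data[fp]
```

Inserting the same 4KB zero block under 1,000 different addresses leaves exactly one entry in `cache.data`, which is why a read cache is not filled up by such images the way a coherent write cache, holding every copy, would be.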

In the future the controller-to-shelf connection will change from Fibre Channel to SAS. (The number of arrays using SAS backplanes is growing. We know now that Data Domain uses that technology, as do HDS with its new AMS, EMC with certain AX models, and HP with its ExDS9100. IBM will do so with its coming QuickSilvered SVC, and now here is NetApp planning to use it. Kidd says that there won't be 8Gbit/s Fibre Channel internal array connections.)

Pillar Data: Glen Shok, Pillar's product director, said Pillar was (like Sun - see below) looking at flash use in multiple places. One is the tier 0 storage array use case. A second is to use flash as an internal drive in the Slammer controller - data mover and manager - unit in an Axiom array, where it would replace battery-backed cache. (This is reminiscent of NetApp's PAM flash use.)

The third use case he mentioned is flash configured as a massive write cache - 256GB or so - which would be useful for some applications pouring data into the array. We might expect Pillar flash products by the end of 2009 or early 2010.

Shok doesn't understand how Compellent can apparently be quite a way ahead of Pillar in its flash deployment, with mid-2009 being Compellent's first product ship date. He points out that Pillar and Compellent use pretty much the same RAID controller components and have, we reckon, roughly equal engineering resources and talent.

Sun's end to end SSD play: Fresh details of Sun's SSD intentions came from Jason Schaffer, its senior director for storage and networking. Sun is going to use read-optimised and write-optimised SSDs for different applications. He sees three potential flash SSD locations in a product range.

One is quasi-disk drives for storage array use. He sees both read-focussed and write-focussed SSDs here with NAND chips managed by a controller which talks SAS to its outside world. Sun and Pillar are the first vendors to make distinctions between read and write cache uses. Sun's flash drives will come from Intel - think X25-E (right), Marvell and/or Samsung. Schaffer mentioned the Fishworks (fully-integrated software - it just works) project and ZFS here.

This flash forms, along with bulk SATA drives, a hybrid storage pool for ZFS, with data automatically going to the right place.
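As a rough illustration of "data automatically going to the right place", here is a minimal sketch of frequency-based tiering between a small flash tier and bulk SATA. This is a generic stand-in policy, not how ZFS actually builds its hybrid storage pool; the function `place_blocks` and its inputs are hypothetical.

```python
from collections import Counter


def place_blocks(accesses, flash_capacity_blocks):
    """Hypothetical tiering policy: hottest blocks go to flash, the rest to SATA.

    accesses: iterable of block IDs, one entry per observed I/O.
    Returns (flash_set, sata_set). Blocks never accessed appear in neither
    set and would simply stay on SATA.
    """
    counts = Counter(accesses)
    # most_common() lists blocks by access count, highest first.
    ranked = [block for block, _ in counts.most_common()]
    flash = set(ranked[:flash_capacity_blocks])
    sata = set(ranked[flash_capacity_blocks:])
    return flash, sata


# Example: two heavily used blocks fit in a two-block flash tier.
flash, sata = place_blocks(["a"] * 50 + ["b"] * 30 + ["c"] * 2 + ["d"], 2)
```

A real system would also age the counts over time and weigh reads against writes, which is where Sun's split between read-optimised and write-optimised SSDs comes in.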

Secondly, Sun could produce a JBOD chassis with flash drives in it instead of hard drives - JBOF? JBOSSD? Thirdly Sun could produce an HBA form factor flash card, conceptually like an ioDrive from Fusion-io.

Schaffer said: "We'll see flash in every one of our systems." It's a total flash makeover for Sun. Flashed Thumper? Oh yes.

Xiotech: Chief technology officer Steve Sicola (left) says the next-generation Emprise, the product that uses sealed canisters of drives that don't need servicing for five years, will use SSDs. The ISE (Integrated Storage Element) technology already tiers drives with 15K 3.5-inch FC, 15K 2.5-inch FC, and 7.2K FC 1TB drives. (As far as we know, no other vendor uses, or can even get access to, 15K 2.5-inch FC drives and 1TB FC drives.) Again, adding a flash tier is relatively easy, and the presumption is that Xiotech will go the STEC route with Fibre Channel interface SSDs.

Xyratex: LSI said its new 7900 storage array (used as IBM's DS5000) can have flash shelves added to it in future.

The EMC flash way: Chuck Hollis (right), EMC's blogger extraordinaire and VP Technical Alliances, says there is an Achilles heel in the server flash use argument. If you flash-enable servers to accelerate application I/O, then what happens if the server fails? Obviously you need two servers with some kind of failover, but that then means you need to flash-enable both servers and have two copies of everything in flash. That might be OK for four to six terabytes, but it becomes impractical if you have 400 to 600TB of data.
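Hollis's arithmetic can be written out directly. This minimal sketch assumes each server in a failover group must hold its own full flash copy, while a networked array holds one shared copy; it ignores RAID or parity overhead on both sides, and the function name is hypothetical.

```python
def flash_capacity_tb(data_tb: float, copies: int) -> float:
    """Flash needed to hold `data_tb` of active data `copies` times over.

    Hypothetical model: server-side flash with failover needs one full copy
    per server; a shared storage array needs a single copy.
    RAID/parity overhead is deliberately ignored.
    """
    return data_tb * copies


for data_tb in (4, 6, 400, 600):
    server_side = flash_capacity_tb(data_tb, copies=2)  # two failover servers
    array_side = flash_capacity_tb(data_tb, copies=1)   # one networked copy
    print(f"{data_tb}TB of data: {server_side}TB server flash vs {array_side}TB array flash")
```

Doubling 4TB to 8TB of flash may be tolerable; doubling 600TB to 1,200TB is the point at which, in Hollis's view, you should network the storage instead.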

The best way to fix that problem is to network the storage and revert to one copy: "That's called, by the way, a storage array... Servers don't do high availability (HA) access to storage. If you want to make it HA then you have to have a storage array."

Things like replication are easier with a storage array. Storage arrays are popular for a reason, and flash in a storage array is just another medium. The fundamentals, like how to get server high availability, still apply. He says there will be a use case for server flash, as a cheaper-than-DRAM cache for example, like Fusion-io: "But it's not shared storage."

Hollis mentioned that there's talk of Iomega bringing out a multi-level cell flash storage box in a year or two.

Storage flash agreement

There is a coherent enterprise storage array vision being presented here. All the vendors agreed that in a few years' time there will only be two kinds of array shelves: active data on flash and bulk data on SATA drives. Fibre Channel and SAS performance-oriented disk drives will wither away, squeezed out. A single drive array controller complex will talk 6Gbit/s SAS to flash and 6Gbit/s SATA to hard disk drives, and no doubt 12Gbit/s SAS/SATA will follow.

It's curious that as physical Fibre Channel SAN fabrics are having FCoE touted as their eventual future, internal array Fibre Channel use is having its end-of-life rites prepared too. ®