Original URL: https://www.theregister.com/2008/07/09/review_geforce_gtx_280/

Nvidia GeForce GTX 280

Over-priced, over-specced and over here

Posted in Channel, 9th July 2008 11:02 GMT

Review Nvidia has spent the past year waiting for AMD to give it a fight in the graphics sector. The G92 chip used in GeForce 8800 GT was little more than a die-shrink of the G80 that debuted in the original GeForce 8800 GTS and GTX.

The GeForce 9800 GTX used the same G92 chip and supported DirectX 10 with Shader Model 4.0 - just like the GeForce 8000 series - so it was hard to see why Nvidia felt the need to move from 8000 to 9000 numbering. More to the point, Nvidia decided to ignore Shader Model 4.1 and DirectX 10.1, which is part of Windows Vista SP1, so it really milked the G80/G92 architecture for all it was worth.

Zotac's GTX 280 AMP!: factory overclocked

Well, the time has come for a change: the launch of the new GT200 chip, which is used in this Zotac GeForce GTX 280 AMP! Edition as well as cheaper GeForce GTX 260 models. The GT200 is an awesome piece of silicon that packs in 240 Stream Processors - Nvidia's unified shaders - compared to the 128 in the G92.

That change has raised the transistor count from 754m to 1.4bn and, as Nvidia has stuck with a 65nm fabrication process, the size of the GT200 has increased to an enormous 24mm x 24mm (576mm²), which is four times the size of a 65nm Intel Core 2 die.

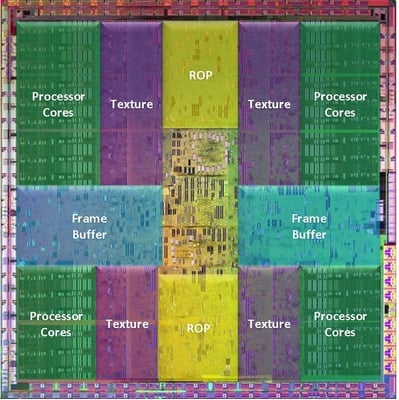

Internally, the GT200 is divided up into ten clusters of 24 shaders and eight clusters of four ROPs, with the core running at 602MHz, the Stream Processors at 1296MHz and the 1GB of GDDR3 memory at a true speed of 1107MHz to give an effective speed of 2214MHz.
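The headline figures fall straight out of that layout. Here's a quick sketch of the sums, with variable names that are purely illustrative. The doubling from true to effective memory speed reflects GDDR3 moving data twice per clock cycle.

```python
# Reference GTX 280 figures as quoted in the review.
SHADER_CLUSTERS = 10
SHADERS_PER_CLUSTER = 24
ROP_CLUSTERS = 8
ROPS_PER_CLUSTER = 4
MEMORY_CLOCK_MHZ = 1107   # "true" memory clock
TRANSFERS_PER_CLOCK = 2   # GDDR3 is double data rate

stream_processors = SHADER_CLUSTERS * SHADERS_PER_CLUSTER
rops = ROP_CLUSTERS * ROPS_PER_CLUSTER
effective_memory_mhz = MEMORY_CLOCK_MHZ * TRANSFERS_PER_CLOCK

print(stream_processors, rops, effective_memory_mhz)  # 240 32 2214
```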

The GTX 280 AMP! Edition is a factory-overclocked card with the core speed raised to a nice round 700MHz, the shaders to 1400MHz and the effective memory speed to 2300MHz. By contrast, the junior GTX 260 has eight clusters of shaders and seven ROP clusters, and has all the clock speeds reduced slightly. As if that wasn’t enough, the 512-bit memory controller in the GTX 280 has been reduced to 448 bits for the GTX 260.
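To put the factory overclock in perspective, here's a rough sketch that works out the percentage gains and the peak memory bandwidth implied by the 512-bit bus. The function names are our own invention, and the bandwidth sum assumes the usual rule of bus width in bytes multiplied by effective memory speed. The review doesn't quote the GTX 260's memory clock, so that card is left out.

```python
def overclock_percent(stock_mhz, overclocked_mhz):
    """Percentage increase of an overclocked speed over the stock speed."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


def memory_bandwidth_gb_s(bus_width_bits, effective_mhz):
    """Peak memory bandwidth in GB/s, taking 1GB as 10**9 bytes."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9


# (stock, AMP! Edition) speeds in MHz, as quoted in the review.
clocks = {"core": (602, 700), "shader": (1296, 1400), "memory": (2214, 2300)}
gains = {
    name: round(overclock_percent(stock, amp), 1)
    for name, (stock, amp) in clocks.items()
}

stock_bandwidth = round(memory_bandwidth_gb_s(512, 2214), 1)
amp_bandwidth = round(memory_bandwidth_gb_s(512, 2300), 1)

print(gains)                           # {'core': 16.3, 'shader': 8.0, 'memory': 3.9}
print(stock_bandwidth, amp_bandwidth)  # 141.7 147.2
```

On these numbers the core gets the lion's share of the overclock, while the memory barely moves.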

Nvidia's GT200 design

The power requirement of the GTX 260 is quite steep at 182W, but it can get away with two six-pin PCI Express power connectors. The GTX 280 is in a different league: it draws up to 236W and sports both a six-pin connector and an eight-pin one, just like the GeForce 9800 GX2.

AMD went down a similar route with the Radeon HD 2900 XT, but you could choose to plug in two six-pin connectors from your power supply if you didn’t want to overclock. With the big GeForce models you have to connect an eight-pin block or the system won’t start.

Zotac and the other Nvidia partners supply an adaptor in the package that connects to two six-pin plugs to give you the necessary eight pins, so that’s a total of three six-pin connectors you require to get this graphics card running. In all probability, you’ll be looking at a significant outlay for a new power supply on top of the startling amount of money most vendors want for the graphics card. Zotac is a new and relatively obscure brand in the UK, and pricing for the GTX 280 AMP!, regular GTX 280, GTX 260 AMP! and regular GTX 260 seems a touch steep.
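The connector count makes more sense once you tot up the power each one is allowed to deliver. The sketch below assumes the standard PCI Express limits of 75W from the slot, 75W from a six-pin plug and 150W from an eight-pin plug. The names are illustrative and the figures are ceilings, not measurements.

```python
SLOT_W = 75                               # PCI Express x16 slot limit
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}  # auxiliary connector limits


def board_power_budget(connectors):
    """Maximum power in Watts available from the slot plus the given plugs."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


# The GTX 280 takes one six-pin and one eight-pin plug.
gtx_280_budget = board_power_budget(["6-pin", "8-pin"])

# The bundled adaptor feeds the eight-pin socket from two six-pin plugs.
adaptor_input_w = 2 * CONNECTOR_W["6-pin"]

print(gtx_280_budget)   # 300
print(adaptor_input_w)  # 150
```

Two six-pin plugs at 75W apiece match the 150W rating of the eight-pin socket, which is why the adaptor needs both.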

The Zotac cards are straightforward reference designs so it makes sense to check out comparable models to get representative pricing, which starts at £215 for a GTX 260 and climbs to £320 for an overclocked GTX 260. The gap widens in GTX 280 land, where you can find, say, an Asus GTX 280 for around £330 and an overclocked version for £375. It’s absurd to call a £330 graphics card ‘cheap’ but it’s hard to see why you’d consider an overclocked GTX 260 when Asus charges just a £10 premium for the GTX 280.

In appearance, the GTX 260 and 280 are very similar, and are big double-slot cards out of the same mould as the 9800 GX2. There have been subtle changes to the construction to assist cooling and to reduce the transfer of heat from the graphics card to your motherboard and chipset but the family history of the GTX 280 is quite apparent.

Double slotter

It’s worth spelling out that the GT200 chip doesn't support DirectX 10.1 even though the differences between DX 10 and 10.1 are relatively minor. If you’re spending £400 on a graphics card you might be none too impressed by the idea of missing out on a gaming feature as you are unlikely to buy another graphics card in the near future.

When we tested the Zotac GTX 280 AMP!, we wanted to be sure the system didn’t restrict this beast of a graphics card so we used an Intel Skulltrail motherboard with dual quad-core processors overclocked to 4GHz. We also tested a selection of graphics cards working back through the GeForce 9000, 8000, 7000 and 6000 families to put things in perspective.

We used Nvidia's Forceware 177.35 drivers for the GTX 280 - more about the drivers later.

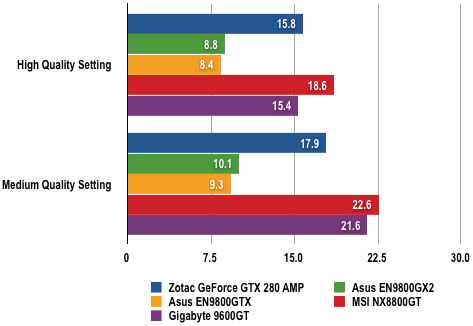

Crysis Test Results: average frame rate (longer bars are better)

You’ll note that our games tests are rather brief and consist of two runs in Crysis at 1280 x 1024 with the quality settings set, respectively, on Medium and High. The run uses a saved game and is actually game play with a running gunfight as you make your way up a jungle path in bright sunlight. The average frame rate is taken with FRAPS.
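FRAPS works out the average for you, but it's worth being clear about what the figure means: frames rendered divided by time elapsed. Here's a minimal sketch, assuming you have a list of cumulative frame timestamps in milliseconds. The function name and the sample data are made up for illustration and are not taken from our test runs.

```python
def average_fps(frame_times_ms):
    """Average frame rate from cumulative frame timestamps in milliseconds."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frames")
    elapsed_ms = frame_times_ms[-1] - frame_times_ms[0]
    if elapsed_ms <= 0:
        raise ValueError("timestamps must increase")
    # N timestamps span N - 1 frame intervals.
    return (len(frame_times_ms) - 1) * 1000 / elapsed_ms


# Invented timestamps: four frame intervals over 100ms.
sample = [0.0, 20.0, 45.0, 70.0, 100.0]
print(average_fps(sample))  # 40.0
```

An average hides the dips, of course, so a run can post a respectable figure and still feel jerky in the heat of a gunfight.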

The GeForce 6600 GT refused to run Crysis at all, the 6800 GT was feeble and the 7300 GT was poor. Moving up to the 9600 GT made things better, although game play was too slow to be enjoyable, but the 8800 GT fixed that and delivered decent results. Although the 8800 GT sounds as though it's older than the 9600 GT, it's the same architecture with nearly double the number of Stream Processors (112 versus 64). Curiously, both the 9800 GTX and the dual-chip 9800 GX2 were hopeless in Crysis on these settings although they were superb in 3DMark Vantage, 3DMark06 and PCMark Vantage.

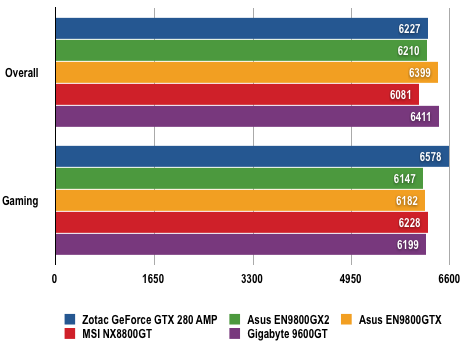

PCMark Vantage Test Results (longer bars are better)

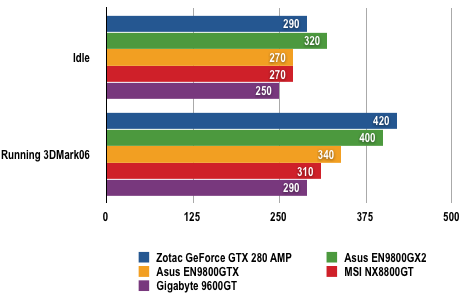

The GTX 280 performed superbly across the range of benchmarks and has an impressively low power draw at idle as the 700MHz core clocks down to 300MHz and the memory seems to run at a mere 100MHz.

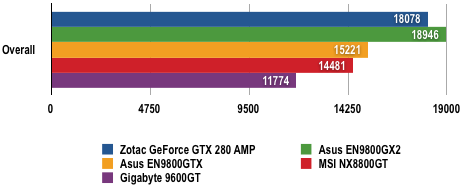

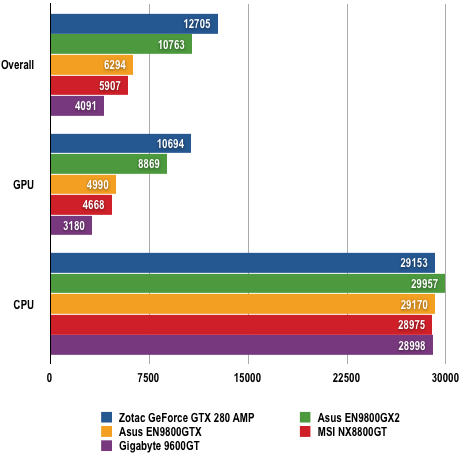

3DMark06 Test Results (longer bars are better)

On the face of it, the performance of GTX 280 in 3DMark Vantage is unequivocal as it stomps the other graphics cards into the dust. However, there's a dispute rumbling on this very subject. 3DMark Vantage tests your PC for its ability to handle physics calculations, which is great if you have an Ageia PhysX chip but as none of us do, a game's physics load is typically handled by the CPU, and that's one reason why 3DMark Vantage has a CPU test.

3DMark Vantage Test Results (longer bars are better)

In its Forceware 177.39 drivers, Nvidia updated the code to move the physics load from the CPU to the GPU where possible, as this helps overall game performance. However, this has raised question marks over its effect on benchmark scores. Driver 177.35, the previous release, has been approved by benchmark creator Futuremark. Forceware 177.39 has not.

As things stand, it's unclear whether Nvidia is taking a valid approach by treating the CPU and GPU as a pool of computing power. This surely gives Nvidia an advantage in 3DMark as it owns PhysX and has the ability to shift the physics workload wherever it desires, breaking down the established boundaries between CPU and GPU roles. It defends its position thus:

"Nvidia did not cheat or violate the FutureMark rules."

It continues: "The initial version of 3DMark Vantage shipped with a PhysX installer that only included support for CPU physics and the Ageia physics chip. The initial version didn't contain a GPU physics installer as nobody had that product. Once we brought physics to the GPU through our Ageia acquisition, we updated the PhysX installer to add support for GPU physics.

"The same thing applies to Unreal Tournament 3. All we’ve done is update the installer to add support for GPU physics.

"The installer will be available for everyone to download off the Nvidia website this week, so folks can play with it and enjoy the new GPU physics effects."

In response, AMD pointed us towards this quote from Oliver Baltuch, President of Futuremark, on the matter:

“We are adhering to the rules set out in our previously published documents (PDF). Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and whitepaper.”

As a result, AMD is confident that Nvidia doesn't have Futuremark’s approval for its GPU acceleration of PhysX.

Power Draw Test Results: power draw in Watts (W)

Of course, AMD will eventually offer physics-on-GPU code of its own, and Futuremark will have to revamp its tests to take into account the blurred line between CPU and GPU. For now, though, Nvidia can gain higher scores at the cost of ending apples-for-apples comparisons.

Unfortunately, we only had the Zotac for a short time before the business of driver 177.35 vs 177.39 kicked off, and we didn’t get the chance to compare the two back-to-back.

At the very least, Nvidia is sailing close to the wind with 3DMark Vantage, but outside the rarefied domain of benchmarks and back in the real world, the idea of handling physics on the GPU makes perfect sense.

We love the Zotac GTX 280 AMP! to bits but it's very hard to recommend the level of expenditure that buying one entails. For the price of a single GTX 280 you can buy a PlayStation 3, and if you’re thinking of SLI or Tri-SLI GTX 280 that would pay for a decent 50in HDTV.

Verdict

Nvidia’s latest and greatest graphics chip truly is superb, but it is also monstrously big, horribly power-hungry and ludicrously expensive. We’re waiting for GT200b to bring DirectX 10.1 and GDDR5 support to the party, and the sooner Nvidia rolls out 55nm fabrication the better.